lihongjie

committed on

Commit

·

8ceede9

1

Parent(s):

19a6cff

first commit

This view is limited to 50 files because it contains too many changes.

- .gitattributes +50 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/Qwen3-VL-2B-Instruct_vision.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/model.embed_tokens.weight.bfloat16.bin +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l0_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l10_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l11_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l12_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l13_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l14_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l15_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l16_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l17_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l18_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l19_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l1_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l20_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l21_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l22_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l23_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l24_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l25_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l26_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l27_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l2_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l3_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l4_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l5_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l6_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l7_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l8_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l9_together.axmodel +3 -0

- Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_post.axmodel +3 -0

- README.md +233 -3

- config.json +0 -0

- images/demo.jpg +3 -0

- images/demo1.jpg +3 -0

- images/recoAll_attractions_1.jpg +3 -0

- images/recoAll_attractions_2.jpg +3 -0

- images/recoAll_attractions_3.jpg +3 -0

- images/recoAll_attractions_4.jpg +3 -0

- images/ssd_car.jpg +3 -0

- images/ssd_horse.jpg +3 -0

- main_ax650 +3 -0

- main_axcl_aarch64 +3 -0

- main_axcl_x86 +3 -0

- post_config.json +14 -0

- qwen3-vl-tokenizer/README.md +192 -0

- qwen3-vl-tokenizer/chat_template.json +4 -0

- qwen3-vl-tokenizer/config.json +63 -0

- qwen3-vl-tokenizer/configuration.json +1 -0

.gitattributes

CHANGED

@@ -33,3 +33,53 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_post.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/Qwen3-VL-2B-Instruct_vision.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l13_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l1_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l20_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l27_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l2_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/model.embed_tokens.weight.bfloat16.bin filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l14_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l9_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l3_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l21_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l22_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l5_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l10_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l12_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l19_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l16_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l18_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l24_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l25_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l7_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l8_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l0_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l23_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l26_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l11_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l15_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l17_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l4_together.axmodel filter=lfs diff=lfs merge=lfs -text
+Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l6_together.axmodel filter=lfs diff=lfs merge=lfs -text
+video/frame_0000.jpg filter=lfs diff=lfs merge=lfs -text
+video/frame_0040.jpg filter=lfs diff=lfs merge=lfs -text
+video/frame_0056.jpg filter=lfs diff=lfs merge=lfs -text
+images/recoAll_attractions_4.jpg filter=lfs diff=lfs merge=lfs -text
+images/recoAll_attractions_2.jpg filter=lfs diff=lfs merge=lfs -text
+images/ssd_car.jpg filter=lfs diff=lfs merge=lfs -text
+video/frame_0024.jpg filter=lfs diff=lfs merge=lfs -text
+images/recoAll_attractions_1.jpg filter=lfs diff=lfs merge=lfs -text
+images/recoAll_attractions_3.jpg filter=lfs diff=lfs merge=lfs -text
+images/ssd_horse.jpg filter=lfs diff=lfs merge=lfs -text
+images/demo1.jpg filter=lfs diff=lfs merge=lfs -text
+video/frame_0008.jpg filter=lfs diff=lfs merge=lfs -text
+video/frame_0016.jpg filter=lfs diff=lfs merge=lfs -text
+video/frame_0032.jpg filter=lfs diff=lfs merge=lfs -text
+video/frame_0048.jpg filter=lfs diff=lfs merge=lfs -text
+images/demo.jpg filter=lfs diff=lfs merge=lfs -text
+main_axcl_x86 filter=lfs diff=lfs merge=lfs -text
+main_ax650 filter=lfs diff=lfs merge=lfs -text
+main_axcl_aarch64 filter=lfs diff=lfs merge=lfs -text

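The 50 added `.gitattributes` lines route every model shard, demo image, video frame and prebuilt binary through Git LFS. One quick way to confirm nothing was missed, for example that all 28 `qwen3_vl_text_p128_l*_together.axmodel` layers are covered, is to parse the file and test paths against its `filter=lfs` patterns. The Python sketch below does that. Its matching is an `fnmatch`-based approximation of Git's rules, not Git's own implementation, and the helper names are hypothetical; `MODEL_DIR` and `NUM_LAYERS` only restate values visible in this commit.

```python
import fnmatch

MODEL_DIR = "Qwen3-VL-2B-Instruct-AX650-c128_p1152"
NUM_LAYERS = 28  # l0 ... l27, matching "attr.axmodel_num:28" in the demo logs


def lfs_patterns(gitattributes_text):
    """Collect the patterns whose attribute list contains filter=lfs."""
    patterns = []
    for raw in gitattributes_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            patterns.append(pattern)
    return patterns


def is_lfs_tracked(path, patterns):
    """Approximate Git's matching: slash-free patterns match the basename only.

    fnmatch's '*' also matches '/', unlike Git, so treat this as a quick
    sanity check rather than an exact reimplementation of gitattributes.
    """
    basename = path.rsplit("/", 1)[-1]
    for pattern in patterns:
        target = path if "/" in pattern else basename
        if fnmatch.fnmatchcase(target, pattern):
            return True
    return False


def expected_layer_files(model_dir=MODEL_DIR, num_layers=NUM_LAYERS):
    """Paths of the per-layer text axmodels listed in this commit."""
    return [f"{model_dir}/qwen3_vl_text_p128_l{i}_together.axmodel" for i in range(num_layers)]


def missing_from_lfs(paths, patterns):
    """Return the paths that no LFS pattern covers."""
    return [p for p in paths if not is_lfs_tracked(p, patterns)]
```

Run against the `.gitattributes` from this commit, `missing_from_lfs(expected_layer_files(), lfs_patterns(text))` should return an empty list, since all of l0 through l27 appear among the added lines.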
Qwen3-VL-2B-Instruct-AX650-c128_p1152/Qwen3-VL-2B-Instruct_vision.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f8bd7c1864e378ebccd7c50b006586360dea7f0302db8662cfe93773603ae749
+size 452430541

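Each ADDED `.axmodel`/`.bin` entry in this commit is a three-line Git LFS pointer (spec version, `sha256` object id, byte size), not the model itself. If a clone skipped `git lfs pull`, these small pointer files are what ends up on the device. The sketch below assumes only the pointer layout shown here: it parses a pointer and checks a downloaded file against its size and hash. `parse_lfs_pointer` and `verify_download` are illustrative names, not part of any Axera or Git LFS tool.

```python
import hashlib
import os

LFS_SPEC_V1 = "https://git-lfs.github.com/spec/v1"


def parse_lfs_pointer(text):
    """Parse the 'key value' lines of a Git LFS pointer into oid and size."""
    fields = {}
    for line in text.splitlines():
        if line.strip():
            key, _, value = line.partition(" ")
            fields[key] = value.strip()
    if fields.get("version") != LFS_SPEC_V1:
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields.get("oid", "").partition(":")
    if algo != "sha256" or not digest:
        raise ValueError("missing or unsupported oid")
    return {"oid": digest, "size": int(fields["size"])}


def verify_download(path, pointer, chunk_size=1 << 20):
    """True if the file at path has the pointer's byte size and sha256 digest."""
    if os.path.getsize(path) != pointer["size"]:
        return False  # cheap check first; also catches an un-pulled pointer file
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest() == pointer["oid"]
```

Checking the size before hashing keeps the common failure (a pointer file sitting where a 452 MB model should be) instant, and the hash is only computed when the size already matches.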
Qwen3-VL-2B-Instruct-AX650-c128_p1152/model.embed_tokens.weight.bfloat16.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:91c45bb2b9d8b678ceba1e3f0da785e47e5e6316384be544aa71b3c5d68ed733
+size 622329856

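The pointer above gives `model.embed_tokens.weight.bfloat16.bin` a size of 622,329,856 bytes. Assuming the file is a raw, header-less, row-major table of little-endian bfloat16 values with a text hidden size of 2048 (an assumption, but consistent with the 294912 = 144 × 2048 floats per image embedding in the demo logs), that is exactly 151,936 rows × 2048 columns × 2 bytes, one row per token id. The demo log reports `LLaMaEmbedSelector use mmap`, which suggests the runtime looks rows up through a memory map; the Python sketch below mimics that idea under these assumptions and is not the runtime's code.

```python
import mmap
import struct

HIDDEN_SIZE = 2048  # assumed text hidden size of Qwen3-VL-2B
BYTES_PER_BF16 = 2


def bf16_to_float(bits):
    """bfloat16 is the top 16 bits of a float32, so shift left and reinterpret."""
    return struct.unpack("<f", struct.pack("<I", bits << 16))[0]


def embedding_row(path, token_id, hidden_size=HIDDEN_SIZE):
    """Return one token's embedding from a raw row-major little-endian bf16 table."""
    row_bytes = hidden_size * BYTES_PER_BF16
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        num_rows = len(mm) // row_bytes
        if not 0 <= token_id < num_rows:
            raise IndexError(f"token_id {token_id} is outside [0, {num_rows})")
        start = token_id * row_bytes
        # Only the requested row is paged in; the rest of the file stays on disk.
        bits = struct.unpack(f"<{hidden_size}H", mm[start:start + row_bytes])
    return [bf16_to_float(b) for b in bits]
```

For example, `embedding_row("Qwen3-VL-2B-Instruct-AX650-c128_p1152/model.embed_tokens.weight.bfloat16.bin", 151645)` would read the row for the `eos_id` printed in the demo logs, if the layout assumption holds.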
Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l0_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d35ad01c9630b23ae0715c3604ff01581debc033934f7e42feb38fd385cab4d9
+size 73017579

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l10_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:6fae923bae282c1dce739e360615684ecd5790aa8d221c52bec0a10b54b95745
+size 73017227

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l11_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:61a0661023037cafe2666be3d6e74e6656eccfd4e08a4eada20476a46cfc78ac
+size 73013195

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l12_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ec00c49eb7c6f282a3b8df8c5ed12060abd652e4a6fded9feb69c472354ab261
+size 73018731

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l13_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c991fe51f02c8e1f0c3095c7e84888d0aa31d85cb148bf2696fadc31dfbc6cc0
+size 73015723

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l14_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:6606f5e112ce0116c5fb2a75e1e859b1262dc88520f9fcce7af9803e2f3b5ed5
+size 73010379

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l15_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:37c53e7d6d1601107b4fd220b5ec7c569754ee8a3ec55876157d06499c1781eb
+size 73010379

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l16_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:371364a9461c0905e97f239b465b3b2f9cbb147b7ae95a356b47ebf74190e98e
+size 73002091

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l17_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d0d661a297436ecf2c20b537c562b54446abb7aeba206fe6ec1f9a7e05a1c57d
+size 73009131

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l18_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:24b0392b48879fb2cb35ff600c11f240b31e7b22126992cfa727047f35c7565c
+size 73010827

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l19_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:78f7899e82de2c02d4a37e5ffe6b37b2fd9f573e4e0ba3aaeaa10dda590adf64
+size 73008747

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l1_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:2356413dbd20507f502c05b387d90700f92e960cc5c27a5a8cd56b7b6fa9da44
+size 73015243

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l20_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d92b4ed9e9fa201f09cfd5af7d433d7001fceeb6da3f2332098c178a84e8781f
+size 73007627

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l21_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:6fdf7d91127a444f67391aac8c6990582612ca22eaabc4706a5743eb44d7d9a4
+size 73010923

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l22_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d6b386c94c28a85954ba42ad5b679f21b69450e18c5f48e2152c6c33de7e257a
+size 73011915

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l23_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1fa54ebaf7e6f3438a4665a2a106afe6940e379b18c5441c947f46565c80ebf3
+size 73012939

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l24_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4691860fa6623e93e90d6cac9f0f1c35aa39cf444b7867401ba4e954d3f6ef77
+size 73018251

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l25_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:0a4cbeea075f7e2666ec64452581769b21d245fa42ec917f891c4a74a5ea3694
+size 73017227

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l26_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:98e4ea7cab1e872fef4315def8b06d8e3dae4cbc5aee09a02c00331ec458bfc0
+size 73012747

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l27_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:158747691d802f9b8873784fe238f54961068d5d337bad8c467720182056904b
+size 73019211

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l2_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d5e3cfd99a40f324c5ab5dd0be0f94536edf72b69c83e32376e3130fbffc87af
+size 73015755

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l3_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:2ab35c47469478e044c115d76a33b9e058ff29108aa74563f9d60ca0fa1bf31c
+size 73016427

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l4_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:12d6fe031ade93a0ceecf632c4dcde5411e2262a8632edbdcd89ae9aed278b9f
+size 73014987

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l5_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:361da9989d0fbcfb2730992967565b09303379ddea90e84375e4cf9cdcd89841
+size 73014603

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l6_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1aca7a41fdd3950bdb95198088b24e21aff86ac5ae6375db32b9bd7a2a3b1175
+size 73010507

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l7_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:84a7b57f821e683970fbdd2346f57242c3ac87ef19fd2769823a62acedc3d222
+size 73018955

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l8_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:5de3147a63b1ae3974925664ecd5bf640a3155888de211e6ff915ffc2a8bce9a
+size 73017835

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_p128_l9_together.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:75ab25d28cf4bd87a7d6e5f3115432acf92a04144b267bb742bd3c3e9616b84b
+size 73009259

Qwen3-VL-2B-Instruct-AX650-c128_p1152/qwen3_vl_text_post.axmodel

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:40ff92e3c93b96b34d4d440d69cf5ae516f445f7dd957eec9775235c0944b053
+size 339277488

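Adding up the `size` fields of the pointers above gives the download size of the model directory. The sketch below only restates those numbers; `LAYER_SIZES`, `OTHER_SIZES` and `total_model_bytes` are hypothetical helper names, and the sizes are the LFS object sizes, not measured memory use on the NPU.

```python
# Byte sizes copied from the LFS pointers in this commit, keyed by layer index.
LAYER_SIZES = {
    0: 73017579, 1: 73015243, 2: 73015755, 3: 73016427, 4: 73014987,
    5: 73014603, 6: 73010507, 7: 73018955, 8: 73017835, 9: 73009259,
    10: 73017227, 11: 73013195, 12: 73018731, 13: 73015723, 14: 73010379,
    15: 73010379, 16: 73002091, 17: 73009131, 18: 73010827, 19: 73008747,
    20: 73007627, 21: 73010923, 22: 73011915, 23: 73012939, 24: 73018251,
    25: 73017227, 26: 73012747, 27: 73019211,
}
OTHER_SIZES = {
    "Qwen3-VL-2B-Instruct_vision.axmodel": 452430541,
    "model.embed_tokens.weight.bfloat16.bin": 622329856,
    "qwen3_vl_text_post.axmodel": 339277488,
}


def total_model_bytes():
    """Total bytes in Qwen3-VL-2B-Instruct-AX650-c128_p1152/ (layers plus other files)."""
    return sum(LAYER_SIZES.values()) + sum(OTHER_SIZES.values())


if __name__ == "__main__":
    print(f"{len(LAYER_SIZES)} layer files, {total_model_bytes():,} bytes in total")
```

With the values from this commit the total is 3,458,416,305 bytes (about 3.46 GB), of which the 28 decoder-layer files account for roughly 2.04 GB.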
README.md

CHANGED

@@ -1,3 +1,233 @@
----
-license: mit
-
+---
+license: mit
+language:
+- en
+- zh
+base_model:
+- Qwen/Qwen3-VL-2B-Instruct
+- Qwen/Qwen3-VL-4B-Instruct
+pipeline_tag: image-text-to-text
+library_name: transformers
+tags:
+- Qwen3-VL
+- Qwen3-VL-2B-Instruct
+- Qwen3-VL-4B-Instruct
+- Int8
+- VLM
+---
+
+# Qwen3-VL
+
+These versions of Qwen3-VL-2B-Instruct and Qwen3-VL-4B-Instruct have been converted to run on the Axera NPU using **w8a16** quantization.
+
+Compatible with Pulsar2 version: 5.0
+
+## Conversion tool links
+
+If you are interested in model conversion, you can try exporting the axmodel files from the original repos:
+
+- https://huggingface.co/Qwen/Qwen3-VL-2B-Instruct
+- https://huggingface.co/Qwen/Qwen3-VL-4B-Instruct
+
+[Pulsar2: How to convert an LLM from Hugging Face to axmodel](https://pulsar2-docs.readthedocs.io/en/latest/appendix/build_llm.html)
+
+[AXera NPU HOST LLM Runtime](https://github.com/AXERA-TECH/Qwen3-VL.AXERA)
+
+
+## Supported platforms
+
+- AX650
+- AX650N demo board
+- [M4N-Dock(爱芯派Pro)](https://wiki.sipeed.com/hardware/zh/maixIV/m4ndock/m4ndock.html)
+- [M.2 Accelerator card](https://axcl-docs.readthedocs.io/zh-cn/latest/doc_guide_hardware.html)
+
+**Image processing**
+| Chip | Input size | Images | Image encoder | TTFT (320 tokens) | Decode speed (w8a16) |
+|--|--|--|--|--|--|
+|AX650| 384*384 | 1 | 207 ms | 392 ms | 9.5 tokens/sec|
+
+**Video processing**
+| Chip | Input size | Images (frames) | Image encoder | TTFT (600 tokens) | Decode speed (w8a16) |
+|--|--|--|--|--|--|
+|AX650| 384*384 | 8 | 725 ms | 1045 ms | 9.5 tokens/sec|

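The two tables give a time to first token (TTFT) and a steady decode rate, which is enough for a back-of-the-envelope estimate of how long a complete answer takes. The sketch assumes decoding stays at the listed 9.5 tokens/sec for the whole reply and that the first token arrives at TTFT. The tables do not say whether TTFT already includes the image-encoder time, so `encoder_ms` is left as an optional input; `estimate_latency_s` is a hypothetical helper.

```python
def estimate_latency_s(ttft_ms, decode_tokens_per_s, output_tokens, encoder_ms=0.0):
    """Rough wall-clock estimate for one reply, in seconds.

    The first token arrives after ttft_ms (plus encoder_ms if TTFT does not
    already include image encoding); each remaining token costs 1 / decode rate.
    """
    if output_tokens < 1:
        raise ValueError("output_tokens must be at least 1")
    return (encoder_ms + ttft_ms) / 1000.0 + (output_tokens - 1) / decode_tokens_per_s
```

For a 200-token image answer, `estimate_latency_s(392, 9.5, 200)` gives about 21.3 s, so on this setup decoding, not prefill, dominates the response time.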
+
+DDR capacity refers to the CMM memory that the model consumes. Make sure the CMM allocation on the development board is larger than this value.

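The demo logs print `attr.axmodel_num:28`, `kv_cache_size : 1024` and `kv_cache_num: 2047`. If these are read as 28 decoder layers, 1024 values per K (and per V) row, and room for 2047 tokens, and if the cache is stored in 16-bit form to match w8a16, the KV cache alone needs a fixed slice of CMM. All of these interpretations are assumptions rather than documented runtime behaviour; the sketch below just turns them into a number.

```python
# Values copied from the demo log; their meaning here is an assumption.
NUM_LAYERS = 28      # attr.axmodel_num:28
KV_WIDTH = 1024      # kv_cache_size : 1024, read as values per K (or V) row
MAX_TOKENS = 2047    # kv_cache_num: 2047
BYTES_PER_VALUE = 2  # assumed 16-bit (bf16/fp16) KV storage


def kv_cache_bytes(num_layers=NUM_LAYERS, max_tokens=MAX_TOKENS,
                   kv_width=KV_WIDTH, bytes_per_value=BYTES_PER_VALUE):
    """Bytes needed to hold one K row and one V row per token in every layer."""
    return num_layers * 2 * max_tokens * kv_width * bytes_per_value


if __name__ == "__main__":
    print(f"KV cache: {kv_cache_bytes() / 2**20:.1f} MiB")
```

Under those assumptions the cache comes to 234,766,336 bytes (about 224 MiB), on top of the model files themselves.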
+
+## How to use
+
+Download all files from this repository to the device.
+
+**If you are using an AX650 board**
+
+### Prepare the tokenizer server
+
+#### Install transformers
+
+```
+pip install -r requirements.txt
+```
+
+### Demo run
+
+#### Image understanding demo
+
+##### Start the tokenizer server for the image understanding demo
+
+```
+python3 tokenizer_images.py --port 8080
+```

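The demo binary connects to this tokenizer server at start-up (the logs show `connect http://127.0.0.1:8080 ok`), so it can help to confirm the port is open before launching `run_qwen3_vl_2b_image.sh`. The sketch below only checks that something accepts TCP connections on the port; it does not speak the server's HTTP protocol, whose endpoints are not documented here. `tokenizer_server_ready` is a hypothetical helper.

```python
import socket


def tokenizer_server_ready(host="127.0.0.1", port=8080, timeout=1.0):
    """Return True if something is accepting TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

A plain TCP probe is deliberately protocol-agnostic: it works the same for `tokenizer_images.py` and `tokenizer_video.py`, since both are started with `--port 8080`.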
+
+##### Run the image understanding demo
+
+- Input text
+
+```
+Describe this image
+```
+
+- Input image
+
+
+
+```
+root@ax650 ~/Qwen3-VL # bash run_qwen3_vl_2b_image.sh
+[I][ Init][ 156]: LLM init start
+[I][ Init][ 34]: connect http://127.0.0.1:8080 ok
+bos_id: -1, eos_id: 151645
+img_start_token: 151652
+img_context_token: 151655
+3% | ██ | 1 / 31 [0.01s<0.31s, 100.00 count/s] tokenizer init ok[I][ Init][ 26]: LLaMaEmbedSelector use mmap
+6% | ███ | 2 / 31 [0.02s<0.25s, 125.00 count/s] embed_selector init ok[I][ Init][ 198]: attr.axmodel_num:28
+103% | ██████████████████████████████████ | 32 / 31 [6.58s<6.37s, 4.86 count/s] init vpm axmodel ok,remain_cmm(3678 MB)[I][ Init][ 263]: IMAGE_CONTEXT_TOKEN: 151655, IMAGE_START_TOKEN: 151652
+[I][ Init][ 306]: image encoder output float32
+
+[I][ Init][ 336]: max_token_len : 2047
+[I][ Init][ 341]: kv_cache_size : 1024, kv_cache_num: 2047
+[I][ Init][ 349]: prefill_token_num : 128
+[I][ Init][ 353]: grp: 1, prefill_max_token_num : 1
+[I][ Init][ 353]: grp: 2, prefill_max_token_num : 128
+[I][ Init][ 353]: grp: 3, prefill_max_token_num : 256
+[I][ Init][ 353]: grp: 4, prefill_max_token_num : 384
+[I][ Init][ 353]: grp: 5, prefill_max_token_num : 512
+[I][ Init][ 353]: grp: 6, prefill_max_token_num : 640
+[I][ Init][ 353]: grp: 7, prefill_max_token_num : 768
+[I][ Init][ 353]: grp: 8, prefill_max_token_num : 896
+[I][ Init][ 353]: grp: 9, prefill_max_token_num : 1024
+[I][ Init][ 353]: grp: 10, prefill_max_token_num : 1152
+[I][ Init][ 357]: prefill_max_token_num : 1152
+[I][ Init][ 366]: LLM init ok
+Type "q" to exit, Ctrl+c to stop current running
+prompt >> Describe this image
+image >> images/demo.jpg
+[I][ Encode][ 490]: image encode time : 207.362000 ms, size : 1
+[I][ Encode][ 533]: input_ids size:167
+[I][ Encode][ 541]: offset 15
+[I][ Encode][ 557]: img_embed.size:1, 294912
+[I][ Encode][ 571]: out_embed size:342016
+[I][ Encode][ 573]: position_ids size:167
+[I][ Run][ 591]: input token num : 167, prefill_split_num : 2
+[I][ Run][ 625]: input_num_token:128
+[I][ Run][ 625]: input_num_token:39
+[I][ Run][ 786]: ttft: 391.51 ms
+Sure, this is a photo depicting a heartwarming scene on a beach.
+
+The photo captures a peaceful and lovely moment: a woman and her dog on the beach. They are sitting on the soft sand, with a sparkling sea and a soft sky in the background. Sunlight pours down from the top of the frame, coating the whole scene in a warm golden hue and creating a calm, comfortable, and happy atmosphere.
+
+The woman is wearing a plaid shirt. She is turned sideways to the camera with a gentle smile on her face, seemingly interacting with her dog. Her dog, a fluffy light-colored dog, rests its front paw gently on her leg, looking very close and trusting. The dog's tail is slightly raised, showing its excitement and happiness.
+
+The whole picture is filled with the warmth of love and companionship, showing the deep emotional bond between people and their pets.
+
+[N][ Run][ 913]: hit eos,avg 9.35 token/s
+
+prompt >>
+```

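The `input_num_token` lines show how the runtime feeds the prompt to the NPU: 167 tokens go in as one 128-token chunk plus a 39-token remainder, matching `prefill_token_num : 128`. The sketch below reproduces that split. Treating `prefill_max_token_num : 1152` (the `p1152` in the model folder name) as a hard upper bound on the prompt length is an assumption, and `prefill_chunks` is a hypothetical helper, not the runtime's API.

```python
PREFILL_CHUNK = 128        # prefill_token_num : 128  ("c128" in the folder name)
PREFILL_MAX_TOKENS = 1152  # prefill_max_token_num : 1152  ("p1152")


def prefill_chunks(num_tokens, chunk=PREFILL_CHUNK, max_tokens=PREFILL_MAX_TOKENS):
    """Split a prompt of num_tokens into full chunks plus a final remainder chunk."""
    if not 0 < num_tokens <= max_tokens:
        raise ValueError(f"prompt length {num_tokens} is outside (0, {max_tokens}]")
    full, rest = divmod(num_tokens, chunk)
    return [chunk] * full + ([rest] if rest else [])


if __name__ == "__main__":
    for n in (167, 600):  # input token counts from the image and video demos
        print(n, prefill_chunks(n))
```

For the two demos this yields `[128, 39]` and `[128, 128, 128, 128, 88]`, the same splits the logs report as `prefill_split_num : 2` and, in the video demo's log, `prefill_split_num : 5`.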
| 144 |

+

|

| 145 |

+

#### Video understand demo

|

| 146 |

+

|

| 147 |

+

##### start tokenizer server for image understand demo

|

| 148 |

+

|

| 149 |

+

```

|

| 150 |

+

python tokenizer_video.py --port 8080

|

| 151 |

+

```

|

| 152 |

+

|

| 153 |

+

##### run video understand demo

|

| 154 |

+

- input text

|

| 155 |

+

|

| 156 |

+

```

|

| 157 |

+

描述这个视频

|

| 158 |

+

```

|

| 159 |

+

|

| 160 |

+

- input video

|

| 161 |

+

|

| 162 |

+

./video

|

| 163 |

+

|

| 164 |

+

```

|

| 165 |

+

root@ax650 ~/Qwen3-VL # bash run_qwen3_vl_2b_video.sh

|

| 166 |

+

[I][ Init][ 156]: LLM init start

|

| 167 |

+

[I][ Init][ 34]: connect http://127.0.0.1:8080 ok

|

| 168 |

+

bos_id: -1, eos_id: 151645

|

| 169 |

+

img_start_token: 151652

|

| 170 |

+

img_context_token: 151656

|

| 171 |

+

3% | ██ | 1 / 31 [0.01s<0.31s, 100.00 count/s] tokenizer init ok[I][ Init][ 26]: LLaMaEmbedSelector use mmap

|

| 172 |

+

6% | ███ | 2 / 31 [0.01s<0.20s, 153.85 count/s] embed_selector init ok[I][ Init][ 198]: attr.axmodel_num:28

|

| 173 |

+

103% | ██████████████████████████████████ | 32 / 31 [30.34s<29.39s, 1.05 count/s] init vpm axmodel ok,remain_cmm(3678 MB)[I][ Init][ 263]: IMAGE_CONTEXT_TOKEN: 151656, IMAGE_START_TOKEN: 151652

|

| 174 |

+

[I][ Init][ 306]: image encoder output float32

|

| 175 |

+

|

| 176 |

+

[I][ Init][ 336]: max_token_len : 2047

|

| 177 |

+

[I][ Init][ 341]: kv_cache_size : 1024, kv_cache_num: 2047

|

| 178 |

+

[I][ Init][ 349]: prefill_token_num : 128

|

| 179 |

+

[I][ Init][ 353]: grp: 1, prefill_max_token_num : 1

|

| 180 |

+

[I][ Init][ 353]: grp: 2, prefill_max_token_num : 128

|

| 181 |

+

[I][ Init][ 353]: grp: 3, prefill_max_token_num : 256

|

| 182 |

+

[I][ Init][ 353]: grp: 4, prefill_max_token_num : 384

|

| 183 |

+

[I][ Init][ 353]: grp: 5, prefill_max_token_num : 512
[I][ Init][ 353]: grp: 6, prefill_max_token_num : 640
[I][ Init][ 353]: grp: 7, prefill_max_token_num : 768
[I][ Init][ 353]: grp: 8, prefill_max_token_num : 896
[I][ Init][ 353]: grp: 9, prefill_max_token_num : 1024
[I][ Init][ 353]: grp: 10, prefill_max_token_num : 1152
[I][ Init][ 357]: prefill_max_token_num : 1152
[I][ Init][ 366]: LLM init ok
Type "q" to exit, Ctrl+c to stop current running
prompt >> Describe this video
image >> video
video/frame_0000.jpg
video/frame_0008.jpg
video/frame_0016.jpg
video/frame_0024.jpg
video/frame_0032.jpg
video/frame_0040.jpg
video/frame_0048.jpg
video/frame_0056.jpg
[I][ Encode][ 490]: image encode time : 751.804993 ms, size : 4
[I][ Encode][ 533]: input_ids size:600
[I][ Encode][ 541]: offset 15
[I][ Encode][ 557]: img_embed.size:4, 294912
[I][ Encode][ 562]: offset:159
[I][ Encode][ 562]: offset:303
[I][ Encode][ 562]: offset:447
[I][ Encode][ 571]: out_embed size:1228800
[I][ Encode][ 573]: position_ids size:600
[I][ Run][ 591]: input token num : 600, prefill_split_num : 5
[I][ Run][ 625]: input_num_token:128
[I][ Run][ 625]: input_num_token:128
[I][ Run][ 625]: input_num_token:128
[I][ Run][ 625]: input_num_token:128
[I][ Run][ 625]: input_num_token:88
[I][ Run][ 786]: ttft: 1040.91 ms
Based on the images you provided, this is a video clip of two marmots interacting in a mountain environment.

- **Subjects**: There are two marmots in the frame (also called "mountain marmots" or "black-backed marmots"), standing on a patch of grass strewn with gravel. Their fur is a mix of grayish-brown and black, with distinct black stripes on their faces, a typical feature of marmots.

- **Behavior**: The two marmots are engaged in what looks like playful or social interaction. They swat at each other with their front paws and lean forward in energetic postures. In marmots, this behavior usually signals friendliness, play, or the building of social bonds.

- **Environment**: The background shows rolling mountains whose slopes are covered in green vegetation, under a clear, sunny sky. The whole scene feels natural, peaceful, and full of life.

- **Video style**: Judging from the sharpness and sense of motion in the frames, this may be a slow-motion or high-definition clip that captures lively, vivid moments of the marmots.

In summary, this video vividly records two marmots interacting amicably in a natural mountain setting, showing their lively and energetic nature.

[N][ Run][ 913]: hit eos,avg 9.44 token/s

prompt >>
```
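
The numbers in this log fit together under two assumptions: the prompt is prefilled in chunks of at most 128 tokens (the `prefill_max_token_num` groups step by 128), and each embedding row has `hidden_size` = 2048 values (taken from `qwen3-vl-tokenizer/config.json` in this commit). The Python sketch below checks that arithmetic; the helper names are hypothetical and the code is not taken from the runtime's source.

```python
import math

CHUNK = 128          # assumed prefill chunk size (the Init groups step by 128)
HIDDEN_SIZE = 2048   # text_config.hidden_size from config.json


def split_prefill(num_tokens: int, chunk: int = CHUNK) -> list[int]:
    """Split a prompt of `num_tokens` tokens into prefill chunks of at most `chunk`."""
    if num_tokens <= 0:
        return []
    n_chunks = math.ceil(num_tokens / chunk)
    sizes = [chunk] * (n_chunks - 1)
    sizes.append(num_tokens - chunk * (n_chunks - 1))  # last, possibly partial, chunk
    return sizes


def tokens_per_image(floats_per_image: int, hidden: int = HIDDEN_SIZE) -> int:
    """Visual tokens implied by one image embedding of `floats_per_image` values."""
    assert floats_per_image % hidden == 0, "embedding is not a whole number of rows"
    return floats_per_image // hidden


if __name__ == "__main__":
    print(split_prefill(600))          # "input token num : 600" -> [128, 128, 128, 128, 88]
    per_image = tokens_per_image(294912)
    print(per_image)                   # "img_embed.size:4, 294912" -> 144
    print([15 + i * per_image for i in range(4)])  # offsets -> [15, 159, 303, 447]
    print(1228800 // HIDDEN_SIZE)      # "out_embed size:1228800" -> 600 rows
```

Under these assumptions the 600-token prompt holds 4 × 144 = 576 visual tokens for the 4 embeddings the encoder reports for the 8 sampled frames, leaving 24 text and template tokens.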

config.json

ADDED

File without changes

images/demo.jpg

ADDED (Git LFS)

images/demo1.jpg

ADDED (Git LFS)

images/recoAll_attractions_1.jpg

ADDED (Git LFS)

images/recoAll_attractions_2.jpg

ADDED (Git LFS)

images/recoAll_attractions_3.jpg

ADDED (Git LFS)

images/recoAll_attractions_4.jpg

ADDED (Git LFS)

images/ssd_car.jpg

ADDED (Git LFS)

images/ssd_horse.jpg

ADDED (Git LFS)

main_ax650

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:bdbd72d706b584081c103af2fe6a59426140f0ed0b79d9baf4282a601e2e014c
size 6593472

main_axcl_aarch64

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:14a18915cc18ed06c58265a3ca78a04430ae5538bced459531cd58866eb1cfa3
size 1856144

main_axcl_x86

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:e97f652a3b7616e320780734d43cac95429f5f68d77047d395789736b8ca5948
size 1885400
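
Each `main_*` binary above is committed as a three-line Git LFS pointer (`version`, `oid`, `size`) rather than as the binary itself. Below is a minimal Python parser for that format plus a check of downloaded bytes against the pointer. The function names are hypothetical, only the `sha256` oid type is handled, and the spec's line-ordering rules are not enforced.

```python
import hashlib
import re

LFS_SPEC_V1 = "https://git-lfs.github.com/spec/v1"


def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS v1 pointer file (one 'key value' pair per line)."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.strip().partition(" ")
        fields[key] = value
    if fields.get("version") != LFS_SPEC_V1:
        raise ValueError("not a Git LFS v1 pointer")
    if "oid" not in fields or "size" not in fields:
        raise ValueError("pointer is missing 'oid' or 'size'")
    algo, _, digest = fields["oid"].partition(":")
    if algo != "sha256" or not re.fullmatch(r"[0-9a-f]{64}", digest):
        raise ValueError("expected a sha256 oid")
    return {"oid": digest, "size": int(fields["size"])}


def matches_pointer(pointer: dict, data: bytes) -> bool:
    """True if `data` has the size and SHA-256 digest recorded in the pointer."""
    return len(data) == pointer["size"] and hashlib.sha256(data).hexdigest() == pointer["oid"]


# Pointer text for main_ax650, copied from this commit.
MAIN_AX650_POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:bdbd72d706b584081c103af2fe6a59426140f0ed0b79d9baf4282a601e2e014c
size 6593472
"""

pointer = parse_lfs_pointer(MAIN_AX650_POINTER)
print(pointer["size"])  # 6593472 (bytes)
```

The size check runs before hashing, so a truncated download is rejected without computing a digest.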

post_config.json

ADDED

@@ -0,0 +1,14 @@

{
  "enable_temperature" : true,
  "temperature" : 0.7,

  "enable_repetition_penalty" : false,
  "repetition_penalty" : 1,
  "penalty_window" : 30,

  "enable_top_p_sampling" : false,
  "top_p" : 0.8,

  "enable_top_k_sampling" : true,
  "top_k" : 20
}
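
With these flags, sampling uses temperature 0.7 and top-k 20, while top-p and the repetition penalty are switched off. The Python sketch below shows one plausible way such flags combine. It is an assumption, not the `main_ax650` implementation: the order (repetition penalty, then temperature, then top-k, softmax, top-p) and the CTRL-style penalty over the last `penalty_window` tokens are our choices.

```python
import math
import random

# Values copied from post_config.json in this commit.
POST_CONFIG = {
    "enable_temperature": True, "temperature": 0.7,
    "enable_repetition_penalty": False, "repetition_penalty": 1, "penalty_window": 30,
    "enable_top_p_sampling": False, "top_p": 0.8,
    "enable_top_k_sampling": True, "top_k": 20,
}


def sample_next_token(logits, history, cfg=POST_CONFIG, rng=random):
    """Sample one token id from `logits` (list of floats) using the flags in `cfg`."""
    scores = list(logits)
    if cfg["enable_repetition_penalty"]:
        # CTRL-style penalty on tokens seen in the last `penalty_window` steps (assumed semantics).
        for tok in set(history[-cfg["penalty_window"]:]):
            p = cfg["repetition_penalty"]
            scores[tok] = scores[tok] / p if scores[tok] > 0 else scores[tok] * p
    if cfg["enable_temperature"]:
        scores = [s / cfg["temperature"] for s in scores]
    # Candidate ids, highest score first.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    if cfg["enable_top_k_sampling"]:
        order = order[: cfg["top_k"]]
    # Numerically stable softmax over the surviving candidates.
    best = scores[order[0]]
    weights = [math.exp(scores[i] - best) for i in order]
    total = sum(weights)
    probs = [w / total for w in weights]
    if cfg["enable_top_p_sampling"]:
        # Keep the smallest prefix whose cumulative probability reaches top_p.
        cum, cut = 0.0, len(order)
        for n, p in enumerate(probs, start=1):
            cum += p
            if cum >= cfg["top_p"]:
                cut = n
                break
        order, probs = order[:cut], probs[:cut]
    # rng.choices normalizes the weights itself, so a truncated probs list is fine.
    return rng.choices(order, weights=probs, k=1)[0]


rng = random.Random(0)
logits = [0.1 * i for i in range(100)]     # toy vocabulary of 100 ids
token = sample_next_token(logits, history=[], rng=rng)
print(token)  # one of the 20 highest-scoring ids, 80..99
```

Setting `top_k` to 1 reduces this sketch to greedy decoding, which is a quick way to sanity-check a port of the flags.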

qwen3-vl-tokenizer/README.md

ADDED

@@ -0,0 +1,192 @@

---
license: apache-2.0
pipeline_tag: image-text-to-text
library_name: transformers
---
<a href="https://chat.qwenlm.ai/" target="_blank" style="margin: 2px;">
    <img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>
</a>


# Qwen3-VL-2B-Instruct


Meet Qwen3-VL, the most powerful vision-language model in the Qwen series to date.

This generation delivers comprehensive upgrades across the board: superior text understanding and generation, deeper visual perception and reasoning, extended context length, enhanced comprehension of spatial and video dynamics, and stronger agent interaction capabilities.

Available in Dense and MoE architectures that scale from edge to cloud, with Instruct and reasoning-enhanced Thinking editions for flexible, on-demand deployment.


#### Key Enhancements:

* **Visual Agent**: Operates PC/mobile GUIs: recognizes elements, understands functions, invokes tools, and completes tasks.

* **Visual Coding Boost**: Generates Draw.io/HTML/CSS/JS from images/videos.

* **Advanced Spatial Perception**: Judges object positions, viewpoints, and occlusions; provides stronger 2D grounding and enables 3D grounding for spatial reasoning and embodied AI.

* **Long Context & Video Understanding**: Native 256K context, expandable to 1M; handles books and hours-long video with full recall and second-level indexing.

* **Enhanced Multimodal Reasoning**: Excels in STEM/math, with causal analysis and logical, evidence-based answers.

* **Upgraded Visual Recognition**: Broader, higher-quality pretraining lets it “recognize everything”: celebrities, anime, products, landmarks, flora/fauna, etc.

* **Expanded OCR**: Supports 32 languages (up from 19); robust in low light, blur, and tilt; better with rare/ancient characters and jargon; improved long-document structure parsing.

* **Text Understanding on par with pure LLMs**: Seamless text–vision fusion for lossless, unified comprehension.


#### Model Architecture Updates:

<p align="center">
    <img src="https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-VL/qwen3vl_arc.jpg" width="80%"/>
</p>


1. **Interleaved-MRoPE**: Full-frequency allocation over time, width, and height via robust positional embeddings, enhancing long-horizon video reasoning.

2. **DeepStack**: Fuses multi-level ViT features to capture fine-grained details and sharpen image–text alignment.

3. **Text–Timestamp Alignment:** Moves beyond T-RoPE to precise, timestamp-grounded event localization for stronger video temporal modeling.
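
To make "full-frequency allocation" concrete: `qwen3-vl-tokenizer/config.json` in this commit sets `head_dim` 128 and `mrope_section` [24, 20, 20], that is, 64 rotary frequency pairs shared among time (T), height (H), and width (W). The Python sketch below assumes an interleaving in which H and W take every third slot (starting at 1 and 2) across the first 3 × 20 slots and T keeps the rest. This follows our reading of the Hugging Face `transformers` Qwen3-VL code and should be verified against it. A block layout is included for comparison.

```python
def interleaved_axes(mrope_section=(24, 20, 20)):
    """Map each rotary frequency index to the axis ('T', 'H', 'W') that uses it.

    Assumed layout: H takes indices 1, 4, 7, ... and W takes 2, 5, 8, ...
    over the first 3 * section slots; T keeps all remaining indices.
    """
    t, h, w = mrope_section
    axes = ["T"] * (t + h + w)          # 64 frequency pairs when head_dim = 128
    for axis, offset, count in (("H", 1, h), ("W", 2, w)):
        for idx in range(offset, 3 * count, 3):
            axes[idx] = axis
    return axes


def contiguous_axes(mrope_section=(24, 20, 20)):
    """Block layout for comparison: one contiguous band of frequencies per axis."""
    t, h, w = mrope_section
    return ["T"] * t + ["H"] * h + ["W"] * w


def index_span(axes, axis):
    """Lowest and highest frequency index assigned to `axis`."""
    idx = [i for i, a in enumerate(axes) if a == axis]
    return min(idx), max(idx)


inter = interleaved_axes()
print("".join(inter))                   # "THW" repeated 20 times, then "TTTT"
for axis in "THW":
    print(axis, index_span(contiguous_axes(), axis), index_span(inter, axis))
```

Because the rotary frequency falls as the index grows, the block layout confines W to indices 44 to 63 (only the lowest frequencies), whereas in the interleaved layout every axis spans nearly the whole range from 0 to 63.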

|

| 52 |

+

|

| 53 |

+

This is the weight repository for Qwen3-VL-2B-Instruct.

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

---

|

| 57 |

+

|

| 58 |

+

## Model Performance

|

| 59 |

+

|

| 60 |

+

**Multimodal performance**

|

| 61 |

+

|

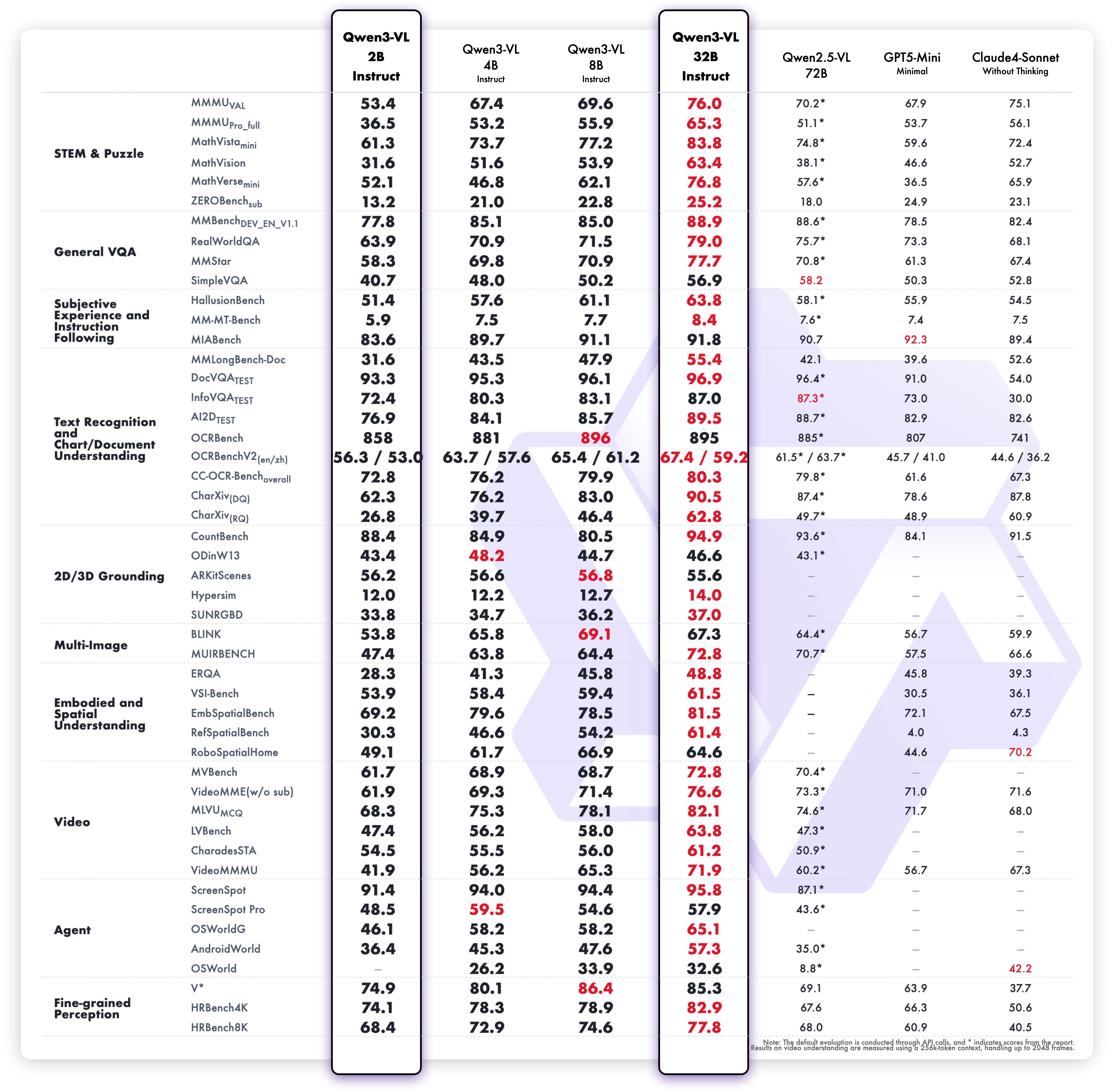

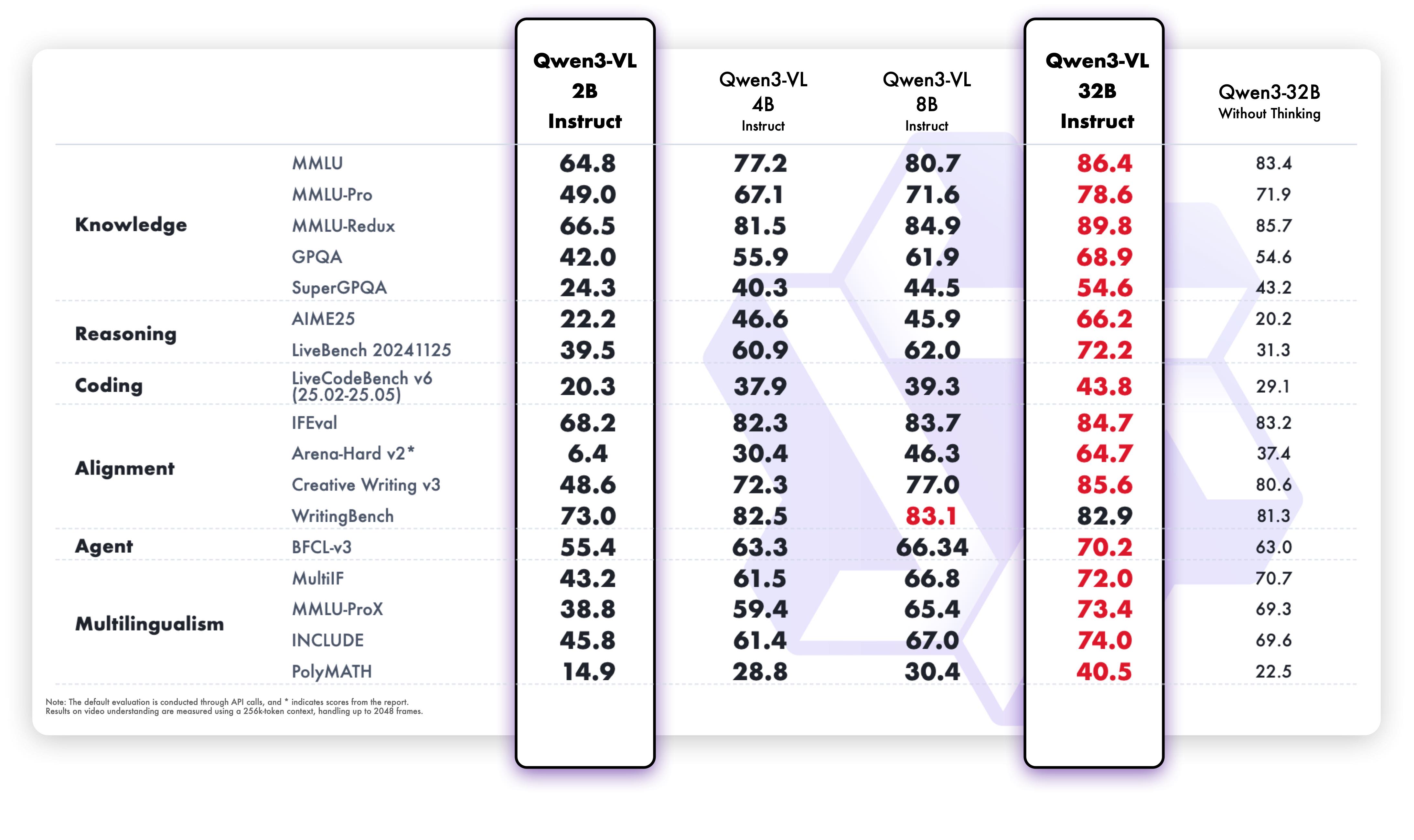

| 62 |

+

|

| 63 |

+

|

| 64 |

+

**Pure text performance**

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

## Quickstart

|

| 68 |

+

|

| 69 |

+

Below, we provide simple examples to show how to use Qwen3-VL with 🤖 ModelScope and 🤗 Transformers.

|

| 70 |

+

|

| 71 |

+

The code of Qwen3-VL has been in the latest Hugging Face transformers and we advise you to build from source with command:

|

| 72 |

+

```

|

| 73 |

+

pip install git+https://github.com/huggingface/transformers

|

| 74 |

+

# pip install transformers==4.57.0 # currently, V4.57.0 is not released

|

| 75 |

+

```

|

| 76 |

+

|

| 77 |

+

### Using 🤗 Transformers to Chat

|

| 78 |

+

|

| 79 |

+

Here we show a code snippet to show how to use the chat model with `transformers`:

|

| 80 |

+

|

| 81 |

+

```python
import torch  # used by the commented-out flash_attention_2 variant below
from transformers import Qwen3VLForConditionalGeneration, AutoProcessor

# default: Load the model on the available device(s)
model = Qwen3VLForConditionalGeneration.from_pretrained(
    "Qwen/Qwen3-VL-2B-Instruct", dtype="auto", device_map="auto"
)

# We recommend enabling flash_attention_2 for better acceleration and memory saving, especially in multi-image and video scenarios.
# model = Qwen3VLForConditionalGeneration.from_pretrained(
#     "Qwen/Qwen3-VL-2B-Instruct",
#     dtype=torch.bfloat16,
#     attn_implementation="flash_attention_2",
#     device_map="auto",
# )

processor = AutoProcessor.from_pretrained("Qwen/Qwen3-VL-2B-Instruct")

messages = [
    {
        "role": "user",
        "content": [
            {
                "type": "image",
                "image": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen-VL/assets/demo.jpeg",
            },
            {"type": "text", "text": "Describe this image."},
        ],
    }
]

# Preparation for inference
inputs = processor.apply_chat_template(
    messages,
    tokenize=True,
    add_generation_prompt=True,
    return_dict=True,
    return_tensors="pt"
)
inputs = inputs.to(model.device)

# Inference: Generation of the output
generated_ids = model.generate(**inputs, max_new_tokens=128)
# Strip the prompt tokens so that only the newly generated tokens are decoded
generated_ids_trimmed = [
    out_ids[len(in_ids) :] for in_ids, out_ids in zip(inputs.input_ids, generated_ids)
]
output_text = processor.batch_decode(
    generated_ids_trimmed, skip_special_tokens=True, clean_up_tokenization_spaces=False
)
print(output_text)
```

### Generation Hyperparameters

#### VL

```bash
export greedy='false'
export top_p=0.8
export top_k=20
export temperature=0.7
export repetition_penalty=1.0
export presence_penalty=1.5
export out_seq_length=16384
```

#### Text

```bash
export greedy='false'
export top_p=1.0
export top_k=40
export repetition_penalty=1.0
export presence_penalty=2.0
export temperature=1.0
export out_seq_length=32768
```
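
Both settings use `presence_penalty` and `repetition_penalty`, which this README does not define. The Python sketch below assumes the common definitions: an OpenAI-style presence penalty that subtracts a flat constant from every token already generated, and a CTRL-style repetition penalty that divides positive logits and multiplies negative ones. The serving stack behind these numbers may define them differently, and `apply_penalties` is a hypothetical helper.

```python
def apply_penalties(logits, generated, presence_penalty=1.5, repetition_penalty=1.0):
    """Return a copy of `logits` with presence and repetition penalties applied.

    presence_penalty: subtracted once from every token id already in `generated`,
        no matter how many times it occurred (OpenAI-style definition, assumed).
    repetition_penalty: CTRL-style; positive logits are divided by it and negative
        logits multiplied by it. 1.0 disables it, as in both settings above.
    """
    out = list(logits)
    for tok in set(generated):
        if repetition_penalty != 1.0:
            out[tok] = out[tok] / repetition_penalty if out[tok] > 0 else out[tok] * repetition_penalty
        out[tok] -= presence_penalty
    return out


# VL settings above: presence_penalty=1.5, repetition_penalty=1.0.
print(apply_penalties([2.0, 1.0, 0.5], generated=[0, 0, 2]))  # [0.5, 1.0, -1.0]
```

Token 0 appeared twice but is penalized only once. That count-independence is what separates a presence penalty from a frequency penalty.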

## Citation

If you find our work helpful, feel free to cite us.

```
@misc{qwen3technicalreport,
  title={Qwen3 Technical Report},
  author={Qwen Team},
  year={2025},
  eprint={2505.09388},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2505.09388},
}

@article{Qwen2.5-VL,
  title={Qwen2.5-VL Technical Report},
  author={Bai, Shuai and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Song, Sibo and Dang, Kai and Wang, Peng and Wang, Shijie and Tang, Jun and Zhong, Humen and Zhu, Yuanzhi and Yang, Mingkun and Li, Zhaohai and Wan, Jianqiang and Wang, Pengfei and Ding, Wei and Fu, Zheren and Xu, Yiheng and Ye, Jiabo and Zhang, Xi and Xie, Tianbao and Cheng, Zesen and Zhang, Hang and Yang, Zhibo and Xu, Haiyang and Lin, Junyang},
  journal={arXiv preprint arXiv:2502.13923},
  year={2025}
}

@article{Qwen2VL,
  title={Qwen2-VL: Enhancing Vision-Language Model's Perception of the World at Any Resolution},
  author={Wang, Peng and Bai, Shuai and Tan, Sinan and Wang, Shijie and Fan, Zhihao and Bai, Jinze and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Fan, Yang and Dang, Kai and Du, Mengfei and Ren, Xuancheng and Men, Rui and Liu, Dayiheng and Zhou, Chang and Zhou, Jingren and Lin, Junyang},
  journal={arXiv preprint arXiv:2409.12191},
  year={2024}
}

@article{Qwen-VL,
  title={Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond},
  author={Bai, Jinze and Bai, Shuai and Yang, Shusheng and Wang, Shijie and Tan, Sinan and Wang, Peng and Lin, Junyang and Zhou, Chang and Zhou, Jingren},
  journal={arXiv preprint arXiv:2308.12966},
  year={2023}
}
```

qwen3-vl-tokenizer/chat_template.json

ADDED

@@ -0,0 +1,4 @@

{
"chat_template": "{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].role == 'system' %}\n {%- if messages[0].content is string %}\n {{- messages[0].content }}\n {%- else %}\n {%- for content in messages[0].content %}\n {%- if 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '\\n\\n' }}\n {%- endif %}\n {{- \"# Tools\\n\\nYou may call one or more functions to assist with the user query.\\n\\nYou are provided with function signatures within <tools></tools> XML tags:\\n<tools>\" }}\n {%- for tool in tools %}\n {{- \"\\n\" }}\n {{- tool | tojson }}\n {%- endfor %}\n {{- \"\\n</tools>\\n\\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\\n<tool_call>\\n{\\\"name\\\": <function-name>, \\\"arguments\\\": <args-json-object>}\\n</tool_call><|im_end|>\\n\" }}\n{%- else %}\n {%- if messages[0].role == 'system' %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].content is string %}\n {{- messages[0].content }}\n {%- else %}\n {%- for content in messages[0].content %}\n {%- if 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n{%- endif %}\n{%- set image_count = namespace(value=0) %}\n{%- set video_count = namespace(value=0) %}\n{%- for message in messages %}\n {%- if message.role == \"user\" %}\n {{- '<|im_start|>' + message.role + '\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content in message.content %}\n {%- if content.type == 'image' or 'image' in content or 'image_url' in content %}\n {%- set image_count.value = image_count.value + 1 %}\n {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}\n <|vision_start|><|image_pad|><|vision_end|>\n {%- elif content.type == 'video' or 'video' in content %}\n {%- set video_count.value = video_count.value + 1 %}\n {%- if add_vision_id %}Video {{ 
video_count.value }}: {% endif -%}\n <|vision_start|><|video_pad|><|vision_end|>\n {%- elif 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"assistant\" %}\n {{- '<|im_start|>' + message.role + '\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content_item in message.content %}\n {%- if 'text' in content_item %}\n {{- content_item.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {%- if message.tool_calls %}\n {%- for tool_call in message.tool_calls %}\n {%- if (loop.first and message.content) or (not loop.first) %}\n {{- '\\n' }}\n {%- endif %}\n {%- if tool_call.function %}\n {%- set tool_call = tool_call.function %}\n {%- endif %}\n {{- '<tool_call>\\n{\"name\": \"' }}\n {{- tool_call.name }}\n {{- '\", \"arguments\": ' }}\n {%- if tool_call.arguments is string %}\n {{- tool_call.arguments }}\n {%- else %}\n {{- tool_call.arguments | tojson }}\n {%- endif %}\n {{- '}\\n</tool_call>' }}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"tool\" %}\n {%- if loop.first or (messages[loop.index0 - 1].role != \"tool\") %}\n {{- '<|im_start|>user' }}\n {%- endif %}\n {{- '\\n<tool_response>\\n' }}\n {%- if message.content is string %}\n {{- message.content }}\n {%- else %}\n {%- for content in message.content %}\n {%- if content.type == 'image' or 'image' in content or 'image_url' in content %}\n {%- set image_count.value = image_count.value + 1 %}\n {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}\n <|vision_start|><|image_pad|><|vision_end|>\n {%- elif content.type == 'video' or 'video' in content %}\n {%- set video_count.value = video_count.value + 1 %}\n {%- if add_vision_id %}Video {{ video_count.value }}: {% endif -%}\n <|vision_start|><|video_pad|><|vision_end|>\n {%- elif 'text' in content %}\n {{- content.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n {{- 
'\\n</tool_response>' }}\n {%- if loop.last or (messages[loop.index0 + 1].role != \"tool\") %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n {%- endif %}\n{%- endfor %}\n{%- if add_generation_prompt %}\n {{- '<|im_start|>assistant\\n' }}\n{%- endif %}\n"

}
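
In the common case (no tools, no `add_vision_id`), the Jinja template above reduces to a simple string layout. The hypothetical `render_prompt` below reproduces that layout in plain Python so the special tokens are easy to see. It skips the tool-call and tool-response branches and is not a substitute for `processor.apply_chat_template`. As far as we know, the processor later expands each `<|image_pad|>` or `<|video_pad|>` into one token per visual patch.

```python
def _text_of(content):
    """Concatenate the text parts of a message's content (string or list of parts)."""
    if isinstance(content, str):
        return content
    return "".join(item["text"] for item in content if "text" in item)


def render_prompt(messages, add_generation_prompt=True):
    """Render messages the way the chat template does when no tools are passed."""
    parts = []
    if messages and messages[0]["role"] == "system":
        parts.append("<|im_start|>system\n" + _text_of(messages[0]["content"]) + "<|im_end|>\n")
    for msg in messages:
        if msg["role"] not in ("user", "assistant"):
            continue  # system is handled above; tool turns are not covered by this sketch
        parts.append("<|im_start|>" + msg["role"] + "\n")
        content = msg["content"]
        if isinstance(content, str):
            parts.append(content)
        else:
            for item in content:
                is_user = msg["role"] == "user"
                if is_user and (item.get("type") == "image" or "image" in item or "image_url" in item):
                    parts.append("<|vision_start|><|image_pad|><|vision_end|>")
                elif is_user and (item.get("type") == "video" or "video" in item):
                    parts.append("<|vision_start|><|video_pad|><|vision_end|>")
                elif "text" in item:
                    parts.append(item["text"])
        parts.append("<|im_end|>\n")
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


example = [{
    "role": "user",
    "content": [
        {"type": "image", "image": "demo.jpeg"},
        {"type": "text", "text": "Describe this image."},
    ],
}]
print(render_prompt(example))
```

Unlike some earlier Qwen-VL templates, this one inserts no default system prompt: a system turn appears only if the first message has the `system` role.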

qwen3-vl-tokenizer/config.json

ADDED

@@ -0,0 +1,63 @@

{
  "architectures": [
    "Qwen3VLForConditionalGeneration"
  ],
  "image_token_id": 151655,
  "model_type": "qwen3_vl",
  "text_config": {
    "attention_bias": false,
    "attention_dropout": 0.0,
    "bos_token_id": 151643,
    "dtype": "bfloat16",
    "eos_token_id": 151645,
    "head_dim": 128,
    "hidden_act": "silu",
    "hidden_size": 2048,
    "initializer_range": 0.02,
    "intermediate_size": 6144,
    "max_position_embeddings": 262144,
    "model_type": "qwen3_vl_text",
    "num_attention_heads": 16,
    "num_hidden_layers": 28,
    "num_key_value_heads": 8,
    "rms_norm_eps": 1e-06,
    "rope_scaling": {
      "mrope_interleaved": true,
      "mrope_section": [
        24,
        20,
        20
      ],
      "rope_type": "default"
    },
    "rope_theta": 5000000,
    "tie_word_embeddings": true,
    "use_cache": true,