---
base_model:
- Tarek07/Legion-V2.1-LLaMa-70B
library_name: transformers
tags:
- mergekit
- merge
- llmcompressor
- GPTQ
license: llama3.3
datasets:
- openerotica/erotiquant3
---

<p align="center">
<img width="120px" alt="Sentient Simulations Plumbob" src="https://www.sentientsimulations.com/transparent-plumbob2.png">
</p>
<p align="center"><a href="https://www.sentientsimulations.com/">[🏠Sentient Simulations]</a> | <a href="https://discord.com/invite/JTjbydmUAp">[Discord]</a> | <a href="https://www.patreon.com/SentientSims">[Patreon]</a></p>
<hr>

# Llama-3.1-70B-ArliAI-RPMax-v1.3-GPTQ

This repository contains a 4-bit GPTQ-quantized version of [Tarek07/Legion-V2.1-LLaMa-70B](https://huggingface.co/Tarek07/Legion-V2.1-LLaMa-70B), produced with [llm-compressor](https://github.com/vllm-project/llm-compressor).

## Quantization Settings

| **Attribute** | **Value** |
|---------------------------------|------------------------------------------------------------------------------------|
| **Algorithm** | GPTQ |
| **Layers** | Linear |
| **Weight Scheme** | W4A16 |
| **Group Size** | 128 |
| **Calibration Dataset** | [openerotica/erotiquant3](https://huggingface.co/datasets/openerotica/erotiquant3) |
| **Calibration Sequence Length** | 4096 tokens |
| **Calibration Samples** | 512 |
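
As a rough sanity check on the settings above, the sketch below estimates the average storage cost per weight for W4A16 with a group size of 128, plus the calibration token budget. It assumes one 16-bit scale per group of 128 weights and symmetric quantization (no stored zero points), and it treats all ~70B parameters as quantized Linear weights, ignoring embeddings and any layers left unquantized. These are illustrative assumptions, not figures measured from this checkpoint.

```python
def effective_bits_per_weight(weight_bits: int = 4, group_size: int = 128,
                              scale_bits: int = 16) -> float:
    """Average storage bits per weight for group-wise quantization.

    Assumes each group of `group_size` weights shares one scale of
    `scale_bits` bits and that no zero points are stored (symmetric scheme).
    """
    return weight_bits + scale_bits / group_size


def approx_weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def calibration_tokens(num_samples: int = 512, seq_len: int = 4096) -> int:
    """Total calibration tokens if every sample is exactly seq_len tokens long."""
    return num_samples * seq_len


if __name__ == "__main__":
    bpw = effective_bits_per_weight()  # 4 + 16/128 = 4.125
    print(f"{bpw} bits/weight")
    print(f"~{approx_weight_size_gb(70e9, bpw):.1f} GB for 70e9 weights")
    print(f"{calibration_tokens():,} calibration tokens")
```

Under these assumptions the quantized weights come to about 36 GB, versus about 140 GB for the same 70e9 weights stored at 16 bits, and calibration covers 512 × 4096 = 2,097,152 tokens.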

### Dataset Preprocessing

The calibration dataset was preprocessed as follows:
1. Extract the conversation data and structure it with role-based templates (`SYSTEM`, `USER`, `ASSISTANT`).
2. Tokenize the structured conversations with the model's tokenizer.
3. Filter out sequences shorter than 4096 tokens.
4. Shuffle the remaining sequences and select 512 samples for calibration.
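
The four preprocessing steps above can be sketched in plain Python. This is not the code from `compress.py`: the record layout (a list of `{"role", "content"}` turns), the `ROLE: text` rendering, the fixed shuffle seed, and truncating kept sequences to exactly `seq_len` tokens are all assumptions made for illustration. `encode` stands in for the model's tokenizer; with Hugging Face `transformers` it could be the `.encode` method of a tokenizer loaded via `AutoTokenizer.from_pretrained`.

```python
import random
from typing import Callable, Dict, List

# Hypothetical role labels and layout; the exact template used for this model is not documented here.
ROLE_TAGS = {"system": "SYSTEM", "user": "USER", "assistant": "ASSISTANT"}


def format_conversation(turns: List[Dict[str, str]]) -> str:
    """Step 1: render one conversation as role-tagged text."""
    return "\n".join(f"{ROLE_TAGS[t['role'].lower()]}: {t['content']}" for t in turns)


def build_calibration_set(
    conversations: List[List[Dict[str, str]]],
    encode: Callable[[str], List[int]],
    seq_len: int = 4096,
    num_samples: int = 512,
    seed: int = 42,
) -> List[List[int]]:
    # Step 2: tokenize each rendered conversation.
    tokenized = [encode(format_conversation(c)) for c in conversations]
    # Step 3: drop sequences shorter than seq_len; cutting the rest to exactly
    # seq_len tokens is an assumption, not something the card states.
    kept = [ids[:seq_len] for ids in tokenized if len(ids) >= seq_len]
    # Step 4: shuffle with a fixed seed and keep at most num_samples sequences.
    random.Random(seed).shuffle(kept)
    return kept[:num_samples]
```

With a toy character-level encoder such as `lambda s: [ord(c) for c in s]` and `seq_len=10`, only conversations whose rendered text is at least 10 characters long survive, each cut to 10 ids.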

## Quantization Process

A RunPod instance with two RTX PRO 6000 GPUs, 565 GB of RAM, and 300 GB of disk space was rented for the job.

Quantization took approximately 3.5 hours, for a total of \$14.32 in compute costs.

The shell and Python scripts used to quantize this model:

- [compress.sh](./compress.sh)
- [compress.py](./compress.py)

|

| 54 |

+

|

| 55 |

+

## Acknowledgments

|

| 56 |

+

|

| 57 |

+

- Base Model: [Tarek07/Legion-V2.1-LLaMa-70B](https://huggingface.co/Tarek07/Legion-V2.1-LLaMa-70B)

|

| 58 |

+

- Calibration Dataset: [openerotica/erotiquant3](https://huggingface.co/datasets/openerotica/erotiquant3)

|

| 59 |

+

- LLM Compressor: [llm-compressor](https://github.com/vllm-project/llm-compressor)

|

| 60 |

+

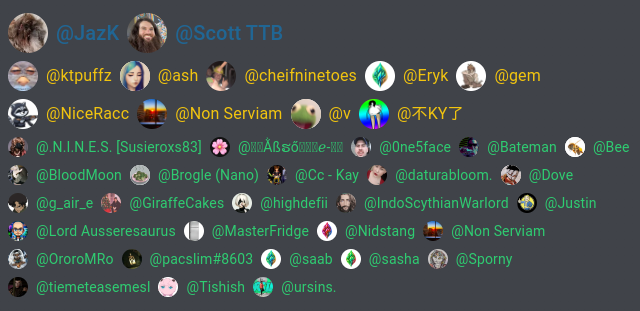

- Everyone subscribed to the [Sentient Simulations Patreon](https://www.patreon.com/SentientSims)

|

| 61 |

+

|

| 62 |

+

|