Upload folder using huggingface_hub (#2)

7d21961d7f6f19ef5e680dfe7ffb787b50a07f8d3a95b6a3941ca100f1888a01 (1dbef9fa1ac6d6c32a4a9bf0eabce5ed7e476565)

- base_results.json +19 -0

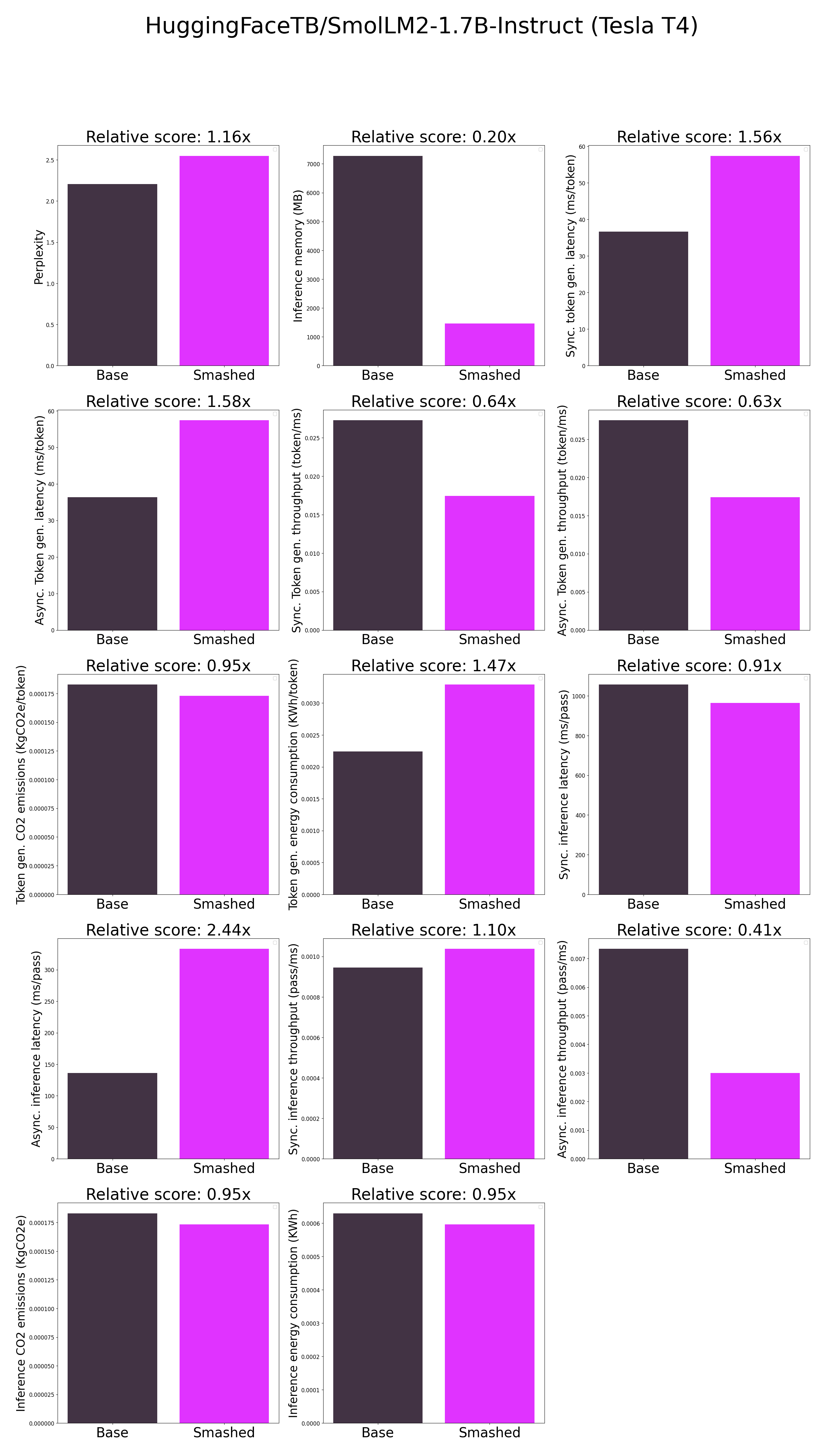

- plots.png +0 -0

- smashed_results.json +19 -0

base_results.json

ADDED

```diff
@@ -0,0 +1,19 @@
+{
+    "current_gpu_type": "Tesla T4",
+    "current_gpu_total_memory": 15095.0625,
+    "perplexity": 2.2062017917633057,
+    "memory_inference_first": 7278.0,
+    "memory_inference": 7278.0,
+    "token_generation_latency_sync": 36.65739364624024,
+    "token_generation_latency_async": 36.3558666780591,
+    "token_generation_throughput_sync": 0.02727962630541697,
+    "token_generation_throughput_async": 0.027505877080452153,
+    "token_generation_CO2_emissions": 0.00018283805669861312,
+    "token_generation_energy_consumption": 0.002242028576060243,
+    "inference_latency_sync": 1057.4123092651366,
+    "inference_latency_async": 136.27676963806152,
+    "inference_throughput_sync": 0.000945704897926679,
+    "inference_throughput_async": 0.007338007810545461,
+    "inference_CO2_emissions": 0.00018305297379900547,
+    "inference_energy_consumption": 0.0006297497502826921
+}
```
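In base_results.json each `*_throughput_*` value appears to be the reciprocal of the matching `*_latency_*` value (for example, 1 / 36.657 ≈ 0.02728). The Python sketch below checks that relationship using values copied verbatim from the file. The helper name `reciprocal_mismatches` and the 1e-6 tolerance are illustrative choices, and the units (latency presumably in milliseconds, so throughput per millisecond) are an assumption, since the file does not state them.

```python
import math

# Latency/throughput pairs copied verbatim from base_results.json.
# Units are NOT stated in the file; milliseconds is an assumption.
base = {
    "token_generation_latency_sync": 36.65739364624024,
    "token_generation_latency_async": 36.3558666780591,
    "token_generation_throughput_sync": 0.02727962630541697,
    "token_generation_throughput_async": 0.027505877080452153,
    "inference_latency_sync": 1057.4123092651366,
    "inference_latency_async": 136.27676963806152,
    "inference_throughput_sync": 0.000945704897926679,
    "inference_throughput_async": 0.007338007810545461,
}


def reciprocal_mismatches(results, rel_tol=1e-6):
    """Hypothetical helper: list (latency_key, throughput_key) pairs
    whose throughput is NOT approximately 1 / latency."""
    mismatches = []
    for key in results:
        if "_latency_" not in key:
            continue
        # Map e.g. "inference_latency_sync" -> "inference_throughput_sync".
        tput_key = key.replace("_latency_", "_throughput_")
        if tput_key in results and not math.isclose(
            results[tput_key], 1.0 / results[key], rel_tol=rel_tol
        ):
            mismatches.append((key, tput_key))
    return mismatches


print(reciprocal_mismatches(base))  # prints []
```

An empty list means every throughput in the file matches 1 / latency to within the tolerance, so the two columns carry the same information.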

plots.png

ADDED (binary file; no text diff)

smashed_results.json

ADDED

```diff
@@ -0,0 +1,19 @@
+{
+    "current_gpu_type": "Tesla T4",
+    "current_gpu_total_memory": 15095.0625,
+    "perplexity": 2.548238754272461,
+    "memory_inference_first": 1458.0,
+    "memory_inference": 1458.0,
+    "token_generation_latency_sync": 57.3639274597168,
+    "token_generation_latency_async": 57.42117892950773,
+    "token_generation_throughput_sync": 0.01743255812988466,
+    "token_generation_throughput_async": 0.017415177093936633,
+    "token_generation_CO2_emissions": 0.00017302643152991706,
+    "token_generation_energy_consumption": 0.003290108461267099,
+    "inference_latency_sync": 964.0653579711914,
+    "inference_latency_async": 333.02977085113525,
+    "inference_throughput_sync": 0.0010372740724803456,
+    "inference_throughput_async": 0.0030027345526625645,
+    "inference_CO2_emissions": 0.0001732807294008593,
+    "inference_energy_consumption": 0.0005966248434902247
+}
```
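base_results.json and smashed_results.json use the same keys and were both measured on a Tesla T4, so the two runs can be compared metric by metric as ratios. The Python sketch below does this for a few metrics, with values copied verbatim from the two files. The helper name `ratios` is hypothetical, and reading memory as MB and latency as ms is an assumption, since the files do not state units.

```python
# Selected metrics copied verbatim from base_results.json and smashed_results.json.
base = {
    "perplexity": 2.2062017917633057,
    "memory_inference": 7278.0,
    "token_generation_latency_sync": 36.65739364624024,
    "inference_latency_sync": 1057.4123092651366,
    "inference_energy_consumption": 0.0006297497502826921,
}
smashed = {
    "perplexity": 2.548238754272461,
    "memory_inference": 1458.0,
    "token_generation_latency_sync": 57.3639274597168,
    "inference_latency_sync": 964.0653579711914,
    "inference_energy_consumption": 0.0005966248434902247,
}


def ratios(new, old):
    """Hypothetical helper: new / old for every metric present in both dicts.
    A ratio above 1 means the value grew; below 1 means it shrank."""
    return {k: new[k] / old[k] for k in new.keys() & old.keys()}


# Print one line per metric, sorted by name for stable output.
for metric, r in sorted(ratios(smashed, base).items()):
    print(f"{metric:32s} x{r:.3f}")
```

On these numbers the smashed run uses about a fifth of the inference memory, while perplexity rises by roughly 15% and synchronous per-token generation latency by roughly 1.56x. Whether that trade-off is acceptable depends on the workload.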