What is the Qwen-Agent framework? Inside the Qwen family

🔳 We discuss the timeline of Qwen models, focusing on their agentic capabilities and how they compete with other models, and explore what the Qwen-Agent framework is and how you can use it

While everyone was talking about DeepSeek-R1’s milestone in model reasoning, Alibaba’s Qwen models stayed in the shadows, even though the team was cooking up something interesting, and open-source at that. From the very beginning, the Qwen team focused on making its best models capable of agentic behavior such as tool use, and even its earlier models leveraged these features. Today we trace the entire journey of Qwen models toward strong reasoning that matches or even beats state-of-the-art models from OpenAI and DeepSeek. But that’s not all. As the AI and machine learning community turns its attention to ecosystems and complex frameworks, we’ll dive into the Qwen-Agent framework: a full‑fledged agentic ecosystem that lets Qwen models autonomously plan, call functions, and execute complex, multi‑step tasks right out of the box. The Qwen family definitely deserves your attention, so let’s go!


In today’s episode, we will cover:

- Introduction

- How it all began: Qwen 1.0 and Qwen 2

- Qwen2.5 and QwQ-32B rivaling DeepSeek-R1

- Qwen-Agent framework

- Examples of Qwen-Agent applications

- Conclusion: What makes Qwen developments stand out?

- Sources and further reading

How it all began: Qwen 1.0 and Qwen 2

But first, a few words about Alibaba, to understand the scale. Alibaba Group, founded in 1999 by Jack Ma in Hangzhou, China, has grown into a global leader in e-commerce and technology. The company reported an 8% revenue increase to 280.2 billion yuan ($38.38 billion) for the quarter ending December 31, 2024, its fastest growth in over a year. Its market capitalization stood at approximately $328.63 billion as of March 2025, placing it among the world's most valuable companies. Its AI strategy is notable for substantial investment and integration across its diverse business operations: the company has committed to investing over RMB 380 billion (approximately $53 billion) in AI and cloud computing infrastructure over the next three years, more than its total spending in these areas over the past decade.

And this is where Qwen models start to play an important role.

From the very beginning of Qwen’s development, the pursuit of strong agentic capabilities, including tool use and deep reasoning, has shaped the strategy and progress of Qwen models. Here is a brief timeline of Alibaba Cloud’s major models, leading up to what Qwen offers today for agent development.

In mid-2023, Alibaba Cloud’s Qwen team first open-sourced its family of LLMs, Qwen 1.0. It included base LLMs with 1.8B, 7B, 14B, and 72B parameters, pretrained on up to 3 trillion tokens of multilingual data with a focus on Chinese and English. Qwen 1.0 models featured context windows of up to 32K tokens, with some early variants limited to 8K.

Alongside the base models, Alibaba released Qwen-Chat variants aligned via supervised fine-tuning and RLHF. Even at this early stage, they demonstrated a broad skill set: they could hold conversations, generate content, translate, write code, solve math problems, and even use tools or act as agents when appropriately prompted. So from its very first models, the Qwen team designed them with agentic behavior and effective tool use in mind.

In February 2024, the Qwen team announced an upgraded version, Qwen-1.5. This time it introduced uniform 32K context-length support across all model sizes and expanded the lineup to include 0.5B, 4B, 32B, and even 110B-parameter models. Its general skills improved, including multilingual understanding, long-context reasoning, and alignment. Its agentic capability also reached GPT-4 level on a tool-use benchmark, selecting and using tools correctly with over 95% accuracy in many cases.

June 2024 brought Qwen 2, which inherited the Transformer-based architecture of the previous models and applied Grouped Query Attention (GQA) to all model sizes (in Qwen-1.5, only some sizes used it) for faster inference and lower memory usage. It became a strong foundation for specialized models: in August 2024, Qwen2-Math, Qwen2-Audio (an audio-and-text model for understanding and summarizing audio inputs), and Qwen2-VL emerged.
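To see why GQA saves memory at inference time, here is a back-of-the-envelope sketch of the key-value (KV) cache size. In standard multi-head attention (MHA), every query head has its own key and value head. In GQA, several query heads share one KV head, so the cache shrinks by the group size. The configuration below (32 layers, 32 query heads, 8 KV heads, head dimension 128, fp16 values, a 32K-token context) is a hypothetical round-number example, not the published config of any Qwen2 model.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    # Factor 2: one key vector and one value vector per KV head, per layer, per token
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value

# Hypothetical config (not an official Qwen2 config); bytes_per_value=2 means fp16
LAYERS, QUERY_HEADS, KV_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 8, 128, 32_768
GIB = 1024 ** 3

mha = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, SEQ_LEN)  # MHA: one KV head per query head
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)     # GQA: each KV head serves 4 query heads

print(f"MHA KV cache: {mha / GIB:.1f} GiB")  # 16.0 GiB
print(f"GQA KV cache: {gqa / GIB:.1f} GiB")  # 4.0 GiB
```

The saving equals the ratio of query heads to KV heads (4x here). The smaller cache also means less data to read from memory for every generated token, which is where the speedup comes from.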

Qwen2-VL was an important milestone. Like DeepSeek, the Qwen team develops its own techniques to improve its models. With Qwen2-VL, Alibaba Cloud introduced innovations such as naive dynamic resolution, which lets the model process images of any resolution by dynamically converting them into a variable number of visual tokens. To better align positional information across modalities (text, images, and video), it uses Multimodal Rotary Position Embedding (MRoPE). Qwen2-VL can understand videos longer than 20 minutes and can be integrated into devices such as phones and robots.
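Here is a minimal sketch of the two ideas above, under stated assumptions. We assume 14-pixel ViT patches, with each 2x2 block of neighboring patches merged into one token (the setup described in the Qwen2-VL report). We also skip the minimum and maximum pixel limits that real preprocessing applies. The second function shows how MRoPE gives each token a (temporal, height, width) position triple. Both function names are hypothetical, and this is not the official implementation.

```python
PATCH = 14  # assumed ViT patch size in pixels
MERGE = 2   # assumed: each 2x2 block of neighboring patches becomes one visual token

def visual_token_grid(height, width):
    """Return (rows, cols) of visual tokens for an image of the given size.

    Sketch only: each side is rounded to the nearest multiple of PATCH * MERGE
    (28 px); real preprocessing also clamps the total pixel count.
    """
    unit = PATCH * MERGE
    rows = max(1, round(height / unit))
    cols = max(1, round(width / unit))
    return rows, cols

def mrope_position_ids(n_text_before, grid, n_text_after):
    """Assign (temporal, height, width) position ids to a text-image-text sequence.

    Text tokens repeat the same id on all three axes, which reduces to ordinary 1D RoPE.
    Image tokens share one temporal id; their height/width ids follow the token grid.
    Text after the image resumes at the largest id used so far plus one.
    """
    ids = [(p, p, p) for p in range(n_text_before)]
    start = n_text_before
    rows, cols = grid
    ids += [(start, start + r, start + c) for r in range(rows) for c in range(cols)]
    nxt = max(max(triple) for triple in ids) + 1
    ids += [(p, p, p) for p in range(nxt, nxt + n_text_after)]
    return ids

rows, cols = visual_token_grid(224, 224)
print(rows * cols)                        # 64
print(visual_token_grid(1080, 1920))      # (39, 69)
print(mrope_position_ids(2, (2, 2), 1))
# [(0, 0, 0), (1, 1, 1), (2, 2, 2), (2, 2, 3), (2, 3, 2), (2, 3, 3), (4, 4, 4)]
```

The token count grows with resolution instead of every image being squashed to a fixed size. Meanwhile, the 2D height and width ids keep the image's spatial layout visible to the language model.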

Qwen2.5 and QwQ-32B rivaling DeepSeek-R1

Then, in September 2024, amid growing competition, especially from new rivals like DeepSeek, Alibaba launched Qwen2.5. This release included a series of models ranging from 0.5 billion to 72 billion parameters, pretrained on a large dataset of up to 18 trillion tokens and covering language, audio, vision, coding, and math. The models support more than 29 languages and input contexts of up to 128K tokens, and can generate outputs of up to 8K tokens. But 128K is not the limit for Qwen2.5: Qwen2.5-1M (launched in January 2025) handles contexts of up to 1 million tokens, with a 3-7x speedup when processing such long inputs.

The most impressive model of the 2.5 generation is Qwen2.5-VL, released in January 2025. It acts as a visual agent in digital environments: it doesn’t just describe images, it can interact with them. Qwen2.5-VL was designed to “reason and dynamically direct tools” based on visual input. It leverages native dynamic resolution (for images), plus dynamic frame rate training and absolute time encoding (for videos), which allow it to process images of varying sizes and videos lasting up to hours. To handle long videos effectively, it improves on Qwen2-VL by aligning the temporal component of MRoPE with absolute time.

Even more fascinating, Qwen2.5-VL can operate devices such as computers and phones, functioning much like OpenAI’s Operator. This lets it book flights, retrieve weather information, edit images, and install software extensions: in short, it does what agentic systems usually do.

The timing of this release (January 26), coinciding with China’s Lunar New Year holiday, was seen as an answer to the rapid rise of DeepSeek-R1, which had shocked the AI community the week before (January 20).

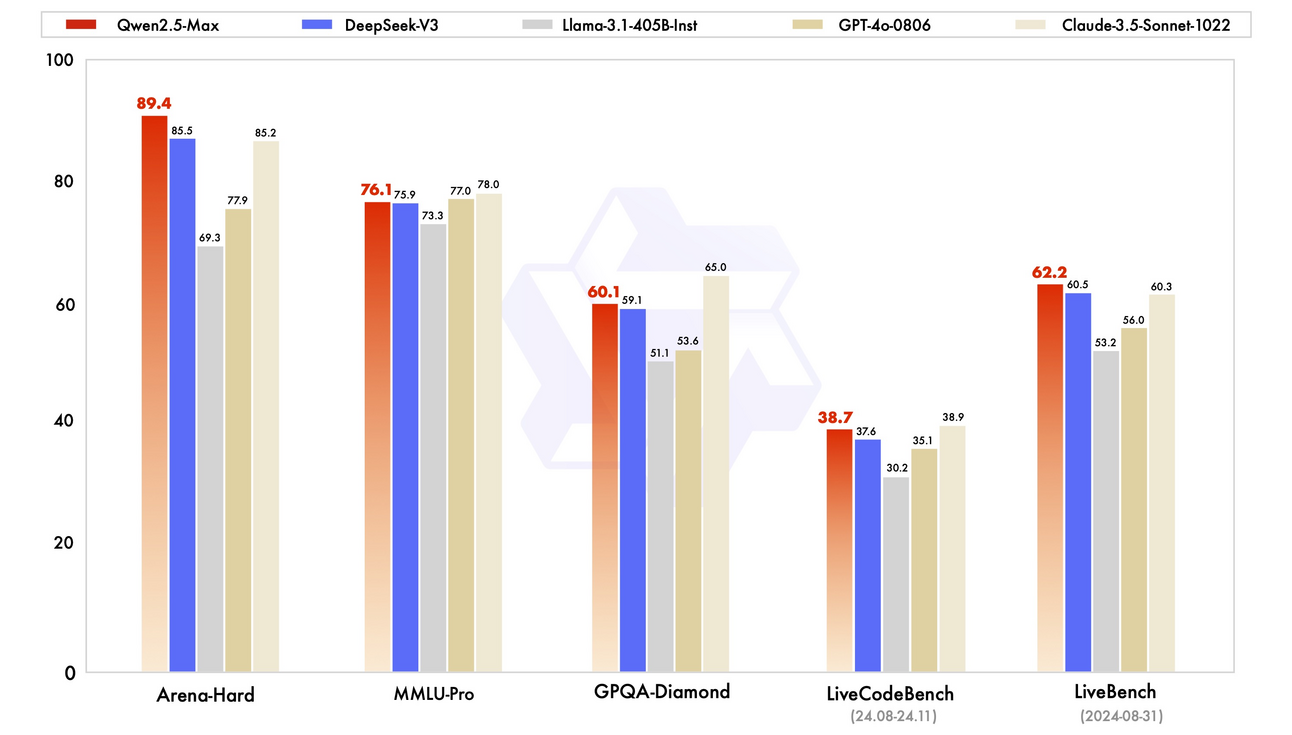

At the same time, the Qwen team also developed a more complex model, Qwen2.5-Max: a large-scale Mixture-of-Experts (MoE) model pretrained on over 20 trillion tokens and further refined with Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF). This release showed that Qwen models can rival, and on several benchmarks surpass, top-tier models such as DeepSeek-V3, Llama-3.1-405B, GPT-4o, and Claude-3.5-Sonnet.

Image Credit: Qwen2.5-Max blog post


As we can see, the Qwen2.5 family, Alibaba’s flagship AI models for 2025, combines deep knowledge, tool-use skills, and multi-domain expertise with extended context length. With their focus on agentic features, these models set the stage for more autonomous AI agent systems built on a Qwen backbone.

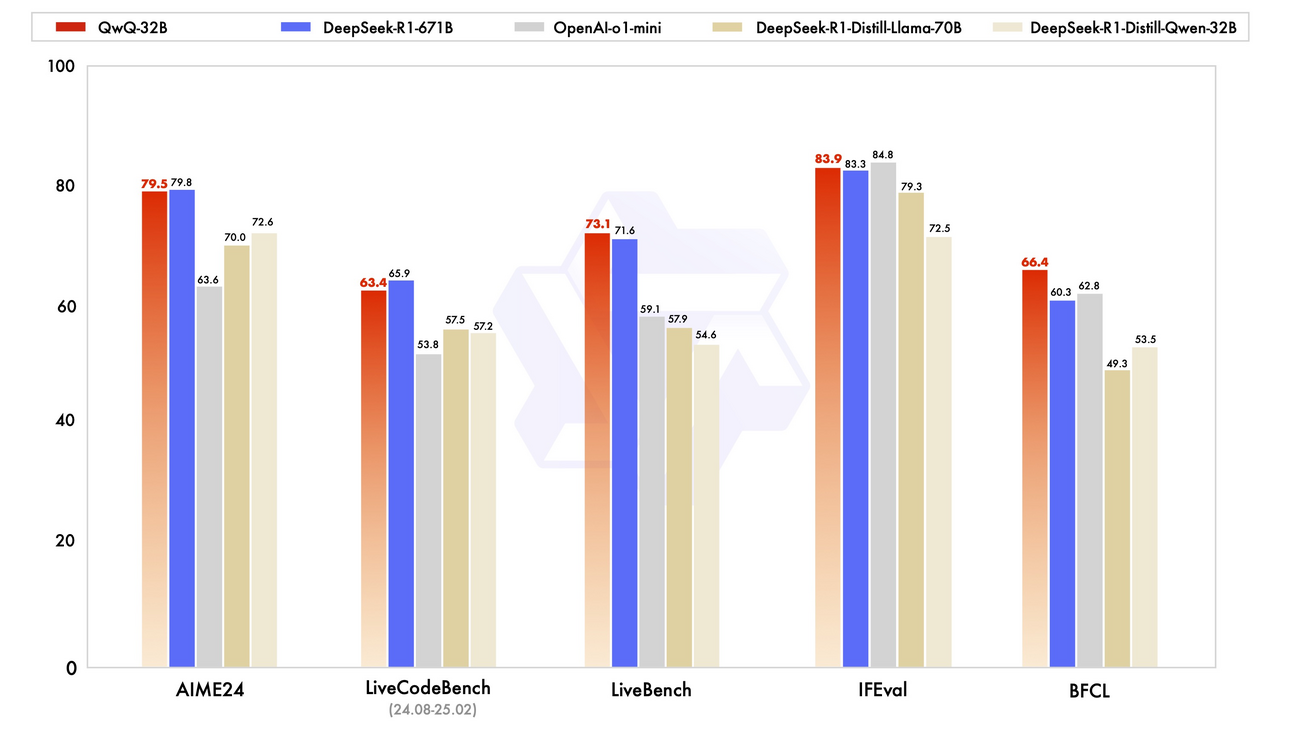

Among the Qwen team’s 2025 models there is one more notable player: the QwQ-32B reasoning model. It was first unveiled in November 2024 as an experimental preview focused on enhanced logical reasoning, but it came into its own only recently, in early March 2025. QwQ-32B is also a product of the team’s “obsession” with RL. Here, effectively scaling RL made a smaller model as capable as much larger ones. With only 32 billion parameters, QwQ-32B delivers performance comparable to DeepSeek-R1, which is far larger (671B parameters, 37B active). It also outperforms OpenAI’s o1-mini. These impressive reasoning capabilities open new possibilities for AI agents that can use tools and adapt to tasks dynamically.

Image Credit: QwQ-32B blog post


That was a brief summary of what the Qwen team has been working on since 2023. Now let’s explore what makes Qwen models practical for building AI agents and apps: the Qwen-Agent framework. With the community increasingly interested in full ecosystems, it’s the perfect time to pay attention to it.

What is the Qwen-Agent framework?

The Qwen-Agent framework was created to support application development with Qwen models, allowing the models to act as agents in practical settings. It builds on Qwen’s strengths in instruction following, tool integration, multi-step planning, and long-term memory handling. Qwen-Agent has a modular design in which LLMs with built-in function-calling support and external tools can be combined into higher-level agents.

Key aspects of the Qwen-Agent framework include:

Tool integration and function calling

The framework makes it straightforward to define tools (functions, APIs) that the Qwen model can call. It handles function-calling syntax in a JSON format similar to OpenAI’s function-calling spec, so the model can output a call and receive the tool’s result. Qwen-Agent comes with ready-made tool plugins for web browsing, code execution, database queries, and more. This lets a Qwen model use tools such as a calculator, or fetch web content, when needed.
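To make the function-calling flow concrete, here is a standalone sketch in plain Python. It is not Qwen-Agent’s own API; the framework has its own tool classes and registration mechanism. A tool is described to the model with an OpenAI-style JSON schema. The model replies with a `function_call` whose `arguments` field is a JSON string. The host then runs the matching function and returns the result as a `function` message. The `calculator` tool and the hard-coded model reply are hypothetical.

```python
import ast
import json
import operator

# Hypothetical tool: a tiny calculator that only allows + - * / and unary minus
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
        ast.Div: operator.truediv, ast.USub: operator.neg}

def _eval(node):
    if isinstance(node, ast.Expression):
        return _eval(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError("unsupported expression")  # rejects names, calls, attributes, etc.

def calculator(expression: str) -> str:
    return str(_eval(ast.parse(expression, mode="eval")))

# Registry: tool name -> (schema shown to the model, Python callable that runs it)
TOOLS = {
    "calculator": (
        {"name": "calculator",
         "description": "Evaluate an arithmetic expression.",
         "parameters": {"type": "object",
                        "properties": {"expression": {"type": "string"}},
                        "required": ["expression"]}},
        calculator,
    ),
}

def dispatch(function_call: dict) -> dict:
    """Run the requested tool and wrap its output as a 'function' message for the model."""
    name = function_call["name"]
    args = json.loads(function_call["arguments"])  # arguments arrive as a JSON string
    _, fn = TOOLS[name]
    return {"role": "function", "name": name, "content": fn(**args)}

# A model reply requesting a tool call (hard-coded here instead of a real LLM)
assistant_msg = {"role": "assistant", "content": "",
                 "function_call": {"name": "calculator",
                                   "arguments": json.dumps({"expression": "2 + 3 * 4"})}}
tool_msg = dispatch(assistant_msg["function_call"])
print(tool_msg)  # {'role': 'function', 'name': 'calculator', 'content': '14'}
```

Evaluating through a whitelist of AST nodes, rather than `eval`, keeps the toy calculator from running arbitrary code that a model might emit.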

Planning and memory

The agent framework equips the model with a working memory and a planner for multi-step tasks. Instead of the user prompting each step, Qwen-Agent lets the model plan a sequence of actions internally. For instance, for a complex query, the model might plan to search the web, then summarize the results, then draft an answer. Qwen-Agent can also keep a memory of past steps, recording what the tools returned, and feed it back into the prompt for the next step.
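The plan-act-observe loop can be sketched in a few lines. Here the “working memory” is simply the growing message list, and each tool result is appended so the model sees it on the next step. Assumptions: `scripted_model` is a hypothetical stand-in that replays a fixed search, summarize, answer plan instead of calling a real Qwen model, and both tools are toys. In Qwen-Agent itself, the framework’s agent classes are meant to run this kind of loop for you.

```python
import json

# Hypothetical toy tools; a real agent would call a search API and the LLM itself
def web_search(query: str) -> str:
    fake_index = {"qwq-32b": "QwQ-32B is a 32B-parameter reasoning model from the Qwen team. "
                             "Its final version was released in March 2025."}
    return fake_index.get(query.lower(), "no results")

def summarize(text: str) -> str:
    return text.split(". ")[0].rstrip(".") + "."  # naive: keep only the first sentence

TOOLS = {"web_search": web_search, "summarize": summarize}

def tool_request(name, **args):
    """Build an assistant message that asks for a tool call (OpenAI-style format)."""
    return {"role": "assistant", "content": "",
            "function_call": {"name": name, "arguments": json.dumps(args)}}

def scripted_model(messages):
    """Hypothetical stand-in for a Qwen model: picks the next step from what is in memory."""
    results = [m["content"] for m in messages if m["role"] == "function"]
    if not results:
        return tool_request("web_search", query="QwQ-32B")  # plan step 1: search
    if len(results) == 1:
        return tool_request("summarize", text=results[-1])  # plan step 2: summarize
    return {"role": "assistant", "content": f"Answer: {results[-1]}"}  # step 3: answer

def run_agent(user_query, model=scripted_model, max_steps=5):
    memory = [{"role": "user", "content": user_query}]  # working memory: the message history
    for _ in range(max_steps):
        reply = model(memory)
        memory.append(reply)
        fc = reply.get("function_call")
        if fc is None:  # no tool requested, so this is the final answer
            return reply["content"], memory
        result = TOOLS[fc["name"]](**json.loads(fc["arguments"]))
        # Feed the tool output back so the next model call can see it
        memory.append({"role": "function", "name": fc["name"], "content": result})
    raise RuntimeError("step budget exhausted")

answer, trace = run_agent("What is QwQ-32B?")
print(answer)  # Answer: QwQ-32B is a 32B-parameter reasoning model from the Qwen team.
```

The `max_steps` budget is a simple guard against a model that keeps requesting tools without ever answering.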

But the main question is: what can you build with Qwen-Agent?

Examples of Qwen-Agent applications

Here are several applications built with Qwen-Agent, which show how it uses tools and performs planning.

Code Interpreter integration

Qwen-Agent features a built-in Code Interpreter that lets the model execute Python code for data analysis, calculation, and visualization tasks. In practice, this gives Qwen an integrated way to run code, similar to OpenAI’s Code Interpreter. Users can upload files or provide data, and Qwen will write and run Python code to analyze it or generate a chart. This feature is powerful but not sandboxed: the code runs directly in the host environment.
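The core of such an interpreter can be sketched with the standard library. Write the model’s code to a temporary file and run it in a separate Python process with a timeout. Then return stdout, or the traceback so the model can fix its own bug. `run_python` is a hypothetical helper, not Qwen-Agent’s implementation. Like the feature described above, it is not a sandbox: the child process has the same permissions as the user running it.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

def run_python(code: str, timeout: float = 10.0) -> str:
    """Run model-written code in a fresh Python process and return its output.

    NOT a sandbox: the child process can read files and use the network
    exactly like the host user, the same caveat as an unsandboxed interpreter.
    """
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "snippet.py"
        script.write_text(code)
        try:
            proc = subprocess.run([sys.executable, str(script)], cwd=workdir,
                                  capture_output=True, text=True, timeout=timeout)
        except subprocess.TimeoutExpired:
            return f"Error: execution exceeded {timeout} s"
    # stdout on success; stderr (the traceback) otherwise, so the model can react to it
    return proc.stdout if proc.returncode == 0 else proc.stderr

model_code = "data = [3, 1, 4, 1, 5]\nprint(sum(data) / len(data))"
print(run_python(model_code))  # 2.8
```

Running each snippet in its own process and temporary directory keeps crashes and stray files away from the agent itself. Real isolation would still need a container or VM.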

Browser Assistant (BrowserQwen Chrome extension)

The Browser Assistant uses a Qwen model to work with web pages and documents in the user’s browser and to answer queries with live information. It is provided as a Chrome extension called BrowserQwen (available on GitHub). It can discuss or answer questions about the current web page or PDF, and it keeps a history of the pages you’ve visited. This lets Qwen summarize content across multiple pages and help with writing tasks using the collected information.

BrowserQwen also supports plugins. For instance, it uses the Code Interpreter tool described above to solve math problems and create data visualizations right from the browser.

Image Credit: Qwen-Agent GitHub documentation


But what if you need to answer questions about extremely long documents of 1M tokens? Qwen-Agent can help here too, with a smart retrieval approach.

1 Million token context via retrieval

An innovative use of Qwen-Agent is extending context length through retrieval. The researchers started with a standard chat model with an 8K context and, in three steps, made it capable of handling 1M-token documents for tasks like reading an entire book:

- Building a strong agent that can process long contexts.

- Generating high-quality training data using this agent.

- Fine-tuning a model with the synthetic data to create a powerful long-context AI.

By combining the following three levels, the system finds and processes the most relevant information in massive texts:

Image Credit: “Generalizing an LLM from 8k to 1M Context using Qwen-Agent” blog

- Level 1: Retrieval-Augmented Generation (RAG)

  - Breaks long documents into smaller chunks (for example, 512 tokens).

  - Uses keyword-based search to find the most relevant parts.

  - Uses traditional BM25 retrieval instead of complex embedding models for efficiency.

- Level 2: Chunk-by-chunk reading

  - Instead of relying only on keyword overlap, it scans each chunk separately.

  - If a chunk is relevant, it extracts key sentences and uses them to refine the search. This prevents important details from being overlooked.

- Level 3: Step-by-step reasoning

  - The system uses a multi-step process to answer complex questions.

  - It breaks a query down into smaller sub-questions and answers them step by step. For example, to answer "What vehicle was invented in the same century as Beethoven’s Fifth Symphony?", the system first finds that the symphony was composed in the 19th century, then searches for vehicles invented in that era.
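Levels 1 and 2 can be sketched with the standard library alone. The chunking, a from-scratch Okapi BM25 ranker, and the refine-with-extracted-sentences step follow the description above; everything else is an assumption. In particular, `keyword_judge` is a hypothetical stand-in for the LLM call that, in the real system, decides whether a chunk is relevant and which sentences to keep. The tiny chunk size exists only so the toy document splits into several chunks. Level 3 (decomposing the question into sub-questions) is left out.

```python
import math
import re
from collections import Counter

# Small stop-word list so words like "the" or "was" do not count as matches
STOP = {"a", "an", "and", "the", "of", "in", "is", "was", "what", "which", "to"}
SENT_SPLIT = re.compile(r"(?<=[.!?])\s+")

def tokenize(text):
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOP]

def make_chunks(text, max_tokens=512):
    """Level 1: pack whole sentences into chunks of at most about max_tokens tokens."""
    chunks, current, count = [], [], 0
    for sent in SENT_SPLIT.split(text.strip()):
        n = len(tokenize(sent))
        if current and count + n > max_tokens:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sent)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks

class BM25:
    """Okapi BM25 over tokenized chunks; k1=1.5 and b=0.75 are common defaults."""

    def __init__(self, docs, k1=1.5, b=0.75):
        self.docs, self.k1, self.b = docs, k1, b
        self.tf = [Counter(d) for d in docs]
        self.avgdl = sum(len(d) for d in docs) / len(docs)
        df = Counter(t for d in docs for t in set(d))
        n = len(docs)
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query, i):
        tf, dl = self.tf[i], len(self.docs[i])
        norm = self.k1 * (1 - self.b + self.b * dl / self.avgdl)
        return sum(self.idf[t] * tf[t] * (self.k1 + 1) / (tf[t] + norm)
                   for t in query if t in tf)

    def top_k(self, query, k=3):
        scores = [self.score(query, i) for i in range(len(self.docs))]
        ranked = sorted(range(len(self.docs)), key=lambda i: scores[i], reverse=True)
        return [i for i in ranked[:k] if scores[i] > 0]

def keyword_judge(chunk_text, question):
    """Hypothetical stand-in for the LLM: keep sentences sharing a term with the question."""
    q = set(tokenize(question))
    return [s for s in SENT_SPLIT.split(chunk_text) if q & set(tokenize(s))]

def retrieve(document, question, judge=keyword_judge, max_tokens=512, k=2):
    chunks = make_chunks(document, max_tokens)
    index = BM25([tokenize(c) for c in chunks])
    # Level 2: read every chunk on its own and collect the sentences judged relevant
    evidence = [s for c in chunks for s in judge(c, question)]
    # Refine the search: query BM25 with the question plus the extracted sentences
    refined = tokenize(question + " " + " ".join(evidence))
    return [chunks[i] for i in index.top_k(refined, k)]

doc = ("Beethoven composed his Fifth Symphony between 1804 and 1808. "
       "The symphony premiered in Vienna. "
       "The bicycle was invented in the 19th century. "
       "Karl Benz built the first practical automobile in 1885. "
       "Tea is a popular drink.")
print(retrieve(doc, "When was the Fifth Symphony composed?", max_tokens=12))
# ['Beethoven composed his Fifth Symphony between 1804 and 1808. The symphony premiered in Vienna.']
```

With a real model, you would pass an LLM-backed function as `judge`. The keyword version only keeps the example runnable. BM25 needs no embedding model or GPU, which matches the efficiency argument given for Level 1.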

This retrieval-assisted Qwen-Agent method, followed by fine-tuning, enabled an LLM to scale effectively from an 8K context to 1M tokens. Overall, the process shows how combining an LLM with an agent orchestrator can overcome the base model’s limits through tool-assisted strategies.

Image Credit: “Generalizing an LLM from 8k to 1M Context using Qwen-Agent” blog


Notably, Qwen-Agent also powers the backend of the official Qwen Chat web app. When users chat with Qwen online, the agent framework manages the conversation, enabling features such as tool use within the chat.

Conclusion: What makes Qwen developments stand out?

As we can see, the Qwen team offers the community many distinctive open features. Compared with DeepSeek-R1 and OpenAI's models, Qwen models stand out for their strong multilingual performance, open-source availability, enterprise adaptability, and a holistic approach that emphasizes agentic capabilities such as tool use, planning, and function calling. The wide range of open-source models that can plug into agentic frameworks such as Qwen-Agent makes the Qwen lineup resemble a comprehensive agentic ecosystem. While we are still far from truly autonomous AI agents, the Qwen team’s advancements are a step forward. Even now, with the open Qwen-Agent framework, developers can create agents capable of complex tasks, such as reading PDFs, interacting with tools, and performing customized functions.

Notably, many researchers rely on Qwen models for testing due to their balance of accessibility and high performance, making them a preferred choice for advancing AI research.

What’s next for Qwen? Qwen3, or new versions of the QwQ model? Will the team focus more on slow, step-by-step reasoning because it is the trend, or will it find its own path to AGI? We’ll keep an eye on it.

Author: Alyona Vert Editor: Ksenia Se

Sources and further reading

Qwen models:

- Qwen 1.0 - GitHub

- Qwen 1.5 - Blog post

- Qwen 2 - Blog post

- Qwen2-VL - Blog post

- Qwen2-Math - Blog post

- Qwen2-Audio - GitHub

- Qwen2.5 - GitHub

- Qwen2.5-VL - GitHub

- Qwen2.5-Math - GitHub

- Qwen2.5-1M - Blog post

- Qwen2.5-Max - Blog post

- QwQ-32B - Blog post

- Other Qwen models’ blogs

- Family of Qwen on Hugging Face

Resources for further reading:

- Qwen Technical Report by Jinze Bai et al.

- Qwen2 Technical Report by An Yang et al.

- Qwen2-VL: Enhancing Vision-Language Model’s Perception of the World at Any Resolution by Shuai Bai et al.

- Qwen2-Audio Technical Report by Junyang Lin, Yunfei Chu et al.

- Qwen2.5 Technical Report by Binyuan Hui et al.

- Qwen2.5-VL Technical Report by Keqin Chen et al.

- Qwen2.5-1M Technical Report by Dayiheng Liu et al.

- Qwen-Agent framework

- Generalizing an LLM from 8k to 1M Context using Qwen-Agent

- BrowserQwen on GitHub

- Alibaba Stock Analysis

- APNews about Alibaba


That’s all for today. Thank you for reading!

Please share this article with your colleagues if it can help them enhance their understanding of AI and stay ahead of the curve.