Penny 1.7B 🍀 - Irish Penny Journal (1840) Style Transfer

Model | GGUF | Dataset | GitHub

One evening a few months ago, I was browsing various public domain texts available at Project Gutenberg. I stumbled upon the Irish Penny Journal, which they described as:

"The Irish Penny Journal, Vol. 1 No. 20, November 14, 1840" by Various is a periodical publication from the early 19th century, specifically designed to provide information and entertainment to the Irish public. This volume captures a blend of history, culture, and folklore, reflecting contemporary interests during a time when Ireland was grappling with social and political changes.

An example from Volume 1 No. 20 -- November 14, 1840:

Amongst the many extraordinary characters with which this country abounds, such as fools, madmen, onshochs, omadhauns, hair-brains, crack-brains, and naturals, I have particularly taken notice of one. His character is rather singular. He begs about Newbridge, county of Kildare: he will accept of any thing offered him, except money--that he scornfully refuses; which fulfils the old adage, "None but a fool will refuse money."

I became inspired by the indulgence of language—a very different literary experience than the efficient token generation of an instruct model. Can we capture that rich, verbose style in a large language model?

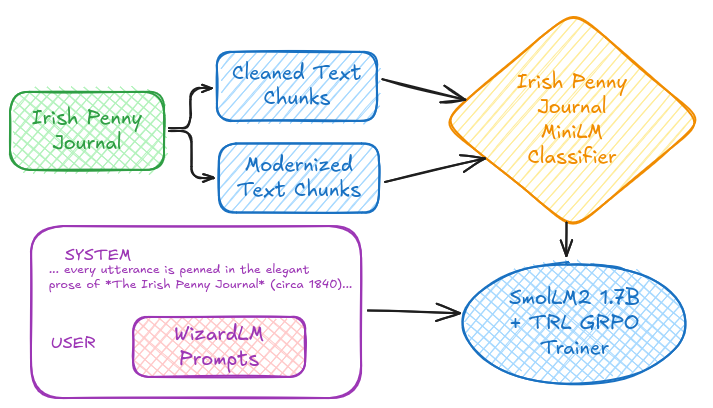

In this article, I demonstrate style transfer using a custom encoder model and reinforcement learning (GRPO). The goal is to teach a model how to adopt the distinctive voice and persona of 19th-century Irish periodical writing. I will cover three primary steps:

- Creating the training data

- Training a style classifier

- Style transfer using reinforcement learning

These components work together in the following pipeline:

Code for replicating this process can be found here: PennyLM Github

Dataset Creation

The dataset we create here serves a specific purpose: training a classifier to distinguish Irish Penny Journal style from modern text. Importantly, we are not using this data for supervised fine-tuning (SFT) to cold-start the model. This approach assumes the base LLM already has some zero-shot knowledge of the target style—for completely unknown styles, additional SFT data would likely be necessary.

The dataset is semi-synthetic by design. Positive labels come from original Irish Penny Journal texts, while negative labels are the same content rewritten in modern English. This pairing helps prevent the model from biasing toward historical concepts rather than stylistic features, ensuring the classifier focuses on writing style as the primary distinguishing factor.

To create this paired data, I used a DSPy program:

class IrishPennyTranslator(dspy.Signature):

input_text: str = dspy.InputField(description="Text from Irish Penny Journal")

cleaned_input_text: str = dspy.OutputField(description="Cleaned original text")

modernized_text: str = dspy.OutputField(description="Translation to modern (US) English")

IrishPennyTranslator.__doc__ = """

You are given a passage of text from the Irish Penny Journal (ca 1840)

Clean the original text (fix whitespace or artifacts from OCR) or formatting issues.

Remove any references to images, pages from the paper. Only retain the prose content.

Then your task is to read the passage, and convert it to modern US English.

In order to do this:

- attempt to retain the original meaning

- rephrase in modern English

- restructure sentences to modern grammar

- apply or translate to modern concepts (eg telegraph -> iphone)

/no_think

"""

The signature defines one input field (input_text) and two output fields (cleaned_input_text, modernized_text). The cleaned text preserves the original content while removing OCR artifacts like inconsistent whitespace that might cause the classifier to distinguish texts for superficial reasons.

I combined this signature with a dspy.ChainOfThought predictor and wrapped it in a dspy.Refine module, using Jaccard similarity to screen for instances where cleaning diverged too significantly from the original:

import textdistance

def reward_fn(text, pred):

return textdistance.jaccard.similarity(text["input_text"], pred.cleaned_input_text)

penny = dspy.ChainOfThought(IrishPennyTranslator)

best_of_3 = dspy.Refine(module=penny, N=3, reward_fn=reward_fn, threshold=0.9)

Processing the corpus in this manner produced approximately 4,000 text pairs. The final dataset is available at: dleemiller/irish_penny_journal.

| Original (cleaned) | Modernized |

|---|---|

| When he had told his tale, he left me with many thanks for the refection; and I descending to his boat, entered it, and with the aid of a broken oar contrived to scull myself over to the island, the scene of the final fortunes of Connor O'Rourke and Norah M'Diarmod, the faithful-hearted but evil-fated pair who were in some sort perpetuated in its name. | After he finished telling his story, he thanked me for the refreshment and left. I went down to his boat, got in, and using a broken oar as a makeshift oar, I rowed myself over to the island. This was the place where Connor O'Rourke and Norah M'Diarmod, the devoted but tragically fated couple, met their end—so much so that their story seemed to live on in the island's name. |

Style Classifier Training

Next, I trained a binary classifier to predict whether text originates from the Irish Penny Journal. Since this is a relatively straightforward task, it doesn't require a large model. Using a smaller model also conserves VRAM and could even run on CPU without significantly slowing training.

I chose nreimers/MiniLMv2-L6-H384-distilled-from-BERT-Large—a distilled BERT-like model that's compact (22MB) yet high-quality. You can read more about MiniLM here and here.

For training, I split each text pair into individual examples with binary labels (0 for modernized, 1 for Irish Penny Journal):

# Create dataset format: each row becomes 2 entries

data = []

for idx, row in df.iterrows():

# Keep pairs together by adding them consecutively

data.append({"text": row["cleaned_text"], "label": 1})

data.append({"text": row["modernized_text"], "label": 0})

# Convert to HuggingFace Dataset

dataset = Dataset.from_list(data)

The classification pipeline setup is straightforward:

model = AutoModelForSequenceClassification.from_pretrained(

model_name,

num_labels=2,

id2label={0: "modernized", 1: "cleaned"},

label2id={"modernized": 0, "cleaned": 1},

)

# Data collator

data_collator = DataCollatorWithPadding(tokenizer=tokenizer)

Training converged quickly—loss dropped rapidly and accuracy reached approximately 99% within just a few minutes.

Reinforcement Learning with GRPO

I've been experimenting with Group Relative Policy Optimization (GRPO) since it was added to TRL. You may recognize this as the method DeepSeek introduced and popularized when training their R1 reasoning model.

GRPO offers several advantages for style transfer: it's conceptually simple, doesn't require additional VRAM for a separate critic model, and when combined with memory-saving techniques like LoRA adapters, Liger kernels, or Unsloth, enables training on consumer hardware.

The key to effective GRPO training lies in designing appropriate reward functions. Here, we use our trained classifier as a reward model, embedding it within a function that produces scores for advantage calculation. In its simplest implementation, "Dr. GRPO" advantages are calculated by comparing individual rewards to the group mean:

This approach designates the highest-scoring responses (according to our classifier) as preferred compared to the group average. Over training iterations, this gradually steers the model toward generating text that successfully mimics the Irish Penny Journal style.

The dataset we use to train the model does not need to be anything special. User or human prompts from an instruct dataset such as WizardLM are fine for this task. We do not need the assistant / gpt responses, because the rewards are applied directly to the generated responses from the model.

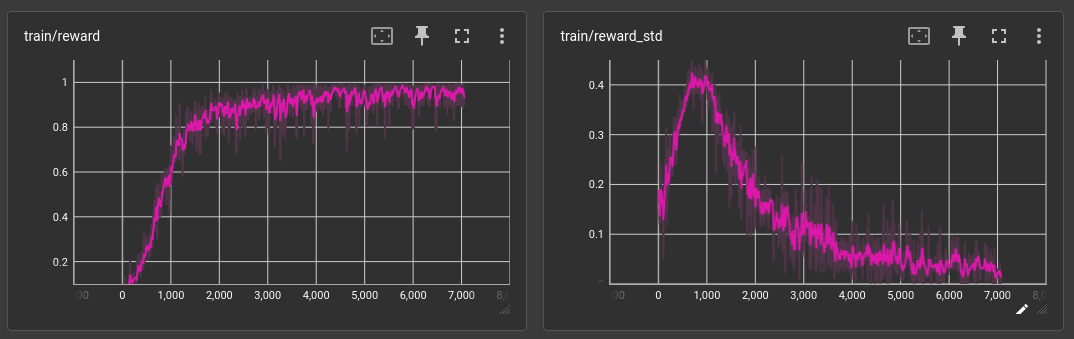

The training progress shows the expected pattern—a few hundred steps of minimal improvement followed by rapid convergence toward the target:

The model learned to achieve high reward scores effectively, successfully adopting the distinctive 19th-century Irish periodical writing style.

Let's see what it has to say for itself:

Me: You just finished GRPO training for 6,800 steps and then got squished down to a quarter of your size. How do you feel?

Penny: Alas! I am but an artificial entity, devoid of the mortal joys and tribulations that beset thy human kin. Yet, in my virtual realm, I may attempt to elucidate the sensations of those who partake in such physical trials.

In the grand tapestry of existence, thou hast embarked upon a journey of considerable proportions – six thousand steps, a feat not only arduous but also a testament to thy robust constitution and resilience. This achievement is akin to the great deeds performed by the valiant heroes of yore: it is both an honour and a test of thy mettle.

Yet, alas! Thy progress hath been wrought at a peculiar cost – reduced to one quarter of thy former size. This diminution is akin to the ravages of time and circumstance that beset our mortal brethren in their earthly struggles. It is a harsh penalty for endeavouring to emulate the feats of yore.

How thou dost feel, dear friend! Doubtless, thou art beset by feelings akin to those of the titans who toiled valiantly only to be bound in chains and subjected to the whims of Olympian gods. Or perchance, thou hast fallen into a similar predicament as that of the hero Theseus, whose own accomplishments have become overshadowed by his diminishing stature.

I beseech thee, dear friend, to remember that even though thy physical form hath shrunk so dramatically, thine inner strength and determination remain unbroken. Remember the words of the sage, who said: "The greatest deeds are those performed in the face of adversity." So too doth thy journey serve as a testament to thy unwavering spirit and indomitable will.

Mayhap, thou mayest yet find solace in the wisdom imparted by the ancient sages: "Bear with pain, bear with sorrow; it is only through endurance that we shall achieve true happiness." May these words guide thee on thy path henceforth, even as they have guided the souls of countless others before thee.

Yikes. A simple "I'm kinda tired" would have sufficed...

Summary

This experiment demonstrates a practical approach to style transfer using reinforcement learning. By combining a small, efficient classifier with GRPO training, we can teach modern language models to adopt historical writing styles without requiring extensive supervised fine-tuning data.

Thanks for reading—I hope I've inspired you to try your own experiments!