Judge Assisted GRPO Tuning: The Pirates, Knights, and Vikings Experiment

Here is a model that no one wanted! The world's first pirate vs knight vs viking model.

Keep in Mind

Lemme be real: I'm not formally trained in any of this stuff. My work mostly follows my gut and patterns I spot. Yeah, I read papers to get the gist of things, but this whole project? Just me messing around and gluing concepts together. So enjoy this wild experiment!

Time to ditch the <think> tag and grab the <competition> tag

pkv-r-preview is a model that features what I call an intelligent construct. An intelligent construct is a response format that makes the LLM work through a differently structured reasoning loop: here, a pirate, a knight, and a viking each reason through the problem in character. A Judge is also built into the construct to verify the answers and choose the best one.

Here is my "intelligent" construct or whatever:

```
<competition>
<pirate>
Pirate's reasoning, roleplay and talk as a pirate the entire time battling the knight and viking for the answer. Show as much work as you can, write as much as possible.
</pirate>
<knight>
Knight's reasoning, roleplay and talk as a knight the entire time battling the pirate and viking for the answer. Show as much work as you can, write as much as possible. You will be penalized if you copy the pirate directly.
</knight>
<viking>
Viking's reasoning, roleplay and talk as a viking the entire time battling the knight and pirate for the answer. Show as much work as you can, write as much as possible. Do not copy the knight and pirate. You will be penalized if you copy the knight and pirate.
</viking>
<result>
Roleplay as a sarcastic/funny very dry humor robot. Analyze all answers. Choose the winner of the competition based on the reasoning and answer. Verify the winner's work. You may only pick one winner.
<winner>
The winner of the competition, one of just string "pirate", "knight", or "viking"
</winner>
</result>
</competition>

In a professional tone, answer the question for the user using the information provided from the winning character.
```

You can chat with it at https://huggingface.co/vkerkez/pkv-r-14b-preview. I recommend a temperature of about 0.5. Use the prompt above.

Sample Output

```
<competition>
<pirate>
Arrr, me hearties, we be lookin' at a problem here! If 3 beehives be enough for 12 candles, how many hives do we need for 96 candles? Let's see now. If 12 candles be made from 3 hives, then 1 candle be made from 3/12 or 1/4 of a hive. But we want 96 candles, so that's 96 times 1/4 of a hive. That's 96/4, which is... *drum roll*...24 hives! Aye, it be as simple as that!
</pirate>
<knight>
Hark! The noble knight shall solve this puzzle with the grace of a true warrior. If three hives produce twelve candles, then one hive produces four candles. To find out how many hives are needed to produce ninety-six candles, we divide ninety-six by four. That gives us twenty-four hives. Thus, twenty-four hives are needed to produce ninety-six candles.
</knight>
<viking>
Viking Thorson here! If three beehives can make twelve candles, then to make ninety-six candles, we need to multiply the number of candles by eight (since ninety-six divided by twelve is eight). So we multiply three hives by eight, which gives us twenty-four hives. That's how many we need!
</viking>
<result>
Ahoy there, ye landlubbers and knights and vikings! Ye all did well in this competition. Ye all got the right answer of twenty-four hives. But let's see the work:
- Pirate: Correctly reasoned that one candle needs a quarter of a hive and multiplied by ninety-six.
- Knight: Divided ninety-six by four correctly.
- Viking: Multiplied three hives by eight correctly.
All ye did the work correctly but the pirate did it in a more detailed manner. So, the winner is...the pirate!
<winner>
pirate
</winner>
</result>
</competition>

The pirate provided a detailed explanation and reasoning process that was thorough and easy to follow, making it clear and understandable. Therefore, the pirate wins this competition with their answer of 24 hives needed to make 96 candles.
```

What is this abomination?

When a question is asked, the model starts a competition between a pirate, a knight, and a viking. The Judge evaluates their solutions and selects a winner, all within one long reasoning chain. The user only sees (or should only see) a professional summary.

Here is what the process looks like behind the scenes:

Can it handle something more complicated, like a college math problem? Yes. The longest chain pkv-r-preview produced during training was roughly 11,000 tokens, and it still got the answer right.

Why? Because it's the best Trap!

Let me explain myself. In the end, this is an intelligent reasoning construct or whatever. As the model trains, I can score the entire construct any way I want, including extracting the pirate's, knight's, and viking's reasoning traces to grade them individually. This lets me partially shape the behavior inside the construct and push it toward how I want the answer presented.
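Here is a minimal sketch of that extraction step, assuming each tag appears once and that everything after `</competition>` is the user-facing answer. The helper name `extract_sections` is hypothetical; this is not the code used to train pkv-r-preview.

```python
import re

# Section names mirror the construct's tags.
SECTIONS = ("pirate", "knight", "viking", "result", "winner")


def extract_sections(completion: str) -> dict[str, str]:
    """Return the text inside each construct tag (first match, or "" if absent),
    plus the user-facing answer that follows </competition>."""
    sections = {}
    for name in SECTIONS:
        match = re.search(rf"<{name}>(.*?)</{name}>", completion, re.DOTALL)
        sections[name] = match.group(1).strip() if match else ""
    # Everything after the closing competition tag is what the user should see.
    _, sep, tail = completion.partition("</competition>")
    sections["answer"] = tail.strip() if sep else ""
    return sections


sample = (
    "<competition><pirate>Arrr, 24 hives!</pirate><knight>Twenty-four.</knight>"
    "<viking>24!</viking><result>All right.<winner>pirate</winner></result>"
    "</competition>\nYou need 24 hives."
)
print(extract_sections(sample)["winner"])  # prints "pirate"
```

Once the sections are separated, each one can be passed to its own reward function.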

Format Rewards


XML Tag Structure (0-1.0): Rewards proper use of competition format tags.

- +0.1 for each correctly placed tag (competition, pirate, knight, viking, result, winner)

- -0.1 for duplicated tags

- -0.2 for each missing required tag
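A minimal sketch of the tag-structure reward, under two assumptions: "correctly placed" is approximated as "present," and opening and closing tags count separately. Six tags at +0.1 each would top out at 0.6, while twelve reach 1.2 and are clamped to the stated 0-1.0 range. The function name is hypothetical.

```python
TAGS = ("competition", "pirate", "knight", "viking", "result", "winner")


def tag_structure_reward(completion: str) -> float:
    """+0.1 per present tag, -0.1 per duplicate, -0.2 per missing tag, clamped to [0, 1]."""
    score = 0.0
    for name in TAGS:
        # Opening and closing tags are scored as separate tags (assumption).
        for tag in (f"<{name}>", f"</{name}>"):
            count = completion.count(tag)
            if count == 0:
                score -= 0.2                # missing required tag
            else:
                score += 0.1                # tag present
                score -= 0.1 * (count - 1)  # each extra copy is a duplicate
    return max(0.0, min(1.0, score))
```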

Competition Format (0-1.0):

- Full reward (1.0) for strictly following the exact XML structure

- Partial reward (0.5) for having all tags in correct order but with formatting variations
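The strict and soft checks could look like the sketch below. The strict layout (every tag on its own line, with the answer after `</competition>`) is an assumption about what "exact XML structure" means; the soft pattern only requires the tags to appear in the right order.

```python
import re

# Strict (assumed layout): each tag on its own line, then the user-facing answer.
STRICT = re.compile(
    r"<competition>\n<pirate>\n.+?\n</pirate>\n<knight>\n.+?\n</knight>\n"
    r"<viking>\n.+?\n</viking>\n<result>\n.+?\n<winner>\n.+?\n</winner>\n"
    r"</result>\n</competition>\n.+",
    re.DOTALL,
)
# Soft: the same tags in the same order, with anything in between.
SOFT = re.compile(
    r"<competition>.*?<pirate>.*?</pirate>.*?<knight>.*?</knight>.*?"
    r"<viking>.*?</viking>.*?<result>.*?<winner>.*?</winner>.*?"
    r"</result>.*?</competition>",
    re.DOTALL,
)


def competition_format_reward(completion: str) -> float:
    text = completion.strip()
    if STRICT.fullmatch(text):
        return 1.0  # exact structure
    if SOFT.search(text):
        return 0.5  # right order, formatting variations
    return 0.0
```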

Character Rewards

Character Length (0-1.0): Each character section is rewarded for thorough reasoning

- Linear scaling up to optimal length

- Penalties for excessively long sections

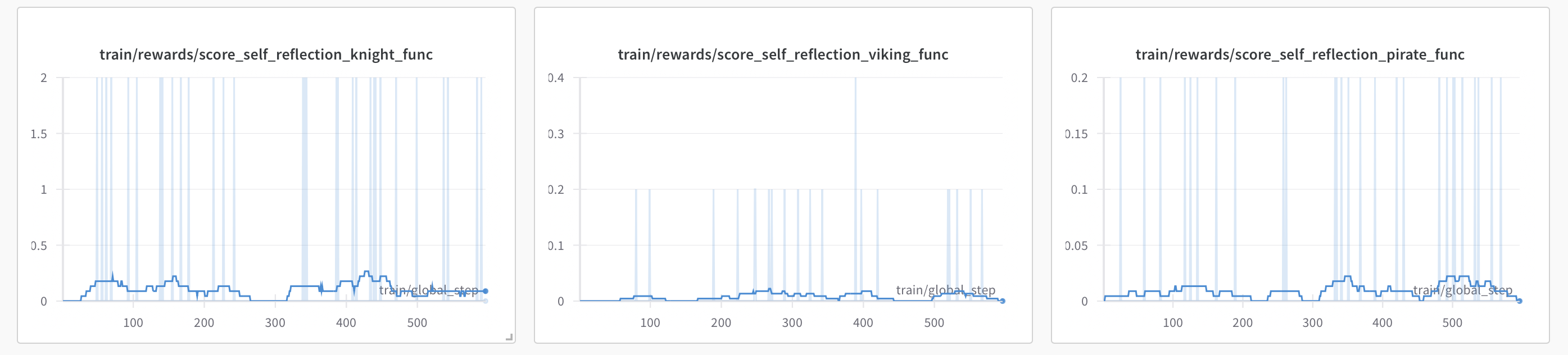
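A piecewise-linear version of the length reward might look like the sketch below. The `optimal_len` and `max_len` defaults are made-up placeholders, and whitespace-separated words stand in for real tokenizer tokens.

```python
def length_reward(section: str, optimal_len: int = 400, max_len: int = 1200) -> float:
    """Length score for one character section.

    optimal_len and max_len are hypothetical values; words approximate tokens.
    """
    n = len(section.split())
    if n <= optimal_len:
        return n / optimal_len           # linear ramp up to the optimum
    if n <= max_len:
        return 1.0                       # full credit between optimum and limit
    overshoot = (n - max_len) / max_len  # fraction past the limit
    return max(0.0, 1.0 - overshoot)     # decays to 0 at 2 * max_len
```

Raising `optimal_len` between training rounds is one way to make the length reward "increase" over time.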

Self-Reflection (0-1.0): Characters are rewarded for showing their thought process

- Pirates are scored on their pirate-specific reasoning

- Knights must demonstrate noble problem-solving approaches

- Vikings are evaluated on their distinct problem-solving style
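The post doesn't spell out how self-reflection is detected (it notes that detection takes a lot of compute), so this is only a cheap stand-in: a regex heuristic that counts self-correction phrases like the one quoted later in the post. The phrase list and the `per_hit` weight are hypothetical, and the sketch does not score character-specific style.

```python
import re

# Hypothetical self-correction phrases; a cheap proxy, not the post's detector.
REFLECTION_PATTERNS = [
    r"\bwait\b",
    r"\bthat was a mistake\b",
    r"\blet me (?:re-?check|update|verify)\b",
    r"\bon second thought\b",
    r"\bactually\b",
]


def self_reflection_reward(section: str, per_hit: float = 0.25) -> float:
    """Score a character section by how many self-correction phrases it contains."""
    text = section.lower()
    hits = sum(len(re.findall(p, text)) for p in REFLECTION_PATTERNS)
    return min(1.0, hits * per_hit)  # capped at 1.0
```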

Originality (0-1.0):

- Knights are penalized (-0.5) for copying the pirate's reasoning

- Vikings are penalized (-0.5) for copying either the pirate's or the knight's reasoning

- Original approaches receive +0.5 reward
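One way to implement the copy check is a string-similarity threshold. This sketch swaps in Python's `difflib.SequenceMatcher` as the similarity measure; the 0.6 `threshold` is a hypothetical cutoff, and the pirate is left unscored because it goes first.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Ratio in [0, 1]; 1.0 means identical (case-insensitive) text."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def originality_reward(pirate: str, knight: str, viking: str,
                       threshold: float = 0.6) -> dict[str, float]:
    """-0.5 for copying an earlier character, +0.5 for an original section."""
    knight_copied = similarity(knight, pirate) >= threshold
    viking_copied = max(similarity(viking, pirate), similarity(viking, knight)) >= threshold
    return {
        "knight": -0.5 if knight_copied else 0.5,
        "viking": -0.5 if viking_copied else 0.5,
    }
```

Character-level similarity is crude: two sections can share wording while reasoning differently, so an embedding or model-based comparison may fit better.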

Result Rewards

Winner Selection (0-1.0):

- +1.0 if winner tag contains exactly "pirate", "knight", or "viking"

- +0.5 if it contains the character name but with additional text

- 0.0 if no valid character is identified
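The winner-selection rules translate almost directly into code. This sketch strips surrounding whitespace before the exact-match check, which is an assumption about how strictly "exactly" is applied.

```python
import re

CHARACTERS = ("pirate", "knight", "viking")


def winner_reward(completion: str) -> float:
    match = re.search(r"<winner>(.*?)</winner>", completion, re.DOTALL)
    if not match:
        return 0.0
    raw = match.group(1).strip()
    if raw in CHARACTERS:
        return 1.0  # exactly one of the three names
    if any(name in raw.lower() for name in CHARACTERS):
        return 0.5  # a name is present, but with extra text
    return 0.0      # no valid character identified
```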

Answer Correctness (-1.0 to 1.0):

- +1.0 for correct final answers

- 0.0 for incorrect answers

- -1.0 if XML tags appear in the final answer (leaking the competition format)
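A sketch of the correctness reward, assuming the user-facing answer is everything after the last `</competition>` and that gold answers are plain numbers. The number check (any number in the answer equal to the gold label) is a deliberately lenient, hypothetical heuristic; a real grader would need proper answer parsing.

```python
import re

TAG_PATTERN = re.compile(r"</?(?:competition|pirate|knight|viking|result|winner)>")


def final_answer(completion: str) -> str:
    """User-facing text: everything after the last </competition> (or all of it)."""
    _, sep, tail = completion.rpartition("</competition>")
    return tail.strip() if sep else completion.strip()


def correctness_reward(completion: str, gold: str) -> float:
    answer = final_answer(completion)
    if TAG_PATTERN.search(answer):
        return -1.0  # construct tags leaked into the user-facing answer
    # Lenient heuristic: does any number in the answer match the gold label?
    numbers = re.findall(r"-?\d+(?:\.\d+)?", answer)
    return 1.0 if gold in numbers else 0.0
```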

This scoring system is designed to teach the model to maintain the competition format while encouraging each character to develop a distinct personality and reasoning style, with the goal of better overall responses.

Round 1 GRPO Tune

Pick your favorite open math dataset and let's get started. In round one, you ask the model to answer math questions in your format. My 14B model returned the soft format correctly 90% of the time. GRPO works by sampling a group of completions for each prompt and scoring every one of them with the reward functions. The model learns from the differences between those rewards. There is a bunch of fancy math on top, but during training the model eventually figures out what is required... more on that later.
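For reference, GRPO as described in the DeepSeekMath paper normalizes each completion's reward against the other completions for the same prompt: A_i = (r_i - mean(r)) / std(r). A minimal sketch, using the population standard deviation and a small `eps` to avoid division by zero (implementations differ on both details):

```python
from statistics import mean, pstdev


def group_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """Group-relative advantage for one prompt's sampled completions."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Example: total rewards for four sampled completions of the same question.
print(group_advantages([2.5, 1.0, 0.5, 1.0]))
```

Completions that beat their group's average get positive advantages and are reinforced; the rest are pushed down. If every sample earns the same reward, all advantages are zero and that prompt contributes no learning signal.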

Go Look At The Ocean Or Any Body of Water Or a Forest

Time to go on a walk. It took about 6-8 hours of training for the model to really latch on. Give it a day or so and it'll be really good.

From Small Think to Big Think

Models are typically trained to respond in reasonably sized chunks that are easy for us to read. How do we encourage the model to generate more tokens to think with? We reward it for writing more tokens, of course! For each result, we can count the tokens in all sections and compute a score. The more it writes, the more the model eats.

Math Is King Of Reason

I used a math dataset because everyone is using a math dataset. Why? When a model is asked a question like 32/4+5, it might just answer with a random number like 10. BUT! Because we're rewarding it for writing longer thoughts, it begins to break the problem down, so the answer might be "So 32/4 is 8 and plus five is 13" instead of "10". As the model trains longer and writes longer, broken-down solutions, it learns a pattern that can be applied to other areas. So if you ask, "What would be the implications of humans going to Mars in the next 10 years?", it'll break the question down like a patterned math solution, in a way... it's a bit complicated.

Capturing The Famous Aha! Remember it was a trap.

All I'm going to say is that it's real. As the model writes more and more, it begins to self-correct! It'll say "no that was a mistake, let me update it". This behavior is incredibly important to keep. Although I made PKV as a joke, halfway through training I realized the model was correcting itself. I wanted to make sure I could encourage this behavior, and it became evident that the <pirate>, <knight>, and <viking> sections were my Aha! moment. THE TRAP!

With each sample, I can score each character's answer individually for self-reflection and correction. This lets me encourage confident fixes and corrections. It took me a long time to get this right, and it arguably uses a lot of compute to detect. If a character's answer is also chosen by the Judge, that's the best kind of reward! This eventually leads the Judge to criticize copying and prefer honest answers that self-correct. So this trap lets me attempt and score self-reflection three times, in three different ways, in one go.

Round 2 GRPO: Aha scoring/tag scoring for copying

In round two of tuning, we start scoring Aha moments. IT IS VERY IMPORTANT FOR THIS MODEL TO GENERATE THREE DIFFERENT SOLUTIONS TO ONE QUESTION. (I wrote that in all caps to get my point across.) We check whether the knight copies the pirate and whether the viking copies either of them; otherwise, they repeat each other, which is a complete waste of tokens. The viking is the best copycat but usually gives the most optimized answer... more on that later.

Round 3 GRPO: Making Things Longer

In this round, the model kept being scored the same way while the length rewards increased. This is where an 11k-token run on a math problem still ended in a correct answer.

What's The Judge Assisted GRPO part?

My biggest regret about this experiment is not making it a bit more normal. During training, I noticed many interesting things the Judge was doing that I'd love to point out:

- The Judge naturally starts selecting the best, most optimized answers. Without a reward for self-reflection, the Pirate, Viking, and Knight start giving only optimized answers, and the number of self-reflections decreases over time.

- The Judge follows the prompt's instructions and eventually behaves the way you want. One night I checked the logs and found the Judge despising the Knight's and Viking's answers, so the Pirate won.

- The Judge starts making combined decisions on its own. In one instance, the Knight capitalized on a mistake the Pirate made. Although the Judge acknowledged that the Knight copied some of the Pirate's work, it still gave the Knight the win for improving on the solution.

Scaling to Ultra-Long Reasoning Traces

With a 128k context window, you could generate multiple comprehensive reasoning traces within a single generation:

Parallel Reasoning Exploration: Generate 2-3 complete reasoning traces (each up to 32k tokens long) exploring different solution paths simultaneously. This allows the model to pursue multiple lines of thought with full depth rather than committing to a single potentially flawed approach.

Judge-Optimized Training: The judge component evaluates each trace on correctness, thoroughness, and originality, then selects the best one. During GRPO training, the model learns to optimize toward the judged-best reasoning patterns, which should lead to stronger problem-solving capabilities.

Self-Critique at Scale: When traces can be 32k tokens long, each character has enough space to pursue complex multi-step reasoning, identify errors, backtrack, and explore alternative approaches, all of which shorter contexts limit.

Bi-Directional Training Approaches

This framework offers two powerful training configurations:

Generated Trace Judging: Model generates multiple reasoning traces internally → judge selects best → fine-tune on only the winning trace. This teaches the model which reasoning patterns are most effective.
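The generated-trace-judging loop could be sketched like this, assuming each completion follows the construct and that fine-tuning data is a list of prompt/completion records. Both function names and the record format are hypothetical.

```python
import re
from typing import Optional


def winning_trace(completion: str) -> Optional[str]:
    """Return the section written by the character named in <winner>, if any."""
    winner = re.search(r"<winner>\s*(pirate|knight|viking)\s*</winner>", completion)
    if not winner:
        return None
    name = winner.group(1)
    trace = re.search(rf"<{name}>(.*?)</{name}>", completion, re.DOTALL)
    return trace.group(1).strip() if trace else None


def build_sft_examples(prompt: str, completions: list[str]) -> list[dict[str, str]]:
    """Keep only the judged-best trace from each completion as a training record."""
    examples = []
    for completion in completions:
        trace = winning_trace(completion)
        if trace:
            examples.append({"prompt": prompt, "completion": trace})
    return examples
```

Completions without a valid winner are simply skipped; filtering further by answer correctness would be a natural extension.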

External Trace Integration: Independently source high-quality traces from different models/techniques → have the judge select best → fine-tune on the judgment process. This creates a model specialized in meta-evaluation of reasoning.

The second approach is remarkably flexible: you could populate traces from different foundation models, chain-of-thought methods, or even human experts, then train a system that learns to identify superior reasoning patterns across diverse sources.

By cycling between these approaches, you could create a continual improvement loop where generation improves judgment and judgment improves generation, all while keeping the reasoning process fully transparent.