Update README.md


|

@@ -12,9 +12,14 @@ pipeline_tag: visual-question-answering

|

|

| 12 |

|

| 13 |

## Model Description

|

| 14 |

|

| 15 |

-

**paligemma-3b-

|

|

|

|

|

|

|

|

|

|

| 16 |

|

| 17 |

-

This model is designed for multilingual environments (French and English) and excels in table-based visual question-answering (VQA) tasks. It is highly suitable for

|

|

|

|

|

|

|

| 18 |

|

| 19 |

## Key Features

|

| 20 |

|

|

@@ -27,7 +32,9 @@ This model is designed for multilingual environments (French and English) and ex

|

|

| 27 |

|

| 28 |

## Model Architecture

|

| 29 |

|

| 30 |

-

This model was built on top of **[google/paligemma-3b-ft-docvqa-896](https://huggingface.co/google/paligemma-3b-ft-docvqa-896)**, using its pre-trained multi-modal

|

|

|

|

|

|

|

| 31 |

|

| 32 |

## Usage

|

| 33 |

|

|

@@ -40,7 +47,7 @@ import requests

|

|

| 40 |

import torch

|

| 41 |

device = "cuda" if torch.cuda.is_available() else "cpu"

|

| 42 |

|

| 43 |

-

model_id = "cmarkea/paligemma-

|

| 44 |

|

| 45 |

# Sample image for inference

|

| 46 |

url = "https://datasets-server.huggingface.co/cached-assets/cmarkea/table-vqa/--/c26968da3346f92ab6bfc5fec85592f8250e23f5/--/default/train/22/image/image.jpg?Expires=1728915081&Signature=Zkrd9ZWt5b9XtY0UFrgfrTuqo58DHWIJ00ZwXAymmL-mrwqnWWmiwUPelYOOjPZZdlP7gAvt96M1PKeg9a2TFm7hDrnnRAEO~W89li~AKU2apA81M6AZgwMCxc2A0xBe6rnCPQumiCGD7IsFnFVwcxkgMQXyNEL7bEem6cT0Cief9DkURUDCC-kheQY1hhkiqLLUt3ITs6o2KwPdW97EAQ0~VBK1cERgABKXnzPfAImnvjw7L-5ZXCcMJLrvuxwgOQ~DYPs456ZVxQLbTxuDwlxvNbpSKoqoAQv0CskuQwTFCq2b5MOkCCp9zoqYJxhUhJ-aI3lhyIAjmnsL4bhe6A__&Key-Pair-Id=K3EI6M078Z3AC3"

|

|

@@ -71,15 +78,18 @@ with torch.inference_mode():

|

|

| 71 |

|

| 72 |

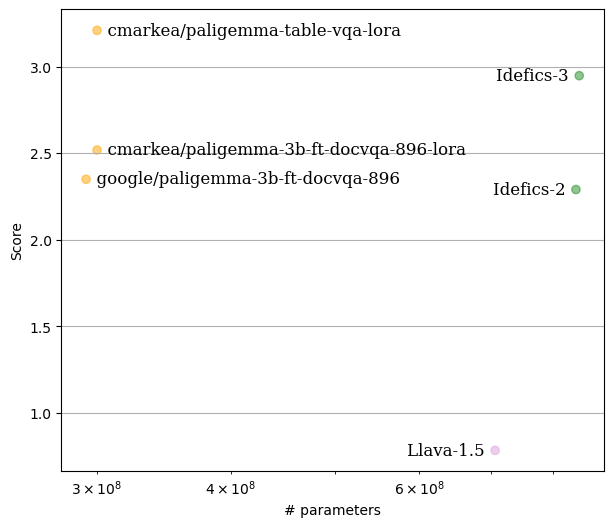

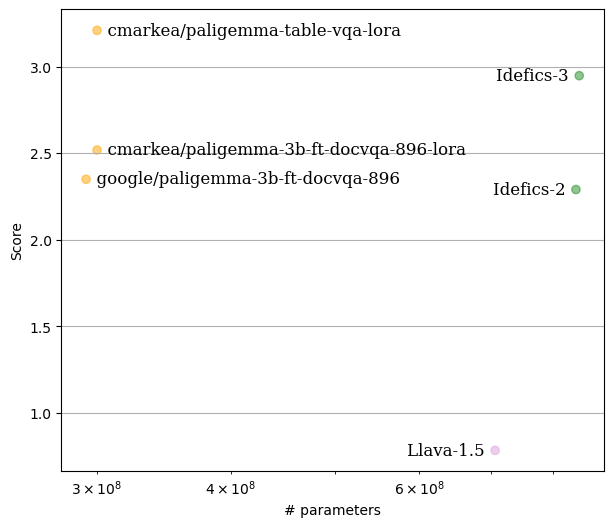

## Performance

|

| 73 |

|

| 74 |

-

The model's performance was evaluated on 200 question-answer pairs, extracted from 100 tables from the test set of the

|

|

|

|

| 75 |

|

| 76 |

-

To evaluate the model’s responses, the **[LLM-as-Juries](https://arxiv.org/abs/2404.18796)** framework was employed using three judge models (GPT-4o, Gemini1.5 Pro,

|

|

|

|

| 77 |

|

| 78 |

Here’s a visualization of the results:

|

| 79 |

|

| 80 |

|

| 81 |

|

| 82 |

-

In comparison, this model outperforms **[HuggingFaceM4/Idefics3-8B-Llama3](https://huggingface.co/HuggingFaceM4/Idefics3-8B-Llama3)** in terms of accuracy and efficiency,

|

|

|

|

| 83 |

|

| 84 |

|

| 85 |

## Citation

|

|

@@ -87,7 +97,7 @@ In comparison, this model outperforms **[HuggingFaceM4/Idefics3-8B-Llama3](https

|

|

| 87 |

```bibtex

|

| 88 |

@online{AgDePaligemmaTabVQA,

|

| 89 |

AUTHOR = {Tom Agonnoude, Cyrile Delestre},

|

| 90 |

-

URL = {https://huggingface.co/cmarkea/paligemma-

|

| 91 |

YEAR = {2024},

|

| 92 |

KEYWORDS = {Multimodal, VQA, Table Understanding, LoRA},

|

| 93 |

}

|

|

|

|

| 12 |

|

| 13 |

## Model Description

**paligemma-3b-tablevqa-896-lora** is a fine-tuned version of the **[google/paligemma-3b-ft-docvqa-896](https://huggingface.co/google/paligemma-3b-ft-docvqa-896)** model,
trained specifically on the **[table-vqa](https://huggingface.co/datasets/cmarkea/table-vqa)** dataset published by Crédit Mutuel Arkéa. Fine-tuning used the
**LoRA** (Low-Rank Adaptation) technique, which trains small low-rank update matrices instead of the full weights, greatly reducing the memory and compute needed
for fine-tuning while maintaining high performance. The model operates in bfloat16 precision for efficiency, which makes it well suited to resource-constrained environments.
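
bfloat16 keeps the 8-bit exponent of float32 but only 7 explicit mantissa bits, so it covers the same numeric range as float32 with about 2 to 3 significant decimal digits and half the memory per value. The pure-Python sketch below emulates float32-to-bfloat16 rounding (round to nearest, ties to even) to make that trade-off concrete. It illustrates the number format only; the helper `to_bfloat16` is hypothetical and is not how this model's weights were converted, which the README does not describe.

```python
import struct

def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value (ties to even) and return it as a float.

    bfloat16 keeps float32's sign bit and 8 exponent bits but only the top 7
    mantissa bits. Assumes x is finite and within float32 range.
    """
    # Reinterpret x's float32 encoding as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, based on the 16 low bits about to be dropped.
    bits += 0x7FFF + ((bits >> 16) & 1)
    # Keep the upper 16 bits (the bfloat16 pattern) and widen back to float32.
    bf16_bits = (bits >> 16) & 0xFFFF
    return struct.unpack("<f", struct.pack("<I", bf16_bits << 16))[0]
```

For instance, `to_bfloat16(math.pi)` gives 3.140625: each stored value is coarser, in exchange for halving the memory of float32.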

This model is designed for multilingual environments (French and English) and excels in table-based visual question-answering (VQA) tasks. It is well suited to
extracting information from tables in documents, making it a strong candidate for applications in financial reporting, data analysis, or administrative document processing.
The model was fine-tuned over 7 days on a single A100 40GB GPU.
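
A single 40GB GPU is plausible here partly because LoRA freezes the base weights, so only the small adapter matrices need gradients and optimizer state. The back-of-the-envelope sketch below estimates only the memory for holding the weights. It assumes roughly 3 billion parameters, read from the "3b" in the model name rather than from an exact count, and it ignores activations, the adapters, and their optimizer state.

```python
# Back-of-the-envelope weight-memory estimate.
# Assumption: about 3e9 parameters (from "3b" in the model name), not an exact count.
NUM_PARAMS = 3e9
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}
A100_MEMORY_GB = 40  # the single GPU used for fine-tuning

def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in BYTES_PER_PARAM:
    gb = weight_memory_gb(NUM_PARAMS, dtype)
    print(f"{dtype}: {gb:.0f} GB of weights, {gb / A100_MEMORY_GB:.0%} of a 40 GB A100")
```

Under these assumptions, bfloat16 halves the footprint of the frozen weights, leaving most of the GPU for activations and training state.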


## Key Features


## Model Architecture

This model was built on top of **[google/paligemma-3b-ft-docvqa-896](https://huggingface.co/google/paligemma-3b-ft-docvqa-896)**, using its pre-trained multimodal
capabilities to process both text and images (e.g., document tables). LoRA was applied to reduce the number of trainable parameters, and therefore the cost of fine-tuning,
while preserving accuracy, specializing the model for tasks such as table understanding and VQA.
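
LoRA freezes a pretrained weight matrix W and learns a low-rank update B A, so an adapted linear layer computes h = W x + (alpha / r) B A x. The toy, pure-Python sketch below illustrates that idea and the resulting parameter savings. It is not this model's training code. The rank r = 8, the scaling `alpha`, and the 2048 x 2048 layer size used in the parameter count are hypothetical choices, because the README does not state the LoRA configuration.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def lora_forward(x, w, a, b, alpha):
    """LoRA-adapted linear layer: h = W x + (alpha / r) * B (A x).

    w: frozen base weight, d_out x d_in
    a: trainable down-projection A, r x d_in
    b: trainable up-projection B, d_out x r
    """
    r = len(a)
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))  # low-rank path: d_in -> r -> d_out
    return [h + (alpha / r) * u for h, u in zip(base, update)]

def param_counts(d_out, d_in, r):
    """Trainable parameters for a full update of W vs. a rank-r LoRA adapter."""
    return d_out * d_in, r * (d_in + d_out)

# Toy layer. A starts random and B starts at zero (the usual LoRA
# initialization), so the adapted layer initially matches the base layer.
random.seed(0)
d_in, d_out, r = 4, 3, 2
w = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
a = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(r)]
b = [[0.0] * r for _ in range(d_out)]
x = [1.0, 2.0, 3.0, 4.0]
print(lora_forward(x, w, a, b, alpha=16) == matvec(w, x))  # True

# Hypothetical 2048 x 2048 projection with a rank-8 adapter.
full, lora = param_counts(2048, 2048, r=8)
print(f"full: {full}, LoRA: {lora}, ratio: {lora / full:.4f}")
```

With these assumed sizes, the adapter trains under 1% of the parameters that a full update of the same layer would. This reduction is the source of LoRA's savings in memory and compute.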


## Usage

```python
import torch

# Use the GPU when one is available; otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

model_id = "cmarkea/paligemma-tablevqa-896-lora"

# Sample image for inference
url = "https://datasets-server.huggingface.co/cached-assets/cmarkea/table-vqa/--/c26968da3346f92ab6bfc5fec85592f8250e23f5/--/default/train/22/image/image.jpg?Expires=1728915081&Signature=Zkrd9ZWt5b9XtY0UFrgfrTuqo58DHWIJ00ZwXAymmL-mrwqnWWmiwUPelYOOjPZZdlP7gAvt96M1PKeg9a2TFm7hDrnnRAEO~W89li~AKU2apA81M6AZgwMCxc2A0xBe6rnCPQumiCGD7IsFnFVwcxkgMQXyNEL7bEem6cT0Cief9DkURUDCC-kheQY1hhkiqLLUt3ITs6o2KwPdW97EAQ0~VBK1cERgABKXnzPfAImnvjw7L-5ZXCcMJLrvuxwgOQ~DYPs456ZVxQLbTxuDwlxvNbpSKoqoAQv0CskuQwTFCq2b5MOkCCp9zoqYJxhUhJ-aI3lhyIAjmnsL4bhe6A__&Key-Pair-Id=K3EI6M078Z3AC3"
```
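
The sample `url` is a signed, time-limited link to a cached asset on `datasets-server.huggingface.co`. Its `Expires` query parameter is a Unix timestamp (1728915081, in October 2024), after which the link is likely to stop resolving. The hypothetical helper below uses only the standard library to flag this case before inference. If it reports an expired link, a fresh image URL from the table-vqa dataset or a local image file would be needed instead.

```python
import time
from urllib.parse import parse_qs, urlparse

def signed_url_expired(url, now=None):
    """Return True if a signed URL's `Expires` query parameter (Unix seconds) has passed.

    Returns False when the URL carries no `Expires` parameter.
    `now` can be passed explicitly (Unix seconds) to make the check reproducible.
    """
    params = parse_qs(urlparse(url).query)
    if "Expires" not in params:
        return False
    expires = int(params["Expires"][0])
    current = time.time() if now is None else now
    return current >= expires
```

For example, `signed_url_expired(url)` on the sample URL above reports whether that link has lapsed.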


## Performance

The model's performance was evaluated on 200 question-answer pairs extracted from 100 tables in the test set of the
**[table-vqa](https://huggingface.co/datasets/cmarkea/table-vqa)** dataset. For each table, two pairs were selected: one in French and one in English.
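
The sampling described above (100 test tables, with one French and one English question-answer pair per table, for 200 pairs in total) can be sketched as follows. The record layout (`table_id`, `lang`, `question`, `answer`), the helper name, and the seeded random selection are assumptions for illustration. The README does not say how the tables or pairs were chosen.

```python
import random
from collections import defaultdict

def sample_eval_pairs(qa_pairs, num_tables, seed=0):
    """Pick `num_tables` tables and one French plus one English QA pair from each.

    `qa_pairs` is a list of dicts with hypothetical keys "table_id", "lang"
    ("fr" or "en"), "question" and "answer".
    """
    by_table = defaultdict(lambda: {"fr": [], "en": []})
    for pair in qa_pairs:
        by_table[pair["table_id"]][pair["lang"]].append(pair)
    # Only tables with at least one question in each language qualify.
    eligible = sorted(t for t, langs in by_table.items() if langs["fr"] and langs["en"])
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    chosen = rng.sample(eligible, num_tables)
    return [rng.choice(by_table[t][lang]) for t in chosen for lang in ("fr", "en")]
```

Calling `sample_eval_pairs(test_pairs, num_tables=100)` on such records would yield the 200-pair layout described above, with French and English pairs alternating table by table.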

To evaluate the model’s responses, the **[LLM-as-Juries](https://arxiv.org/abs/2404.18796)** framework was employed with three judge models (GPT-4o, Gemini 1.5 Pro,
and Claude 3.5 Sonnet). Each answer was scored on a scale from 0 to 5 tailored to the VQA context.
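
With three judges each scoring an answer from 0 to 5, the per-answer verdicts must be pooled into a single number. The LLM-as-Juries paper pools the judges' scores, for example by averaging. The sketch below assumes simple mean pooling, both per answer and across the evaluation set, which this README does not confirm. It takes already-collected judge scores as input and makes no API calls; `jury_score` and `overall_score` are hypothetical helpers.

```python
from statistics import mean

JUDGES = ("GPT-4o", "Gemini 1.5 Pro", "Claude 3.5 Sonnet")

def jury_score(scores):
    """Average one answer's 0-5 scores across all judges (mean pooling)."""
    if set(scores) != set(JUDGES):
        raise ValueError("expected exactly one score per judge")
    for judge, s in scores.items():
        if not 0 <= s <= 5:
            raise ValueError(f"{judge} score {s} is outside the 0-5 scale")
    return mean(scores.values())

def overall_score(per_answer_scores):
    """Mean jury score over every evaluated QA pair, on the same 0-5 scale."""
    return mean(jury_score(s) for s in per_answer_scores)
```

Mean pooling is the simplest choice. Other pooling rules, such as a median or a majority vote on thresholded scores, would plug into `jury_score` without changing the rest.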


Here’s a visualization of the results:

In this comparison, the model outperforms **[HuggingFaceM4/Idefics3-8B-Llama3](https://huggingface.co/HuggingFaceM4/Idefics3-8B-Llama3)** in both accuracy and efficiency,
despite its smaller size (3B vs. 8B parameters).


## Citation

```bibtex
@online{AgDePaligemmaTabVQA,
  AUTHOR = {Tom Agonnoude and Cyrile Delestre},
  URL = {https://huggingface.co/cmarkea/paligemma-tablevqa-896-lora},
  YEAR = {2024},
  KEYWORDS = {Multimodal, VQA, Table Understanding, LoRA},
}
```