Push model using huggingface_hub.

- README.md +204 -0

- config.json +18 -0

- model.safetensors +3 -0

README.md

ADDED

---
base_model: ibm-research/biomed.sm.mv-te-84m
library_name: SmallMoleculeMultiView
license: apache-2.0
tags:
- binding-affinity-prediction
- bio-medical
- chemistry
- drug-discovery
- drug-target-interaction
- model_hub_mixin
- molecular-property-prediction
- moleculenet
- molecules
- multi-view
- multimodal
- pytorch_model_hub_mixin
- small-molecules
- virtual-screening
---
# ibm-research/biomed.sm.mv-te-84m-CYP-ligand_scaffold_balanced-CYP2C19-101

|

| 23 |

+

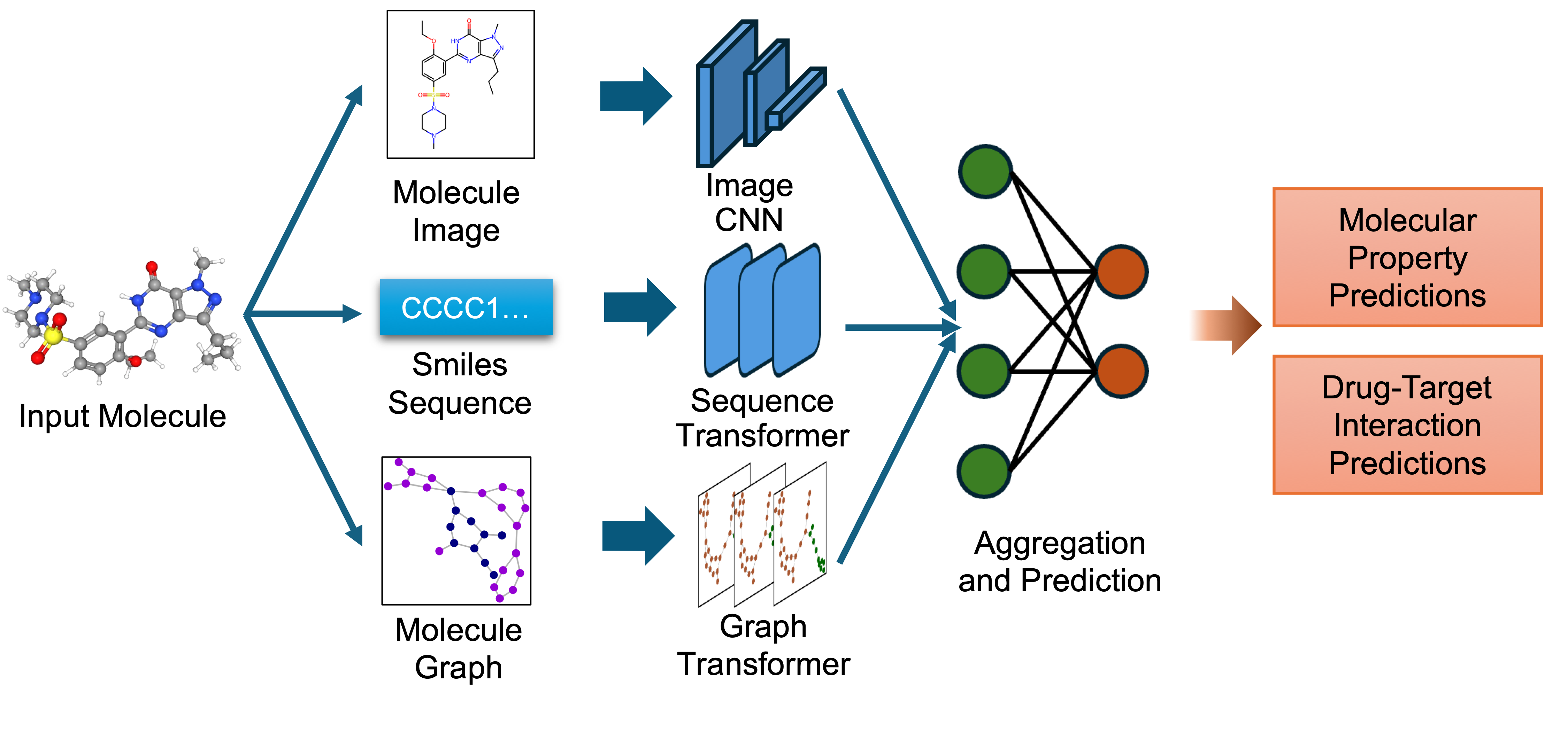

`biomed.sm.mv-te-84m` is a multimodal biomedical foundation model for small molecules created using **MMELON** (**M**ulti-view **M**olecular **E**mbedding with **L**ate Fusi**on**), a flexible approach to aggregate multiple views (sequence, image, graph) of molecules in a foundation model setting. While models based on single view representation typically performs well on some downstream tasks and not others, the multi-view model performs robustly across a wide range of property prediction tasks encompassing ligand-protein binding, molecular solubility, metabolism and toxicity. It has been applied to screen compounds against a large (> 100 targets) set of G Protein-Coupled receptors (GPCRs) to identify strong binders for 33 targets related to Alzheimer’s disease, which are validated through structure-based modeling and identification of key binding motifs [Multi-view biomedical foundation models for molecule-target and property prediction](https://arxiv.org/abs/2410.19704).

- **Developers:** IBM Research
- **GitHub Repository:** [https://github.com/BiomedSciAI/biomed-multi-view](https://github.com/BiomedSciAI/biomed-multi-view)
- **Paper:** [Multi-view biomedical foundation models for molecule-target and property prediction](https://arxiv.org/abs/2410.19704)
- **Release Date:** Oct 28th, 2024
- **License:** [Apache 2.0](https://www.apache.org/licenses/LICENSE-2.0)

## Model Description

Source code for the model and for fine-tuning is available in [this repository](https://github.com/BiomedSciAI/biomed-multi-view).

The model combines three single-view representations of each molecule:
* Image Representation: Captures the 2D visual depiction of molecular structures, highlighting features like symmetry, bond angles, and functional groups. Molecular images are generated using RDKit and undergo data augmentation during training to enhance robustness.
* Graph Representation: Encodes molecules as undirected graphs where nodes represent atoms and edges represent bonds. Atom-specific properties (e.g., atomic number, chirality) and bond-specific properties (e.g., bond type, stereochemistry) are embedded using categorical embedding techniques.
* Text Representation: Utilizes SMILES strings to represent chemical structures, tokenized with a custom tokenizer. The sequences are embedded using a transformer-based architecture to capture the sequential nature of the chemical information.
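The card describes the text view's tokenizer only as custom. To show what SMILES tokenization involves, here is a minimal sketch built on a regular-expression tokenizer that is widely used in SMILES modeling. This pattern is an assumption and is not the tokenizer shipped in `biomed-multi-view`. It keeps two-letter atoms such as `Cl` and `Br`, and bracket atoms such as `[nH]`, as single tokens. It raises an error on any character it cannot account for.

```python
import re

# Regex SMILES tokenizer (a common community pattern; NOT the custom
# tokenizer used by biomed-multi-view). Bracket atoms are matched first,
# then two-letter organic-subset atoms, single atoms, bonds, and ring digits.
SMILES_TOKEN_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> list[str]:
    """Split a SMILES string into tokens, failing loudly on unknown characters."""
    tokens = SMILES_TOKEN_PATTERN.findall(smiles)
    # findall silently skips unmatched characters, so verify full coverage.
    if "".join(tokens) != smiles:
        raise ValueError(f"Could not fully tokenize SMILES: {smiles!r}")
    return tokens


if __name__ == "__main__":
    # Ibuprofen, the molecule used in the embedding example of this card.
    print(tokenize_smiles("CC(C)CC1=CC=C(C=C1)C(C)C(=O)O"))
```

A transformer-based text encoder would then map each token to an ID from a vocabulary before embedding the sequence.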

The embeddings from these single-view pre-trained encoders are combined using an attention-based aggregator module. This module learns to weight each view appropriately, producing a unified multi-view embedding. This approach leverages the strengths of each representation to improve performance on downstream predictive tasks.
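The exact design of the aggregator lives in the repository rather than in this card. As a rough, hypothetical illustration of attention-weighted late fusion, the pure-Python sketch below works in three steps:

1. It scores each view's embedding against a query vector.
2. It turns the scores into per-view weights with a softmax.
3. It returns the weighted sum of the view embeddings.

The dot-product scoring and the fixed `query` vector, which stands in for learned parameters, are assumptions. This is not MMELON's implementation.

```python
import math
from typing import Sequence


def softmax(scores: Sequence[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(
    view_embeddings: Sequence[Sequence[float]],
    query: Sequence[float],
) -> tuple[list[float], list[float]]:
    """Fuse per-view embeddings into one vector using attention-style weights.

    Each view is scored by its dot product with `query` (a stand-in for
    learned parameters); softmax turns scores into one weight per view, and
    the fused embedding is the weighted sum of the view embeddings.
    """
    dim = len(query)
    if any(len(v) != dim for v in view_embeddings):
        raise ValueError("all view embeddings must match the query dimension")
    scores = [sum(q * x for q, x in zip(query, v)) for v in view_embeddings]
    weights = softmax(scores)
    fused = [
        sum(w * v[i] for w, v in zip(weights, view_embeddings)) for i in range(dim)
    ]
    return fused, weights


if __name__ == "__main__":
    # Toy 4-dimensional embeddings for the image, graph and text views.
    image_emb = [0.1, 0.3, -0.2, 0.5]
    graph_emb = [0.4, -0.1, 0.0, 0.2]
    text_emb = [0.2, 0.2, 0.1, -0.3]
    query = [1.0, 0.5, -0.5, 0.0]  # hypothetical; a real model learns this
    fused, weights = attention_fuse([image_emb, graph_emb, text_emb], query)
    print("view weights:", [round(w, 3) for w in weights])
    print("fused embedding:", [round(x, 3) for x in fused])
```

Because the weights come from a softmax, they sum to 1. The fused vector is therefore a convex combination of the view embeddings, and a view that scores higher contributes proportionally more.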

## Intended Use and Limitations

The model is intended for:

1. Molecular property prediction. The pre-trained model may be fine-tuned for both regression and classification tasks, including (but not limited to) binding affinity, solubility and toxicity.
2. Similarity search. Pre-trained model embeddings may be used as the basis for similarity measures to search a chemical library.
3. Combined small-molecule and protein tasks. Small-molecule embeddings from the model may be combined with protein embeddings to fine-tune on tasks that use both representations.
4. Direct use of the select task-specific fine-tuned models that are provided as examples.

Through these activities, the model may aid aspects of molecular discovery such as lead finding or lead optimization.
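For the similarity-search use listed above, a library search can be sketched as ranking precomputed embeddings by their cosine similarity to a query molecule's embedding. The sketch assumes the embeddings were already produced (for example with `SmallMoleculeMultiViewModel.get_embeddings`) and converted to plain Python lists of floats. Cosine similarity is one common choice of measure; the card does not prescribe it. The toy vectors are placeholders, not real model output.

```python
import math
from typing import Mapping, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def rank_library(
    query_emb: Sequence[float],
    library: Mapping[str, Sequence[float]],
    top_k: int = 5,
) -> list[tuple[str, float]]:
    """Return the top_k (SMILES, score) pairs most similar to the query embedding."""
    scored = [(smiles, cosine_similarity(query_emb, emb)) for smiles, emb in library.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy 3-dimensional vectors standing in for model embeddings.
    library = {
        "CCO": [0.9, 0.1, 0.0],
        "CC(=O)O": [0.7, 0.6, 0.1],
        "c1ccccc1": [0.0, 0.2, 0.9],
    }
    print(rank_library([1.0, 0.2, 0.0], library, top_k=2))
```

For large libraries, the same ranking would normally be done with vectorized array operations or an approximate nearest-neighbor index rather than a Python loop.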

The model's domain of applicability is small, drug-like molecules; it is intended for molecules with a molecular weight below 1000 Da. The MMELON approach itself could be extended to proteins and other macromolecules, but the model does not currently provide embeddings for such entities. The model is also not currently intended for molecular generation. Molecules must be given as valid SMILES strings that represent a valid chemically bonded graph. Invalid inputs will degrade performance or cause errors.
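As a hypothetical pre-check of the 1000 Da limit, the sketch below computes an average molecular weight from a molecular formula using standard atomic weights. It assumes the formula is already known and covers only a handful of common elements. It does not validate SMILES. In practice, a cheminformatics toolkit such as RDKit (which the image view already relies on) would parse the SMILES, catch invalid inputs, and compute the weight directly.

```python
import re

# Standard atomic weights (g/mol) for elements common in drug-like molecules.
ATOMIC_WEIGHTS = {
    "H": 1.008, "C": 12.011, "N": 14.007, "O": 15.999, "F": 18.998,
    "P": 30.974, "S": 32.06, "Cl": 35.45, "Br": 79.904, "I": 126.904,
}
MAX_WEIGHT_DA = 1000.0  # upper bound stated in this model card


def molecular_weight(formula: str) -> float:
    """Average molecular weight of a simple formula such as 'C13H18O2'."""
    parts = re.findall(r"([A-Z][a-z]?)(\d*)", formula)
    # Reject formulas with characters the pattern did not consume.
    if "".join(sym + count for sym, count in parts) != formula:
        raise ValueError(f"unsupported formula: {formula!r}")
    total = 0.0
    for symbol, count in parts:
        if symbol not in ATOMIC_WEIGHTS:
            raise ValueError(f"no atomic weight for element {symbol!r}")
        total += ATOMIC_WEIGHTS[symbol] * (int(count) if count else 1)
    return total


def in_applicability_domain(formula: str) -> bool:
    """True if the molecule is below the card's 1000 Da limit."""
    return molecular_weight(formula) < MAX_WEIGHT_DA


if __name__ == "__main__":
    # Ibuprofen, C13H18O2.
    print(round(molecular_weight("C13H18O2"), 2))
    print(in_applicability_domain("C13H18O2"))
```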

## Usage

Using the `SmallMoleculeMultiView` API requires the codebase at [https://github.com/BiomedSciAI/biomed-multi-view](https://github.com/BiomedSciAI/biomed-multi-view).

## Installation

Follow these steps to set up the `biomed-multi-view` codebase on your system.

### Prerequisites

* Operating System: Linux or macOS
* Python Version: Python 3.11
* Conda: Anaconda or Miniconda installed
* Git: Version control to clone the repository
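These prerequisites can be checked with a small script. The helper below is hypothetical and not part of the repository. It only checks that `conda` and `git` are on `PATH`, not their versions. The Python check reflects whichever interpreter runs the script, so it is most meaningful inside the activated environment.

```python
import shutil
import sys


def check_prerequisites() -> dict[str, bool]:
    """Report whether the listed prerequisites appear to be satisfied."""
    return {
        # sys.platform is "linux" on Linux and "darwin" on macOS.
        "supported_os": sys.platform.startswith(("linux", "darwin")),
        "python_3_11": sys.version_info[:2] == (3, 11),
        "conda_on_path": shutil.which("conda") is not None,
        "git_on_path": shutil.which("git") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name:15} {'ok' if ok else 'MISSING'}")
```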

### Step 1: Set up the project directory

Choose a root directory where you want to install `biomed-multi-view`. For example:

```bash
export ROOT_DIR=~/biomed-multiview
mkdir -p $ROOT_DIR
```

### Step 2: Create and activate a Conda environment

```bash
conda create -y python=3.11 --prefix $ROOT_DIR/envs/biomed-multiview
```

Activate the environment:

```bash
conda activate $ROOT_DIR/envs/biomed-multiview
```

### Step 3: Clone the repository

Navigate to the project directory and clone the repository:

```bash
mkdir -p $ROOT_DIR/code
cd $ROOT_DIR/code

# Clone the repository using HTTPS
git clone https://github.com/BiomedSciAI/biomed-multi-view.git

# Navigate into the cloned repository
cd biomed-multi-view
```

Note: If you prefer using SSH, ensure that your SSH keys are set up with GitHub and use the following command:

```bash
git clone git@github.com:BiomedSciAI/biomed-multi-view.git
```

### Step 4: Install package dependencies

Install the package in editable mode along with development dependencies:

```bash
pip install -e ".[dev]"
```

Install additional requirements:

```bash
pip install -r requirements.txt
```

### Step 5: macOS-specific instructions (Apple Silicon)

If you are using a Mac with Apple Silicon (M1/M2/M3) and the zsh shell, you may need to disable globbing for the installation command. Otherwise zsh treats `.[dev]` as a filename pattern and fails with "no matches found":

```bash
noglob pip install -e .[dev]
```

Install macOS-specific requirements optimized for Apple’s Metal Performance Shaders (MPS):

```bash
pip install -r requirements-mps.txt
```

### Step 6: Installation verification (optional)

Verify that the installation was successful by running the unit tests:

```bash
python -m unittest bmfm_sm.tests.all_tests
```
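For a faster smoke test than the full suite, here is a minimal sketch that only checks whether the active interpreter can find the `bmfm_sm` package (the name used by the test command and the examples). For a top-level name, `importlib.util.find_spec` locates the package without importing it. The check therefore does not load any model or run any of the package's code.

```python
import importlib.util


def is_installed(module_name: str = "bmfm_sm") -> bool:
    """Return True if a top-level module can be found on the current Python path."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    print("bmfm_sm importable:", is_installed())
```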

### Get embedding example

You can generate embeddings for a given molecule using the pretrained model with the following code.

```python
# Necessary imports
from bmfm_sm.api.smmv_api import SmallMoleculeMultiViewModel
from bmfm_sm.core.data_modules.namespace import LateFusionStrategy

# Load the pretrained model
model = SmallMoleculeMultiViewModel.from_pretrained(
    LateFusionStrategy.ATTENTIONAL,
    model_path="ibm-research/biomed.sm.mv-te-84m",
    huggingface=True,
)

# Get embeddings for a molecule (ibuprofen)
example_smiles = "CC(C)CC1=CC=C(C=C1)C(C)C(=O)O"
example_emb = SmallMoleculeMultiViewModel.get_embeddings(
    smiles=example_smiles,
    model_path="ibm-research/biomed.sm.mv-te-84m",
    huggingface=True,
)
print(example_emb.shape)
```

### Get prediction example

You can use the fine-tuned models to make predictions on new data.

```python
from bmfm_sm.api.smmv_api import SmallMoleculeMultiViewModel
from bmfm_sm.api.dataset_registry import DatasetRegistry

# Initialize the dataset registry
dataset_registry = DatasetRegistry()

# Example SMILES string (menthol)
example_smiles = "CC(C)C1CCC(C)CC1O"

# Get dataset information for the CYP2C19 dataset
ds = dataset_registry.get_dataset_info("CYP2C19")

# Load the fine-tuned model for the dataset
finetuned_model_ds = SmallMoleculeMultiViewModel.from_finetuned(
    ds,
    model_path="ibm-research/biomed.sm.mv-te-84m-CYP-ligand_scaffold_balanced-CYP2C19-101",
    inference_mode=True,
    huggingface=True,
)

# Get predictions
prediction = SmallMoleculeMultiViewModel.get_predictions(
    example_smiles, ds, finetuned_model=finetuned_model_ds
)

print("Prediction:", prediction)
```

For more advanced usage, see our detailed examples at [https://github.com/BiomedSciAI/biomed-multi-view](https://github.com/BiomedSciAI/biomed-multi-view).

## Citation

If you find our work useful, please consider starring the repository and citing our paper:

```
@misc{suryanarayanan2024multiviewbiomedicalfoundationmodels,
  title={Multi-view biomedical foundation models for molecule-target and property prediction},
  author={Parthasarathy Suryanarayanan and Yunguang Qiu and Shreyans Sethi and Diwakar Mahajan and Hongyang Li and Yuxin Yang and Elif Eyigoz and Aldo Guzman Saenz and Daniel E. Platt and Timothy H. Rumbell and Kenney Ng and Sanjoy Dey and Myson Burch and Bum Chul Kwon and Pablo Meyer and Feixiong Cheng and Jianying Hu and Joseph A. Morrone},
  year={2024},
  eprint={2410.19704},
  archivePrefix={arXiv},
  primaryClass={q-bio.BM},
  url={https://arxiv.org/abs/2410.19704},
}
```

config.json

ADDED

{
  "agg_arch": "coeff_mlp",
  "agg_gate_input": null,
  "agg_weight_freeze": "unfrozen",
  "dropout_prob": 0.2,
  "head": "mlp",
  "hidden_dims": [
    512,
    384
  ],
  "inference_mode": true,
  "input_dim": 512,
  "num_classes_per_task": 1,
  "num_tasks": 1,
  "softmax": false,
  "task_type": "classification",
  "use_norm": false
}

model.safetensors

ADDED

version https://git-lfs.github.com/spec/v1
oid sha256:8379034b461a363394d520f0117ba982e09cc4522e2d2e4007f93586d47622ad
size 338415884