Upload 3 files

- README.md +203 -23

- assets/loss.png +0 -0

- assets/thumbnail.webp +0 -0

README.md

CHANGED

|

@@ -1,23 +1,203 @@

| 1 |

+

<img src="assets/romulus-thumbnail.webp">

|

| 2 |

+

|

| 3 |

+

# Romulus, continued pre-trained models for French law.

|

| 4 |

+

|

| 5 |

+

Romulus is a series of continued pre-trained models enriched with French law, intended to serve as a base for fine-tuning on labeled data. Note that these models have not been aligned to produce usable text as they stand; they will need to be fine-tuned on the target task to produce satisfactory results.

|

| 6 |

+

|

| 7 |

+

The training corpus contains 34,864,949 tokens (counted with the meta-llama/Meta-Llama-3.1-8B-Instruct tokenizer).
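A token count like this depends on the tokenizer used. The sketch below shows one way such a count could be computed; `count_tokens` is a hypothetical helper, not part of the original pipeline, and the whitespace splitter is only a stand-in so the example runs offline. In practice one would pass the `encode` method of the tokenizer named above (loaded, for example, with `transformers.AutoTokenizer.from_pretrained`, which requires access to the gated repository).

```python
from typing import Callable, Iterable, Sequence


def count_tokens(texts: Iterable[str], encode: Callable[[str], Sequence]) -> int:
    """Sum the number of tokens that `encode` produces over all texts."""
    return sum(len(encode(text)) for text in texts)


# Stand-in tokenizer (whitespace split) so the sketch runs without downloads.
# With a Hugging Face tokenizer, `tokenizer.encode` would be passed instead.
sample = ["Code civil, article 1240", "Tout fait quelconque de l'homme"]
total = count_tokens(sample, str.split)
print(total)  # 9 whitespace-separated tokens in this toy sample
```

Counts obtained with a different tokenizer will differ, so the figure above is only comparable across corpora tokenized the same way.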

|

| 8 |

+

|

| 9 |

+

## Hyperparameters

|

| 10 |

+

|

| 11 |

+

The following table outlines the key hyperparameters used for training Romulus.

|

| 12 |

+

|

| 13 |

+

| **Parameter** | **Description** | **Value** |
|---|---|---|
| `max_seq_length` | Maximum sequence length for the model | 4096 |
| `load_in_4bit` | Whether to load the model in 4-bit precision | False |
| `model_name` | Pre-trained model name from Hugging Face | meta-llama/Meta-Llama-3.1-8B |
| `r` | Rank of the LoRA adapter | 128 |
| `lora_alpha` | Alpha value for the LoRA module | 32 |
| `lora_dropout` | Dropout rate for LoRA layers | 0 |
| `bias` | Bias type for LoRA adapters | none |
| `use_gradient_checkpointing` | Gradient checkpointing mode | unsloth |
| `use_rslora` | Whether to use rank-stabilized LoRA scaling | True |
| `per_device_train_batch_size` | Per-device training batch size | 8 |
| `gradient_accumulation_steps` | Number of gradient accumulation steps | 8 |
| `warmup_ratio` | Warmup steps as a fraction of total steps | 0.1 |
| `num_train_epochs` | Number of training epochs | 1 |
| `learning_rate` | Learning rate for the model | 5e-5 |
| `embedding_learning_rate` | Learning rate for embeddings | 1e-5 |
| `optim` | Optimizer used for training | adamw_8bit |
| `weight_decay` | Weight decay to prevent overfitting | 0.01 |
| `lr_scheduler_type` | Type of learning rate scheduler | linear |
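
A few derived quantities follow from these values. The sketch below is illustrative arithmetic only, not part of the training script: `num_devices = 1` is an assumption (the source mentions an H100 instance without stating a GPU count), and the scaling factors use the standard definitions, `lora_alpha / r` for classic LoRA and `lora_alpha / sqrt(r)` for rank-stabilized LoRA, which the training script enables with `use_rslora=True`.

```python
import math

# Values taken from the hyperparameter table and training script.
per_device_train_batch_size = 8
gradient_accumulation_steps = 8
max_seq_length = 4096
r = 128
lora_alpha = 32

num_devices = 1  # Assumption: a single GPU; not stated in the source.

# Sequences contributing to one optimizer step.
effective_batch_size = (
    per_device_train_batch_size * gradient_accumulation_steps * num_devices
)

# Upper bound on tokens per optimizer step (every sequence at full length).
max_tokens_per_step = effective_batch_size * max_seq_length

# Scaling applied to the LoRA update under each convention.
classic_scale = lora_alpha / r
rslora_scale = lora_alpha / math.sqrt(r)

print(effective_batch_size)    # 64
print(max_tokens_per_step)     # 262144
print(classic_scale)           # 0.25
print(round(rslora_scale, 3))  # 2.828
```

With rank-stabilized scaling, the same `lora_alpha` yields a much larger multiplier at `r = 128` than classic LoRA would, which is worth keeping in mind when reusing these values in a setup that leaves `use_rslora` off.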

|

| 32 |

+

|

| 33 |

+

## Training script

|

| 34 |

+

|

| 35 |

+

Romulus was trained with Unsloth on an NVIDIA H100 instance in the Azure East US region, provided by the Microsoft for Startups program, using the following script:

|

| 36 |

+

|

| 37 |

+

```python
# -*- coding: utf-8 -*-
from datasets import load_dataset
from unsloth import (
    FastLanguageModel,
    is_bfloat16_supported,
    UnslothTrainer,
    UnslothTrainingArguments,
)

max_seq_length = 4096
dtype = None
load_in_4bit = False

# Load the base model and its tokenizer.
model, tokenizer = FastLanguageModel.from_pretrained(
    model_name="meta-llama/Meta-Llama-3.1-8B",
    max_seq_length=max_seq_length,
    dtype=dtype,
    load_in_4bit=load_in_4bit,
    token="hf_token",  # Placeholder: replace with your Hugging Face access token.
)

# Attach LoRA adapters, including the embeddings and the output head.
model = FastLanguageModel.get_peft_model(
    model,
    r=128,
    target_modules=[
        "q_proj",
        "k_proj",
        "v_proj",
        "o_proj",
        "gate_proj",
        "up_proj",
        "down_proj",
        "embed_tokens",
        "lm_head",
    ],
    lora_alpha=32,
    lora_dropout=0,
    bias="none",
    use_gradient_checkpointing="unsloth",
    random_state=3407,
    use_rslora=True,
    loftq_config=None,
)

prompt = """### Référence :
{}
### Contenu :
{}"""

EOS_TOKEN = tokenizer.eos_token


def formatting_prompts_func(examples):
    """
    Format input examples into prompts for a language model.

    This function takes a dictionary of examples containing references and
    texts, combines them into formatted prompts, and appends an
    end-of-sequence token.

    Parameters
    ----------
    examples : dict
        A dictionary containing two keys:
        - 'ref': A list of references.
        - 'texte': A list of corresponding text content.

    Returns
    -------
    dict
        A dictionary with a single key 'text', containing a list of formatted prompts.

    Notes
    -----
    - The function assumes the existence of a global `prompt` variable, which is a
      formatting string used to combine the reference and text.
    - The function also assumes the existence of a global `EOS_TOKEN` variable,
      which is appended to the end of each formatted prompt.
    - The input lists 'ref' and 'texte' are expected to have the same length.

    Examples
    --------
    >>> examples = {
    ...     'ref': ['Reference 1', 'Reference 2'],
    ...     'texte': ['Content 1', 'Content 2']
    ... }
    >>> formatting_prompts_func(examples)
    {'text': ['<formatted_prompt_1><EOS>', '<formatted_prompt_2><EOS>']}
    """
    refs = examples["ref"]
    texts = examples["texte"]
    outputs = []

    for ref, text in zip(refs, texts):
        text = prompt.format(ref, text) + EOS_TOKEN
        outputs.append(text)

    return {
        "text": outputs,
    }


cpt_dataset = load_dataset(
    "louisbrulenaudet/Romulus-cpt-fr",
    split="train",
    token="hf_token",  # Placeholder: replace with your Hugging Face access token.
)

# Build the "text" column consumed by the trainer.
cpt_dataset = cpt_dataset.map(
    formatting_prompts_func,
    batched=True,
)

trainer = UnslothTrainer(
    model=model,
    tokenizer=tokenizer,
    train_dataset=cpt_dataset,
    dataset_text_field="text",
    max_seq_length=max_seq_length,
    dataset_num_proc=2,
    args=UnslothTrainingArguments(
        per_device_train_batch_size=8,
        gradient_accumulation_steps=8,
        warmup_ratio=0.1,
        num_train_epochs=1,
        learning_rate=5e-5,
        embedding_learning_rate=1e-5,
        fp16=not is_bfloat16_supported(),
        bf16=is_bfloat16_supported(),
        logging_steps=1,
        report_to="wandb",
        save_steps=350,
        run_name="romulus-cpt",
        optim="adamw_8bit",
        weight_decay=0.01,
        lr_scheduler_type="linear",
        seed=3407,
        output_dir="outputs",
    ),
)

trainer_stats = trainer.train()
```
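
To make the training format concrete, the sketch below reproduces the prompt template and the formatting logic from the script on a single made-up record, without loading any model. The `EOS_TOKEN` value is a stand-in (the script reads it from `tokenizer.eos_token`), and both `format_batch` and the sample record are hypothetical names and data used only for illustration.

```python
# Same template as in the training script.
PROMPT = """### Référence :
{}
### Contenu :
{}"""

# Stand-in value; the training script uses tokenizer.eos_token instead.
EOS_TOKEN = "<|end_of_text|>"


def format_batch(examples):
    """Build the 'text' column from batched 'ref' and 'texte' columns."""
    return {
        "text": [
            PROMPT.format(ref, texte) + EOS_TOKEN
            for ref, texte in zip(examples["ref"], examples["texte"])
        ]
    }


# Made-up record in the batched (column-wise) layout that `map` passes
# to the function when `batched=True`.
batch = {"ref": ["Exemple de référence"], "texte": ["Exemple de contenu"]}
formatted = format_batch(batch)
print(formatted["text"][0])
```

Each training sample is therefore a reference header, the legal text, and a closing end-of-sequence token, which lets the model learn where a document ends.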

|

| 185 |

+

|

| 186 |

+

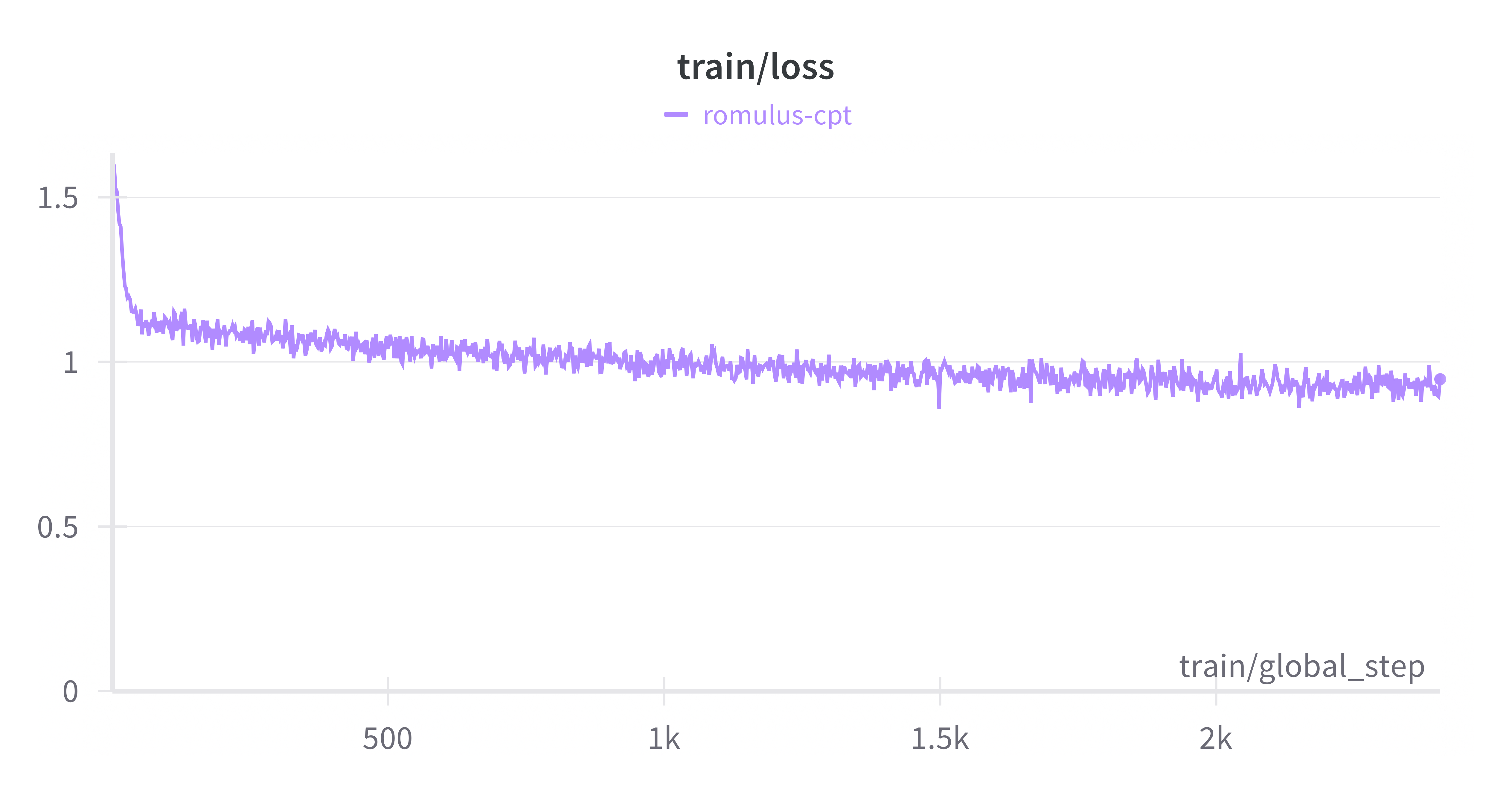

<img src="assets/loss.png">
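
When reading the loss curve, it can help to keep the learning-rate schedule in mind: the script uses a linear scheduler with `warmup_ratio=0.1` and a base learning rate of `5e-5`. The sketch below mirrors the usual linear warmup followed by linear decay; `linear_schedule_lr` is a hypothetical helper and `total_steps = 100` is a made-up value, since the source does not state the number of optimizer steps. The separate `embedding_learning_rate` is not modeled here.

```python
import math


def linear_schedule_lr(step, total_steps, base_lr=5e-5, warmup_ratio=0.1):
    """Learning rate at `step` for linear warmup followed by linear decay."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr over the warmup phase.
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr to 0 over the remaining steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


total_steps = 100  # Hypothetical: the real step count is not given in the source.
lrs = [linear_schedule_lr(step, total_steps) for step in range(total_steps + 1)]

for step in (0, 5, 10, 55, 100):
    print(step, lrs[step])
```

Under these assumptions the rate peaks at the end of warmup (step 10 of 100) and reaches zero at the final step.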

|

| 187 |

+

|

| 188 |

+

## Citing & Authors

|

| 189 |

+

|

| 190 |

+

If you use this code in your research, please cite it using the following BibTeX entry.

|

| 191 |

+

|

| 192 |

+

```BibTeX

|

| 193 |

+

@misc{louisbrulenaudet2024,

|

| 194 |

+

author = {Louis Brulé Naudet},

|

| 195 |

+

title = {Romulus, continued pre-trained models for French law},

|

| 196 |

+

year = {2024},

|

| 197 |

+

howpublished = {\url{https://huggingface.co/datasets/louisbrulenaudet/Romulus-cpt-fr}},

|

| 198 |

+

}

|

| 199 |

+

```

|

| 200 |

+

|

| 201 |

+

## Feedback

|

| 202 |

+

|

| 203 |

+

If you have any feedback, please reach out at [[email protected]](mailto:[email protected]).

|

assets/loss.png

ADDED

|

assets/thumbnail.webp

ADDED

|