Vision-Language-Vision Auto-Encoder: Scalable Knowledge Distillation from Diffusion Models

Abstract

The VLV auto-encoder framework uses pretrained vision and text models to create a cost-effective and data-efficient captioning system.

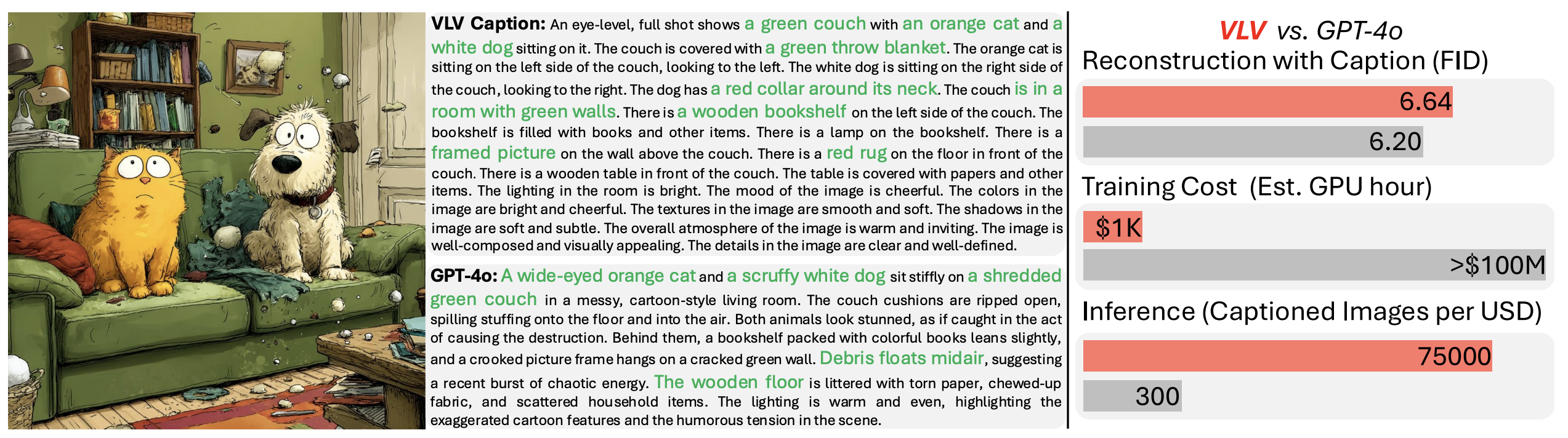

Building state-of-the-art Vision-Language Models (VLMs) with strong captioning capabilities typically necessitates training on billions of high-quality image-text pairs, requiring millions of GPU hours. This paper introduces the Vision-Language-Vision (VLV) auto-encoder framework, which strategically leverages key pretrained components: a vision encoder, the decoder of a Text-to-Image (T2I) diffusion model, and subsequently, a Large Language Model (LLM). Specifically, we establish an information bottleneck by regularizing the language representation space, achieved through freezing the pretrained T2I diffusion decoder. Our VLV pipeline effectively distills knowledge from the text-conditioned diffusion model using continuous embeddings, demonstrating comprehensive semantic understanding via high-quality reconstructions. Furthermore, by fine-tuning a pretrained LLM to decode the intermediate language representations into detailed descriptions, we construct a state-of-the-art (SoTA) captioner comparable to leading models like GPT-4o and Gemini 2.0 Flash. Our method demonstrates exceptional cost-efficiency and significantly reduces data requirements; by primarily utilizing single-modal images for training and maximizing the utility of existing pretrained models (image encoder, T2I diffusion model, and LLM), it circumvents the need for massive paired image-text datasets, keeping the total training expenditure under $1,000 USD.

Community

We build state-of-the-art captioner models through scalable knowledge distillation from diffusion models.

Project page: https://lambert-x.github.io/Vision-Language-Vision/

Code: https://github.com/Tiezheng11/Vision-Language-Vision/tree/main

Checkpoint: https://huggingface.co/lambertxiao/Vision-Language-Vision-Captioner-Qwen2.5-3B

Dataset: https://huggingface.co/datasets/ccvl/LAION-High-Qualtiy-Pro-6M-VLV