---
library_name: transformers
license: apache-2.0
base_model:
- Qwen/Qwen2.5-Math-1.5B
---

# Qwen2.5-Math-1.5B-Oat-Zero

## Links

- 📜 [Paper](https://github.com/sail-sg/understand-r1-zero/blob/main/understand-r1-zero.pdf)

- 💻 [GitHub](https://github.com/sail-sg/understand-r1-zero)

- 🤗 [Oat-Zero Collection](https://huggingface.co/collections/sail/oat-zero-understanding-r1-zero-like-training-67dcdb07b9f3eb05f1501c4a)

## Introduction

This model is trained with the minimalist R1-Zero recipe introduced in our paper:

- **Algorithm**: Dr. GRPO

- **Data**: Level 3-5 questions from the MATH dataset

- **Base model**: [Qwen/Qwen2.5-Math-1.5B](https://huggingface.co/Qwen/Qwen2.5-Math-1.5B)

- **Template**: Qwen-Math

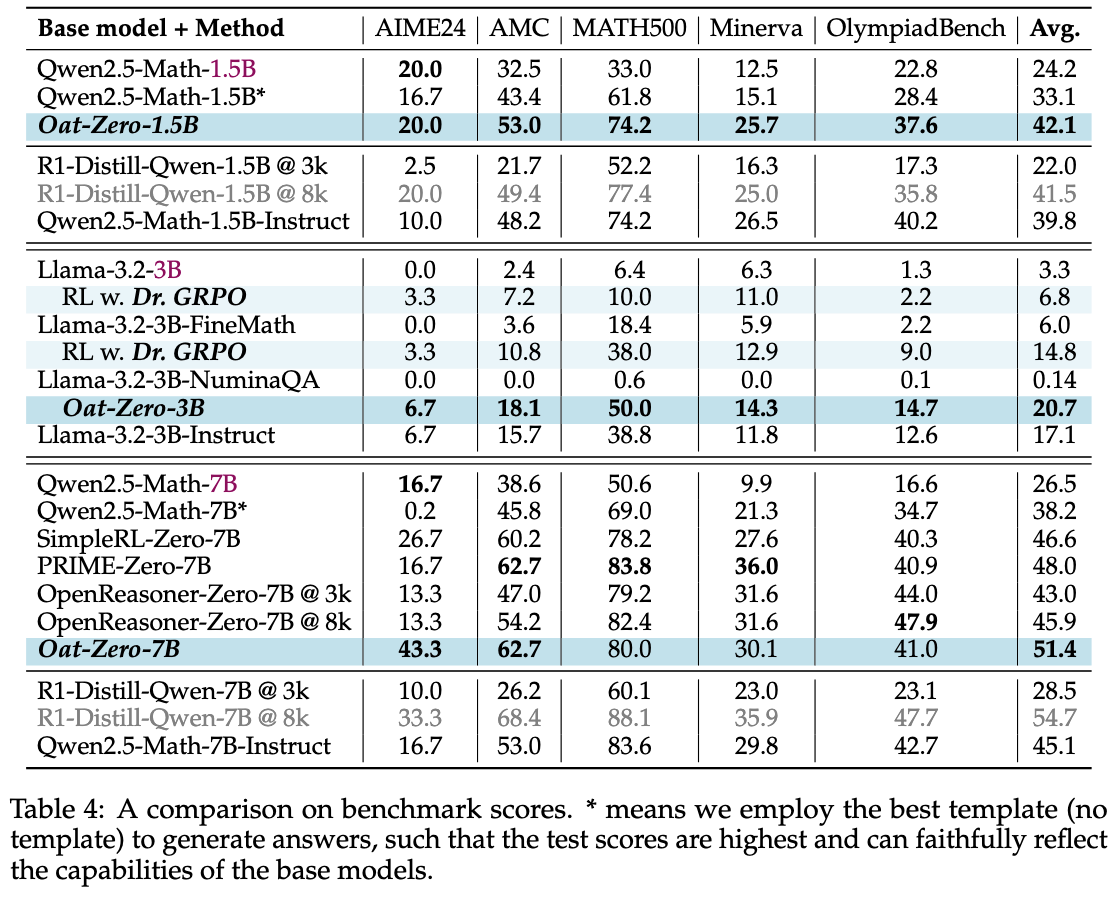

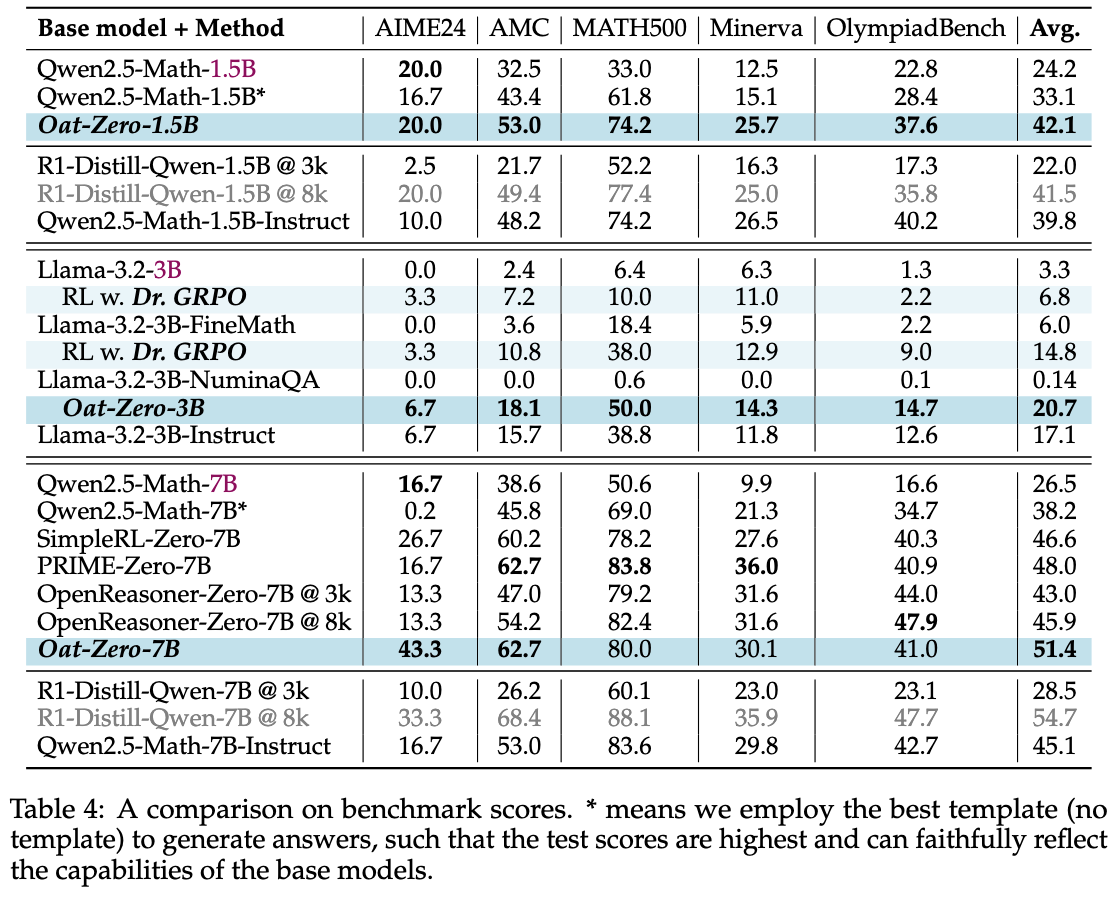

Evaluation results on widely used math benchmarks are shown below:

## Usage

```python
import vllm


def apply_qwen_math_template(question: str):
    # Chat-style prompt used for the Qwen-Math based models.
    return (
        "<|im_start|>system\nPlease reason step by step, and put your final answer within \\boxed{}.<|im_end|>\n<|im_start|>user\n"
        + question
        + "<|im_end|>\n<|im_start|>assistant\n"
    )


def apply_r1_template(question: str):
    # R1-style prompt with explicit <think> / <answer> tags.
    return (
        "A conversation between User and Assistant. The User asks a question, and the Assistant solves it. The Assistant first thinks about the reasoning process in the mind and then provides the User with the answer. "
        "The reasoning process is enclosed within <think> </think> and answer is enclosed within <answer> </answer> tags, respectively, i.e., <think> reasoning process here </think> <answer> answer here </answer>.\nUser: "
        + question
        + "\nAssistant: <think>"
    )


model_name = "sail/Qwen2.5-Math-1.5B-Oat-Zero"

# Greedy decoding: a single sample per prompt at temperature 0.
sampling_params = vllm.SamplingParams(
    n=1,
    temperature=0,
    top_p=1,
    max_tokens=3000,
)

model = vllm.LLM(
    model_name,
    max_model_len=4096,
    dtype="bfloat16",
    enable_prefix_caching=True,
)

# Pick the prompt template that matches the checkpoint.
if "Llama-3.2-3B-Oat-Zero" in model_name:
    apply_template = apply_r1_template
else:
    apply_template = apply_qwen_math_template

prompts = [
    "How many positive whole-number divisors does 196 have?"
]
prompts = list(map(apply_template, prompts))

outputs = model.generate(prompts, sampling_params)
print(outputs)
```
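The Qwen-Math template above asks the model to put its final answer within `\boxed{}`, so a common follow-up step is to pull that answer out of the generated text. The snippet below is a minimal sketch of one way to do this; the helper name `extract_boxed_answer` and the `sample` string are hypothetical and are not part of the released code. With vLLM, the generated text for the first prompt is available as `outputs[0].outputs[0].text`.

```python
from typing import Optional


def extract_boxed_answer(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in text, or None if absent.

    Hypothetical helper for illustration; it tracks brace depth so that
    nested braces such as \\boxed{\\frac{1}{2}} are handled.
    """
    marker = "\\boxed{"
    start = text.rfind(marker)
    if start == -1:
        return None
    i = start + len(marker)
    depth = 1  # already inside the opening brace of \boxed{
    chars = []
    while i < len(text):
        ch = text[i]
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars)
        chars.append(ch)
        i += 1
    return None  # unbalanced braces: treat as no answer found


# Hypothetical completion text, standing in for outputs[0].outputs[0].text.
sample = "196 = 2^2 * 7^2, so it has (2+1)(2+1) = \\boxed{9} divisors."
answer = extract_boxed_answer(sample)
print(answer)
```

Taking the last `\boxed{}` occurrence is a design choice made here on the assumption that the final answer comes at the end of the reasoning; the evaluation scripts in the GitHub repository may parse answers differently.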

## Citation

```bibtex
@misc{liu2025understanding,
  title={Understanding R1-Zero-Like Training: A Critical Perspective},
  author={Zichen Liu and Changyu Chen and Wenjun Li and Penghui Qi and Tianyu Pang and Chao Du and Wee Sun Lee and Min Lin},
  year={2025},
  howpublished={\url{https://github.com/sail-sg/understand-r1-zero}},
}
```
