
Upload 44 files

- .gitattributes +3 -0

- data/.gitignore +3 -0

- data/facenet-pytorch-banner.png +0 -0

- data/multiface.jpg +0 -0

- data/multiface_detected.png +3 -0

- data/onet.pt +3 -0

- data/pnet.pt +3 -0

- data/rnet.pt +3 -0

- data/test_images/angelina_jolie/1.jpg +0 -0

- data/test_images/angelina_jolie/angelina_jolie.pt +3 -0

- data/test_images/bo_vinh/bo_vinh.pt +3 -0

- data/test_images/brad_pitt/brad_pitt.pt +3 -0

- data/test_images/bradley_cooper/1.jpg +3 -0

- data/test_images/bradley_cooper/bradley_cooper.pt +3 -0

- data/test_images/chau_anh/chau_anh.pt +3 -0

- data/test_images/daniel_radcliffe/daniel_radcliffe.pt +3 -0

- data/test_images/hermione_granger/hermione_granger.pt +3 -0

- data/test_images/hien/hien.pt +3 -0

- data/test_images/kate_siegel/1.jpg +0 -0

- data/test_images/kate_siegel/kate_siegel.pt +3 -0

- data/test_images/khanh/khanh.pt +3 -0

- data/test_images/me_hoa/me_hoa.pt +3 -0

- data/test_images/ny_khanh/ny_khanh.pt +3 -0

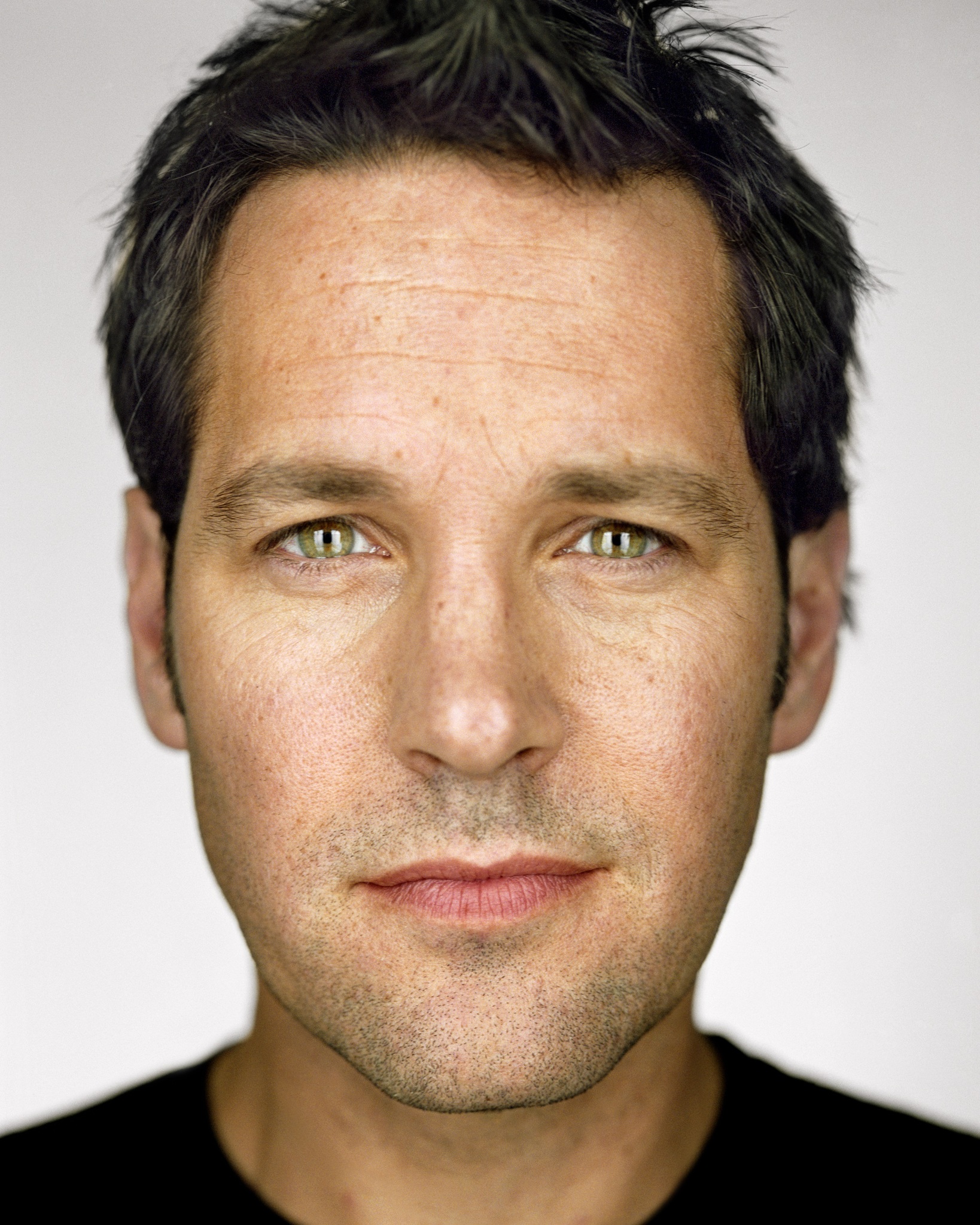

- data/test_images/paul_rudd/1.jpg +0 -0

- data/test_images/paul_rudd/paul_rudd.pt +3 -0

- data/test_images/ron_weasley/ron_weasley.pt +3 -0

- data/test_images/shea_whigham/1.jpg +3 -0

- data/test_images/shea_whigham/shea_whigham.pt +3 -0

- data/test_images/tu_linh/tu_linh.pt +3 -0

- data/test_images_2/angelina_jolie_brad_pitt/1.jpg +0 -0

- data/test_images_2/bong_chanh/1.jpg +0 -0

- data/test_images_2/bong_chanh/2.jpg +0 -0

- data/test_images_2/khanh_va_ny/1.jpg +0 -0

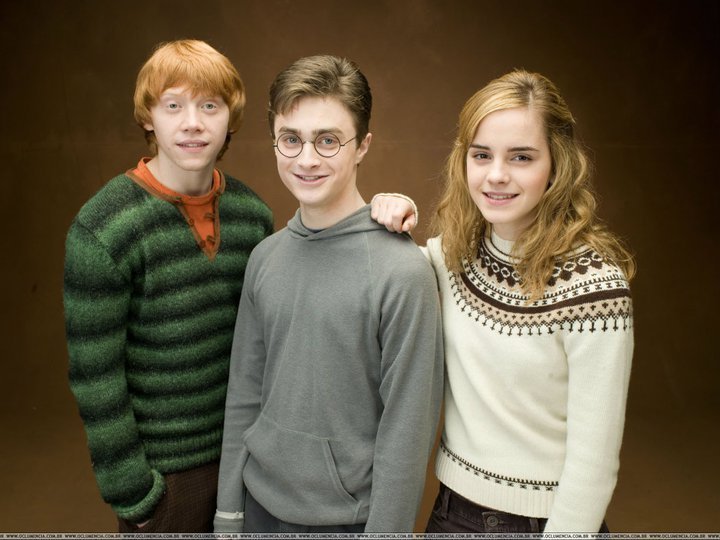

- data/test_images_2/the_golden_trio/1.jpg +0 -0

- data/test_images_aligned/angelina_jolie/1.png +0 -0

- data/test_images_aligned/bradley_cooper/1.png +0 -0

- data/test_images_aligned/kate_siegel/1.png +0 -0

- data/test_images_aligned/paul_rudd/1.png +0 -0

- data/test_images_aligned/shea_whigham/1.png +0 -0

- models/inception_resnet_v1.py +340 -0

- models/mtcnn.py +519 -0

- models/utils/detect_face.py +378 -0

- models/utils/download.py +102 -0

- models/utils/tensorflow2pytorch.py +416 -0

- models/utils/training.py +144 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+data/multiface_detected.png filter=lfs diff=lfs merge=lfs -text
+data/test_images/bradley_cooper/1.jpg filter=lfs diff=lfs merge=lfs -text
+data/test_images/shea_whigham/1.jpg filter=lfs diff=lfs merge=lfs -text
```

data/.gitignore

ADDED

```diff
@@ -0,0 +1,3 @@
+2018*
+*.json
+profile.txt
```

data/facenet-pytorch-banner.png

ADDED


data/multiface.jpg

ADDED


data/multiface_detected.png

ADDED


data/onet.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:165bfbe42940416ccfb977545cf0e976d5bf321f67083ae2aaaa5c764280118d
+size 1559269
```
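Each `.pt` weight file in this commit is stored as a three-line Git LFS pointer (`version`, `oid`, `size`) rather than as the binary itself. Below is a minimal standard-library sketch that reads such a pointer and checks a fetched blob against it. The function names are hypothetical and belong to neither Git LFS nor this repository.

```python
import hashlib

LFS_V1 = "https://git-lfs.github.com/spec/v1"


def parse_lfs_pointer(text):
    """Parse a Git LFS v1 pointer into {'algo', 'oid', 'size'} (hypothetical helper)."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if fields.get("version") != LFS_V1:
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields["oid"].partition(":")
    return {"algo": algo, "oid": digest, "size": int(fields["size"])}


def blob_matches(pointer, data):
    """True if `data` has the byte size and SHA-256 digest recorded in the pointer."""
    if pointer["algo"] != "sha256":
        raise ValueError("unsupported oid algorithm: " + pointer["algo"])
    return len(data) == pointer["size"] and hashlib.sha256(data).hexdigest() == pointer["oid"]


# The pointer committed for data/onet.pt above
onet_pointer = parse_lfs_pointer(
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:165bfbe42940416ccfb977545cf0e976d5bf321f67083ae2aaaa5c764280118d\n"
    "size 1559269\n"
)
```

If a checkout still holds the short pointer text in place of the 1,559,269-byte file, `blob_matches` returns False. That makes it a cheap way to catch a clone made without `git lfs pull` before `torch.load` fails on the pointer text.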

data/pnet.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a2a71925e0b9996a42f63e47efc1ca19043e69558b5c523b978d611dfae49c8f
+size 28570
```

data/rnet.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:bbb937de72efc9ef83b186c49f5f558467a1d7e3453a8ece0d71a886633f6a86
+size 403147
```

data/test_images/angelina_jolie/1.jpg

ADDED


data/test_images/angelina_jolie/angelina_jolie.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:729a0aee6c1c8f1b7b7db49011b2a238d0d54fda993d49271f9f39d876e94a66
+size 2816
```

data/test_images/bo_vinh/bo_vinh.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4d274aaf7b361cf62066c08e4153601bdf9f8cdc2458e0112bbfc1d6c7191235
+size 2795
```

data/test_images/brad_pitt/brad_pitt.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f70ad8cf619238a4856781ac1ef9a2e7164b486adf29bcb618ac394d5725d289
+size 2801
```

data/test_images/bradley_cooper/1.jpg

ADDED


data/test_images/bradley_cooper/bradley_cooper.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a22e6311cdd61223c03c955fa48fdb348dd2f607266e1be33cdf245deb0bc2b6
+size 2816
```

data/test_images/chau_anh/chau_anh.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:6b393e3ee47643ba619cf48bf58d30aaa816f8943112c8ea24e8e682d97d3d22
+size 2798
```

data/test_images/daniel_radcliffe/daniel_radcliffe.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c5bc1e56b1b34386698721020a685b1deea3bb3870b7ea5c8043599ca607881d
+size 2822
```

data/test_images/hermione_granger/hermione_granger.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:9c94f592eb34e84957844f545d841c2559e2ed28a4956c1307d570d41c1bc4ec
+size 2822
```

data/test_images/hien/hien.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:7d6a1965b22ac8ab4441fe79dc39345612c398afb78ca58072e66e35df47cc67
+size 2722
```

data/test_images/kate_siegel/1.jpg

ADDED


data/test_images/kate_siegel/kate_siegel.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:43a64061efec1325ca8d8ab5822a0b12b974f64a610b21c699b0465b5ede7dd1
+size 2807
```

data/test_images/khanh/khanh.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f9f31fc909cf9bd67055ca9ec2a375e97c314b944e9568e2e69b1cfcca081242
+size 2725
```

data/test_images/me_hoa/me_hoa.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:64861c898832b1c5d30447c469b228d06c84439f52796fdabae90c15d1e61d03
+size 2728
```

data/test_images/ny_khanh/ny_khanh.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:dd277690d8a7a10057f3195574e764a5f17472ff1918b4ad8a2b871154778f47
+size 2798
```

data/test_images/paul_rudd/1.jpg

ADDED


data/test_images/paul_rudd/paul_rudd.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:92b5ea7bd25a17d2bdceb79a785ecab4944951555469ce27cfdf1709ba6cbdeb
+size 2801
```

data/test_images/ron_weasley/ron_weasley.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:79a4ebf96e0ed3676d0b7ec7c8d017348736097286cef03dda2fe316a25b33c1
+size 2807
```

data/test_images/shea_whigham/1.jpg

ADDED


data/test_images/shea_whigham/shea_whigham.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ab6085300a2227199b57c7eb259c973d785e31ee5e36dbddc936e07019a5eaf9
+size 2810
```

data/test_images/tu_linh/tu_linh.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c9a9afd2eaabb2e7a4808ee168ad7232c51a0bc71a1fc6d50b1ddf6c6a301fe5
+size 2795
```

data/test_images_2/angelina_jolie_brad_pitt/1.jpg

ADDED


data/test_images_2/bong_chanh/1.jpg

ADDED


data/test_images_2/bong_chanh/2.jpg

ADDED


data/test_images_2/khanh_va_ny/1.jpg

ADDED


data/test_images_2/the_golden_trio/1.jpg

ADDED


data/test_images_aligned/angelina_jolie/1.png

ADDED


data/test_images_aligned/bradley_cooper/1.png

ADDED


data/test_images_aligned/kate_siegel/1.png

ADDED


data/test_images_aligned/paul_rudd/1.png

ADDED


data/test_images_aligned/shea_whigham/1.png

ADDED


models/inception_resnet_v1.py

ADDED


@@ -0,0 +1,340 @@


```python
import os
import requests
from requests.adapters import HTTPAdapter

import torch
from torch import nn
from torch.nn import functional as F

from .utils.download import download_url_to_file


class BasicConv2d(nn.Module):

    def __init__(self, in_planes, out_planes, kernel_size, stride, padding=0):
        super().__init__()
        self.conv = nn.Conv2d(
            in_planes, out_planes,
            kernel_size=kernel_size, stride=stride,
            padding=padding, bias=False
        ) # verify bias false
        self.bn = nn.BatchNorm2d(
            out_planes,
            eps=0.001, # value found in tensorflow
            momentum=0.1, # default pytorch value
            affine=True
        )
        self.relu = nn.ReLU(inplace=False)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        x = self.relu(x)
        return x


class Block35(nn.Module):

    def __init__(self, scale=1.0):
        super().__init__()

        self.scale = scale

        self.branch0 = BasicConv2d(256, 32, kernel_size=1, stride=1)

        self.branch1 = nn.Sequential(
            BasicConv2d(256, 32, kernel_size=1, stride=1),
            BasicConv2d(32, 32, kernel_size=3, stride=1, padding=1)
        )

        self.branch2 = nn.Sequential(
            BasicConv2d(256, 32, kernel_size=1, stride=1),
            BasicConv2d(32, 32, kernel_size=3, stride=1, padding=1),
            BasicConv2d(32, 32, kernel_size=3, stride=1, padding=1)
        )

        self.conv2d = nn.Conv2d(96, 256, kernel_size=1, stride=1)
        self.relu = nn.ReLU(inplace=False)

    def forward(self, x):
        x0 = self.branch0(x)
        x1 = self.branch1(x)
        x2 = self.branch2(x)
        out = torch.cat((x0, x1, x2), 1)
        out = self.conv2d(out)
        out = out * self.scale + x
        out = self.relu(out)
        return out


class Block17(nn.Module):

    def __init__(self, scale=1.0):
        super().__init__()

        self.scale = scale

        self.branch0 = BasicConv2d(896, 128, kernel_size=1, stride=1)

        self.branch1 = nn.Sequential(
            BasicConv2d(896, 128, kernel_size=1, stride=1),
            BasicConv2d(128, 128, kernel_size=(1,7), stride=1, padding=(0,3)),
            BasicConv2d(128, 128, kernel_size=(7,1), stride=1, padding=(3,0))
        )

        self.conv2d = nn.Conv2d(256, 896, kernel_size=1, stride=1)
        self.relu = nn.ReLU(inplace=False)

    def forward(self, x):
        x0 = self.branch0(x)
        x1 = self.branch1(x)
        out = torch.cat((x0, x1), 1)
        out = self.conv2d(out)
        out = out * self.scale + x
        out = self.relu(out)
        return out


class Block8(nn.Module):

    def __init__(self, scale=1.0, noReLU=False):
        super().__init__()

        self.scale = scale
        self.noReLU = noReLU

        self.branch0 = BasicConv2d(1792, 192, kernel_size=1, stride=1)

        self.branch1 = nn.Sequential(
            BasicConv2d(1792, 192, kernel_size=1, stride=1),
            BasicConv2d(192, 192, kernel_size=(1,3), stride=1, padding=(0,1)),
            BasicConv2d(192, 192, kernel_size=(3,1), stride=1, padding=(1,0))
        )

        self.conv2d = nn.Conv2d(384, 1792, kernel_size=1, stride=1)
        if not self.noReLU:
            self.relu = nn.ReLU(inplace=False)

    def forward(self, x):
        x0 = self.branch0(x)
        x1 = self.branch1(x)
        out = torch.cat((x0, x1), 1)
        out = self.conv2d(out)
        out = out * self.scale + x
        if not self.noReLU:
            out = self.relu(out)
        return out


class Mixed_6a(nn.Module):

    def __init__(self):
        super().__init__()

        self.branch0 = BasicConv2d(256, 384, kernel_size=3, stride=2)

        self.branch1 = nn.Sequential(
            BasicConv2d(256, 192, kernel_size=1, stride=1),
            BasicConv2d(192, 192, kernel_size=3, stride=1, padding=1),
            BasicConv2d(192, 256, kernel_size=3, stride=2)
        )

        self.branch2 = nn.MaxPool2d(3, stride=2)

    def forward(self, x):
        x0 = self.branch0(x)
        x1 = self.branch1(x)
        x2 = self.branch2(x)
        out = torch.cat((x0, x1, x2), 1)
        return out


class Mixed_7a(nn.Module):

    def __init__(self):
        super().__init__()

        self.branch0 = nn.Sequential(
            BasicConv2d(896, 256, kernel_size=1, stride=1),
            BasicConv2d(256, 384, kernel_size=3, stride=2)
        )

        self.branch1 = nn.Sequential(
            BasicConv2d(896, 256, kernel_size=1, stride=1),
            BasicConv2d(256, 256, kernel_size=3, stride=2)
        )

        self.branch2 = nn.Sequential(
            BasicConv2d(896, 256, kernel_size=1, stride=1),
            BasicConv2d(256, 256, kernel_size=3, stride=1, padding=1),
            BasicConv2d(256, 256, kernel_size=3, stride=2)
        )

        self.branch3 = nn.MaxPool2d(3, stride=2)

    def forward(self, x):
        x0 = self.branch0(x)
        x1 = self.branch1(x)
        x2 = self.branch2(x)
        x3 = self.branch3(x)
        out = torch.cat((x0, x1, x2, x3), 1)
        return out
```
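The channel counts hard-coded in these blocks have to line up. Each residual block concatenates its branches and uses `conv2d` to project them back to its input width, which keeps `out * self.scale + x` shape-compatible. The two reduction blocks concatenate their branches along the channel axis. Below is a pure-Python sketch of that bookkeeping. The 160x160 input size is an assumption; because the network ends in `nn.AdaptiveAvgPool2d(1)`, other sizes also work. The stem layer list is copied from `InceptionResnetV1.__init__` later in this file, and the helper names are hypothetical.

```python
def conv_out(n, k, s=1, p=0):
    """Output size of nn.Conv2d / nn.MaxPool2d along one axis (dilation 1, ceil_mode=False)."""
    return (n + 2 * p - k) // s + 1


# (branch output widths, conv2d in_channels, block width) for each residual block
RESIDUAL_BLOCKS = {
    "Block35": ([32, 32, 32], 96, 256),
    "Block17": ([128, 128], 256, 896),
    "Block8": ([192, 192], 384, 1792),
}
for name, (branches, conv_in, width) in RESIDUAL_BLOCKS.items():
    assert sum(branches) == conv_in, name


def trace_shapes(size=160):
    """Return (spatial size, channels) after the stem, mixed_6a and mixed_7a."""
    n = conv_out(size, 3, s=2)  # conv2d_1a
    n = conv_out(n, 3)          # conv2d_2a
    n = conv_out(n, 3, p=1)     # conv2d_2b
    n = conv_out(n, 3, s=2)     # maxpool_3a
    n = conv_out(n, 1)          # conv2d_3b
    n = conv_out(n, 3)          # conv2d_4a
    n = conv_out(n, 3, s=2)     # conv2d_4b: 256 channels into repeat_1 (Block35 keeps the size)
    stem = (n, 256)
    # Mixed_6a: every branch ends in an unpadded 3x3 stride-2 op; MaxPool2d passes 256 channels through
    n = conv_out(n, 3, s=2)
    mixed_6a = (n, 384 + 256 + 256)
    # Mixed_7a: same reduction; MaxPool2d passes the 896 incoming channels through
    n = conv_out(n, 3, s=2)
    mixed_7a = (n, 384 + 256 + 256 + mixed_6a[1])
    return stem, mixed_6a, mixed_7a
```

`trace_shapes(160)` returns `((17, 256), (8, 896), (3, 1792))`. The 896 and 1792 match the input widths of `Block17` and `Block8`, and 1792 is also the input width of `last_linear` after global average pooling.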

```python
class InceptionResnetV1(nn.Module):
    """Inception Resnet V1 model with optional loading of pretrained weights.

    Model parameters can be loaded based on pretraining on the VGGFace2 or CASIA-Webface
    datasets. Pretrained state_dicts are automatically downloaded on model instantiation if
    requested and cached in the torch cache. Subsequent instantiations use the cache rather than
    redownloading.

    Keyword Arguments:
        pretrained {str} -- Optional pretraining dataset. Either 'vggface2' or 'casia-webface'.
            (default: {None})
        classify {bool} -- Whether the model should output classification probabilities or feature
            embeddings. (default: {False})
        num_classes {int} -- Number of output classes. If 'pretrained' is set and num_classes not
            equal to that used for the pretrained model, the final linear layer will be randomly
            initialized. (default: {None})
        dropout_prob {float} -- Dropout probability. (default: {0.6})
    """
    def __init__(self, pretrained=None, classify=False, num_classes=None, dropout_prob=0.6, device=None):
        super().__init__()

        # Set simple attributes
        self.pretrained = pretrained
        self.classify = classify
        self.num_classes = num_classes

        if pretrained == 'vggface2':
            tmp_classes = 8631
        elif pretrained == 'casia-webface':
            tmp_classes = 10575
        elif pretrained is None and self.classify and self.num_classes is None:
            raise Exception('If "pretrained" is not specified and "classify" is True, "num_classes" must be specified')


        # Define layers
        self.conv2d_1a = BasicConv2d(3, 32, kernel_size=3, stride=2)
        self.conv2d_2a = BasicConv2d(32, 32, kernel_size=3, stride=1)
        self.conv2d_2b = BasicConv2d(32, 64, kernel_size=3, stride=1, padding=1)
        self.maxpool_3a = nn.MaxPool2d(3, stride=2)
        self.conv2d_3b = BasicConv2d(64, 80, kernel_size=1, stride=1)
        self.conv2d_4a = BasicConv2d(80, 192, kernel_size=3, stride=1)
        self.conv2d_4b = BasicConv2d(192, 256, kernel_size=3, stride=2)
        self.repeat_1 = nn.Sequential(
            Block35(scale=0.17),
            Block35(scale=0.17),
            Block35(scale=0.17),
            Block35(scale=0.17),
            Block35(scale=0.17),
        )
        self.mixed_6a = Mixed_6a()
        self.repeat_2 = nn.Sequential(
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
            Block17(scale=0.10),
        )
        self.mixed_7a = Mixed_7a()
        self.repeat_3 = nn.Sequential(
            Block8(scale=0.20),
            Block8(scale=0.20),
            Block8(scale=0.20),
            Block8(scale=0.20),
            Block8(scale=0.20),
        )
        self.block8 = Block8(noReLU=True)
        self.avgpool_1a = nn.AdaptiveAvgPool2d(1)
        self.dropout = nn.Dropout(dropout_prob)
        self.last_linear = nn.Linear(1792, 512, bias=False)
        self.last_bn = nn.BatchNorm1d(512, eps=0.001, momentum=0.1, affine=True)

        if pretrained is not None:
            self.logits = nn.Linear(512, tmp_classes)
            load_weights(self, pretrained)

        if self.classify and self.num_classes is not None:
            self.logits = nn.Linear(512, self.num_classes)

        self.device = torch.device('cpu')
        if device is not None:
            self.device = device
            self.to(device)

    def forward(self, x):
        """Calculate embeddings or logits given a batch of input image tensors.

        Arguments:
            x {torch.tensor} -- Batch of image tensors representing faces.

        Returns:
            torch.tensor -- Batch of embedding vectors or multinomial logits.
        """
        x = self.conv2d_1a(x)
        x = self.conv2d_2a(x)
        x = self.conv2d_2b(x)
        x = self.maxpool_3a(x)
        x = self.conv2d_3b(x)
        x = self.conv2d_4a(x)
        x = self.conv2d_4b(x)
        x = self.repeat_1(x)
        x = self.mixed_6a(x)
        x = self.repeat_2(x)
        x = self.mixed_7a(x)
        x = self.repeat_3(x)
        x = self.block8(x)
        x = self.avgpool_1a(x)
        x = self.dropout(x)
        x = self.last_linear(x.view(x.shape[0], -1))
        x = self.last_bn(x)
        if self.classify:
            x = self.logits(x)
        else:
            x = F.normalize(x, p=2, dim=1)
        return x
```
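With `classify=False`, `forward` returns `F.normalize(x, p=2, dim=1)`, so every 512-d embedding has unit L2 norm. For unit vectors, Euclidean distance and cosine similarity carry the same information, since d^2 = 2 - 2*cos(theta). Below is a pure-Python sketch that compares two embeddings. The helper names are hypothetical. No match threshold is given because one would have to be tuned on labelled pairs. With batched tensors, the same distance is `(emb_a - emb_b).norm(dim=1)`.

```python
import math


def l2_normalize(v, eps=1e-12):
    """Divide by max(||v||_2, eps), as F.normalize(x, p=2, dim=1) does for one row."""
    norm = max(math.sqrt(sum(x * x for x in v)), eps)
    return [x / norm for x in v]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine(a, b):
    # A plain dot product equals cosine similarity only for unit-norm inputs
    return sum(x * y for x, y in zip(a, b))


def compare(emb_a, emb_b):
    """Return (euclidean distance, cosine similarity) after re-normalizing both embeddings."""
    a, b = l2_normalize(emb_a), l2_normalize(emb_b)
    return euclidean(a, b), cosine(a, b)
```

Re-normalizing inside `compare` is redundant for outputs taken directly from `forward`. It guards against embeddings that were averaged or otherwise post-processed before comparison.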

```python
def load_weights(mdl, name):
    """Download pretrained state_dict and load into model.

    Arguments:
        mdl {torch.nn.Module} -- Pytorch model.
        name {str} -- Name of dataset that was used to generate pretrained state_dict.

    Raises:
        ValueError: If 'pretrained' not equal to 'vggface2' or 'casia-webface'.
    """
    if name == 'vggface2':
        path = 'https://github.com/timesler/facenet-pytorch/releases/download/v2.2.9/20180402-114759-vggface2.pt'
    elif name == 'casia-webface':
        path = 'https://github.com/timesler/facenet-pytorch/releases/download/v2.2.9/20180408-102900-casia-webface.pt'
    else:
        raise ValueError('Pretrained models only exist for "vggface2" and "casia-webface"')

    model_dir = os.path.join(get_torch_home(), 'checkpoints')
    os.makedirs(model_dir, exist_ok=True)

    cached_file = os.path.join(model_dir, os.path.basename(path))
    if not os.path.exists(cached_file):
        download_url_to_file(path, cached_file)

    state_dict = torch.load(cached_file)
    mdl.load_state_dict(state_dict)


def get_torch_home():
    torch_home = os.path.expanduser(
        os.getenv(
            'TORCH_HOME',
            os.path.join(os.getenv('XDG_CACHE_HOME', '~/.cache'), 'torch')
        )
    )
    return torch_home
```
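`load_weights` downloads each checkpoint at most once. The cached file is named after the last path segment of the release URL and stored under `$TORCH_HOME/checkpoints`. If `TORCH_HOME` is unset, the base falls back to `$XDG_CACHE_HOME/torch` and then to `~/.cache/torch`. Below is a sketch of the same lookup that takes the environment and the downloader as arguments, so it can be exercised without network access. `resolve_torch_home`, `ensure_cached` and the `fetch` callback are hypothetical stand-ins for `get_torch_home` and `download_url_to_file`.

```python
import os


def resolve_torch_home(env=None):
    """Same precedence as get_torch_home(): TORCH_HOME, then XDG_CACHE_HOME/torch, then ~/.cache/torch."""
    env = os.environ if env is None else env
    default = os.path.join(env.get("XDG_CACHE_HOME", "~/.cache"), "torch")
    return os.path.expanduser(env.get("TORCH_HOME", default))


def ensure_cached(url, fetch, env=None):
    """Return the local checkpoint path for `url`, calling fetch(url, path) only on a cache miss."""
    model_dir = os.path.join(resolve_torch_home(env), "checkpoints")
    os.makedirs(model_dir, exist_ok=True)
    cached_file = os.path.join(model_dir, os.path.basename(url))
    if not os.path.exists(cached_file):
        fetch(url, cached_file)
    return cached_file
```

The cache is keyed on the file name alone. If a release ever replaced a checkpoint under the same name, an existing cached copy would keep being loaded until someone deleted it by hand.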

models/mtcnn.py

ADDED


@@ -0,0 +1,519 @@


| 1 |

+

import torch

|

| 2 |

+

from torch import nn

|

| 3 |

+

import numpy as np

|

| 4 |

+

import os

|

| 5 |

+

|

| 6 |

+

from .utils.detect_face import detect_face, extract_face

|

| 7 |

+

|

| 8 |

+

|

class PNet(nn.Module):
    """MTCNN PNet.

    Keyword Arguments:
        pretrained {bool} -- Whether or not to load saved pretrained weights (default: {True})
    """

    def __init__(self, pretrained=True):
        super().__init__()

        self.conv1 = nn.Conv2d(3, 10, kernel_size=3)
        self.prelu1 = nn.PReLU(10)
        self.pool1 = nn.MaxPool2d(2, 2, ceil_mode=True)
        self.conv2 = nn.Conv2d(10, 16, kernel_size=3)
        self.prelu2 = nn.PReLU(16)
        self.conv3 = nn.Conv2d(16, 32, kernel_size=3)
        self.prelu3 = nn.PReLU(32)
        self.conv4_1 = nn.Conv2d(32, 2, kernel_size=1)  # face / non-face scores
        self.softmax4_1 = nn.Softmax(dim=1)
        self.conv4_2 = nn.Conv2d(32, 4, kernel_size=1)  # bounding-box regression offsets

        self.training = False

        if pretrained:
            state_dict_path = os.path.join(os.path.dirname(__file__), '../data/pnet.pt')
            state_dict = torch.load(state_dict_path)
            self.load_state_dict(state_dict)

    def forward(self, x):
        x = self.conv1(x)
        x = self.prelu1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.prelu2(x)
        x = self.conv3(x)
        x = self.prelu3(x)
        a = self.conv4_1(x)
        a = self.softmax4_1(a)
        b = self.conv4_2(x)
        # (box regression map, face-probability map)
        return b, a

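PNet has no dense layers, so it accepts an image of any size and returns a map of scores rather than a single score. The plain-Python sketch below traces one spatial dimension through the layers defined above (3x3 conv, 2x2 stride-2 max-pool with `ceil_mode=True`, two more 3x3 convs). The helper names are hypothetical, and the pooling formula is the standard PyTorch output-size rule for zero padding; it is an illustration, not code from this repo.

```python
import math


def conv_out(size: int, kernel: int) -> int:
    # Unpadded, stride-1 convolution (the nn.Conv2d settings used in PNet)
    return size - kernel + 1


def pool_out(size: int, kernel: int, stride: int) -> int:
    # nn.MaxPool2d with padding=0 and ceil_mode=True
    out = math.ceil((size - kernel) / stride) + 1
    # PyTorch drops a last window that would start outside the input
    if (out - 1) * stride >= size:
        out -= 1
    return out


def pnet_map_size(h: int, w: int) -> tuple:
    """Spatial size of PNet's output maps for an h x w input (hypothetical helper)."""

    def trace(s: int) -> int:
        s = conv_out(s, 3)     # conv1
        s = pool_out(s, 2, 2)  # pool1
        s = conv_out(s, 3)     # conv2
        s = conv_out(s, 3)     # conv3 (the 1x1 heads keep this size)
        return s

    return trace(h), trace(w)


print(pnet_map_size(12, 12))   # (1, 1): a 12x12 crop yields a single score
print(pnet_map_size(100, 60))  # (45, 25)
```

Working the layers backwards gives each output cell a 12x12 receptive field, and `pool1` is the only strided layer, so neighbouring cells look at windows 2 pixels apart. Finding faces larger than 12 pixels therefore depends on rescaling the input, which is what the `min_face_size` and `factor` parameters of `MTCNN` govern.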

class RNet(nn.Module):
    """MTCNN RNet.

    Keyword Arguments:
        pretrained {bool} -- Whether or not to load saved pretrained weights (default: {True})
    """

    def __init__(self, pretrained=True):
        super().__init__()

        self.conv1 = nn.Conv2d(3, 28, kernel_size=3)
        self.prelu1 = nn.PReLU(28)
        self.pool1 = nn.MaxPool2d(3, 2, ceil_mode=True)
        self.conv2 = nn.Conv2d(28, 48, kernel_size=3)
        self.prelu2 = nn.PReLU(48)
        self.pool2 = nn.MaxPool2d(3, 2, ceil_mode=True)
        self.conv3 = nn.Conv2d(48, 64, kernel_size=2)
        self.prelu3 = nn.PReLU(64)
        self.dense4 = nn.Linear(576, 128)
        self.prelu4 = nn.PReLU(128)
        self.dense5_1 = nn.Linear(128, 2)  # face / non-face scores
        self.softmax5_1 = nn.Softmax(dim=1)
        self.dense5_2 = nn.Linear(128, 4)  # bounding-box regression offsets

        self.training = False

        if pretrained:
            state_dict_path = os.path.join(os.path.dirname(__file__), '../data/rnet.pt')
            state_dict = torch.load(state_dict_path)
            self.load_state_dict(state_dict)

    def forward(self, x):
        x = self.conv1(x)
        x = self.prelu1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.prelu2(x)
        x = self.pool2(x)
        x = self.conv3(x)
        x = self.prelu3(x)
        # (N, C, H, W) -> (N, W, H, C) before flattening; the pretrained dense weights expect this order
        x = x.permute(0, 3, 2, 1).contiguous()
        x = self.dense4(x.view(x.shape[0], -1))
        x = self.prelu4(x)
        a = self.dense5_1(x)
        a = self.softmax5_1(a)
        b = self.dense5_2(x)
        # (box regression, face probability)
        return b, a

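`dense4 = nn.Linear(576, 128)` only fits if the convolutional trunk reduces RNet's input to a 64-channel 3x3 map (64 x 3 x 3 = 576). The sketch below checks that arithmetic in plain Python. It assumes the usual 24x24 MTCNN refinement crop (the input size is not stated in this file), and the `trace` helper and `RNET_LAYERS` list are hypothetical names written for illustration.

```python
import math


def trace(size, layers):
    """Follow one spatial dimension through unpadded conv / ceil-mode max-pool layers."""
    for kind, kernel, stride in layers:
        if kind == "conv":
            size = (size - kernel) // stride + 1
        else:  # "pool": nn.MaxPool2d(kernel, stride, ceil_mode=True) with padding 0
            out = math.ceil((size - kernel) / stride) + 1
            if (out - 1) * stride >= size:  # last window may not start outside the input
                out -= 1
            size = out
    return size


# RNet trunk as defined above: conv1, pool1, conv2, pool2, conv3 (kernel 2)
RNET_LAYERS = [("conv", 3, 1), ("pool", 3, 2), ("conv", 3, 1), ("pool", 3, 2), ("conv", 2, 1)]

side = trace(24, RNET_LAYERS)  # assumed 24x24 input: 24 -> 22 -> 11 -> 9 -> 4 -> 3
print(side, 64 * side * side)  # 3 576
```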

class ONet(nn.Module):
    """MTCNN ONet.

    Keyword Arguments:
        pretrained {bool} -- Whether or not to load saved pretrained weights (default: {True})
    """

    def __init__(self, pretrained=True):
        super().__init__()

        self.conv1 = nn.Conv2d(3, 32, kernel_size=3)
        self.prelu1 = nn.PReLU(32)
        self.pool1 = nn.MaxPool2d(3, 2, ceil_mode=True)
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3)
        self.prelu2 = nn.PReLU(64)
        self.pool2 = nn.MaxPool2d(3, 2, ceil_mode=True)
        self.conv3 = nn.Conv2d(64, 64, kernel_size=3)
        self.prelu3 = nn.PReLU(64)
        self.pool3 = nn.MaxPool2d(2, 2, ceil_mode=True)
        self.conv4 = nn.Conv2d(64, 128, kernel_size=2)
        self.prelu4 = nn.PReLU(128)
        self.dense5 = nn.Linear(1152, 256)
        self.prelu5 = nn.PReLU(256)
        self.dense6_1 = nn.Linear(256, 2)  # face / non-face scores
        self.softmax6_1 = nn.Softmax(dim=1)
        self.dense6_2 = nn.Linear(256, 4)  # bounding-box regression offsets
        self.dense6_3 = nn.Linear(256, 10)  # 5 facial landmarks (x, y)

        self.training = False

        if pretrained:
            state_dict_path = os.path.join(os.path.dirname(__file__), '../data/onet.pt')
            state_dict = torch.load(state_dict_path)
            self.load_state_dict(state_dict)

    def forward(self, x):
        x = self.conv1(x)
        x = self.prelu1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.prelu2(x)
        x = self.pool2(x)
        x = self.conv3(x)
        x = self.prelu3(x)
        x = self.pool3(x)
        x = self.conv4(x)
        x = self.prelu4(x)
        # (N, C, H, W) -> (N, W, H, C) before flattening; the pretrained dense weights expect this order
        x = x.permute(0, 3, 2, 1).contiguous()
        x = self.dense5(x.view(x.shape[0], -1))
        x = self.prelu5(x)
        a = self.dense6_1(x)
        a = self.softmax6_1(a)
        b = self.dense6_2(x)
        c = self.dense6_3(x)
        # (box regression, landmarks, face probability)
        return b, c, a

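RNet and ONet both permute their last feature map from (N, C, H, W) to (N, W, H, C) before calling `view(x.shape[0], -1)`, so the flattened vector is ordered by column, then row, with channels varying fastest. The pretrained dense-layer weights expect this ordering (it most likely mirrors the layout of the original ported model). The NumPy sketch below uses `transpose`/`reshape` as a stand-in for torch's `permute`/`contiguous`/`view` on a toy array, not real activations, to show which element lands at each flattened position.

```python
import numpy as np

# Toy (N, C, H, W) map: 2 channels, 3x3 spatial; each value equals its flat NCHW index
n, c, h, w = 1, 2, 3, 3
x = np.arange(n * c * h * w).reshape(n, c, h, w)

# NumPy equivalent of x.permute(0, 3, 2, 1).contiguous().view(n, -1)
flat = x.transpose(0, 3, 2, 1).reshape(n, -1)

# Channel varies fastest, then row, then column
print(flat[0, :6])  # [ 0  9  3 12  6 15]

# ONet's trunk reduces an (assumed) 48x48 crop to 128 channels on a 3x3 map
print(128 * 3 * 3)  # 1152, matching dense5's in_features
```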

class MTCNN(nn.Module):
    """MTCNN face detection module.

    This class loads pretrained P-, R-, and O-nets and returns images cropped to include the face
    only, given raw input images of one of the following types:
        - PIL image or list of PIL images
        - numpy.ndarray (uint8) representing either a single image (3D) or a batch of images (4D).
    Cropped faces can optionally be saved to file as well.

    Keyword Arguments:
        image_size {int} -- Output image size in pixels. The image will be square. (default: {160})
        margin {int} -- Margin to add to bounding box, in terms of pixels in the final image.
            Note that the application of the margin differs slightly from the davidsandberg/facenet
            repo, which applies the margin to the original image before resizing, making the margin
            dependent on the original image size (this is a bug in davidsandberg/facenet).
            (default: {0})
        min_face_size {int} -- Minimum face size to search for. (default: {20})
        thresholds {list} -- MTCNN face detection thresholds, one each for the P-, R-, and O-net
            stages. (default: {[0.6, 0.7, 0.7]})
        factor {float} -- Factor used to create a scaling pyramid of face sizes. (default: {0.709})
        post_process {bool} -- Whether or not to post-process image tensors before returning.
            (default: {True})
        select_largest {bool} -- If True and multiple faces are detected, the largest is returned.
            If False, the face with the highest detection probability is returned.
            (default: {True})
        selection_method {string} -- Which heuristic to use for selection. If specified, it
            overrides select_largest:
                "probability": highest probability selected
                "largest": largest box selected
                "largest_over_threshold": largest box over a certain probability selected
                "center_weighted_size": box size minus weighted squared offset from image center
            (default: {None})
        keep_all {bool} -- If True, all detected faces are returned, in the order dictated by the
            select_largest parameter. If a save_path is specified, the first face is saved to that
            path and the remaining faces are saved to <save_path>1, <save_path>2 etc.
            (default: {False})
        device {torch.device} -- The device on which to run neural net passes. Image tensors and
            models are copied to this device before running forward passes. (default: {None})
    """

    def __init__(
        self, image_size=160, margin=0, min_face_size=20,
        thresholds=[0.6, 0.7, 0.7], factor=0.709, post_process=True,
        select_largest=True, selection_method=None, keep_all=False, device=None
    ):
        super().__init__()

        self.image_size = image_size
        self.margin = margin
        self.min_face_size = min_face_size
        self.thresholds = thresholds
        self.factor = factor
        self.post_process = post_process
        self.select_largest = select_largest
        self.keep_all = keep_all
        self.selection_method = selection_method

        self.pnet = PNet()
        self.rnet = RNet()
        self.onet = ONet()

        self.device = torch.device('cpu')
        if device is not None:
            self.device = device
            self.to(device)

        if not self.selection_method:
            self.selection_method = 'largest' if self.select_largest else 'probability'

    def forward(self, img, save_path=None, return_prob=False):
        """Run MTCNN face detection on a PIL image or numpy array. This method performs both
        detection and extraction of faces, returning tensors representing detected faces rather
        than the bounding boxes. To access bounding boxes, see the MTCNN.detect() method below.

        Arguments:
            img {PIL.Image, np.ndarray, torch.Tensor, or list} -- A PIL image, np.ndarray,
                torch.Tensor, or list.

        Keyword Arguments:
            save_path {str} -- An optional save path for the cropped image. Note that when
                self.post_process=True, although the returned tensor is post-processed, the saved
                face image is not, so it is a true representation of the face in the input image.
                If `img` is a list of images, `save_path` should be a list of equal length.
                (default: {None})
            return_prob {bool} -- Whether or not to return the detection probability.
                (default: {False})

        Returns:
            Union[torch.Tensor, tuple(torch.Tensor, float)] -- If detected, cropped image of a face
                with dimensions 3 x image_size x image_size. Optionally, the probability that a
                face was detected. If self.keep_all is True, n detected faces are returned in an
                n x 3 x image_size x image_size tensor with an optional list of detection
                probabilities. If `img` is a list of images, the item(s) returned have an extra
                dimension (batch) as the first dimension.

        Example:
        >>> from facenet_pytorch import MTCNN
        >>> mtcnn = MTCNN()
        >>> face_tensor, prob = mtcnn(img, save_path='face.png', return_prob=True)
        """

        # Detect faces
        batch_boxes, batch_probs, batch_points = self.detect(img, landmarks=True)
        # Select faces
        if not self.keep_all:
            batch_boxes, batch_probs, batch_points = self.select_boxes(
                batch_boxes, batch_probs, batch_points, img, method=self.selection_method
            )
        # Extract faces
        faces = self.extract(img, batch_boxes, save_path)

        if return_prob:
            return faces, batch_probs
        else:
            return faces

    def detect(self, img, landmarks=False):
        """Detect all faces in a PIL image and return bounding boxes and optional facial landmarks.

        This method is used by the forward method and is also useful for face detection tasks
        that require lower-level handling of bounding boxes and facial landmarks (e.g., face
        tracking). The functionality of the forward function can be emulated by using this method
        followed by the extract_face() function.

        Arguments:
            img {PIL.Image, np.ndarray, torch.Tensor, or list} -- A PIL image, np.ndarray,
                torch.Tensor, or list.

        Keyword Arguments:
            landmarks {bool} -- Whether to return facial landmarks in addition to bounding boxes.
                (default: {False})

        Returns:
            tuple(numpy.ndarray, list) -- For N detected faces, a tuple containing an
                Nx4 array of bounding boxes and a length N list of detection probabilities.
                Returned boxes will be sorted in descending order by detection probability if
                self.select_largest=False, otherwise the largest face will be returned first.
                If `img` is a list of images, the items returned have an extra dimension
                (batch) as the first dimension. Optionally, a third item, the facial landmarks,
                is returned if `landmarks=True`.

        Example:
        >>> from PIL import Image, ImageDraw
        >>> from facenet_pytorch import MTCNN, extract_face
        >>> mtcnn = MTCNN(keep_all=True)
        >>> boxes, probs, points = mtcnn.detect(img, landmarks=True)
        >>> # Draw boxes and save faces
        >>> img_draw = img.copy()
        >>> draw = ImageDraw.Draw(img_draw)
        >>> for i, (box, point) in enumerate(zip(boxes, points)):
        ...     draw.rectangle(box.tolist(), width=5)
        ...     for p in point:
        ...         draw.rectangle((p - 10).tolist() + (p + 10).tolist(), width=10)
        ...     extract_face(img, box, save_path='detected_face_{}.png'.format(i))
        >>> img_draw.save('annotated_faces.png')
        """