
add dependencies

This view is limited to 50 files because it contains too many changes.

- croco/LICENSE +52 -0

- croco/NOTICE +21 -0

- croco/README.MD +124 -0

- croco/assets/arch.jpg +0 -0

- croco/croco-stereo-flow-demo.ipynb +191 -0

- croco/datasets/__init__.py +0 -0

- croco/datasets/crops/README.MD +104 -0

- croco/datasets/crops/extract_crops_from_images.py +183 -0

- croco/datasets/habitat_sim/README.MD +76 -0

- croco/datasets/habitat_sim/__init__.py +0 -0

- croco/datasets/habitat_sim/generate_from_metadata.py +125 -0

- croco/datasets/habitat_sim/generate_from_metadata_files.py +36 -0

- croco/datasets/habitat_sim/generate_multiview_images.py +231 -0

- croco/datasets/habitat_sim/multiview_habitat_sim_generator.py +501 -0

- croco/datasets/habitat_sim/pack_metadata_files.py +80 -0

- croco/datasets/habitat_sim/paths.py +179 -0

- croco/datasets/pairs_dataset.py +162 -0

- croco/datasets/transforms.py +135 -0

- croco/interactive_demo.ipynb +271 -0

- croco/models/__pycache__/blocks.cpython-310.pyc +0 -0

- croco/models/__pycache__/blocks.cpython-311.pyc +0 -0

- croco/models/__pycache__/blocks.cpython-312.pyc +0 -0

- croco/models/__pycache__/croco.cpython-310.pyc +0 -0

- croco/models/__pycache__/croco.cpython-311.pyc +0 -0

- croco/models/__pycache__/croco.cpython-312.pyc +0 -0

- croco/models/__pycache__/dpt_block.cpython-310.pyc +0 -0

- croco/models/__pycache__/dpt_block.cpython-311.pyc +0 -0

- croco/models/__pycache__/dpt_block.cpython-312.pyc +0 -0

- croco/models/__pycache__/masking.cpython-310.pyc +0 -0

- croco/models/__pycache__/masking.cpython-311.pyc +0 -0

- croco/models/__pycache__/masking.cpython-312.pyc +0 -0

- croco/models/__pycache__/pos_embed.cpython-310.pyc +0 -0

- croco/models/__pycache__/pos_embed.cpython-311.pyc +0 -0

- croco/models/__pycache__/pos_embed.cpython-312.pyc +0 -0

- croco/models/blocks.py +385 -0

- croco/models/criterion.py +38 -0

- croco/models/croco.py +330 -0

- croco/models/croco_downstream.py +141 -0

- croco/models/curope/__init__.py +4 -0

- croco/models/curope/__pycache__/__init__.cpython-310.pyc +0 -0

- croco/models/curope/__pycache__/__init__.cpython-311.pyc +0 -0

- croco/models/curope/__pycache__/__init__.cpython-312.pyc +0 -0

- croco/models/curope/__pycache__/curope2d.cpython-310.pyc +0 -0

- croco/models/curope/__pycache__/curope2d.cpython-311.pyc +0 -0

- croco/models/curope/__pycache__/curope2d.cpython-312.pyc +0 -0

- croco/models/curope/curope.cpp +69 -0

- croco/models/curope/curope2d.py +40 -0

- croco/models/curope/kernels.cu +108 -0

- croco/models/curope/setup.py +34 -0

- croco/models/dpt_block.py +513 -0

croco/LICENSE

ADDED


@@ -0,0 +1,52 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

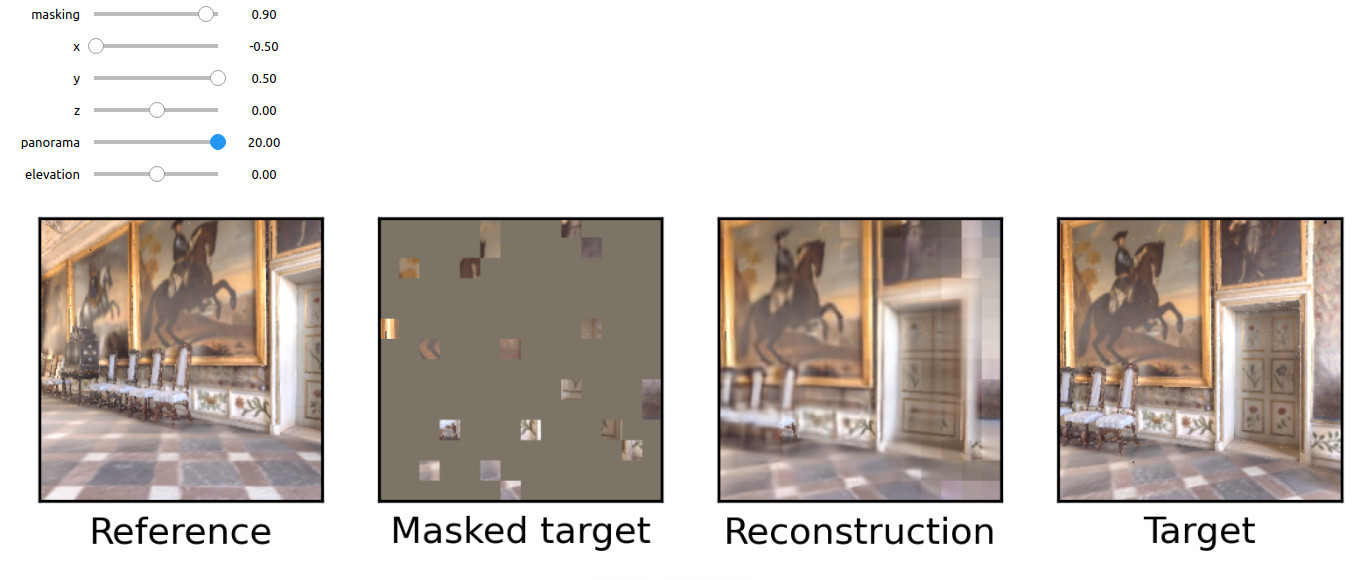

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

CroCo, Copyright (c) 2022-present Naver Corporation, is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 license.

A summary of the CC BY-NC-SA 4.0 license is located here:
https://creativecommons.org/licenses/by-nc-sa/4.0/

The CC BY-NC-SA 4.0 license is located here:
https://creativecommons.org/licenses/by-nc-sa/4.0/legalcode

SEE NOTICE BELOW WITH RESPECT TO THE FILES: models/pos_embed.py, models/blocks.py

***************************

NOTICE WITH RESPECT TO THE FILE: models/pos_embed.py

This software is being redistributed in a modified form. The original form is available here:

https://github.com/facebookresearch/mae/blob/main/util/pos_embed.py

This software in this file incorporates parts of the following software available here:

Transformer: https://github.com/tensorflow/models/blob/master/official/legacy/transformer/model_utils.py
available under the following license: https://github.com/tensorflow/models/blob/master/LICENSE

MoCo v3: https://github.com/facebookresearch/moco-v3
available under the following license: https://github.com/facebookresearch/moco-v3/blob/main/LICENSE

DeiT: https://github.com/facebookresearch/deit
available under the following license: https://github.com/facebookresearch/deit/blob/main/LICENSE

ORIGINAL COPYRIGHT NOTICE AND PERMISSION NOTICE AVAILABLE HERE IS REPRODUCED BELOW:

https://github.com/facebookresearch/mae/blob/main/LICENSE

Attribution-NonCommercial 4.0 International

***************************

NOTICE WITH RESPECT TO THE FILE: models/blocks.py

This software is being redistributed in a modified form. The original form is available here:

https://github.com/rwightman/pytorch-image-models

ORIGINAL COPYRIGHT NOTICE AND PERMISSION NOTICE AVAILABLE HERE IS REPRODUCED BELOW:

https://github.com/rwightman/pytorch-image-models/blob/master/LICENSE

Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

croco/NOTICE

ADDED


@@ -0,0 +1,21 @@


CroCo
Copyright 2022-present NAVER Corp.

This project contains subcomponents with separate copyright notices and license terms.
Your use of the source code for these subcomponents is subject to the terms and conditions of the following licenses.

====

facebookresearch/mae
https://github.com/facebookresearch/mae

Attribution-NonCommercial 4.0 International

====

rwightman/pytorch-image-models
https://github.com/rwightman/pytorch-image-models

Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

croco/README.MD

ADDED


@@ -0,0 +1,124 @@


# CroCo + CroCo v2 / CroCo-Stereo / CroCo-Flow

[[`CroCo arXiv`](https://arxiv.org/abs/2210.10716)] [[`CroCo v2 arXiv`](https://arxiv.org/abs/2211.10408)] [[`project page and demo`](https://croco.europe.naverlabs.com/)]

This repository contains the code for our CroCo model presented in our NeurIPS'22 paper [CroCo: Self-Supervised Pre-training for 3D Vision Tasks by Cross-View Completion](https://openreview.net/pdf?id=wZEfHUM5ri) and its follow-up extension published at ICCV'23 [Improved Cross-view Completion Pre-training for Stereo Matching and Optical Flow](https://openaccess.thecvf.com/content/ICCV2023/html/Weinzaepfel_CroCo_v2_Improved_Cross-view_Completion_Pre-training_for_Stereo_Matching_and_ICCV_2023_paper.html), referred to as CroCo v2:

```bibtex
@inproceedings{croco,
  title={{CroCo: Self-Supervised Pre-training for 3D Vision Tasks by Cross-View Completion}},
  author={Weinzaepfel, Philippe and Leroy, Vincent and Lucas, Thomas and Br\'egier, Romain and Cabon, Yohann and Arora, Vaibhav and Antsfeld, Leonid and Chidlovskii, Boris and Csurka, Gabriela and Revaud, J\'er\^ome},
  booktitle={{NeurIPS}},
  year={2022}
}

@inproceedings{croco_v2,
  title={{CroCo v2: Improved Cross-view Completion Pre-training for Stereo Matching and Optical Flow}},
  author={Weinzaepfel, Philippe and Lucas, Thomas and Leroy, Vincent and Cabon, Yohann and Arora, Vaibhav and Br{\'e}gier, Romain and Csurka, Gabriela and Antsfeld, Leonid and Chidlovskii, Boris and Revaud, J{\'e}r{\^o}me},
  booktitle={ICCV},
  year={2023}
}
```

## License

The code is distributed under the CC BY-NC-SA 4.0 License. See [LICENSE](LICENSE) for more information.
Some components are based on code from [MAE](https://github.com/facebookresearch/mae) released under the CC BY-NC 4.0 License and [timm](https://github.com/rwightman/pytorch-image-models) released under the Apache 2.0 License.
Some components for stereo matching and optical flow are based on code from [unimatch](https://github.com/autonomousvision/unimatch) released under the MIT license.

## Preparation

1. Install dependencies on a machine with an NVIDIA GPU, e.g. using conda. Note that `habitat-sim` is required only for the interactive demo and the synthetic pre-training data generation. If you don't plan to use it, you can skip the line installing it and use a more recent Python version.

```bash
conda create -n croco python=3.7 cmake=3.14.0
conda activate croco
conda install habitat-sim headless -c conda-forge -c aihabitat
conda install pytorch torchvision -c pytorch
conda install notebook ipykernel matplotlib
conda install ipywidgets widgetsnbextension
conda install scikit-learn tqdm quaternion opencv # only for pretraining / habitat data generation
```
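Because some packages above are only needed for the demo or for data generation, it can help to check which ones are importable before running anything. The sketch below is a hypothetical helper, not part of the CroCo code; it assumes the usual import names for the conda packages (`habitat_sim` for habitat-sim, `sklearn` for scikit-learn, `quaternion` for the quaternion package, `cv2` for opencv) and uses `importlib.util.find_spec`, which locates a module without importing it.

```python
import importlib.util

# Assumed import names for the optional conda packages listed above.
OPTIONAL = {
    "habitat_sim": "interactive demo and synthetic pre-training data generation",
    "sklearn": "pre-training / habitat data generation",
    "quaternion": "pre-training / habitat data generation",
    "cv2": "pre-training / habitat data generation",
}
REQUIRED = ("torch", "torchvision", "matplotlib")


def check_modules(names):
    """Map each top-level module name to True if it can be found (without importing it)."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    status = check_modules(list(REQUIRED) + list(OPTIONAL))
    for name, ok in status.items():
        purpose = OPTIONAL.get(name, "required")
        print(f"{name:12s} {'ok' if ok else 'MISSING'} ({purpose})")
```

A missing optional module only matters if you plan to run the corresponding part of the pipeline.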

2. Compile CUDA kernels for RoPE

CroCo v2 relies on RoPE positional embeddings, for which you need to compile some CUDA kernels.
```bash
cd models/curope/
python setup.py build_ext --inplace
cd ../../
```

Compilation can take a while because the kernels are compiled for all CUDA architectures; feel free to update L9 of `models/curope/setup.py` to compile for specific architectures only.
You might also need to set the `CUDA_HOME` environment variable if you use a custom CUDA installation.

If you cannot compile the kernels, a slower PyTorch version is also provided and will be loaded automatically.
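The automatic fallback to the slower PyTorch version follows a common optional-import pattern: try the compiled extension first, and use a pure-Python implementation if the import fails. The sketch below only illustrates that pattern; the module name `hypothetical_curope_ext` and the class `SlowRoPE2D` are placeholders, not names taken from the CroCo code.

```python
import importlib


def load_optional_backend(fast_name, fallback):
    """Return (backend, is_fast).

    Try to import the compiled extension module `fast_name`; if it cannot be
    imported (e.g. the CUDA kernels were never built), return `fallback`.
    """
    try:
        return importlib.import_module(fast_name), True
    except ImportError:  # ModuleNotFoundError is a subclass of ImportError
        return fallback, False


class SlowRoPE2D:
    """Hypothetical stand-in for a pure-PyTorch RoPE implementation."""
    name = "slow"


backend, is_fast = load_optional_backend("hypothetical_curope_ext", SlowRoPE2D)
print("using compiled kernels" if is_fast else f"falling back to: {backend.name}")
```

Catching `ImportError` (rather than every exception) keeps genuine bugs inside a successfully imported extension visible.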

3. Download pre-trained models

We provide several pre-trained models:

| modelname | pre-training data | pos. embed. | Encoder | Decoder |
|------------------------------------------------------------------------------------------------------------------------------------|-------------------|-------------|---------|---------|
| [`CroCo.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo.pth) | Habitat | cosine | ViT-B | Small |
| [`CroCo_V2_ViTBase_SmallDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTBase_SmallDecoder.pth) | Habitat + real | RoPE | ViT-B | Small |
| [`CroCo_V2_ViTBase_BaseDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTBase_BaseDecoder.pth) | Habitat + real | RoPE | ViT-B | Base |
| [`CroCo_V2_ViTLarge_BaseDecoder.pth`](https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo_V2_ViTLarge_BaseDecoder.pth) | Habitat + real | RoPE | ViT-L | Base |

To download a specific model, e.g. the first one (`CroCo.pth`):
```bash
mkdir -p pretrained_models/
wget https://download.europe.naverlabs.com/ComputerVision/CroCo/CroCo.pth -P pretrained_models/
```
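All four checkpoints share the same base URL, so the `wget` command above generalizes to any row of the table. The following is a minimal Python sketch of the same download step using only the standard library; `checkpoint_url` and `download_checkpoint` are hypothetical helpers, not functions provided by the repository.

```python
import os
import urllib.request

BASE_URL = "https://download.europe.naverlabs.com/ComputerVision/CroCo/"
CHECKPOINTS = (
    "CroCo.pth",
    "CroCo_V2_ViTBase_SmallDecoder.pth",
    "CroCo_V2_ViTBase_BaseDecoder.pth",
    "CroCo_V2_ViTLarge_BaseDecoder.pth",
)


def checkpoint_url(name):
    """Build the download URL for one of the checkpoints listed in the table."""
    if name not in CHECKPOINTS:
        raise ValueError(f"unknown checkpoint {name!r}; expected one of {CHECKPOINTS}")
    return BASE_URL + name


def download_checkpoint(name, out_dir="pretrained_models"):
    """Equivalent of `mkdir -p out_dir && wget URL -P out_dir`; skips files already present."""
    os.makedirs(out_dir, exist_ok=True)
    dest = os.path.join(out_dir, name)
    if not os.path.exists(dest):
        urllib.request.urlretrieve(checkpoint_url(name), dest)
    return dest
```

For example, `download_checkpoint("CroCo_V2_ViTLarge_BaseDecoder.pth")` fetches the model used by the reconstruction example.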

## Reconstruction example

After downloading the `CroCo_V2_ViTLarge_BaseDecoder` pre-trained model (or updating the corresponding line in `demo.py`), simply run:
```bash
python demo.py
```

## Interactive demonstration of cross-view completion reconstruction on the Habitat simulator

First, download the test scene from Habitat:
```bash
python -m habitat_sim.utils.datasets_download --uids habitat_test_scenes --data-path habitat-sim-data/
```

Then, run the notebook demo `interactive_demo.ipynb`.

In this demo, you can sample a random reference viewpoint from a [Habitat](https://github.com/facebookresearch/habitat-sim) test scene. Use the sliders to change the viewpoint and select a masked target view to reconstruct using CroCo.

## Pre-training

### CroCo

To pre-train CroCo, first generate the pre-training data from the Habitat simulator following the instructions in [datasets/habitat_sim/README.MD](datasets/habitat_sim/README.MD), then run the following command:
```
torchrun --nproc_per_node=4 pretrain.py --output_dir ./output/pretraining/
```

Our CroCo pre-training was launched on a single server with 4 GPUs.
It should take around 10 days on A100 GPUs or 15 days on V100 GPUs to complete the 400 pre-training epochs, but decent performance is obtained earlier in training.
Note that, while the code applies the same learning-rate scaling rule as MAE when the effective batch size changes, we did not test whether it is valid in our case.
The first run can take a few minutes to start, as it parses all available pre-training pairs.

### CroCo v2

For CroCo v2 pre-training, in addition to generating the pre-training data from the Habitat simulator as described above, please pre-extract the crops from the real datasets following the instructions in [datasets/crops/README.MD](datasets/crops/README.MD).
Then, run the following command for the largest model (ViT-L encoder, Base decoder):
```
torchrun --nproc_per_node=8 pretrain.py --model "CroCoNet(enc_embed_dim=1024, enc_depth=24, enc_num_heads=16, dec_embed_dim=768, dec_num_heads=12, dec_depth=12, pos_embed='RoPE100')" --dataset "habitat_release+ARKitScenes+MegaDepth+3DStreetView+IndoorVL" --warmup_epochs 12 --max_epoch 125 --epochs 250 --amp 0 --keep_freq 5 --output_dir ./output/pretraining_crocov2/
```

Our CroCo v2 pre-training was launched on a single server with 8 GPUs for the largest model, and on a single server with 4 GPUs for the smaller ones, keeping a batch size of 64 per GPU in all cases.
The largest model should take around 12 days on A100 GPUs.
Note that, while the code applies the same learning-rate scaling rule as MAE when the effective batch size changes, we did not test whether it is valid in our case.
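For reference, MAE's pre-training script derives the actual learning rate from a base learning rate as `lr = blr * effective_batch_size / 256`, where the effective batch size is the per-GPU batch size times the number of gradient-accumulation steps times the number of GPUs. The sketch below reproduces only that rule, assuming CroCo keeps MAE's formula unchanged; the base learning rate in the example is a placeholder, not a CroCo default.

```python
def effective_lr(base_lr, batch_size_per_gpu, num_gpus, accum_iter=1):
    """MAE-style linear scaling: lr = base_lr * effective_batch_size / 256."""
    eff_batch_size = batch_size_per_gpu * accum_iter * num_gpus
    return base_lr * eff_batch_size / 256


# 64 images per GPU, as in the setups described above (base_lr is a placeholder).
print(effective_lr(1.5e-4, batch_size_per_gpu=64, num_gpus=8))  # largest model: 2x base_lr
print(effective_lr(1.5e-4, batch_size_per_gpu=64, num_gpus=4))  # smaller models: 1x base_lr
```

With 64 images per GPU, the 8-GPU run has an effective batch size of 512 and therefore doubles the base learning rate, while the 4-GPU runs (effective batch size 256) use it as is.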

## Stereo matching and Optical flow downstream tasks

For CroCo-Stereo and CroCo-Flow, please refer to [stereoflow/README.MD](stereoflow/README.MD).

croco/assets/arch.jpg

ADDED


croco/croco-stereo-flow-demo.ipynb

ADDED


@@ -0,0 +1,191 @@


```json
{
 "cells": [
  {
   "cell_type": "markdown",
   "id": "9bca0f41",
   "metadata": {},
   "source": [
    "# Simple inference example with CroCo-Stereo or CroCo-Flow"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "80653ef7",
   "metadata": {},
   "outputs": [],
   "source": [
    "# Copyright (C) 2022-present Naver Corporation. All rights reserved.\n",
    "# Licensed under CC BY-NC-SA 4.0 (non-commercial use only)."
   ]
  },
  {
   "cell_type": "markdown",
   "id": "4f033862",
   "metadata": {},
   "source": [
    "First download the model(s) of your choice by running\n",
    "```\n",
    "bash stereoflow/download_model.sh crocostereo.pth\n",
    "bash stereoflow/download_model.sh crocoflow.pth\n",
    "```"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "1fb2e392",
   "metadata": {},
   "outputs": [],
   "source": [
    "import numpy as np\n",
    "from PIL import Image\n",
    "import torch\n",
    "use_gpu = torch.cuda.is_available() and torch.cuda.device_count()>0\n",
    "device = torch.device('cuda:0' if use_gpu else 'cpu')\n",
    "import matplotlib.pylab as plt"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "e0e25d77",
   "metadata": {},
   "outputs": [],
   "source": [
    "from stereoflow.test import _load_model_and_criterion\n",
    "from stereoflow.engine import tiled_pred\n",
    "from stereoflow.datasets_stereo import img_to_tensor, vis_disparity\n",
    "from stereoflow.datasets_flow import flowToColor\n",
    "tile_overlap=0.7 # recommended value, higher value can be slightly better but slower"
   ]
  },
  {
   "cell_type": "markdown",
   "id": "86a921f5",
   "metadata": {},
   "source": [
    "### CroCo-Stereo example"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "64e483cb",
   "metadata": {},
   "outputs": [],
   "source": [
    "image1 = np.asarray(Image.open('<path_to_left_image>'))\n",
    "image2 = np.asarray(Image.open('<path_to_right_image>'))"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "f0d04303",
   "metadata": {},
   "outputs": [],
   "source": [
    "model, _, cropsize, with_conf, task, tile_conf_mode = _load_model_and_criterion('stereoflow_models/crocostereo.pth', None, device)\n"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "47dc14b5",
   "metadata": {},
   "outputs": [],
   "source": [
    "im1 = img_to_tensor(image1).to(device).unsqueeze(0)\n",
    "im2 = img_to_tensor(image2).to(device).unsqueeze(0)\n",
    "with torch.inference_mode():\n",
    "    pred, _, _ = tiled_pred(model, None, im1, im2, None, conf_mode=tile_conf_mode, overlap=tile_overlap, crop=cropsize, with_conf=with_conf, return_time=False)\n",
    "pred = pred.squeeze(0).squeeze(0).cpu().numpy()"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "583b9f16",
   "metadata": {},
   "outputs": [],
   "source": [
    "plt.imshow(vis_disparity(pred))\n",
    "plt.axis('off')"
   ]
  },
  {
   "cell_type": "markdown",
   "id": "d2df5d70",
   "metadata": {},
   "source": [
    "### CroCo-Flow example"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "9ee257a7",
   "metadata": {},
   "outputs": [],
   "source": [
    "image1 = np.asarray(Image.open('<path_to_first_image>'))\n",
    "image2 = np.asarray(Image.open('<path_to_second_image>'))"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "d5edccf0",
   "metadata": {},
   "outputs": [],
   "source": [
    "model, _, cropsize, with_conf, task, tile_conf_mode = _load_model_and_criterion('stereoflow_models/crocoflow.pth', None, device)\n"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "b19692c3",
   "metadata": {},
   "outputs": [],
   "source": [
    "im1 = img_to_tensor(image1).to(device).unsqueeze(0)\n",
    "im2 = img_to_tensor(image2).to(device).unsqueeze(0)\n",
    "with torch.inference_mode():\n",
    "    pred, _, _ = tiled_pred(model, None, im1, im2, None, conf_mode=tile_conf_mode, overlap=tile_overlap, crop=cropsize, with_conf=with_conf, return_time=False)\n",
    "pred = pred.squeeze(0).permute(1,2,0).cpu().numpy()"
   ]
  },
  {
   "cell_type": "code",
   "execution_count": null,
   "id": "26f79db3",
   "metadata": {},
   "outputs": [],
   "source": [
    "plt.imshow(flowToColor(pred))\n",
    "plt.axis('off')"
   ]
  }
 ],
 "metadata": {
  "kernelspec": {
   "display_name": "Python 3 (ipykernel)",
   "language": "python",
   "name": "python3"
  },
  "language_info": {
   "codemirror_mode": {
    "name": "ipython",
    "version": 3
   },
   "file_extension": ".py",
   "mimetype": "text/x-python",
   "name": "python",
   "nbconvert_exporter": "python",
   "pygments_lexer": "ipython3",
   "version": "3.9.7"
  }
 },
 "nbformat": 4,
 "nbformat_minor": 5
}
```
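The notebook passes `tile_overlap` and the model's `cropsize` to `tiled_pred`, which predicts on overlapping crops of the full image and merges the results. The sketch below only illustrates one common way to place overlapping tiles along a single axis; it is an assumption, not the logic of `stereoflow.engine.tiled_pred`, and the image and crop sizes in the example are hypothetical. Blending the per-tile predictions (what `tile_conf_mode` controls in the notebook) is out of scope here.

```python
import math


def tile_starts(length, tile, overlap):
    """Start offsets of tiles of size `tile` covering [0, length).

    Consecutive tiles overlap by at least `overlap * tile` pixels, and the
    tiles are spread evenly so that the last one ends exactly at `length`.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    if tile >= length:
        return [0]
    max_stride = tile * (1.0 - overlap)              # largest step that keeps the overlap
    n = math.ceil((length - tile) / max_stride) + 1  # number of tiles needed (>= 2 here)
    return [round(i * (length - tile) / (n - 1)) for i in range(n)]


# Hypothetical 1000x600 image and 352x352 crops with the notebook's overlap of 0.7.
ys = tile_starts(600, 352, 0.7)
xs = tile_starts(1000, 352, 0.7)
tiles = [(y, x) for y in ys for x in xs]  # top-left corners of all 2-D tiles
print(len(tiles), "tiles; x offsets:", xs)
```

A higher overlap produces more tiles (and more forward passes), which matches the notebook's remark that larger values can be slightly better but slower.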

croco/datasets/__init__.py

ADDED

File without changes

croco/datasets/crops/README.MD

ADDED

@@ -0,0 +1,104 @@


## Generation of crops from the real datasets

The instructions below allow you to generate the crops used for pre-training CroCo v2 from the following real-world datasets: ARKitScenes, MegaDepth, 3DStreetView and IndoorVL.

### Download the metadata of the crops to generate

First, download the metadata and put them in `./data/`:
```
mkdir -p data
cd data/
wget https://download.europe.naverlabs.com/ComputerVision/CroCo/data/crop_metadata.zip
unzip crop_metadata.zip
rm crop_metadata.zip
cd ..
```

### Prepare the original datasets

Second, download the original datasets into `./data/original_datasets/`.
```
mkdir -p data/original_datasets
```

##### ARKitScenes

Download the `raw` dataset from https://github.com/apple/ARKitScenes/blob/main/DATA.md and put it in `./data/original_datasets/ARKitScenes/`.
The resulting file structure should look like:
```
./data/original_datasets/ARKitScenes/
└───Training
    └───40753679
    │   │   ultrawide
    │   │   ...
    └───40753686
    │
      ...
```

##### MegaDepth

Download `MegaDepth v1 Dataset` from https://www.cs.cornell.edu/projects/megadepth/ and put it in `./data/original_datasets/MegaDepth/`.
The resulting file structure should look like:

```
./data/original_datasets/MegaDepth/
└───0000
│   └───images
│   │   │   1000557903_87fa96b8a4_o.jpg
│   │   └ ...
│   └─── ...
└───0001
│   │
│   └ ...
└─── ...
```

##### 3DStreetView

Download the `3D_Street_View` dataset from https://github.com/amir32002/3D_Street_View and put it in `./data/original_datasets/3DStreetView/`.
The resulting file structure should look like:

```
./data/original_datasets/3DStreetView/
└───dataset_aligned
│   └───0002
│   │   │   0000002_0000001_0000002_0000001.jpg
│   │   └ ...
│   └─── ...
└───dataset_unaligned
│   └───0003
│   │   │   0000003_0000001_0000002_0000001.jpg
│   │   └ ...
│   └─── ...
```

##### IndoorVL

Download the `IndoorVL` datasets using [Kapture](https://github.com/naver/kapture).

```
pip install kapture
mkdir -p ./data/original_datasets/IndoorVL
cd ./data/original_datasets/IndoorVL
kapture_download_dataset.py update
kapture_download_dataset.py install "HyundaiDepartmentStore_*"
kapture_download_dataset.py install "GangnamStation_*"
cd -
```

### Extract the crops

Now, extract the crops for each of the datasets:
```
for dataset in ARKitScenes MegaDepth 3DStreetView IndoorVL;
do
    python3 datasets/crops/extract_crops_from_images.py --crops ./data/crop_metadata/${dataset}/crops_release.txt --root-dir ./data/original_datasets/${dataset}/ --output-dir ./data/${dataset}_crops/ --imsize 256 --nthread 8 --max-subdir-levels 5 --ideal-number-pairs-in-dir 500;
done
```
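If you prefer to drive the extraction from Python (for instance to log or retry each dataset separately), the shell loop above maps directly onto a list of argument vectors. The sketch below rebuilds exactly the same arguments as the loop; `crop_command` is a hypothetical helper, not part of the repository, and the commands are only printed unless you uncomment the `subprocess.run` call.

```python
import shlex

DATASETS = ("ARKitScenes", "MegaDepth", "3DStreetView", "IndoorVL")


def crop_command(dataset, data_root="./data"):
    """Argument vector equivalent to one iteration of the shell loop above."""
    return [
        "python3", "datasets/crops/extract_crops_from_images.py",
        "--crops", f"{data_root}/crop_metadata/{dataset}/crops_release.txt",
        "--root-dir", f"{data_root}/original_datasets/{dataset}/",
        "--output-dir", f"{data_root}/{dataset}_crops/",
        "--imsize", "256",
        "--nthread", "8",
        "--max-subdir-levels", "5",
        "--ideal-number-pairs-in-dir", "500",
    ]


if __name__ == "__main__":
    for ds in DATASETS:
        print(shlex.join(crop_command(ds)))  # shell-quoted, for inspection
        # To actually run it: subprocess.run(crop_command(ds), check=True)
```

Passing a list (rather than a single string) to `subprocess.run` avoids shell quoting issues with the dataset paths.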

##### Note for IndoorVL

Due to some legal issues, we can only release 144,228 pairs out of the 1,593,689 pairs used in the paper.
To account for this in terms of the number of pre-training iterations, the pre-training command in this repository uses 125 training epochs, including 12 warm-up epochs, and a learning-rate cosine schedule of 250 epochs, instead of 100, 10 and 200 respectively.
The impact on performance is negligible.
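The sketch below evaluates a linear warm-up followed by a half-cycle cosine decay, the schedule shape MAE uses; it assumes CroCo keeps that schedule, that `--epochs` sets the cosine length and `--max_epoch` the stopping point, and that the minimum learning rate is 0. `lr_at_epoch` is a hypothetical helper. It shows that stopping at epoch 125 of a 250-epoch schedule (12 warm-up epochs) ends training at nearly the same point of the curve as stopping at epoch 100 of a 200-epoch schedule (10 warm-up epochs).

```python
import math


def lr_at_epoch(epoch, base_lr, warmup_epochs, schedule_epochs, min_lr=0.0):
    """Linear warm-up, then half-cycle cosine decay from base_lr to min_lr.

    `epoch` may be fractional (MAE updates the learning rate every iteration).
    """
    if epoch < warmup_epochs:
        return base_lr * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / (schedule_epochs - warmup_epochs)
    return min_lr + (base_lr - min_lr) * 0.5 * (1.0 + math.cos(math.pi * progress))


# Learning rate (relative to its peak) at the last training epoch of each setting.
repo = lr_at_epoch(125, 1.0, warmup_epochs=12, schedule_epochs=250)
paper = lr_at_epoch(100, 1.0, warmup_epochs=10, schedule_epochs=200)
print(f"repository setting: {repo:.3f}, paper setting: {paper:.3f}")  # both about 0.54
```

In both settings training stops a little less than halfway along the cosine curve, so the learning rate is still slightly above half of its peak value when pre-training ends.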

croco/datasets/crops/extract_crops_from_images.py

ADDED

@@ -0,0 +1,183 @@


| 1 |

+

# Copyright (C) 2022-present Naver Corporation. All rights reserved.
# Licensed under CC BY-NC-SA 4.0 (non-commercial use only).
#
# --------------------------------------------------------
# Extracting crops for pre-training
# --------------------------------------------------------

import os
import argparse
from tqdm import tqdm
from PIL import Image
import functools
from multiprocessing import Pool
import math


def arg_parser():
    parser = argparse.ArgumentParser(
        "Generate cropped image pairs from image crop list"
    )

    parser.add_argument("--crops", type=str, required=True, help="crop file")
    parser.add_argument("--root-dir", type=str, required=True, help="root directory")
    parser.add_argument(
        "--output-dir", type=str, required=True, help="output directory"
    )
    parser.add_argument("--imsize", type=int, default=256, help="size of the crops")
    parser.add_argument(
        "--nthread", type=int, required=True, help="number of simultaneous threads"
    )
    parser.add_argument(
        "--max-subdir-levels",
        type=int,
        default=5,
        help="maximum number of subdirectories",
    )
    parser.add_argument(
        "--ideal-number-pairs-in-dir",
        type=int,
        default=500,
        help="number of pairs stored in a dir",
    )
    return parser


def main(args):
    listing_path = os.path.join(args.output_dir, "listing.txt")

    print(f"Loading list of crops ... ({args.nthread} threads)")
    crops, num_crops_to_generate = load_crop_file(args.crops)

    print(f"Preparing jobs ({len(crops)} candidate image pairs)...")
    num_levels = min(
        math.ceil(math.log(num_crops_to_generate, args.ideal_number_pairs_in_dir)),
        args.max_subdir_levels,
    )
    num_pairs_in_dir = math.ceil(num_crops_to_generate ** (1 / num_levels))

    jobs = prepare_jobs(crops, num_levels, num_pairs_in_dir)
    del crops

    os.makedirs(args.output_dir, exist_ok=True)
    mmap = Pool(args.nthread).imap_unordered if args.nthread > 1 else map
    call = functools.partial(save_image_crops, args)

    print(f"Generating cropped images to {args.output_dir} ...")
    with open(listing_path, "w") as listing:
        listing.write("# pair_path\n")
        for results in tqdm(mmap(call, jobs), total=len(jobs)):
            for path in results:
                listing.write(f"{path}\n")
    print("Finished writing listing to", listing_path)


def load_crop_file(path):
    data = open(path).read().splitlines()
    pairs = []
    num_crops_to_generate = 0
    for line in tqdm(data):
        if line.startswith("#"):
            continue
        line = line.split(", ")
        if len(line) < 8:
            img1, img2, rotation = line
            pairs.append((img1, img2, int(rotation), []))
        else:
            l1, r1, t1, b1, l2, r2, t2, b2 = map(int, line)
            rect1, rect2 = (l1, t1, r1, b1), (l2, t2, r2, b2)
            pairs[-1][-1].append((rect1, rect2))
            num_crops_to_generate += 1
    return pairs, num_crops_to_generate


def prepare_jobs(pairs, num_levels, num_pairs_in_dir):
    jobs = []
    powers = [num_pairs_in_dir**level for level in reversed(range(num_levels))]

    def get_path(idx):
        idx_array = []
        d = idx
        for level in range(num_levels - 1):
            idx_array.append(idx // powers[level])
            idx = idx % powers[level]
        idx_array.append(d)
        return "/".join(map(lambda x: hex(x)[2:], idx_array))

    idx = 0
    for pair_data in tqdm(pairs):
        img1, img2, rotation, crops = pair_data
        if -60 <= rotation and rotation <= 60:
            rotation = 0  # most likely not a true rotation
        paths = [get_path(idx + k) for k in range(len(crops))]
        idx += len(crops)
        jobs.append(((img1, img2), rotation, crops, paths))
    return jobs


def load_image(path):
    try:
        return Image.open(path).convert("RGB")
    except Exception as e:
        print("skipping", path, e)
        raise OSError()


def save_image_crops(args, data):
    # load images
    img_pair, rot, crops, paths = data
    try:
        img1, img2 = [
            load_image(os.path.join(args.root_dir, impath)) for impath in img_pair
        ]
    except OSError as e:
        return []

    def area(sz):
        return sz[0] * sz[1]

    tgt_size = (args.imsize, args.imsize)

    def prepare_crop(img, rect, rot=0):
        # actual crop
        img = img.crop(rect)

        # resize to desired size
        interp = (
            Image.Resampling.LANCZOS
            if area(img.size) > 4 * area(tgt_size)
            else Image.Resampling.BICUBIC
        )
        img = img.resize(tgt_size, resample=interp)

        # rotate the image
        rot90 = (round(rot / 90) % 4) * 90
        if rot90 == 90:
            img = img.transpose(Image.Transpose.ROTATE_90)
        elif rot90 == 180:
            img = img.transpose(Image.Transpose.ROTATE_180)
        elif rot90 == 270:
            img = img.transpose(Image.Transpose.ROTATE_270)
        return img

    results = []
    for (rect1, rect2), path in zip(crops, paths):
        crop1 = prepare_crop(img1, rect1)
        crop2 = prepare_crop(img2, rect2, rot)

        fullpath1 = os.path.join(args.output_dir, path + "_1.jpg")
        fullpath2 = os.path.join(args.output_dir, path + "_2.jpg")
        os.makedirs(os.path.dirname(fullpath1), exist_ok=True)

        assert not os.path.isfile(fullpath1), fullpath1
        assert not os.path.isfile(fullpath2), fullpath2
        crop1.save(fullpath1)
        crop2.save(fullpath2)
        results.append(path)

    return results


if __name__ == "__main__":
    args = arg_parser().parse_args()
    main(args)


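The crop lists read by `load_crop_file()` in `extract_crops_from_images.py` interleave two kinds of `", "`-separated lines: an image-pair line (`image1, image2, rotation`) followed by one line of eight integers (`l1, r1, t1, b1, l2, r2, t2, b2`) per crop. The sketch below restates that parsing, together with the rotation snapping done in `prepare_jobs()` and `prepare_crop()`, on a small made-up example; the helper names and image file names are hypothetical.

```python
def parse_crop_lines(lines):
    """Parse crop-list lines with the same rules as load_crop_file()."""
    pairs = []
    num_crops = 0
    for line in lines:
        if line.startswith("#"):
            continue  # comment / header line
        fields = line.split(", ")
        if len(fields) < 8:
            # Pair line: "<image1>, <image2>, <rotation in degrees>"
            img1, img2, rotation = fields
            pairs.append((img1, img2, int(rotation), []))
        else:
            # Crop line: "l1, r1, t1, b1, l2, r2, t2, b2", stored as PIL boxes
            # (left, top, right, bottom) attached to the most recent pair line
            l1, r1, t1, b1, l2, r2, t2, b2 = map(int, fields)
            pairs[-1][-1].append(((l1, t1, r1, b1), (l2, t2, r2, b2)))
            num_crops += 1
    return pairs, num_crops


def effective_rotation(rotation):
    """Rotation applied to the second crop (prepare_jobs() then prepare_crop())."""
    if -60 <= rotation <= 60:
        rotation = 0  # treated as "most likely not a true rotation"
    return (round(rotation / 90) % 4) * 90


example = [
    "# hypothetical example",
    "scene/img_a.jpg, scene/img_b.jpg, 85",
    "10, 266, 20, 276, 30, 286, 40, 296",
    "0, 256, 0, 256, 5, 261, 5, 261",
]
pairs, n = parse_crop_lines(example)
print(n)  # 2
print(pairs[0][3][0])  # ((10, 20, 266, 276), (30, 40, 286, 296))
print(effective_rotation(pairs[0][2]))  # 90
```

With these rules, a rotation of 85 degrees becomes a 90-degree rotation of the second crop, while anything within plus or minus 60 degrees is ignored.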
croco/datasets/habitat_sim/README.MD

ADDED

@@ -0,0 +1,76 @@

## Generation of synthetic image pairs using Habitat-Sim


These instructions describe how to generate pre-training pairs with the Habitat simulator.
As we did not save the metadata of the pairs used in the original paper, the pairs generated here are not strictly identical to them, but they use the same settings and are equivalent.

### Download Habitat-Sim scenes


Download Habitat-Sim scenes:
- Download links can be found here: https://github.com/facebookresearch/habitat-sim/blob/main/DATASETS.md
- We used scenes from the HM3D, habitat-test-scenes, Replica, ReplicaCad and ScanNet datasets.
- Please put the scenes under `./data/habitat-sim-data/scene_datasets/` following the structure below, or manually update the paths in `paths.py`.

```
./data/
└──habitat-sim-data/
   └──scene_datasets/
      ├──hm3d/
      ├──gibson/
      ├──habitat-test-scenes/
      ├──replica_cad_baked_lighting/
      ├──replica_cad/
      ├──ReplicaDataset/
      └──scannet/
```
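Before generating pairs, it can help to check that the scenes are where the scripts expect them. A minimal sketch, assuming the default root and the sub-directory names shown in the tree above; `missing_scene_datasets` is a hypothetical helper, and if you edited `paths.py` instead, adapt the root and names. Only the datasets you actually downloaded need to be present.

```python
from pathlib import Path

# Sub-directories shown in the tree above
SCENE_DATASETS = [
    "hm3d",
    "gibson",
    "habitat-test-scenes",
    "replica_cad_baked_lighting",
    "replica_cad",
    "ReplicaDataset",
    "scannet",
]


def missing_scene_datasets(root="./data/habitat-sim-data/scene_datasets"):
    """Return the expected scene-dataset folders that do not exist under `root`."""
    root = Path(root)
    return [name for name in SCENE_DATASETS if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_scene_datasets()
    print("missing:", ", ".join(missing) if missing else "none")
```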


### Image pairs generation


We provide metadata to generate reproducible image pairs for pre-training and validation.
Experiments described in the paper used similar data, but their generation was not reproducible at the time.


Specifications:
- 256x256 resolution images, with a 60-degree field of view.
- Up to 1000 image pairs per scene.
- Number of scenes considered / number of image pairs per dataset:
  - Scannet: 1097 scenes / 985,209 pairs
  - HM3D:
    - hm3d/train: 800 scenes / 800k pairs
    - hm3d/val: 100 scenes / 100k pairs
    - hm3d/minival: 10 scenes / 10k pairs
  - habitat-test-scenes: 3 scenes / 3k pairs
  - replica_cad_baked_lighting: 13 scenes / 13k pairs

- Scenes from hm3d/val and hm3d/minival pairs were not used for the pre-training but kept for validation purposes.
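The 256x256 resolution and 60-degree field of view listed above determine the camera intrinsics of the rendered views. A minimal sketch, assuming an ideal pinhole camera whose 60 degrees is the horizontal field of view, square pixels and a principal point at the image centre (the simulator's exact pixel-centre convention may differ slightly); `pinhole_intrinsics` is a hypothetical helper.

```python
import math


def pinhole_intrinsics(width=256, height=256, hfov_deg=60.0):
    """3x3 intrinsic matrix K from the image size and horizontal field of view."""
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)  # focal length in pixels
    fy = fx  # square pixels assumed
    cx, cy = width / 2, height / 2  # principal point at the image centre
    return [
        [fx, 0.0, cx],
        [0.0, fy, cy],
        [0.0, 0.0, 1.0],
    ]


K = pinhole_intrinsics()
print(round(K[0][0], 2))  # 221.7
```

Here f = (W/2) / tan(FOV/2) = 128 / tan(30°) = 128·√3, i.e. about 221.7 pixels.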


Download metadata and extract it:


```bash
mkdir -p data/habitat_release_metadata/
cd data/habitat_release_metadata/
wget https://download.europe.naverlabs.com/ComputerVision/CroCo/data/habitat_release_metadata/multiview_habitat_metadata.tar.gz
tar -xvf multiview_habitat_metadata.tar.gz
cd ../..
# Location of the metadata
METADATA_DIR="./data/habitat_release_metadata/multiview_habitat_metadata"
```


Generate image pairs from metadata:


- The following command prints a list of command lines that generate image pairs for each scene:

```bash
# Target output directory
PAIRS_DATASET_DIR="./data/habitat_release/"
python datasets/habitat_sim/generate_from_metadata_files.py --input_dir=$METADATA_DIR --output_dir=$PAIRS_DATASET_DIR
```


- Several of these commands can be run in parallel, e.g. using GNU Parallel:

```bash
python datasets/habitat_sim/generate_from_metadata_files.py --input_dir=$METADATA_DIR --output_dir=$PAIRS_DATASET_DIR | parallel -j 16
```
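If GNU Parallel is not available, the same fan-out can be done from Python with only the standard library (`subprocess` and `concurrent.futures`). A minimal sketch; `run_commands` and `run_from_stdin` are hypothetical helpers that execute each printed command line through the shell, `jobs` at a time, and report exit codes in input order.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_commands(commands, jobs=16):
    """Run shell command lines concurrently (like `parallel -j jobs`); return exit codes."""

    def run(cmd):
        # Each worker thread simply waits on its own child process.
        return subprocess.run(cmd, shell=True).returncode

    with ThreadPoolExecutor(max_workers=jobs) as pool:
        return list(pool.map(run, commands))


def run_from_stdin(jobs=16):
    """Read one command per line from stdin, e.g. piped from generate_from_metadata_files.py."""
    commands = [line.strip() for line in sys.stdin if line.strip()]
    codes = run_commands(commands, jobs)
    failed = sum(code != 0 for code in codes)
    print(f"{len(codes) - failed} commands succeeded, {failed} failed")
    return codes
```

Saved in a small wrapper script that calls `run_from_stdin()`, this can take the place of `parallel -j 16` at the end of the pipe; threads suffice here because the actual work happens in the child processes.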


## Metadata generation


Image pairs were randomly sampled using the following commands, whose outputs contain randomness and are thus not exactly reproducible:


```bash
# Print command lines to generate image pairs from the different scenes available.
PAIRS_DATASET_DIR=MY_CUSTOM_PATH
python datasets/habitat_sim/generate_multiview_images.py --list_commands --output_dir=$PAIRS_DATASET_DIR

# Once a dataset is generated, pack metadata files for reproducibility.
METADATA_DIR=MY_CUSTOM_PATH
python datasets/habitat_sim/pack_metadata_files.py $PAIRS_DATASET_DIR $METADATA_DIR
```

croco/datasets/habitat_sim/__init__.py

ADDED

File without changes

croco/datasets/habitat_sim/generate_from_metadata.py

ADDED

@@ -0,0 +1,125 @@