MS-LC-EQ-D-VR VAE: another reproduction of EQ-VAE on variable VAEs and then some

Current VAEs present:

- SDXL VAE

- FLUX VAE

EQ-VAE paper: https://arxiv.org/abs/2502.09509

VIVAT paper: https://arxiv.org/pdf/2506.07863v1

Thanks to Kohaku and his reproduction that made me look into this: https://huggingface.co/KBlueLeaf/EQ-SDXL-VAE

Base model adapted to EQ VAE: https://huggingface.co/Anzhc/Noobai11-EQ

Latent to PCA

IMPORTANT: This VAE requires reflection padding on conv layers. It should be added both in your trainer, and your webui.

You can do it with this function on VAE model:

for module in self.model.modules():

if isinstance(module, nn.Conv2d):

pad_h, pad_w = module.padding if isinstance(module.padding, tuple) else (module.padding, module.padding)

if pad_h > 0 or pad_w > 0:

module.padding_mode = "reflect"

If you have trained without this - don't worry, just add this modification and do a small tune to fix up artefacts on edges.

ComfyUI/SwarmUI padding for VAEs - https://github.com/Jelosus2/comfyui-vae-reflection

Trainer fork with optional padding (loras only) - https://github.com/Jelosus2/LoRA_Easy_Training_Scripts

Introduction

Refer to https://huggingface.co/KBlueLeaf/EQ-SDXL-VAE for introduction to EQ-VAE.

This implementation additionally utilizes some of fixes proposed in VIVAT paper, and custom in-house regularization techniques, as well as training implementation.

For additional examples and more information refer to: https://arcenciel.io/articles/20 and https://arcenciel.io/models/10994

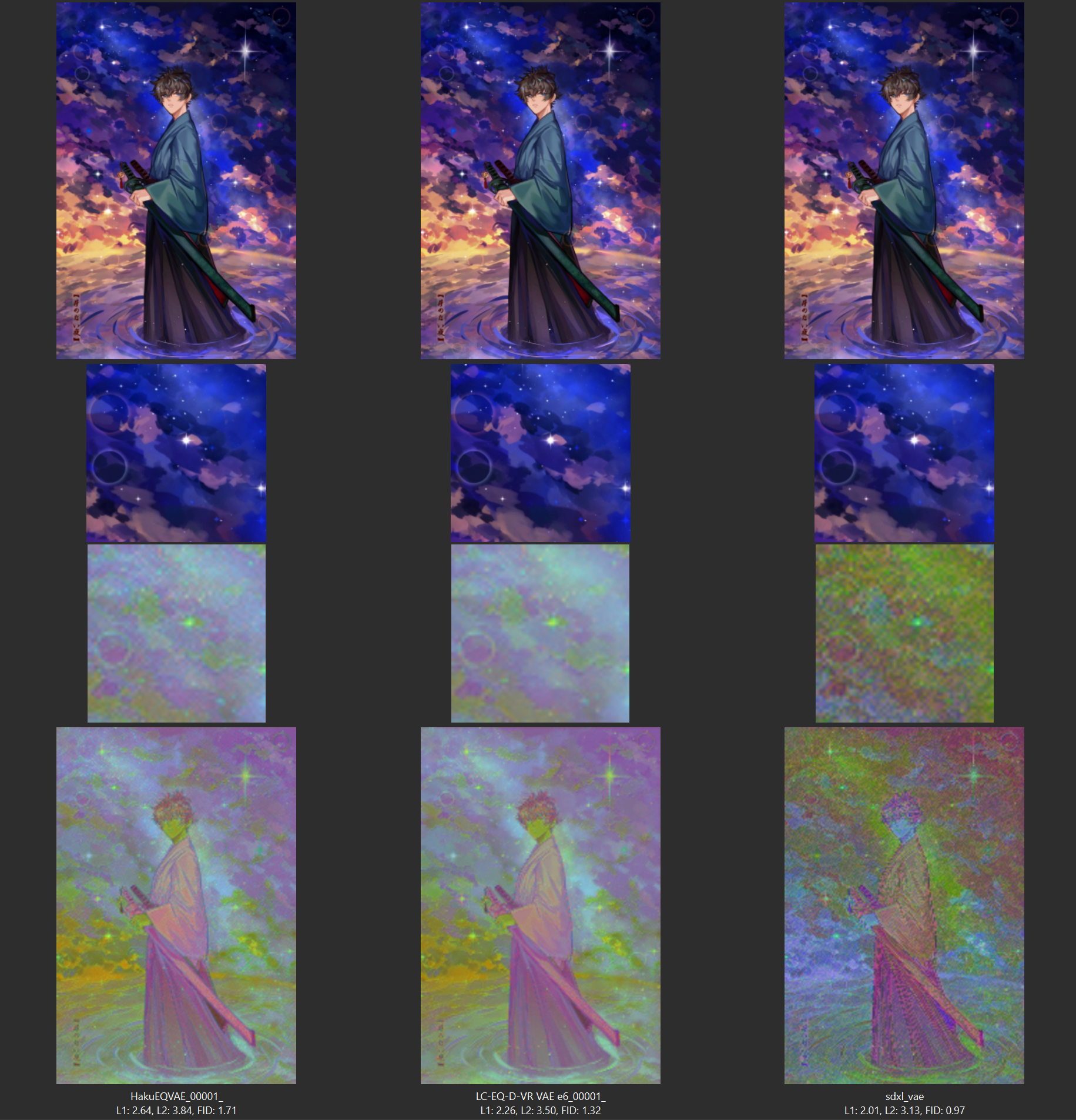

Visual Examples

Usage

This is a finetuned SDXL VAE, adapted with new regularization, and other techniques. You can use this with your existing SDXL model, but image will be quite artefacting, particularly - oversharpening and ringing.

This VAE is supposed to be used for finetune, after that images will become normal. But be aware, compatibility with old VAEs, that are not EQ, will be lost(They will become blurry).

Training Setup

Base SDXL:

Base Model: SDXL-VAE

Resolution: 256

Dataset: ~12.8k Illustrations from Boorus

Batch Size: 128 (bs 8, grad acc 16)

Samples Seen: ~75k

Loss Weights:

- L1: 0.3

- L2: 0.5

- SSIM: 0.5

- LPIPS: 0.5

- KL: 0.000001

- Consistency Loss: 0.75

Both Encoder and Decoder were trained.

Training Time: ~8-10 hours on 4060Ti

B2:

Base Model: First version

Resolution: 256

Dataset: 87.8k Illustrations from Boorus

Batch Size: 128 (bs 8, grad acc 16)

Samples Seen: ~150k

Loss Weights:

- L1: 0.2

- L2: 0.4

- SSIM: 0.6

- LPIPS: 0.8

- KL: 0.000001

- Consistency Loss: 0.75

Both Encoder and Decoder were trained.

Training Time: ~16 hours on 4060Ti

B3:

Base Model: B2

Resolution: 256

Dataset: 162.8k Illustrations from Boorus

Batch Size: 128 (bs 8, grad acc 16)

Samples Seen: ~225k

Loss Weights:

- L1: 0.2

- L2: 0.4

- SSIM: 0.6

- LPIPS: 0.8

- KL: 0.000001

- Consistency Loss: 0.75

Both Encoder and Decoder were trained.

Training Time: ~24 hours on 4060Ti

B4:

Base Model: B3

Resolution: 320

Dataset: ~237k Illustrations from Boorus

Batch Size: 72 (bs 6, grad acc 12)

Samples Seen: ~300k

Loss Weights:

- L1: 0.5

- L2: 0.9

- SSIM: 0.6

- LPIPS: 0.7

- KL: 0.000001

- Consistency Loss: 0.75

- wavelet: 0.3

Both Encoder and Decoder were trained.

Total Training Time: ~33 hours on 4060Ti

B4:

Base Model: B4

Resolution: 384

Dataset: ~312k Illustrations from Boorus

Batch Size: 48 (bs 4, grad acc 12)

Samples Seen: ~375k

Loss Weights:

- L1: 0.5

- L2: 0.9

- SSIM: 0.6

- LPIPS: 0.7

- KL: 0.000001

- Consistency Loss: 0.75

- wavelet: 0.3

Both Encoder and Decoder were trained.

Total Training Time: ~48 hours on 4060Ti

B2 is a direct continuation of base version, stats displayed are cumulative across multiple runs. I took batch of 75k images, so samples seen never repeated.

B3 repeats B2 for another batch of data and further solidifies cleaner latents. Minor tweaks were done to training code for better regularization.

B4 changes mixture a bit, to concentrate more on reconstruction quality. Additionally, resolution was increased to 320. Wavelet loss was added at low values(but it's effect is yet to be studied).

B5 same as B4, but higher resolution again.

Base FLUX:

Base Model: FLUX-VAE

Dataset: ~12.8k Illustrations from Boorus

Batch Size: 128 (bs 8, grad acc 16)

Samples Seen: ~62.5k

Loss Weights:

- L1: 0.3

- L2: 0.4

- SSIM: 0.6

- LPIPS: 0.6

- KL: 0.000001

- Consistency Loss: 0.75

Both Encoder and Decoder were trained.

Training Time: ~6 hours on 4060Ti

Evaluation Results

Im using small test set i have on me, separated into anime(434) and photo(500) images. Additionally, im measuring noise in latents. Sorgy for no larger test sets.

Results on small benchmark of 500 photos

| VAE SDXL | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS-SSIM ↑ | KL ↓ | Consistency ↓ | RFID ↓ |

|---|---|---|---|---|---|---|---|---|

| sdxl_vae | 6.282 | 10.534 | 29.278 | 0.063 | 0.947 | 31.216 | 0.0086 | 4.819 |

| Kohaku EQ-VAE | 6.423 | 10.428 | 29.140 | 0.082 | 0.945 | 43.236 | n/a | 6.202 |

| Anzhc MS-LC-EQ-D-VR VAE | 5.975 | 10.096 | 29.526 | 0.106 | 0.952 | 33.176 | n/a | 5.578 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | 6.082 | 10.214 | 29.432 | 0.103 | 0.951 | 33.535 | n/a | 5.509 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | 6.066 | 10.151 | 29.475 | 0.104 | 0.951 | 34.341 | n/a | 5.538 |

| Anzhc MS-LC-EQ-D-VR VAE B4 | 5.839 | 9.818 | 29.788 | 0.112 | 0.9535 | 35.762 | n/a | 5.260 |

| Anzhc MS-LC-EQ-D-VR VAE B5 | 5.8117 | 9.7627 | 29.8545 | 0.1112 | 0.9538 | 36.5573 | 0.0080 | 4.963894 |

| Anzhc MS-LC-EQ-D-VR VAE B7 | 5.7046 | 9.5975 | 30.0106 | 0.0980 | 0.9553 | 39.4477 | 0.0071 | 4.017592 |

| VAE FLUX | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS-SSIM ↑ | KL ↓ | CONSISTENCY ↓ | rFID ↓ |

|---|---|---|---|---|---|---|---|---|

| FLUX VAE | 4.1471 | 6.2940 | 33.3887 | 0.0209 | 0.9868 | 12.1461 | 0.0077 | 0.564150 |

| MS-LC-EQ-D-VR VAE FLUX | 3.799 | 6.077 | 33.807 | 0.032 | 0.986 | 10.992 | — | 1.692 |

| Flux EQ v2 B1 | 3.4560 | 5.5851 | 34.6641 | 0.0281 | 0.9884 | 11.4340 | 0.0040 | 0.686061 |

Noise in latents

| VAE SDXL | Noise ↓ |

|---|---|

| sdxl_vae | 27.508 |

| Kohaku EQ-VAE | 17.395 |

| Anzhc MS-LC-EQ-D-VR VAE | 15.527 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | 13.914 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | 13.124 |

| Anzhc MS-LC-EQ-D-VR VAE B4 | 12.354 |

| Anzhc MS-LC-EQ-D-VR VAE B5 | 11.846 |

| Anzhc MS-LC-EQ-D-VR VAE B7 | 12.1471 |

| VAE FLUX | Noise ↓ |

|---|---|

| FLUX VAE | 10.499 |

| MS‑LC‑EQ‑D‑VR VAE FLUX | 7.635 |

| Flux EQ v2 B1 | 8.5019 |

Results on a small benchmark of 434 Illustrations from Boorus

| VAE SDXL | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS-SSIM ↑ | KL ↓ | Consistency ↓ | RFID ↓ |

|---|---|---|---|---|---|---|---|---|

| sdxl_vae | 4.369 | 7.905 | 31.080 | 0.038 | 0.969 | 35.057 | 0.0079 | 5.088 |

| Kohaku EQ-VAE | 4.818 | 8.332 | 30.462 | 0.048 | 0.967 | 50.022 | n/a | 7.264 |

| Anzhc MS-LC-EQ-D-VR VAE | 4.351 | 7.902 | 30.956 | 0.062 | 0.970 | 36.724 | n/a | 6.239 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | 4.313 | 7.935 | 30.951 | 0.059 | 0.970 | 36.963 | n/a | 6.147 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | 4.323 | 7.910 | 30.977 | 0.058 | 0.970 | 37.809 | n/a | 6.075 |

| Anzhc MS-LC-EQ-D-VR VAE B4 | 4.140 | 7.617 | 31.343 | 0.058 | 0.971 | 39.057 | n/a | 5.670 |

| Anzhc MS-LC-EQ-D-VR VAE B5 | 4.0998 | 7.5481 | 31.4378 | 0.0569 | 0.9717 | 39.8600 | 0.0070 | 5.178428 |

| Anzhc MS-LC-EQ-D-VR VAE B7 | 3.9949 | 7.3784 | 31.6544 | 0.0508 | 0.9731 | 42.8447 | 0.0063 | 4.216971 |

| VAE FLUX | L1 ↓ | L2 ↓ | PSNR ↑ | LPIPS ↓ | MS-SSIM ↑ | KL ↓ | CONSISTENCY ↓ | rFID ↓ |

|---|---|---|---|---|---|---|---|---|

| FLUX VAE | 3.0600 | 4.7752 | 35.4400 | 0.0112 | 0.9905 | 12.4717 | 0.0079 | 0.669906 |

| MS-LC-EQ-D-VR VAE FLUX | 2.933 | 4.856 | 35.251 | 0.018 | 0.990 | 11.225 | — | 1.561 |

| Flux EQ v2 B1 | 2.4825 | 4.2776 | 36.6027 | 0.0132 | 0.9916 | 11.6388 | 0.0039 | 0.744904 |

Noise in latents

| VAE SDXL | Noise ↓ |

|---|---|

| sdxl_vae | 26.359 |

| Kohaku EQ-VAE | 17.314 |

| Anzhc MS-LC-EQ-D-VR VAE | 14.976 |

| Anzhc MS-LC-EQ-D-VR VAE B2 | 13.649 |

| Anzhc MS-LC-EQ-D-VR VAE B3 | 13.247 |

| Anzhc MS-LC-EQ-D-VR VAE B4 | 12.652 |

| Anzhc MS-LC-EQ-D-VR VAE B5 | 12.217 |

| Anzhc MS-LC-EQ-D-VR VAE B7 | 12.3996 |

| VAE FLUX | Noise ↓ |

|---|---|

| FLUX VAE | 9.913 |

| MS‑LC‑EQ‑D‑VR VAE FLUX | 7.723 |

| Flux EQ v2 B1 | 8.4004 |

KL loss suggests that this VAE implementation is much closer to SDXL, and likely will be a better candidate for further finetune, but that is just a theory.

B2 further improves latent clarity, while maintaining same or better performance. Particularly improved very fine texture handling, which previously would be overcorrected into smooth surface. Performs better in such cases now.

B3 cleans them up ever more, but at that point visually they are +- same.

B4 Moar.

B5 MOAR. (also benchmarked with padding added, so results are overall a tiny bit more consistent due to fixed edges)

B6-7 Concentration on improving details. Previous runs were clearing latents up as much as possible, now target is to preserve and improve, while allowing model to still change latents to accommodate for new details in a clear way.

References

[1] [2502.09509] EQ-VAE: Equivariance Regularized Latent Space for Improved Generative Image Modeling

[2] [2506.07863] VIVAT: VIRTUOUS IMPROVING VAE TRAINING THROUGH ARTIFACT MITIGATION

[3] sdxl-vae

Cite

@misc{anzhc_ms-lc-eq-d-vr_vae,

author = {Anzhc},

title = {MS-LC-EQ-D-VR VAE: another reproduction of EQ-VAE on cariable VAEs and then some},

year = {2025},

howpublished = {Hugging Face model card},

url = {https://huggingface.co/Anzhc/MS-LC-EQ-D-VR_VAE},

note = {Finetuned SDXL-VAE with EQ regularization and more, for improved latent representation.}

}

Acknowledgement

My friend Bluvoll, for no particular reason.

- Downloads last month

- 7,871