T2V works fine. I2V throws this error:

Given groups=1, weight of size [5120, 36, 1, 2, 2], expected input[1, 32, 21, 96, 96] to have 36 channels, but got 32 channels instead
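The numbers in that message describe a Conv3d layer. The weight [5120, 36, 1, 2, 2] has 5120 output channels, 36 input channels and a 1×2×2 kernel. The input is laid out as [batch, channels, latent frames, height, width]. The sketch below reads those shapes out of the message. It assumes a Wan 2.1-style I2V input made of 16 noise-latent channels, 4 mask channels and 16 encoded-image channels (36 in total). That breakdown is an assumption and is not stated anywhere in this thread. The helper names are hypothetical. Under that assumption, a 4-channel shortfall would match the mask channels. That fits the later reports here that the node building the conditioning was at fault rather than the VAE, but it does not prove it.

```python
import re
from typing import Dict, List

# ASSUMPTION: Wan 2.1-style I2V input layout, concatenated on the channel dim.
ASSUMED_I2V_LAYOUT = {"noise_latent": 16, "frame_mask": 4, "image_latent": 16}

_PATTERN = re.compile(
    r"weight of size \[(?P<weight>[\d, ]+)\], expected input\[(?P<input>[\d, ]+)\]"
)


def parse_conv_error(msg: str) -> Dict[str, object]:
    """Hypothetical helper: extract shapes from a PyTorch conv channel-mismatch error."""
    m = _PATTERN.search(msg)
    if m is None:
        raise ValueError("not a conv weight/input shape error")
    weight = [int(v) for v in m.group("weight").split(",")]
    inp = [int(v) for v in m.group("input").split(",")]
    return {
        "expected_channels": weight[1],  # in_channels of the conv weight (groups=1)
        "got_channels": inp[1],          # C in [N, C, T, H, W]
        "latent_frames": inp[2],
        "latent_hw": (inp[3], inp[4]),
    }


def unexplained_components(got: int) -> List[str]:
    """Assumed layout components whose channel count equals the shortfall."""
    deficit = sum(ASSUMED_I2V_LAYOUT.values()) - got
    return [name for name, ch in ASSUMED_I2V_LAYOUT.items() if ch == deficit]


msg = ("Given groups=1, weight of size [5120, 36, 1, 2, 2], expected input"
       "[1, 32, 21, 96, 96] to have 36 channels, but got 32 channels instead")
info = parse_conv_error(msg)
print(info["expected_channels"], info["got_channels"])
print(unexplained_components(info["got_channels"]))
```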

Using the fp8 scaled versions on a 3090, btw.

Same problem. I tried various combinations of VAEs (there's a WAN 2.1 and a WAN 2.2 VAE in the repo) and text encoders; same issue. I used the default ComfyUI workflow from Browse Templates, with the exact files downloaded from the links in the "notes" node. Windows 10, RTX 3090, 128GB RAM, freshly updated ComfyUI Portable.

Facing the same issue; has anyone solved it?

I am using an A5000 with 24 GB VRAM and 128 GB RAM.

Same here on an RTX 5060 Ti with 16 GB VRAM:

Given groups=1, weight of size [5120, 36, 1, 2, 2], expected input[1, 32, 31, 90, 160] to have 36 channels, but got 32 channels instead

For the VAE, I tried the new 2.1, the 2.2 and every other WAN VAE I have; same issue.
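The latent shapes in the two error messages fit a VAE that compresses video 4× in time and 8× in space. The first frame is kept and the remaining frames are compressed 4-fold. Under that assumption, [21, 96, 96] would correspond to 81 frames at 768×768, and [31, 90, 160] to 121 frames at 1280×720. These input sizes are inferred from the shapes and were not reported by the posters. The compression factors are an assumption about the Wan 2.1 VAE. The function name is hypothetical. A minimal sketch of the arithmetic:

```python
from typing import Tuple


def wan21_latent_shape(frames: int, height: int, width: int,
                       t_stride: int = 4, s_stride: int = 8) -> Tuple[int, int, int]:
    """Hypothetical helper: latent (frames, height, width) for a video.

    ASSUMPTION: a causal VAE that keeps the first frame and compresses the
    remaining frames t_stride-fold in time and s_stride-fold in space.
    """
    latent_frames = (frames - 1) // t_stride + 1
    return latent_frames, height // s_stride, width // s_stride


print(wan21_latent_shape(81, 768, 768))
print(wan21_latent_shape(121, 720, 1280))
```

The channel count is deliberately left out. It depends on the model's input layout, not on the video size, so matching frames and resolution alone cannot explain a 32 vs 36 mismatch.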

Did you guys try the original split models?

What do you mean by original? My models are from:

from https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/tree/main/split_files/diffusion_models

T2V works.

As for the original split models: I used the Comfy version.

This error means you don't have the latest ComfyUI.

Well, then update the desktop version ;-) Thanks.

I literally updated ComfyUI with update_comfyui.bat before trying these new models. That didn't fix it, so I ran update_comfyui_and_python_dependencies.bat, which still didn't fix the issue. Then I went into ComfyUI Manager and forced an update of all nodes. Finally I ran both ComfyUI update scripts again, just in case.

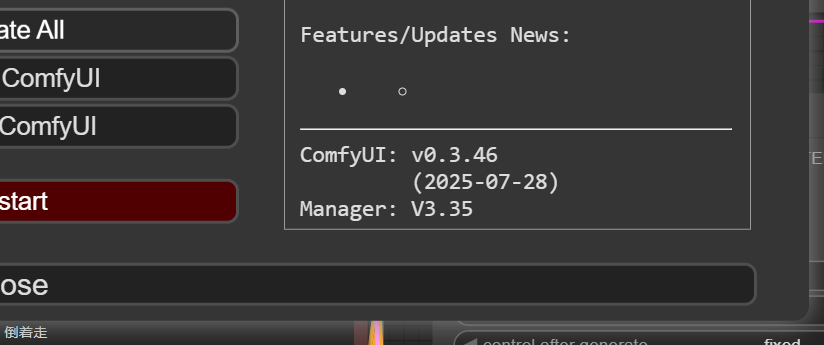

Here's my version:

ComfyUI: v0.3.46-1-gc60dc417 (2025-07-28)

Manager: V3.35

How exactly do I get the latest version if the update script doesn't do it?

Which VAE is selected in your workflow? I had a similar error trying I2V, but it was resolved by downloading and enabling wan_2.1_vae instead of the 2.2 one.

I also updated my entire ComfyUI by renaming the old install, cloning the latest from GitHub (git clone, NOT a release download) and creating a new virtual environment. This means reinstalling custom nodes, but this fresh method is working for me.

I've tried all three: the 2.1 VAE from the original WAN 2.1 repo, the 2.1 VAE from the WAN 2.2 repo, and the 2.2 VAE from the WAN 2.2 repo. None of them work, so it's not a VAE issue. As best I can tell, the WanImageToVideo node has a problem: it produces 32 channels instead of the 36 the model expects.

It's strange to me that updating ComfyUI brought in the new workflow template but not the updated node that actually works.

Is the 5B model correctly implemented? It feels too slow... Maybe the VAE needs fixing?

Finally figured it out. The update script is broken, or at least the version from April 25th 2025 is. I downloaded the latest ComfyUI Portable and ran its update script. WAN 2.2 I2V now works with the default workflow from the templates. It's the "same version" as my other install, but the new one actually works. It is SLOOOooooowwwwww. It's only using 19GB of my 24GB VRAM, yet it says the model is only partially loaded, even though none of the "shared VRAM" is being used. Apparently 116 frames @ 1280x720 is going to take 1.5 hours × 2 (3 hours total) on an RTX 3090.

....aaaaand installing Triton and SageAttention broke it. This was a clean install of the latest ComfyUI Portable. Before that, I had only run the update script and installed ComfyUI Manager.

This breaks the WAN 2.2 I2V functionality, giving this error: Given groups=1, weight of size [5120, 36, 1, 2, 2], expected input[1, 32, 21, 96, 96] to have 36 channels, but got 32 channels instead

This is how I installed Sage and Triton.

Excerpts from the Triton/Sage install script:

```bat
winget install --id Microsoft.VisualStudio.2022.BuildTools -e --source winget --override "--quiet --wait --norestart --add Microsoft.VisualStudio.Component.VC.Tools.x86.x64 --add Microsoft.VisualStudio.Component.Windows10SDK.20348"
python_embeded\python.exe -m pip install torch==2.7.0 torchvision torchaudio --index-url https://download.pytorch.org/whl/cu%cuda_version:.=%
python_embeded\python.exe -m pip install -U --pre triton-windows
git clone https://github.com/thu-ml/SageAttention
python_embeded\python.exe -s -m pip install -e SageAttention
```
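In the torch install line, %cuda_version:.=% is cmd.exe substring replacement. It expands the cuda_version variable with every "." removed, so the index URL ends in a tag like cu128. The excerpt does not show what cuda_version is set to. "12.8" below is only an example, and the function name is hypothetical. A Python equivalent of that expansion:

```python
def pytorch_index_url(cuda_version: str) -> str:
    """Hypothetical helper mirroring the batch expansion cu%cuda_version:.=%.

    Every '.' is stripped from the CUDA version, so "12.8" becomes "cu128".
    """
    return "https://download.pytorch.org/whl/cu" + cuda_version.replace(".", "")


print(pytorch_index_url("12.8"))
```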

Had the "Given groups=1, weight of size [5120, 36, 1, 2, 2], expected input[1, 32, 21, 96, 96] to have 36 channels, but got 32 channels instead" error and spent several hours trying to resolve it. The solution for me was to delete the "WanImageToVideo (Flow2)" custom node from the custom_nodes directory and restart ComfyUI. Now everything works as it should. Very strange: the cursed "WanImageToVideo (Flow2)" node wasn't even in any of the workflows I was using with Wan 2.2.

I'll give that a try just to test. I've done a fresh install of ComfyUI Portable, followed BlackMixture's Sage and Triton install instructions, and everything is working now. It's entirely possible I did something screwy with my old install.

The fp16 versions are faster than the fp8 scaled ones for me on my 3090. I use https://github.com/kijai/ComfyUI-WanVideoWrapper/blob/main/example_workflows/wanvideo2_2_I2V_A14B_example_WIP.json with base_precision fp16_fast, quantization disabled and blockswap set to 20. With torch compile it takes around 20-30 seconds per step at 81 frames; with the fp8 scaled ones it was 60 seconds. My understanding is that before the 40xx GPUs, torch treats bf16 as fp32 in place, so something like that may be happening. As a side note for 3090 homies, I recommend passing the CLI arg --fp32-vae, because by default ComfyUI uses bf16 for the VAE.

Using fp8_scaled and the ComfyUI template workflow video_wan2_2_14B_i2v, it took 2.5 minutes per step. With fp16 it takes ~2.0 minutes per step.

Also for 3090 homies:

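```python
import torch
from safetensors.torch import load_file, save_file


def convert_bf16_to_fp16(input_path, output_path):
    """Load a .safetensors file, cast every bf16 tensor to fp16, and save it."""
    state_dict = load_file(input_path)

    converted = {}
    for name, tensor in state_dict.items():
        if tensor.dtype == torch.bfloat16:
            # bf16 -> fp32 is exact; fp32 -> fp16 turns values outside
            # the fp16 range (about +/-65504) into inf.
            tensor = tensor.to(torch.float32).to(torch.float16)
        # Tensors in any other dtype are copied through unchanged.
        converted[name] = tensor

    # Note: file-level metadata from the input is not carried over.
    save_file(converted, output_path)
```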

A lot of LoRAs are bf16.
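One caveat with that converter is that fp16 covers a much narrower range than bf16. The largest finite fp16 value is 65504, versus roughly 3.4e38 for bf16. Very large weights therefore become inf, and very small ones can flush to zero. LoRA weights are usually small in magnitude, but that is an assumption worth checking for your own files. A stdlib sketch of the per-value check, using struct's half-precision "e" format (survives_fp16 is a hypothetical helper):

```python
import struct


def survives_fp16(x: float) -> bool:
    """Hypothetical helper: True if x fits in IEEE fp16 without overflowing
    (largest finite fp16 is 65504) and, when nonzero, without flushing to zero."""
    try:
        packed = struct.pack("<e", x)  # raises OverflowError when out of range
    except OverflowError:
        return False
    roundtrip = struct.unpack("<e", packed)[0]
    return not (x != 0.0 and roundtrip == 0.0)


for value in (0.5, 1e-3, 7e4, 1e-10):
    print(value, survives_fp16(value))
```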

Deleting the "WanImageToVideo (Flow2)" node was the fix for me as well! Thanks for finding this. I needed to move the flow2-wan-video folder out of the custom_nodes folder. But even with GGUF models, I2V is very, very slow.
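Moving the folder out of custom_nodes, rather than deleting it, keeps it easy to restore. Below is a minimal sketch of that step. The folder name flow2-wan-video comes from the post above. The backup directory name, the example install path and the function name are hypothetical.

```python
import shutil
from pathlib import Path
from typing import Optional


def park_custom_node(comfy_root: Path, folder: str = "flow2-wan-video",
                     backup_dir: str = "custom_nodes_parked") -> Optional[Path]:
    """Hypothetical helper: move custom_nodes/<folder> to a sibling directory
    outside custom_nodes so ComfyUI no longer loads it.

    Returns the new location, or None if the folder is not present.
    """
    src = comfy_root / "custom_nodes" / folder
    if not src.is_dir():
        return None
    dest_parent = comfy_root / backup_dir  # hypothetical backup location
    dest_parent.mkdir(exist_ok=True)
    dest = dest_parent / folder
    if dest.exists():
        raise FileExistsError(f"{dest} already exists; remove it first")
    return Path(shutil.move(str(src), str(dest)))
```

For example, park_custom_node(Path(r"C:\ComfyUI_windows_portable\ComfyUI")) (hypothetical install path), then restart ComfyUI. To undo it, move the folder back into custom_nodes.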

I upgraded from 0.3.43 to 0.3.48 and the problem no longer occurs.
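The version string quoted earlier in the thread, v0.3.46-1-gc60dc417, is in git-describe form: the tag, the number of commits since that tag, and the abbreviated commit hash. That install was therefore still below 0.3.48. Here is a small sketch for comparing such strings. The 0.3.48 threshold comes only from this single report, not from a changelog, and the helper names are hypothetical.

```python
import re
from typing import Tuple


def parse_comfy_version(describe: str) -> Tuple[int, ...]:
    """Hypothetical helper: (major, minor, patch) from strings like
    'v0.3.46-1-gc60dc417' (git-describe style) or '0.3.48'."""
    m = re.match(r"v?(\d+)\.(\d+)\.(\d+)", describe.strip())
    if m is None:
        raise ValueError(f"unrecognized version string: {describe!r}")
    return tuple(int(part) for part in m.groups())


def at_least(describe: str, minimum: Tuple[int, ...] = (0, 3, 48)) -> bool:
    """True if the version is >= minimum (threshold taken from one user report)."""
    return parse_comfy_version(describe) >= minimum


print(at_least("v0.3.46-1-gc60dc417"))
```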