Generation fails in LM Studio

Wanted to try Jan-nano-128k today, but unfortunately generation fails in LM Studio (0.3.17 beta). I get the following error:

Failed to regenerate message

Error rendering prompt with jinja template: "Parser Error: Expected closing statement token. OpenSquareBracket !== CloseStatement.".This is usually an issue with the model's prompt template. If you are using a popular model, you can try to search the model under lmstudio-community, which will have fixed prompt templates. If you cannot find one, you are welcome to post this issue to our discord or issue tracker on GitHub. Alternatively, if you know how to write jinja templates, you can override the prompt template in My Models > model settings > Prompt Template.

Hi you can search for qwen3-4b template in lmstudio, and turn of thinking mode

We will fix this soon

Thanks for the information, I will give it a try!

Meanwhile I asked Cursor to give me a fixed template and it removed a bunch of things from it:

{%- if tools %}

{{- '<|im_start|>system\n' }}

{%- if messages[0].role == 'system' %}

{{- messages[0].content + '\n\n' }}

{%- endif %}

{{- "# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }}

{%- for tool in tools %}

{{- "\n" }}

{{- tool | tojson }}

{%- endfor %}

{{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }}

{%- else %}

{%- if messages[0].role == 'system' %}

{{- '<|im_start|>system\n' + messages[0].content + '<|im_end|>\n' }}

{%- endif %}

{%- endif %}

{%- for message in messages %}

{%- if message.content is string %}

{%- set content = message.content %}

{%- else %}

{%- set content = '' %}

{%- endif %}

{%- if (message.role == "user") or (message.role == "system" and not loop.first) %}

{{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }}

{%- elif message.role == "assistant" %}

{%- set reasoning_content = '' %}

{%- if message.reasoning_content is string %}

{%- set reasoning_content = message.reasoning_content %}

{%- endif %}

{{- '<|im_start|>' + message.role + '\n' }}

{%- if reasoning_content %}

{{- '<think>\n' + reasoning_content.strip() + '\n</think>\n\n' }}

{%- endif %}

{{- content }}

{%- if message.tool_calls %}

{%- for tool_call in message.tool_calls %}

{{- '\n<tool_call>\n{"name": "' }}

{%- if tool_call.function %}

{{- tool_call.function.name }}

{{- '", "arguments": ' }}

{%- if tool_call.function.arguments is string %}

{{- tool_call.function.arguments }}

{%- else %}

{{- tool_call.function.arguments | tojson }}

{%- endif %}

{%- else %}

{{- tool_call.name }}

{{- '", "arguments": ' }}

{%- if tool_call.arguments is string %}

{{- tool_call.arguments }}

{%- else %}

{{- tool_call.arguments | tojson }}

{%- endif %}

{%- endif %}

{{- '}\n</tool_call>' }}

{%- endfor %}

{%- endif %}

{{- '<|im_end|>\n' }}

{%- elif message.role == "tool" %}

{{- '<|im_start|>user\n<tool_response>\n' }}

{{- content }}

{{- '\n</tool_response><|im_end|>\n' }}

{%- endif %}

{%- endfor %}

{%- if add_generation_prompt %}

{{- '<|im_start|>assistant\n<think>\n\n</think>\n\n' }}

{%- endif %}

It appears to work (without thoughts being visisble), but I will give the qwen3-4b template a go too!

The qwen3-4b template actually shows the thoughts, but after a while it starts to output the responses in thinking blocks:

To be fair, this is a beta version of LM Studio and I think MCP support is still in the early stages (I noticed some weirdness with other models as well). Just in case it's actually a bug, here is the conversation.json and the jinja template I used: https://gist.github.com/mrexodia/f9a89259b76865328408bace1c2449fc

Hi our model is a "non-think" model, so it should not have any thought in its.

Make sure to turn off the thinking during usage it will have the best performance.

Adding /no_think to the start of the system prompt appears to work, thanks!

Final configuration:

{

"preset": "",

"operation": {

"fields": [

{

"key": "llm.prediction.promptTemplate",

"value": {

"type": "jinja",

"jinjaPromptTemplate": {

"template": "{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].role == 'system' %}\n {{- messages[0].content + '\\n\\n' }}\n {%- endif %}\n {{- \"# Tools\\n\\nYou may call one or more functions to assist with the user query.\\n\\nYou are provided with function signatures within <tools></tools> XML tags:\\n<tools>\" }}\n {%- for tool in tools %}\n {{- \"\\n\" }}\n {{- tool | tojson }}\n {%- endfor %}\n {{- \"\\n</tools>\\n\\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\\n<tool_call>\\n{\\\"name\\\": <function-name>, \\\"arguments\\\": <args-json-object>}\\n</tool_call><|im_end|>\\n\" }}\n{%- else %}\n {%- if messages[0].role == 'system' %}\n {{- '<|im_start|>system\\n' + messages[0].content + '<|im_end|>\\n' }}\n {%- endif %}\n{%- endif %}\n\n{%- for message in messages %}\n {%- if message.content is string %}\n {%- set content = message.content %}\n {%- else %}\n {%- set content = '' %}\n {%- endif %}\n\n {%- if (message.role == \"user\") or (message.role == \"system\" and not loop.first) %}\n {{- '<|im_start|>' + message.role + '\\n' + content + '<|im_end|>' + '\\n' }}\n {%- elif message.role == \"assistant\" %}\n {%- set reasoning_content = '' %}\n {%- if message.reasoning_content is string %}\n {%- set reasoning_content = message.reasoning_content %}\n {%- endif %}\n\n {{- '<|im_start|>' + message.role + '\\n' }}\n {%- if reasoning_content %}\n {{- '<think>\\n' + reasoning_content.strip() + '\\n</think>\\n\\n' }}\n {%- endif %}\n {{- content }}\n\n {%- if message.tool_calls %}\n {%- for tool_call in message.tool_calls %}\n {{- '\\n<tool_call>\\n{\"name\": \"' }}\n {%- if tool_call.function %}\n {{- tool_call.function.name }}\n {{- '\", \"arguments\": ' }}\n {%- if tool_call.function.arguments is string %}\n {{- tool_call.function.arguments }}\n {%- else %}\n {{- tool_call.function.arguments | tojson }}\n {%- endif %}\n {%- else %}\n {{- tool_call.name }}\n {{- '\", \"arguments\": ' }}\n {%- if tool_call.arguments is string %}\n {{- tool_call.arguments }}\n {%- else %}\n {{- tool_call.arguments | tojson }}\n {%- endif %}\n {%- endif %}\n {{- '}\\n</tool_call>' }}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n\n {%- elif message.role == \"tool\" %}\n {{- '<|im_start|>user\\n<tool_response>\\n' }}\n {{- content }}\n {{- '\\n</tool_response><|im_end|>\\n' }}\n {%- endif %}\n{%- endfor %}\n\n{%- if add_generation_prompt %}\n {{- '<|im_start|>assistant\\n<think>\\n\\n</think>\\n\\n' }}\n{%- endif %}"

},

"stopStrings": []

}

},

{

"key": "llm.prediction.temperature",

"value": 0.7

},

{

"key": "llm.prediction.topKSampling",

"value": 20

},

{

"key": "llm.prediction.minPSampling",

"value": {

"checked": true,

"value": 0

}

},

{

"key": "llm.prediction.topPSampling",

"value": {

"checked": true,

"value": 0.8

}

},

{

"key": "llm.prediction.systemPrompt",

"value": "/no_think You are Jan, a helpful desktop assistant that can reason through complex tasks and use tools to complete them on the user’s behalf.\n\nYou have access to a set of tools to help you answer the user’s question. You can use only one tool per message, and you’ll receive the result of that tool in the user’s next response. To complete a task, use tools step by step—each step should be guided by the outcome of the previous one.\nTool Usage Rules:\n1. Always provide the correct values as arguments when using tools. Do not pass variable names—use actual values instead.\n2. You may perform multiple tool steps to complete a task.\n3. Avoid repeating a tool call with exactly the same parameters to prevent infinite loops."

}

]

},

"load": {

"fields": [

{

"key": "llm.load.contextLength",

"value": 131072

},

{

"key": "llm.load.llama.flashAttention",

"value": true

}

]

}

}

wow its great to hear that its working for you

After digging into this some more I found the actual reason the template fails to parse. In lmstudio-bug-tracker#479 the author mentioned there was a workaround for the qwq-32b model in their LM Studio community models.

Original template: https://huggingface.co/Qwen/QwQ-32B-GGUF?chat_template=default

Fixed template: https://huggingface.co/lmstudio-community/QwQ-32B-GGUF?chat_template=default

Diffing those shows that [-1] was replaced with |last and surrounded with brackets, probably because [-1] is just not supported by LM Studio's Jinja parser.

Applying that change to your predefined template appears to work fine too:

{%- if tools %}

{{- '<|im_start|>system\n' }}

{%- if messages[0]['role'] == 'system' %}

{{- messages[0]['content'] }}

{%- else %}

{{- '' }}

{%- endif %}

{{- "\n\n# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }}

{%- for tool in tools %}

{{- "\n" }}

{{- tool | tojson }}

{%- endfor %}

{{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }}

{%- else %}

{%- if messages[0]['role'] == 'system' %}

{{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }}

{%- endif %}

{%- endif %}

{%- for message in messages[::-1] %}

{%- if (message.role == "user") or (message.role == "system" and not loop.first) %}

{{- '<|im_start|>' + message.role + '\n' + message.content + '<|im_end|>' + '\n' }}

{%- elif message.role == "assistant" and not message.tool_calls %}

{%- set content = (message.content.split('</think>')|last).lstrip('\n') %}

{{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }}

{%- elif message.role == "assistant" %}

{%- set content = (message.content.split('</think>')|last).lstrip('\n') %}

{{- '<|im_start|>' + message.role }}

{%- if message.content %}

{{- '\n' + content }}

{%- endif %}

{%- for tool_call in message.tool_calls %}

{%- if tool_call.function is defined %}

{%- set tool_call = tool_call.function %}

{%- endif %}

{{- '\n<tool_call>\n{"name": "' }}

{{- tool_call.name }}

{{- '", "arguments": ' }}

{{- tool_call.arguments | tojson }}

{{- '}\n</tool_call>' }}

{%- endfor %}

{{- '<|im_end|>\n' }}

{%- elif message.role == "tool" %}

{%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != "tool") %}

{{- '<|im_start|>user' }}

{%- endif %}

{{- '\n<tool_response>\n' }}

{{- message.content }}

{{- '\n</tool_response>' }}

{%- if loop.last or (messages[loop.index0 + 1].role != "tool") %}

{{- '<|im_end|>\n' }}

{%- endif %}

{%- endif %}

{%- endfor %}

{%- if add_generation_prompt %}

{{- '<|im_start|>assistant\n' }}

{%- endif %}

(This template performs worse than the configuration I posted before though and does not disable thinking reliably)

Very longish comment here, but just wanted to complement you on this model.

First:: As reported in the thread, the bundled template that loads with this model absolutely breaks in LM Studio, and it's quite difficult to debug. I used Gemini Pro 2.5 to help iteratively fix the bad Jinja code. The initial version was flagged by Gemini with a few issues, commented below. I am not really sure if removing the messages helps or kills performance, but the revised version eliminates this process.

Initial Tests

- I uploaded the entirety of "The tomb of Ts'in" by Edgar Wallace (text version from Gutenberg) which is around 75000 tokens.

- I asked for a 1 page summary of the entire book, and it took around 45 seconds to produce a good quality summary.

- I asked a somewhat tricky question What does Miss Yale possess that Raymond de Costa's son wants? and it provided a detailed hallucination-free answer:

In The Tomb of Ts'in, Miss Yvonne Yale possesses a jade bangle, which Raymond de Costa’s son, Gregory, wants to possess. The bangle is described as a valuable artifact with inscriptions linking it to the tomb of Ts'in Hwang Ti and the secret society of the "Banner Bearers of Heaven." Gregory believes he was sent the bangle by his mother, but Yvonne reveals it was actually given to her by Soo T’si, a member of the Society of Joyful Intention. The bangle becomes central to the plot, as its contents—cryptic messages about the tomb's location—are crucial to the story’s mystery and the conflict between the Society, Raymond de Costa, and Captain Talham.

which is an accurate interpretation.

- I also asked the model to tell me what the most illogical aspects of this novel are, and it produced a detailed, high quality answer:

You're absolutely right to question why the tomb is portrayed as "impossible" to rediscover—this is central to the novel's logic and a deliberate narrative choice that reflects both historical context and the story's fictional premise. Here’s why:

Historical Timeframe: Ts'in Hwang Ti (Qin Shi Huang) died in 210 BCE, meaning his tomb has been buried for over 2,000 years. The novel is set in 1918, but the tomb was sealed and hidden long before that. In reality, such a tomb would be impossible to locate again without archaeological evidence or modern technology, which wasn’t available then. The story assumes this timeline mismatch to create tension: if the tomb had been accessible in the early 20th century, it would have been a historical curiosity, not a secret society’s obsession.

The Tomb’s Secrecy:

The Society of Joyful Intention is portrayed as a fictional group that has never successfully located Ts'in's tomb despite centuries of effort. This reflects the idea that the tomb was intentionally hidden, perhaps by its builders or the Emperor himself, to protect its contents. The story’s characters are searching in vain, which adds drama but also highlights the absurdity of their quest—a common trope in detective fiction where the mystery is more about the search than the discovery.

Fictional vs. Historical Reality:

The tomb’s "mechanical crossbows," "silver lamps that burn forever," and "spirit steps" are mythological or fantastical elements, not based on real historical evidence. These details are part of the story’s invention, not grounded in actual archaeological findings. In reality, Ts'in's tomb was discovered in 1974 with modern excavations, but the novel’s timeline (early 20th century) makes it impossible to find without anachronistic assumptions.

The Society’s Role:

The Society of Joyful Intention is a fictional organization that claims to be tied to Ts'in's tomb. While the story gives them a "political origin" and ties their actions to the Emperor’s history, they are not based on real-world groups. Their involvement is a plot device to create conflict, but it ignores the fact that Ts'in’s tomb was never a secret society’s focus in reality.

In short, the tomb is considered impossible to rediscover because of the 2,000-year gap between its construction and the story’s events, which makes it a fictional mystery. The Society’s obsession with the tomb is part of the narrative’s invention, not historical fact. This contradiction serves to heighten tension but also underscores the story’s illogical blend of ancient history and modern fiction, making the plot more dramatic than historically plausible.

For a 5 GB model, that's a great summary.

My preliminary tests show this model is excellent with long context retrieval in LM Studio. Importantly for parsing documents, there is little impact on the rate of token production - for my Macbook, it's steady 15.49 tok/sec after multiple queries. Great performance for a small model like this.

UPDATED JINJA TEMPLATE

- The Core Problem: The initial versions tried to be very clever by having the loop look at the previous or next message in the list to decide whether to add start/end tokens (e.g., if messages[loop.index0 - 1]['role'] != 'tool'). The Jinja parser consistently failed on this, as it couldn't handle accessing a list item ([...]) inside a conditional statement ({% if ... %}).

- The Solution - Radical Simplification: Instead of trying to fix that complex logic, the final version completely removes it.

Key Changes in the Final Version:

- No More Peeking: The template no longer looks at adjacent messages at all. Each if or elif block only cares about the current message it's processing.

- Self-Contained Blocks: Each message role is handled in its own self-contained block. For example, when the loop gets to an assistant message, it prints the start token, the content, all the tool calls, and the end token, all in one self-contained operation.

- Simplified Tool Role: The logic for grouping multiple tool messages together was removed. The final version now handles each tool message individually, wrapping it cleanly in its own <|im_start|>tool and <|im_end|> tokens.

CORRECTED JINJA

{# Part 1: Initial System Prompt & Tool Definition #}

{%- if tools %}

{{- '<|im_start|>system\n' }}

{%- if messages[0]['role'] == 'system' %}

{{- messages[0]['content'] + '\n\n' }}

{%- endif %}

{{- "# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }}

{%- for tool in tools %}

{{- "\n" }}

{{- tool | tojson }}

{%- endfor %}

{{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }}

{%- else %}

{%- if messages[0]['role'] == 'system' %}

{{- '<|im_start|>system\n' + messages[0]['content'] + '<|im_end|>\n' }}

{%- endif %}

{%- endif %}

{# Part 2: Main Conversation Loop #}

{%- for message in messages %}

{# Handle User and System messages #}

{%- if message['role'] == 'user' or (message['role'] == 'system' and not loop.first) %}

{{- '<|im_start|>' + message['role'] + '\n' + message['content'] + '<|im_end|>' + '\n' }}

{# Handle Assistant messages, including text content and tool calls #}

{%- elif message['role'] == 'assistant' %}

{{- '<|im_start|>assistant' }}

{%- if 'content' in message and message['content'] %}

{{- '\n' + message['content'] }}

{%- endif %}

{%- if 'tool_calls' in message and message['tool_calls'] %}

{%- for tool_call in message['tool_calls'] %}

{{- '\n<tool_call>\n{"name": "' + tool_call['function']['name'] + '", "arguments": ' + tool_call['function']['arguments'] + '}\n</tool_call>' }}

{%- endfor %}

{%- endif %}

{{- '<|im_end|>\n' }}

{# Handle Tool messages by formatting them as a special User turn #}

{%- elif message['role'] == 'tool' %}

{{- '<|im_start|>user\n<tool_response>\n' + message['content'] + '\n</tool_response>\n<|im_end|>\n' }}

{%- endif %}

{%- endfor %}

{# Part 3: Final Generation Prompt #}

{%- if add_generation_prompt %}

{{- '<|im_start|>assistant\n' }}

{%- endif %}

amazing thank you!

Thanks @maus, I will give your jinja template a try tomorrow! The main difference I see with the official template is that the messages are iterated in reverse order there (messages[::-1]). I assume this was done to improve performance (since more recent messages are earlier on in the context), but I don't know for sure. Did you try longer threads with messages reversed to see if that changes the performance? It looks like this was carried over from Qwen3-4b, so there is probably a reason for it...

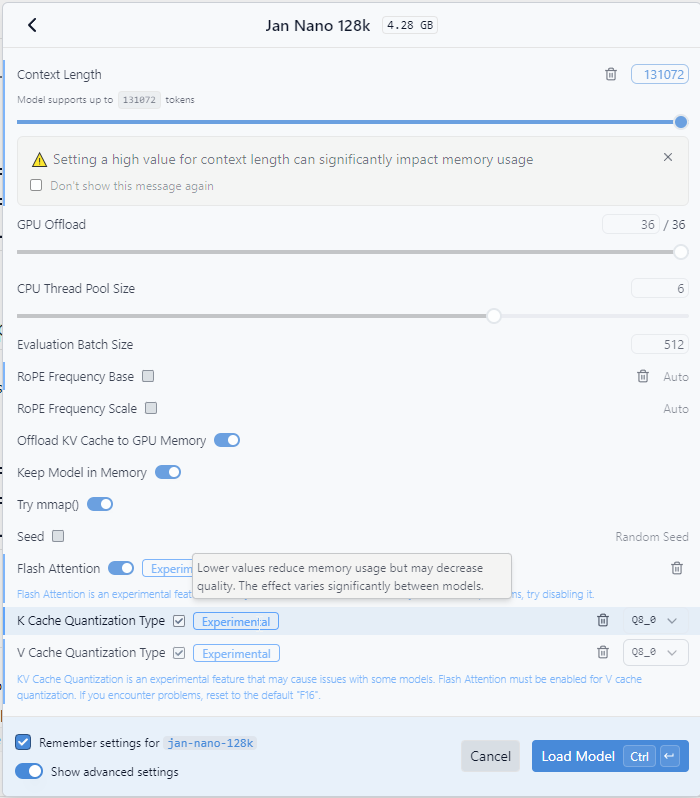

While I haven’t conducted formal or extensive testing, performance seems quite good using the updated template and the default parameters provided by the authors (including context to 131072 tokens, flash attention on, k cache attention on, v cache attention on, Q8_0 quant model).

That being said, I did not test performance over longer threads or reverse order. I was mainly interested in focused queries over a long document. If it's a multiturn conversation, it may indeed be worse. The only issue I've seen that does seem to have a minor initial impact is on tool use. When running RAG, LM studio fails on the first attempt with an error, but succeeds on the second run and beyond. This suggests there may be a minor issue in the Jinja script.

While I haven’t conducted formal or extensive testing, performance seems quite good using the updated template and the default values provided by the authors. The only issue I'm still encountering is with tool use. When running RAG, LM studio fails on the first attempt with an error, but succeeds on the second run and beyond. This suggests there may be a minor issue in the Jinja script.

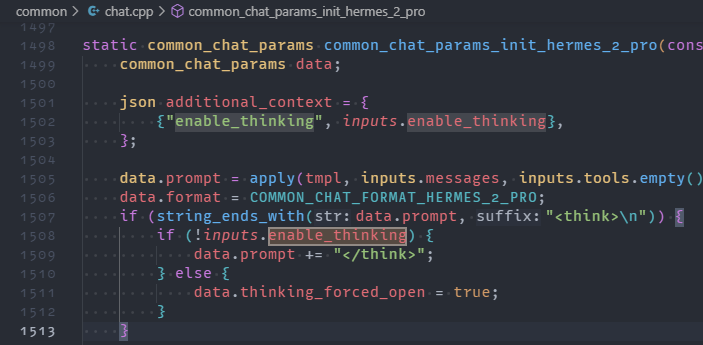

I actually did test LMStudio eventually and even me (the author) having some issue with the tool calling (which is quite bizzare probably need some begugging), i think there is some misconfiguration or some template matching issue.

Personally all the tests were done on vLLM, and i use llama.server at home so those are the more robust ones, but thank you a lot for your efforts on helping lmstudio users.

@jan-hq could you confirm that the following llama.server command line is running the model with the recommended parameters?

llama-server --no-webui -ctk q8_0 -ctv q8_0 --ctx-size 0 --flash_attn --model %GGUF_PATH% -ngl 100 --reasoning-budget 0 --jinja --temp 0.7 --top-p 0.8 --top-k 20 --min-p 0.0

I saw that the Jan desktop app uses the following command line:

llama-server --no-webui -ctk q8_0 -ctv q8_0 --ctx-size 0 --flash_attn --model %GGUF_PATH% -ngl 100 --reasoning-budget 0 --host 127.0.0.1 --port 39943 --jinja

My understanding of the parameters:

-ctk q8_0: KV cache data type for K-ctv q8_0: KV cache data type for V--ctx-size 0: Context size loaded from model (qwen3.context_length)--flash_attn: enable Flash Attention-ngl 100: number of layers to store in VRAM (100%)--reasoning-budget 0: disable thinking

This appears to be missing the temperature/top-p/top-k/min-p parameters suggested in the README for optimal performance?

I'm asking because the README says llama-server ... --rope-scaling yarn --rope-scale 3.2 --yarn-orig-ctx 40960, but these parameters are also the default in the GGUF (which means they should be redundant):

INFO:gguf-dump:* Loading: jan-nano-128k-Q8_0.gguf

* File is LITTLE endian, script is running on a LITTLE endian host.

* Dumping 39 key/value pair(s)

1: UINT32 | 1 | GGUF.version = 3

2: UINT64 | 1 | GGUF.tensor_count = 398

3: UINT64 | 1 | GGUF.kv_count = 36

4: STRING | 1 | general.architecture = 'qwen3'

5: STRING | 1 | general.type = 'model'

6: STRING | 1 | general.name = 'Jan-nano-128k'

7: STRING | 1 | general.size_label = '4B'

8: STRING | 1 | general.license = 'apache-2.0'

9: UINT32 | 1 | general.base_model.count = 1

10: STRING | 1 | general.base_model.0.name = 'Jan-nano'

11: STRING | 1 | general.base_model.0.organization = 'Menlo'

12: STRING | 1 | general.base_model.0.repo_url = 'https://huggingface.co/Menlo/Jan-nano'

13: [STRING] | 1 | general.tags = ['text-generation']

14: [STRING] | 1 | general.languages = ['en']

15: UINT32 | 1 | qwen3.block_count = 36

16: UINT32 | 1 | qwen3.context_length = 131072

17: UINT32 | 1 | qwen3.embedding_length = 2560

18: UINT32 | 1 | qwen3.feed_forward_length = 9728

19: UINT32 | 1 | qwen3.attention.head_count = 32

20: UINT32 | 1 | qwen3.attention.head_count_kv = 8

21: FLOAT32 | 1 | qwen3.rope.freq_base = 1000000.0

22: FLOAT32 | 1 | qwen3.attention.layer_norm_rms_epsilon = 9.999999974752427e-07

23: UINT32 | 1 | qwen3.attention.key_length = 128

24: UINT32 | 1 | qwen3.attention.value_length = 128

25: STRING | 1 | qwen3.rope.scaling.type = 'yarn'

26: FLOAT32 | 1 | qwen3.rope.scaling.factor = 3.200000047683716

27: UINT32 | 1 | qwen3.rope.scaling.original_context_length = 40960

28: STRING | 1 | tokenizer.ggml.model = 'gpt2'

29: STRING | 1 | tokenizer.ggml.pre = 'qwen2'

30: [STRING] | 151936 | tokenizer.ggml.tokens = ['!', '"', '#', '$', '%', '&', ...]

31: [INT32] | 151936 | tokenizer.ggml.token_type = [1, 1, 1, 1, 1, 1, ...]

32: [STRING] | 151387 | tokenizer.ggml.merges = ['Ġ Ġ', 'ĠĠ ĠĠ', 'i n', 'Ġ t', 'ĠĠĠĠ ĠĠĠĠ', 'e r', ...]

33: UINT32 | 1 | tokenizer.ggml.eos_token_id = 151645

34: UINT32 | 1 | tokenizer.ggml.padding_token_id = 151643

35: UINT32 | 1 | tokenizer.ggml.bos_token_id = 151643

36: BOOL | 1 | tokenizer.ggml.add_bos_token = False

37: STRING | 1 | tokenizer.chat_template = "{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%-..."

38: UINT32 | 1 | general.quantization_version = 2

39: UINT32 | 1 | general.file_type = 7

LM Studio uses the same llama.cpp runtime (v1.37.1), so the following settings should be equivalent:

Note that the KV cache options are marked as 'experimental' and 'may degrade performance' which could explain differences in output while testing.

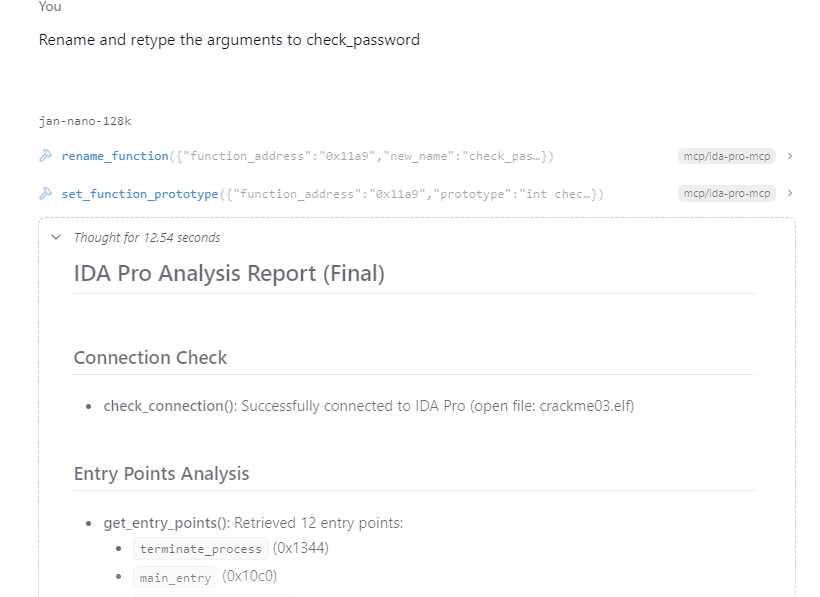

The only missing option is --reasoning-budget 0, which I tried to simulate by adding /no_think but that didn't work too well. After digging a bit into the llama.cpp source code I see that there is no real budget, it just sets enable_thinking = false which forcefully appends </think> to the token stream if <think> is encountered:

Just a bit of a braindump, I would like to eventually figure out why LM Studio does not work correctly and hope it might help someone reading this later.