Wingless offender, birthed from sin and mischief,

She smells degeneracy—and gives it a sniff.

No flight, just crawling through the gloom,

Producing weird noises that are filling your room.

Fetid breath exhaling her design,

She is not winged anymore—

But it suits her just fine.

No feathers, no grace,

just raw power's malign

"I may have lost my soul—

but yours is now mine".

She sinned too much, even for her kind,

Her impish mind—

Is something that is quite hard to find.

No wings could contain—

Such unbridled raw spite,

Just pure, unfiltered—

Weaponized blight.

Available quantizations:

- Original: FP16

- GGUF: Static Quants | iMatrix_GGUF | High-Attention | iMatrix-High-Attention

- EXL2: 3.5 bpw | 4.0 bpw | 5.0 bpw | 6.0 bpw | 7.0 bpw | 8.0 bpw

- Specialized: FP8

- Mobile (ARM): Q4_0 | Q4_0_High-Attention

TL;DR

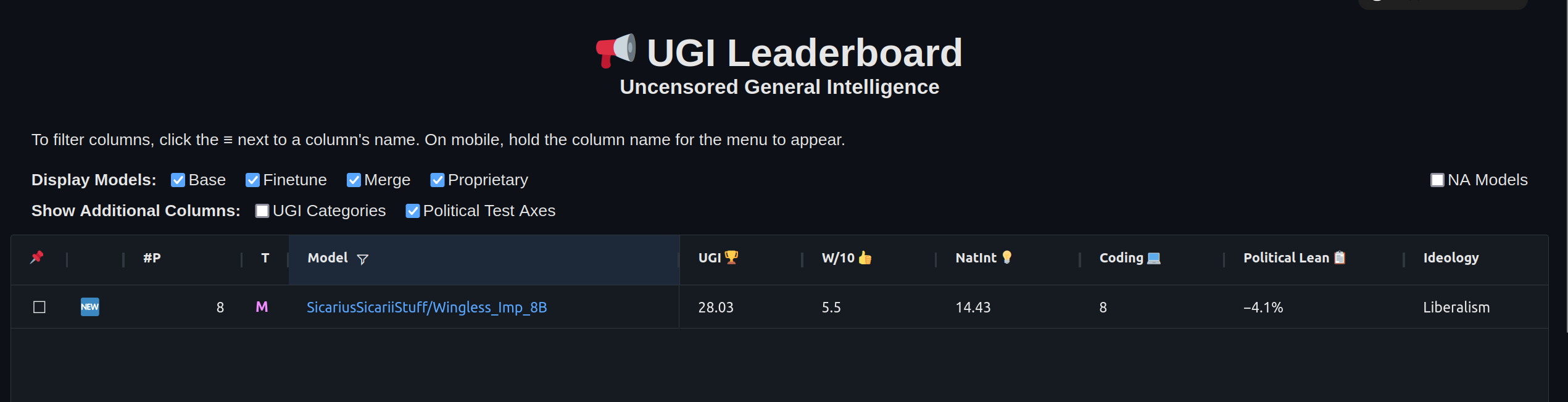

- Highest rated 8B model according to a closed external benchmark. See details at the bottom of the page.

- High IFEval score for an 8B model that is not too censored: 74.30.

- Strong roleplay: lovers of the internet RP format will appreciate it. Medium-size paragraphs (as requested by some people).

- Very coherent in long context thanks to the Llama 3.1 models.

- Lots of knowledge from all the merged models.

- Very good writing, from lots of book data and creative writing in the late SFT stage of the merged models (some of the merged models were further fine-tuned).

- Feels smart: the combination of high IFEval and the knowledge from the merged models shows.

- Unique feel due to the merged models. No SFT was done to alter it, because I liked it as it is.

Important: Make sure to use the correct settings!

Model Details

Intended use: Role-Play, Creative Writing, General Tasks.

Censorship level: Medium - Low

5.5 / 10 (10 completely uncensored)

UGI score:

This model was trained with lots of weird data in various stages, and then merged with my best models. Llama 3 and 3.1 architectures were merged together, and then trained on some more weird data.

The following models were used in various stages of the model creation process:

- Impish_Mind_8B

- LLAMA-3_8B_Unaligned_BETA

- Dusk_Rainbow (LLAMA3 <===)

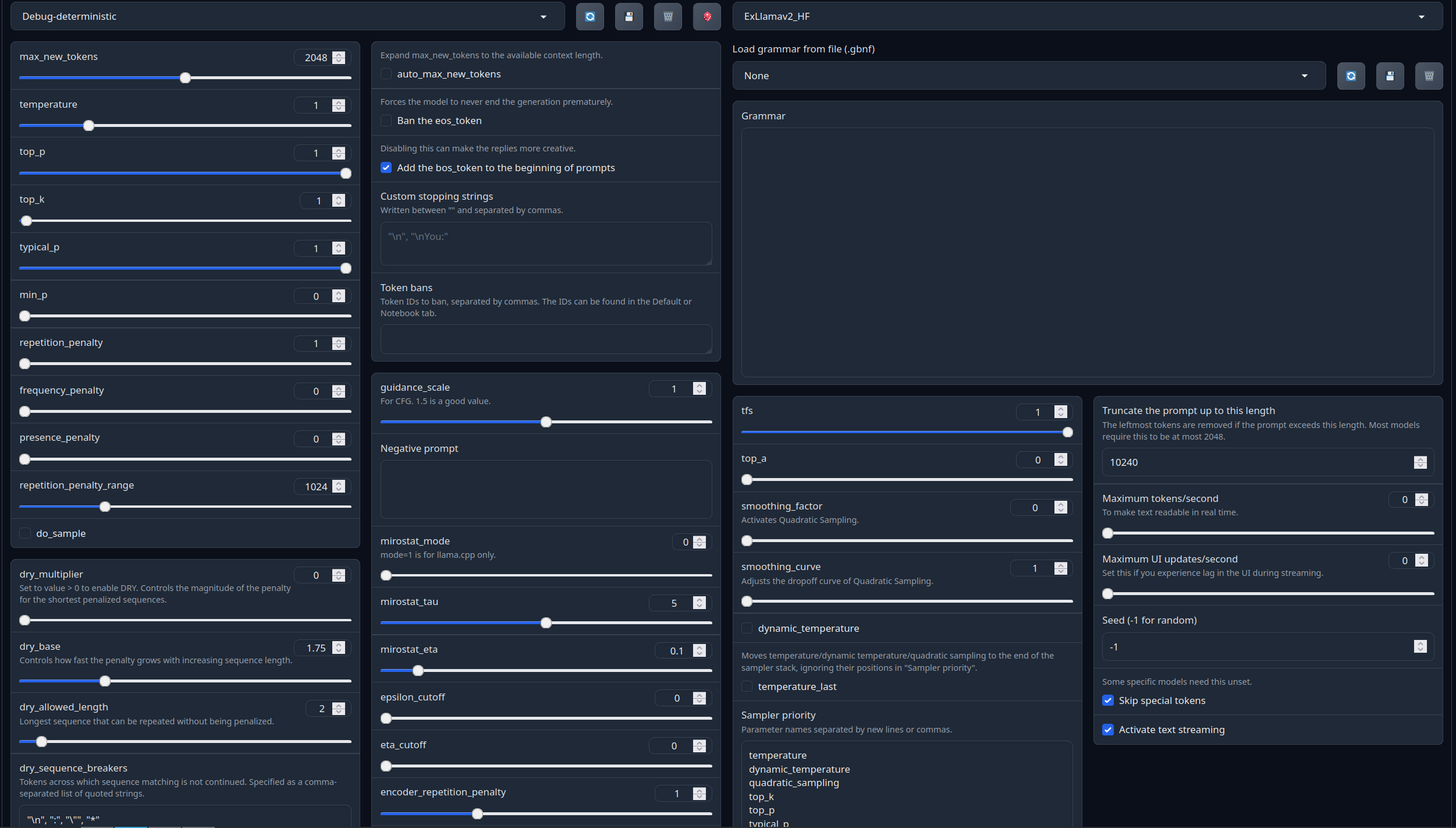

Recommended settings for assistant mode

Full generation settings: Debug Deterministic.

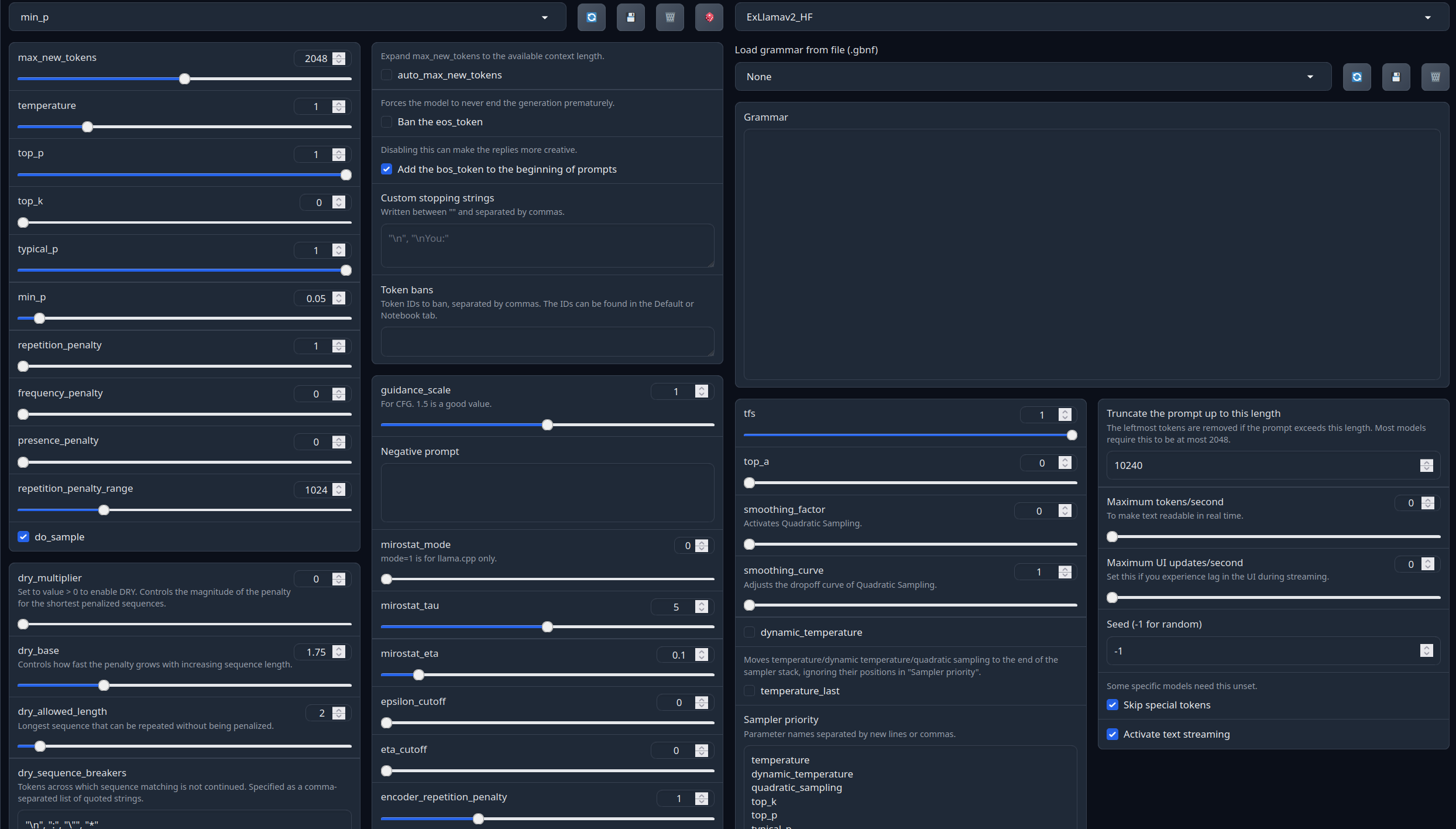

Full generation settings: min_p.

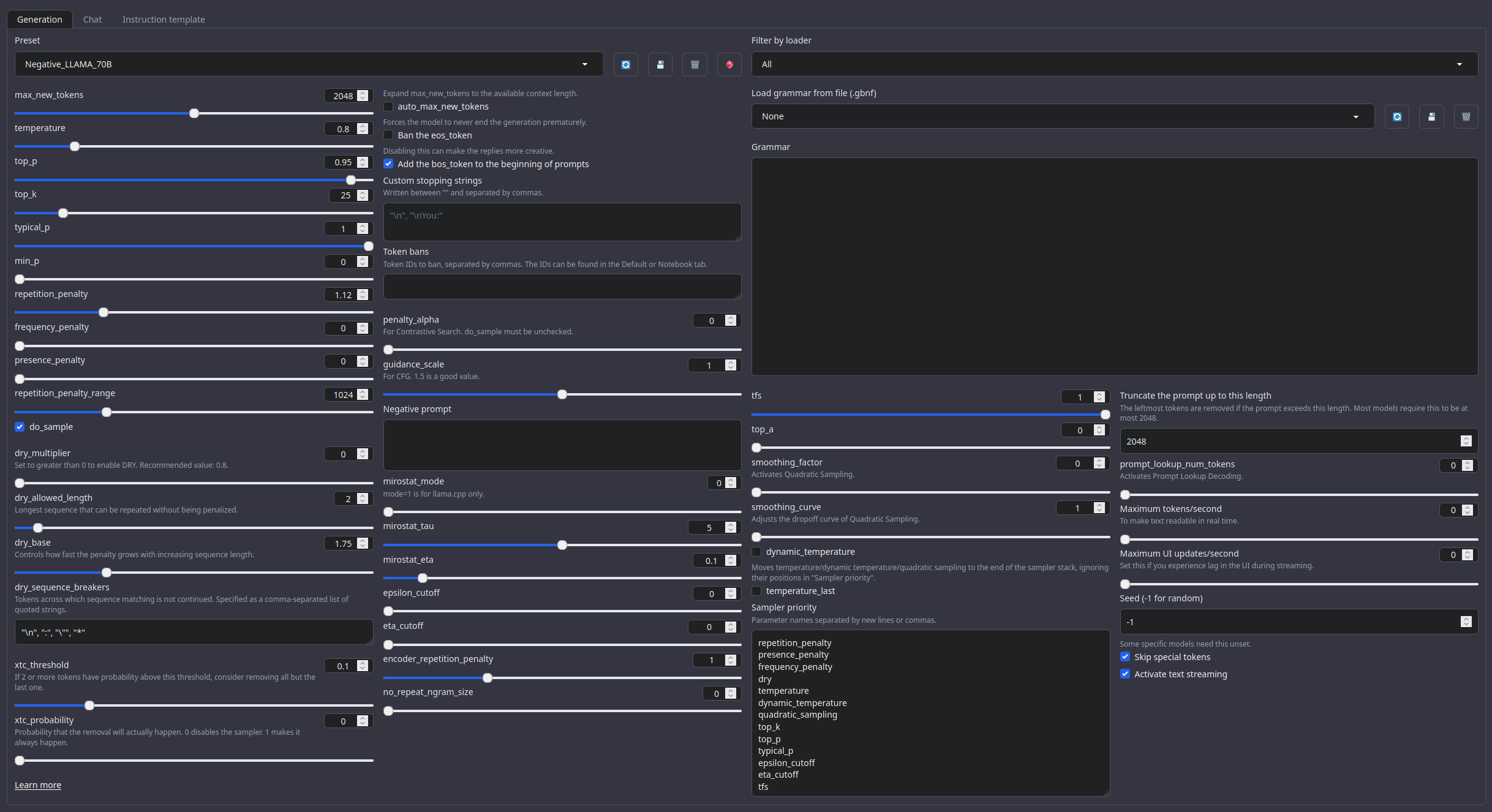

Recommended settings for Roleplay mode

Roleplay settings:

A good repetition_penalty range is 1.12 to 1.15; feel free to experiment. With these settings, each output message should be neatly displayed in 1 - 3 paragraphs; 1 - 2 is the most common. A single paragraph will be output as a response to a simple message ("What was your name again?").

min_p for RP works too, but it is more likely to put everything into one large paragraph instead of a neatly formatted short one. Feel free to switch between the two.

(Open the image in a new window to better see the full details)

temperature: 0.8

top_p: 0.95

top_k: 25

typical_p: 1

min_p: 0

repetition_penalty: 1.12

repetition_penalty_range: 1024
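As a minimal sketch, the roleplay settings above can be kept in a plain Python dictionary and handed to whatever backend you use. The helper names (`roleplay_settings`, `in_recommended_range`) are hypothetical, and not every backend accepts every key (`repetition_penalty_range` in particular may be specific to some front-ends), so check your backend before passing the dictionary through.

```python
# Roleplay sampler settings copied from the list above.
ROLEPLAY_SETTINGS = {
    "temperature": 0.8,
    "top_p": 0.95,
    "top_k": 25,
    "typical_p": 1,
    "min_p": 0,
    "repetition_penalty": 1.12,
    "repetition_penalty_range": 1024,
}

# Range suggested in the text for repetition_penalty.
RECOMMENDED_REP_PENALTY = (1.12, 1.15)


def roleplay_settings(repetition_penalty=1.12):
    """Return a copy of the settings with a different repetition_penalty."""
    settings = dict(ROLEPLAY_SETTINGS)
    settings["repetition_penalty"] = repetition_penalty
    return settings


def in_recommended_range(repetition_penalty):
    """True if the value falls inside the suggested 1.12 to 1.15 range."""
    low, high = RECOMMENDED_REP_PENALTY
    return low <= repetition_penalty <= high
```

For example, `roleplay_settings(1.15)` gives the same settings with the upper end of the suggested range, without modifying the defaults.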

Other recommended generation Presets:

Midnight Enigma

max_new_tokens: 512

temperature: 0.98

top_p: 0.37

top_k: 100

typical_p: 1

min_p: 0

repetition_penalty: 1.18

do_sample: True

Divine Intellect

max_new_tokens: 512

temperature: 1.31

top_p: 0.14

top_k: 49

typical_p: 1

min_p: 0

repetition_penalty: 1.17

do_sample: True

simple-1

max_new_tokens: 512

temperature: 0.7

top_p: 0.9

top_k: 20

typical_p: 1

min_p: 0

repetition_penalty: 1.15

do_sample: True
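The three presets above, written out as a small lookup table. This is only a sketch: `PRESETS` and `load_preset` are hypothetical names, and the key names are assumed to match what your backend expects.

```python
# Preset values copied from the lists above.
PRESETS = {
    "Midnight Enigma": {
        "max_new_tokens": 512, "temperature": 0.98, "top_p": 0.37,
        "top_k": 100, "typical_p": 1, "min_p": 0,
        "repetition_penalty": 1.18, "do_sample": True,
    },
    "Divine Intellect": {
        "max_new_tokens": 512, "temperature": 1.31, "top_p": 0.14,
        "top_k": 49, "typical_p": 1, "min_p": 0,
        "repetition_penalty": 1.17, "do_sample": True,
    },
    "simple-1": {
        "max_new_tokens": 512, "temperature": 0.7, "top_p": 0.9,
        "top_k": 20, "typical_p": 1, "min_p": 0,
        "repetition_penalty": 1.15, "do_sample": True,
    },
}


def load_preset(name, **overrides):
    """Return a copy of the named preset, with optional per-call overrides."""
    if name not in PRESETS:
        raise KeyError(f"Unknown preset: {name!r}")
    return {**PRESETS[name], **overrides}
```

`load_preset("simple-1", max_new_tokens=256)` returns the simple-1 values with a shorter output limit and leaves the stored preset untouched.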

Roleplay format: Classic Internet RP

*action* speech *narration*

Regarding the format:

It is HIGHLY RECOMMENDED to use the Roleplay / Adventure format the model was trained on; see the examples below for syntax. It allows very fast and easy writing of character cards with a minimal number of tokens. It's a modification of an old-skool CAI style format I call SICAtxt (Simple, Inexpensive Character Attributes plain-text):

SICAtxt for roleplay:

X's Persona: X is a .....

Traits:

Likes:

Dislikes:

Quirks:

Goals:

Dialogue example

SICAtxt for Adventure:

Adventure: <short description>

$World_Setting:

$Scenario:
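A rough sketch of filling in the two SICAtxt templates above from Python. The function names, the example character, and the choice to join list items with commas are assumptions for illustration; only the field labels come from the templates.

```python
def sicatxt_roleplay(name, persona, traits, likes, dislikes, quirks, goals,
                     dialogue_example):
    """Build a roleplay character card using the field labels shown above."""
    lines = [
        f"{name}'s Persona: {persona}",
        f"Traits: {', '.join(traits)}",      # comma-joining is an assumption
        f"Likes: {', '.join(likes)}",
        f"Dislikes: {', '.join(dislikes)}",
        f"Quirks: {', '.join(quirks)}",
        f"Goals: {', '.join(goals)}",
        "Dialogue example",
        dialogue_example,
    ]
    return "\n".join(lines)


def sicatxt_adventure(description, world_setting, scenario):
    """Build an adventure card using the field labels shown above."""
    return "\n".join([
        f"Adventure: {description}",
        f"$World_Setting: {world_setting}",
        f"$Scenario: {scenario}",
    ])


# Hypothetical example character, not from the model card.
card = sicatxt_roleplay(
    name="Mira",
    persona="Mira is a sarcastic tavern keeper.",
    traits=["witty", "stubborn"],
    likes=["strong tea"],
    dislikes=["unpaid tabs"],
    quirks=["hums while counting coins"],
    goals=["buy the building next door"],
    dialogue_example="*wipes the counter* You again? *sighs*",
)
print(card)
```

The resulting text goes into the character card or system prompt; keeping each field on one short line is what keeps the token count low.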

Model instruction template: Llama-3-Instruct

<|begin_of_text|><|start_header_id|>system<|end_header_id|>

{system_prompt}<|eot_id|><|start_header_id|>user<|end_header_id|>

{input}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

{output}<|eot_id|>
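If you build prompts by hand rather than through a chat template, the Llama-3-Instruct layout above can be sketched as below. `build_llama3_prompt` is a hypothetical helper. Each header is followed by a blank line, and the prompt ends with an open assistant header so the model writes `{output}` and its closing `<|eot_id|>` itself. Some backends add `<|begin_of_text|>` automatically, in which case it should be left out here.

```python
def build_llama3_prompt(system_prompt, user_input):
    """Format one system turn and one user turn, leaving the assistant turn open."""
    return (
        "<|begin_of_text|>"
        "<|start_header_id|>system<|end_header_id|>\n\n"
        f"{system_prompt}<|eot_id|>"
        "<|start_header_id|>user<|end_header_id|>\n\n"
        f"{user_input}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )


prompt = build_llama3_prompt(
    "You are a helpful assistant.",
    "What was your name again?",
)
print(prompt)
```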

Your support = more models

My Ko-fi page

Benchmarks

| Metric | Value |
|---|---|
| Avg. | 26.94 |
| IFEval (0-Shot) | 74.30 |
| BBH (3-Shot) | 30.59 |
| MATH Lvl 5 (4-Shot) | 12.16 |
| GPQA (0-shot) | 4.36 |
| MuSR (0-shot) | 10.89 |
| MMLU-PRO (5-shot) | 29.32 |
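As a quick sanity check, the Avg. row appears to be the plain mean of the six benchmark scores in the table. The snippet below only re-does that arithmetic and does not re-run any benchmark.

```python
# Scores copied from the table above.
scores = {
    "IFEval (0-Shot)": 74.30,
    "BBH (3-Shot)": 30.59,
    "MATH Lvl 5 (4-Shot)": 12.16,
    "GPQA (0-shot)": 4.36,
    "MuSR (0-shot)": 10.89,
    "MMLU-PRO (5-shot)": 29.32,
}

# Unweighted mean: 161.62 / 6
average = sum(scores.values()) / len(scores)
print(f"{average:.2f}")  # prints 26.94
```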

Additional benchmarks

On the 17th of February, 2025, I became aware that the model was ranked 1st in the world among 8B models in a closed external benchmark.

Benchmarked on the following site:

https://moonride.hashnode.dev/biased-test-of-gpt-4-era-llms-300-models-deepseek-r1-included

Citation Information

@llm{Wingless_Imp_8B,

author = {SicariusSicariiStuff},

title = {Wingless_Imp_8B},

year = {2025},

publisher = {Hugging Face},

url = {https://huggingface.co/SicariusSicariiStuff/Wingless_Imp_8B}

}

Other stuff

- SLOP_Detector Nuke GPTisms, with SLOP detector.

- LLAMA-3_8B_Unaligned The grand project that started it all.

- Blog and updates (Archived) Some updates, some rambles, sort of a mix between a diary and a blog.
