---
license: other
language:
- zh
- en
base_model:
- THUDM/glm-4v-9b
pipeline_tag: image-text-to-text
library_name: transformers
---

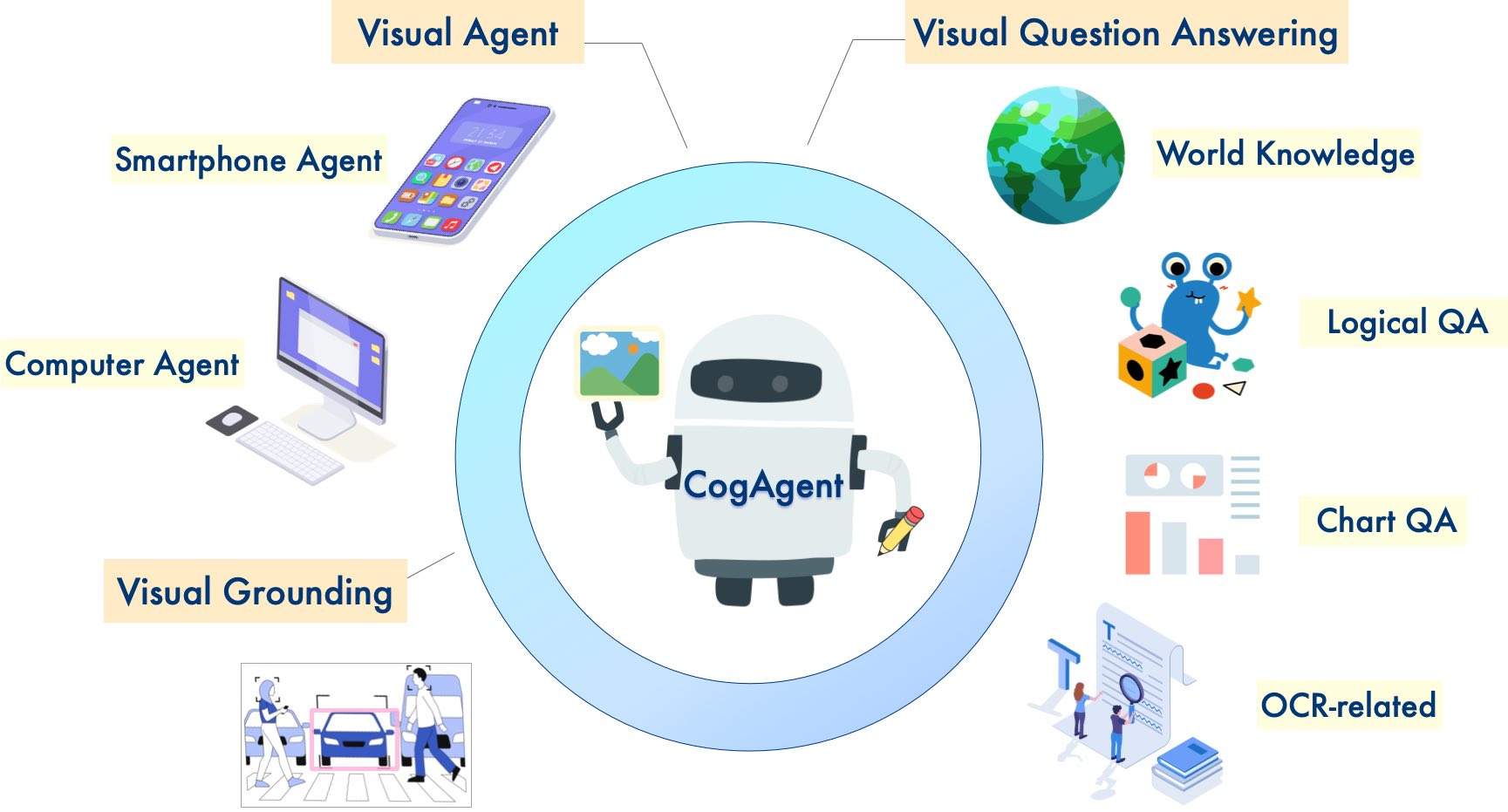

CogAgent

About the Model

The CogAgent-9B-20241220 model is based on GLM-4V-9B, a bilingual open-source VLM base model. Through data collection and optimization, multi-stage training, and strategy improvements, CogAgent-9B-20241220 achieves significant advancements in GUI perception, inference prediction accuracy, action space completeness, and task generalizability. The model supports bilingual (Chinese and English) interaction with both screenshots and language input.

This version of the CogAgent model is already used in ZhipuAI's GLM-PC product. We hope this release will help researchers and developers advance the research and application of GUI agents based on vision-language models.

Running the Model

Please refer to our GitHub for specific examples of running the model.

Input and Output

CogAgent-9B-20241220 is an agent execution model rather than a conversational model. It does not support multi-turn conversation, but it does accept a continuous history of executed steps. Below are guidelines on how to format the input for the model and how to interpret its formatted output.

User Input

- `task` field: A task description provided by the user, similar to a textual prompt. The input should be concise and clear to guide the `CogAgent-9B-20241220` model to complete the task.

- `platform` field: `CogAgent-9B-20241220` supports operation on several platforms with GUI interfaces:
    - Windows: Use the `WIN` field for Windows 10 or 11.
    - Mac: Use the `MAC` field for macOS 14 or 15.
    - Mobile: Use the `Mobile` field for Android 13, 14, 15, or similar Android-based UI versions.

  On other systems, results may vary. Use the closest match: `Mobile` for mobile devices, `WIN` for Windows, and `MAC` for Mac.

- `format` field: Specifies the desired format of the returned data. Options include:
    - `Answer in Action-Operation-Sensitive format.`: The default format in our demo, returning actions, corresponding operations, and sensitivity levels.
    - `Answer in Status-Plan-Action-Operation format.`: Returns status, plans, actions, and corresponding operations.
    - `Answer in Status-Action-Operation-Sensitive format.`: Returns status, actions, corresponding operations, and sensitivity levels.
    - `Answer in Status-Action-Operation format.`: Returns status, actions, and corresponding operations.
    - `Answer in Action-Operation format.`: Returns actions and corresponding operations.

- `history` field: The execution history, concatenated with the other fields in the following order: `query = f'{task}{history}{platform}{format}'`
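The concatenation order above can be sketched in Python as follows. This is a minimal illustration, not the official client code: the helper name `build_query` is hypothetical, the `Task:` prefix, parentheses, and line breaks are assumptions copied from the Mac example in this card, and `history` is passed through as an already formatted string because its exact layout is not specified here.

```python
# The five format strings listed in this model card.
FORMATS = (
    "Answer in Action-Operation-Sensitive format.",
    "Answer in Status-Plan-Action-Operation format.",
    "Answer in Status-Action-Operation-Sensitive format.",
    "Answer in Status-Action-Operation format.",
    "Answer in Action-Operation format.",
)


def build_query(task: str, platform: str,
                answer_format: str = FORMATS[0],
                history: str = "") -> str:
    """Concatenate the fields in the order task, history, platform, format.

    Hypothetical helper: the separators are assumed from the example prompt
    in this card, and `history` must already be formatted by the caller.
    """
    if answer_format not in FORMATS:
        raise ValueError(f"unknown format string: {answer_format!r}")
    task_part = f"Task: {task}\n"
    history_part = f"{history}\n" if history else ""
    platform_part = f"(Platform: {platform})\n"
    format_part = f"({answer_format})"
    return f"{task_part}{history_part}{platform_part}{format_part}"


if __name__ == "__main__":
    print(build_query("Mark all emails as read", "Mac"))
```

Rejecting unknown format strings early is a design choice of this sketch; the model card itself does not say how the model reacts to other strings.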

Model Output

- Sensitive Operations: Includes types like `<<Sensitive Operation>>` or `<<General Operation>>`, returned only when `Sensitive` is requested.

- `Plan`, `Agent`, `Status`, `Action` fields: Describe the model's behavior and operations, returned based on the requested format.

- General Responses: Summarizes the output before formatting.

- `Grounded Operation` field: Describes the model's specific actions, such as coordinates, element types, and descriptions. Actions include:
    - `CLICK`: Simulates mouse clicks or touch gestures.
    - `LONGPRESS`: Simulates long presses (supported only in `Mobile` mode).
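Because the output is plain text rather than JSON, a caller typically has to split it into fields itself. The sketch below shows one possible way to do that. The function name `parse_response` is hypothetical, and it assumes each field occupies a single line that starts with its label, as in the sample responses in this card; real output may not always follow that layout.

```python
import re

# Field labels named in this model card, each assumed to start its own line.
FIELD_RE = re.compile(r"^(Status|Plan|Agent|Action|Grounded Operation):\s*(.*)$")
# Sensitivity marker such as <<General Operation>> on a line of its own.
SENSITIVITY_RE = re.compile(r"^<<(.+?)>>$")


def parse_response(text: str) -> dict:
    """Split a formatted model response into a dict of field name -> value.

    Hypothetical helper; assumes single-line fields and skips blank or
    unrecognized lines.
    """
    fields = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        field_match = FIELD_RE.match(line)
        if field_match:
            fields[field_match.group(1)] = field_match.group(2)
            continue
        marker_match = SENSITIVITY_RE.match(line)
        if marker_match:
            fields["Sensitivity"] = marker_match.group(1)
    return fields
```

Fields that were not requested in the format string are simply absent from the returned dict, so callers should use `dict.get` rather than indexing when the format may vary.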

Example

If the user wants to mark all emails as read on a Mac system and requests an Action-Operation-Sensitive format, the prompt should be:

Task: Mark all emails as read

(Platform: Mac)

(Answer in Action-Operation-Sensitive format.)

Below are examples of model responses based on different requested formats:

Answer in Action-Operation-Sensitive format

Action: Click the "Mark All as Read" button at the top toolbar to mark all emails as read.

Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable Text', element_info='Mark All as Read')

<<General Operation>>

Answer in Status-Plan-Action-Operation format

Status: None

Plan: None

Action: Click the "Mark All as Read" button at the top center of the inbox page to mark all emails as read.

Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable Text', element_info='Mark All as Read')

Answer in Status-Action-Operation-Sensitive format

Status: Currently on the email interface [[0, 2, 998, 905]], with email categories on the left [[1, 216, 144, 570]] and the inbox in the center [[144, 216, 998, 903]]. The "Mark All as Read" button [[223, 178, 311, 210]] has been clicked.

Action: Click the "Mark All as Read" button at the top toolbar to mark all emails as read.

Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable Text', element_info='Mark All as Read')

<<General Operation>>

Answer in Status-Action-Operation format

Status: None

Action: On the inbox page, click the "Mark All as Read" button to mark all emails as read.

Grounded Operation: CLICK(box=[[219,186,311,207]], element_type='Clickable Text', element_info='Mark All as Read')

Answer in Action-Operation format

Action: Right-click on the first email in the left-side list to open the action menu.

Grounded Operation: RIGHT_CLICK(box=[[154,275,343,341]], element_info='[AXCell]')
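The `Grounded Operation` strings in these examples happen to be valid Python call syntax with literal keyword arguments, so one way to extract the action name and its arguments is Python's `ast` module. This is only a sketch under that assumption: the helper names are hypothetical, the model is not guaranteed to always emit parseable text (for instance, an unescaped quote inside `element_info` would break it), and treating `box` as `[x1, y1, x2, y2]` is an assumption that this card does not state explicitly.

```python
import ast


def parse_grounded_operation(op: str) -> dict:
    """Parse e.g. "CLICK(box=[[1,2,3,4]], element_info='x')" into name and args.

    Hypothetical helper; assumes the string is a single call with
    literal keyword arguments, as in the examples above.
    """
    node = ast.parse(op.strip(), mode="eval").body
    if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name):
        raise ValueError(f"not a recognizable operation: {op!r}")
    # literal_eval only accepts literals, so no code from the string is executed.
    args = {kw.arg: ast.literal_eval(kw.value) for kw in node.keywords}
    return {"name": node.func.id, "args": args}


def box_center(box: list) -> tuple:
    """Center of the first box, ASSUMING the layout [x1, y1, x2, y2]."""
    x1, y1, x2, y2 = box[0]
    return ((x1 + x2) / 2, (y1 + y2) / 2)


if __name__ == "__main__":
    op = parse_grounded_operation(
        "RIGHT_CLICK(box=[[154,275,343,341]], element_info='[AXCell]')"
    )
    print(op["name"], op["args"]["box"], box_center(op["args"]["box"]))
```

The returned coordinates are in whatever units the model emits; mapping them to screen pixels depends on the coordinate convention documented in the GitHub repository.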

Notes

- This model is not a conversational model and does not support continuous dialogue. Please send specific instructions and use the suggested concatenation method.

- Images must be provided as input; textual prompts alone cannot execute GUI agent tasks.

- The model outputs strictly formatted string (STR) data and does not support JSON output.

Previous Work

In November 2023, we released the first generation of CogAgent. You can find related code and weights in the CogVLM & CogAgent Official Repository.

CogVLM

📖 Paper: CogVLM: Visual Expert for Pretrained Language Models

CogVLM is a powerful open-source vision-language model (VLM). CogVLM-17B has 10 billion vision parameters and 7 billion language parameters, supporting 490x490 resolution image understanding and multi-turn conversations. CogVLM-17B achieved state-of-the-art performance on 10 classic cross-modal benchmarks: NoCaps, Flickr30k captioning, RefCOCO, RefCOCO+, RefCOCOg, Visual7W, GQA, ScienceQA, VizWiz VQA, and TDIUC.

CogAgent

📖 Paper: CogAgent: A Visual Language Model for GUI Agents

CogAgent is an improved open-source vision-language model based on CogVLM. CogAgent-18B has 11 billion vision parameters and 7 billion language parameters, supporting image understanding at 1120x1120 resolution. Beyond CogVLM's capabilities, it also incorporates GUI agent capabilities. CogAgent-18B achieved state-of-the-art performance on 9 classic cross-modal benchmarks: VQAv2, OK-VQA, TextVQA, ST-VQA, ChartQA, InfoVQA, DocVQA, MM-Vet, and POPE. It significantly outperformed existing models on GUI operation datasets such as AITW and Mind2Web.

License

Use of the model weights is subject to the Model License.