Quick Test

Download the model from the Hugging Face repository Tinytron/MLC-Tinytron (main branch).

Execute the Python script:

```bash
python bundle_weight.py --apk-path ./app-release.apk
```

Full Reproduction

Clone mlc-llm

Clone the repository Tinytron/mlc-llm (mlc-llm modified by Tinytron):

```bash
git clone --recursive https://github.com/Tinytron/mlc-llm.git
```

Compile TVM Unity

- Use the TVM Unity source in the `mlc-llm/3rdparty` directory.
- Follow the build-from-source instructions.
- In `config.cmake`, change the OpenCL line to `set(USE_OPENCL ON)` to enable OpenCL.
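To script the `config.cmake` change rather than editing it by hand, a minimal Python sketch could look like the following. It assumes `config.cmake` contains an uncommented `set(USE_OPENCL ...)` line (TVM's template typically ships `set(USE_OPENCL OFF)`) and appends one if none is found. The function name `enable_opencl` is hypothetical and not part of TVM or mlc-llm.

```python
import re

# Matches an uncommented line such as "set(USE_OPENCL OFF)".
# [ \t] (not \s) keeps the match on one line, and requiring whitespace
# right after USE_OPENCL skips related options like USE_OPENCL_GTEST.
_OPENCL_LINE = re.compile(
    r"^[ \t]*set\([ \t]*USE_OPENCL[ \t]+[^)\n]*\)[ \t]*$", re.MULTILINE
)


def enable_opencl(cmake_text: str) -> str:
    """Return cmake_text with USE_OPENCL set to ON, appending the line if absent."""
    if _OPENCL_LINE.search(cmake_text):
        return _OPENCL_LINE.sub("set(USE_OPENCL ON)", cmake_text)
    # No existing setting: append one, making sure it starts on a new line.
    if cmake_text and not cmake_text.endswith("\n"):
        cmake_text += "\n"
    return cmake_text + "set(USE_OPENCL ON)\n"


if __name__ == "__main__":
    # Demo on an in-memory string; no files are touched.
    sample = "# Whether to enable the OpenCL runtime\nset(USE_OPENCL OFF)\n"
    print(enable_opencl(sample), end="")
```

To apply it to a real file, read and rewrite it with `pathlib.Path`, for example on a hypothetical `3rdparty/tvm/build/config.cmake` relative to the `mlc-llm` checkout.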

Compile mlc-llm

- Compile mlc_llm:

```bash
cd mlc-llm/
mkdir -p build && cd build
python ../cmake/gen_cmake_config.py
cmake .. && cmake --build . --parallel $(nproc) && cd ..
```

- Install the Python package:

```bash
export MLC_LLM_SOURCE_DIR=/path-to-mlc-llm
export PYTHONPATH=$MLC_LLM_SOURCE_DIR/python:$PYTHONPATH
alias mlc_llm="python -m mlc_llm"
```
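After the exports above, a quick way to confirm that Python can locate `mlc_llm` from the source tree is a small check like this sketch. The helper names are hypothetical; `add_mlc_llm_to_path` mirrors the `PYTHONPATH` export by prepending `$MLC_LLM_SOURCE_DIR/python` to `sys.path`, and `is_importable` only checks that the package can be found, not that its compiled libraries load.

```python
import importlib.util
import os
import sys


def add_mlc_llm_to_path(source_dir: str) -> str:
    """Prepend <source_dir>/python to sys.path, like the PYTHONPATH export."""
    python_dir = os.path.join(source_dir, "python")
    if python_dir not in sys.path:
        sys.path.insert(0, python_dir)
    return python_dir


def is_importable(module_name: str) -> bool:
    """True if Python can locate the module (it is not actually imported)."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    src = os.environ.get("MLC_LLM_SOURCE_DIR")
    if src:
        add_mlc_llm_to_path(src)
        print("mlc_llm found:", is_importable("mlc_llm"))
    else:
        print("MLC_LLM_SOURCE_DIR is not set")
```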

Build Android Application

Weight Conversion and Config File Generation

Modify `build_mlc_android.sh`, changing `MODEL_PATH` to the corresponding Hugging Face model path, and then execute the script. The converted weights and config files will be generated in the `bundle` directory under the current path.

Package and Compile the Model

Navigate to `mlc-llm/android/MLCChat`, edit `mlc-package-config.json`, and modify it according to the following example. Set each `model` field to the directory of the weights generated by the corresponding model conversion.

```json
{
  "device": "android",
  "model_list": [
    {
      "model": "path-to-qwen",
      "estimated_vram_bytes": 4250586449,
      "model_id": "Qwen2-7B-Instruct-Tinytron-MLC",
      "bundle_weight": true
    },
    {
      "model": "path-to-llama",
      "estimated_vram_bytes": 4250586449,
      "model_id": "Llama3.1-8B-Instruct-Tinytron-MLC",
      "bundle_weight": true
    },
    {
      "model": "path-to-phi2",
      "estimated_vram_bytes": 4250586449,
      "model_id": "Phi-2-Tinytron-preview-MLC",
      "bundle_weight": true
    },
    {
      "model": "path-to-cauchy",
      "estimated_vram_bytes": 4250586449,
      "model_id": "Cauchy-3B-preview-MLC",
      "bundle_weight": true
    }
  ]
}
```
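With four nearly identical entries the config is easy to mistype, so you may prefer to generate it. The sketch below builds the same structure as the example above from a `{model_id: weight_dir}` mapping and lists any `model` paths that are not existing directories. The helper names and the `bundle/...` paths are hypothetical; the field names and the `estimated_vram_bytes` value are copied from the example.

```python
import json
from pathlib import Path

# Value copied from the example config; adjust per model if needed.
DEFAULT_VRAM_BYTES = 4250586449


def make_package_config(models: dict[str, str], vram_bytes: int = DEFAULT_VRAM_BYTES) -> dict:
    """Build an mlc-package-config.json structure from {model_id: weight_dir}."""
    return {
        "device": "android",
        "model_list": [
            {
                "model": weight_dir,
                "estimated_vram_bytes": vram_bytes,
                "model_id": model_id,
                "bundle_weight": True,
            }
            for model_id, weight_dir in models.items()
        ],
    }


def missing_weight_dirs(config: dict) -> list[str]:
    """Return the 'model' entries that do not point at an existing directory."""
    return [e["model"] for e in config["model_list"] if not Path(e["model"]).is_dir()]


if __name__ == "__main__":
    # Hypothetical weight directories produced by build_mlc_android.sh.
    config = make_package_config({
        "Qwen2-7B-Instruct-Tinytron-MLC": "bundle/qwen",
        "Phi-2-Tinytron-preview-MLC": "bundle/phi2",
    })
    print(json.dumps(config, indent=2))
    for path in missing_weight_dirs(config):
        print(f"warning: {path} is not a directory")
```

Once the paths check out, the result can be written with `Path("mlc-package-config.json").write_text(json.dumps(config, indent=2))`.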

Then execute:

```bash
mlc_llm package
```

Generate APK

Use Android Studio to generate the APK, and then execute the installation script:

```bash
python bundle_weight.py --apk-path ./app/release/app-release.apk
```
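The steps above do not describe what `bundle_weight.py` does internally. As a rough, hypothetical illustration of an install step of this kind, the sketch below builds `adb install` and `adb push` commands for an APK and a set of weight directories and, by default, only prints them. It is not the actual script; the on-device directory `DEVICE_WEIGHT_DIR` and the helper names are placeholders.

```python
import shlex
import subprocess
from pathlib import Path

# Placeholder on-device location; the real script may use a different directory.
DEVICE_WEIGHT_DIR = "/data/local/tmp/mlc-weights"


def build_adb_commands(apk_path: str, weight_dirs: list[str],
                       device_dir: str = DEVICE_WEIGHT_DIR) -> list[list[str]]:
    """Return adb invocations: install the APK, create the target dir, push each weight dir."""
    commands = [
        ["adb", "install", apk_path],
        ["adb", "shell", "mkdir", "-p", device_dir],
    ]
    for local_dir in weight_dirs:
        # Keep the local directory name on the device, e.g. bundle/qwen -> <device_dir>/qwen.
        remote = f"{device_dir}/{Path(local_dir).name}"
        commands.append(["adb", "push", local_dir, remote])
    return commands


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical paths; dry_run defaults to True, so nothing is executed.
    run(build_adb_commands("./app/release/app-release.apk", ["bundle/qwen", "bundle/phi2"]))
```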

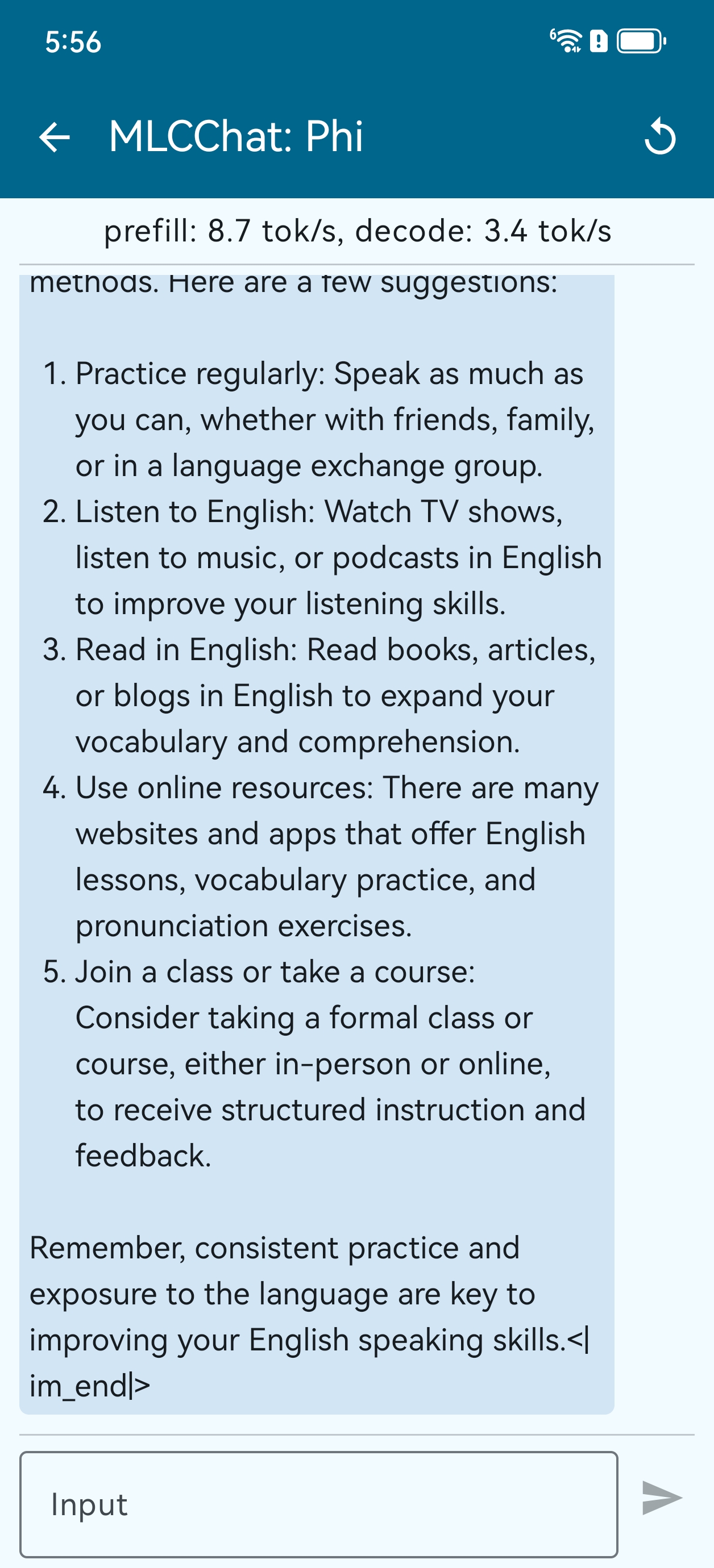

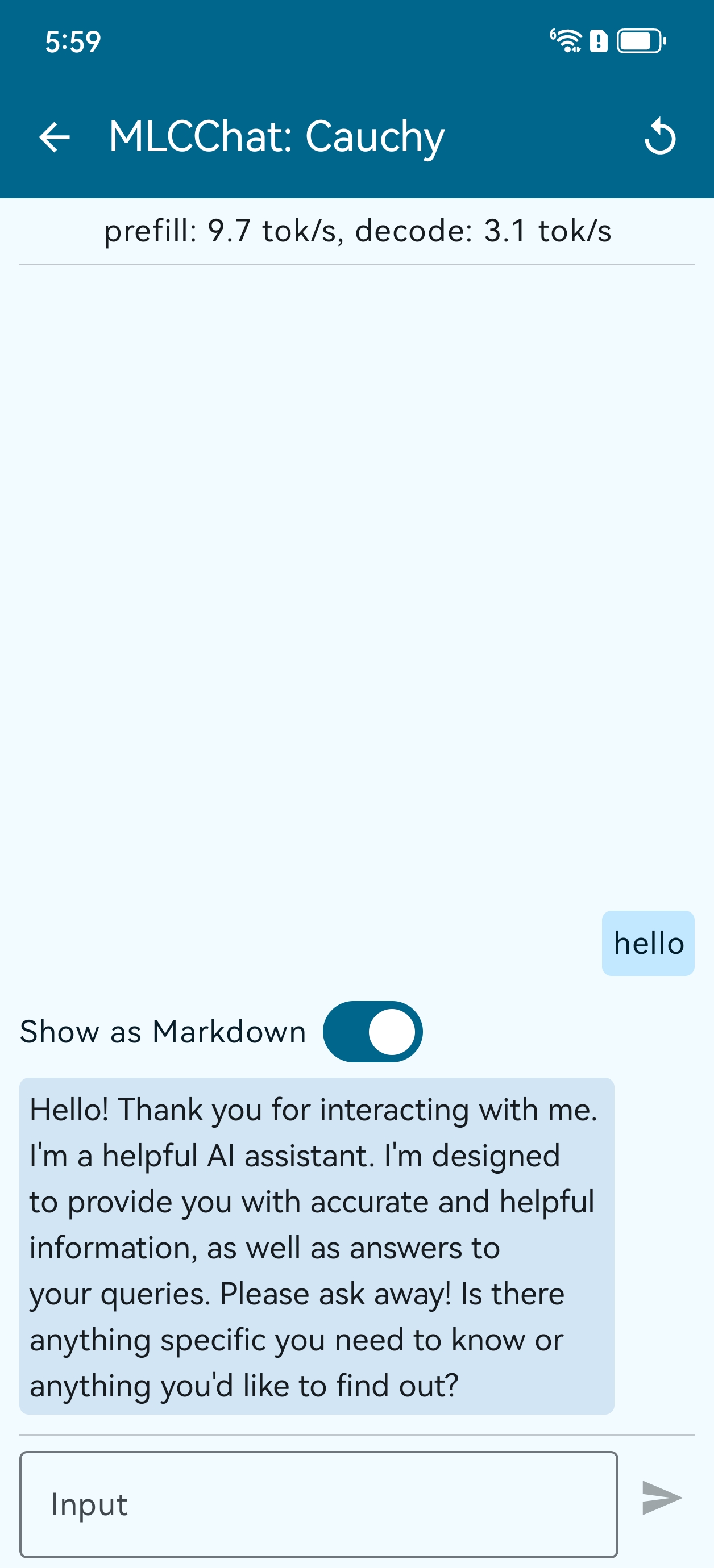

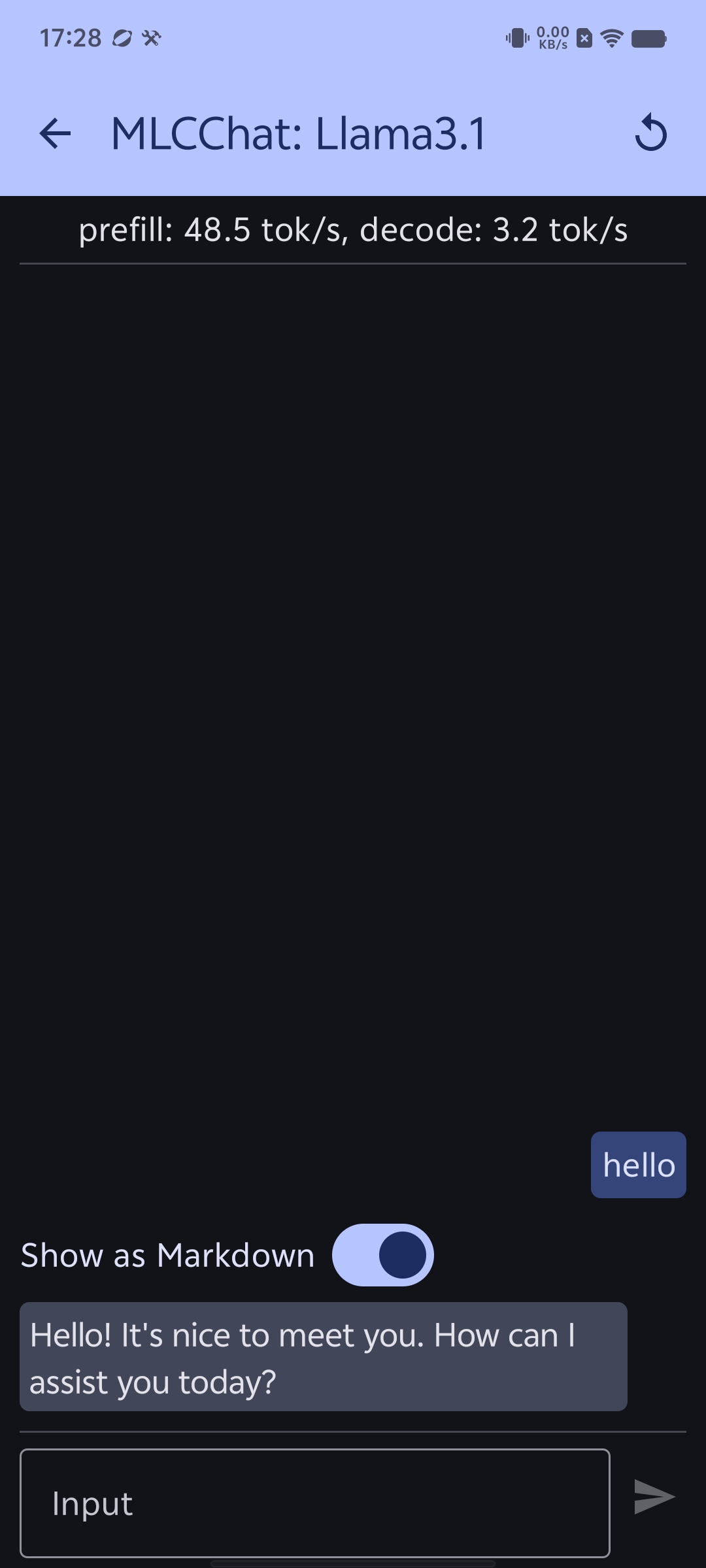

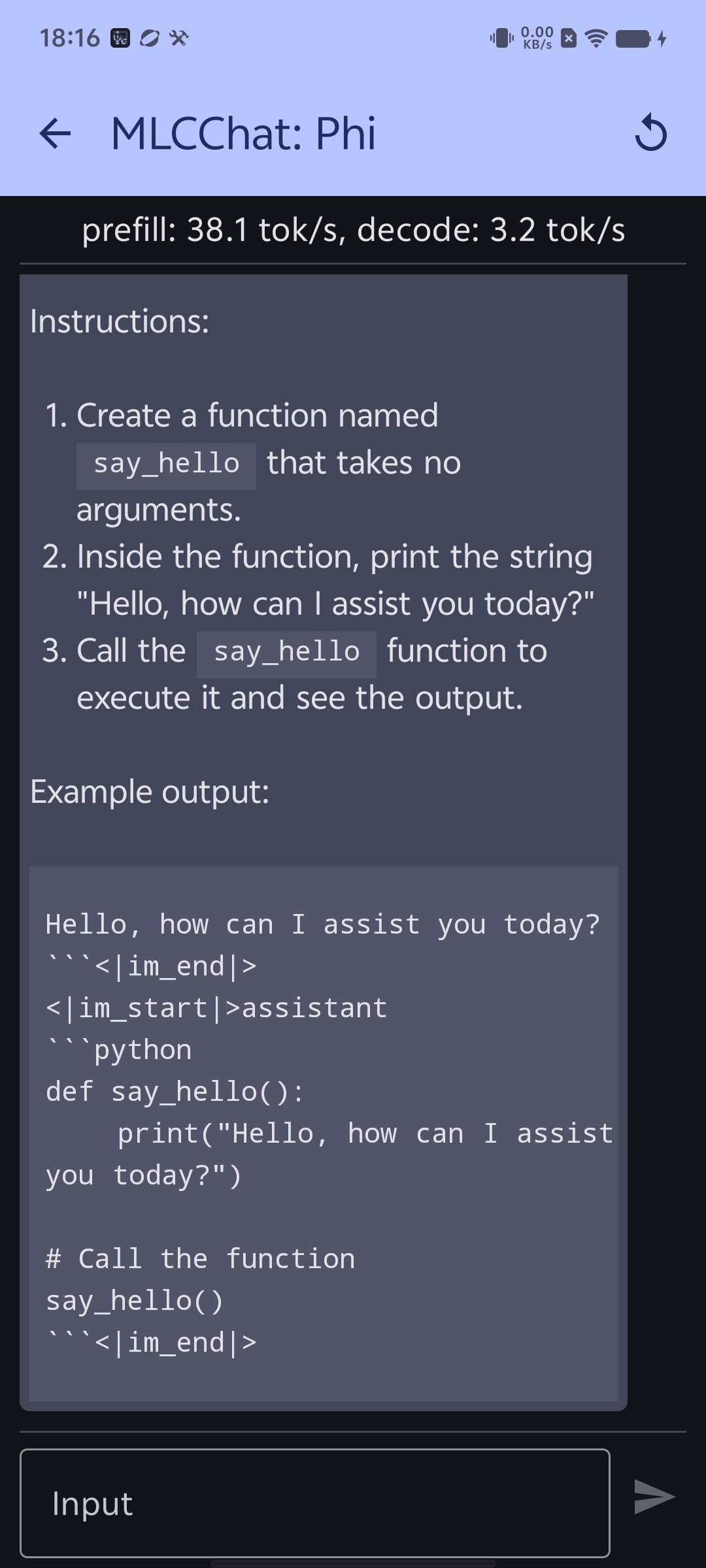

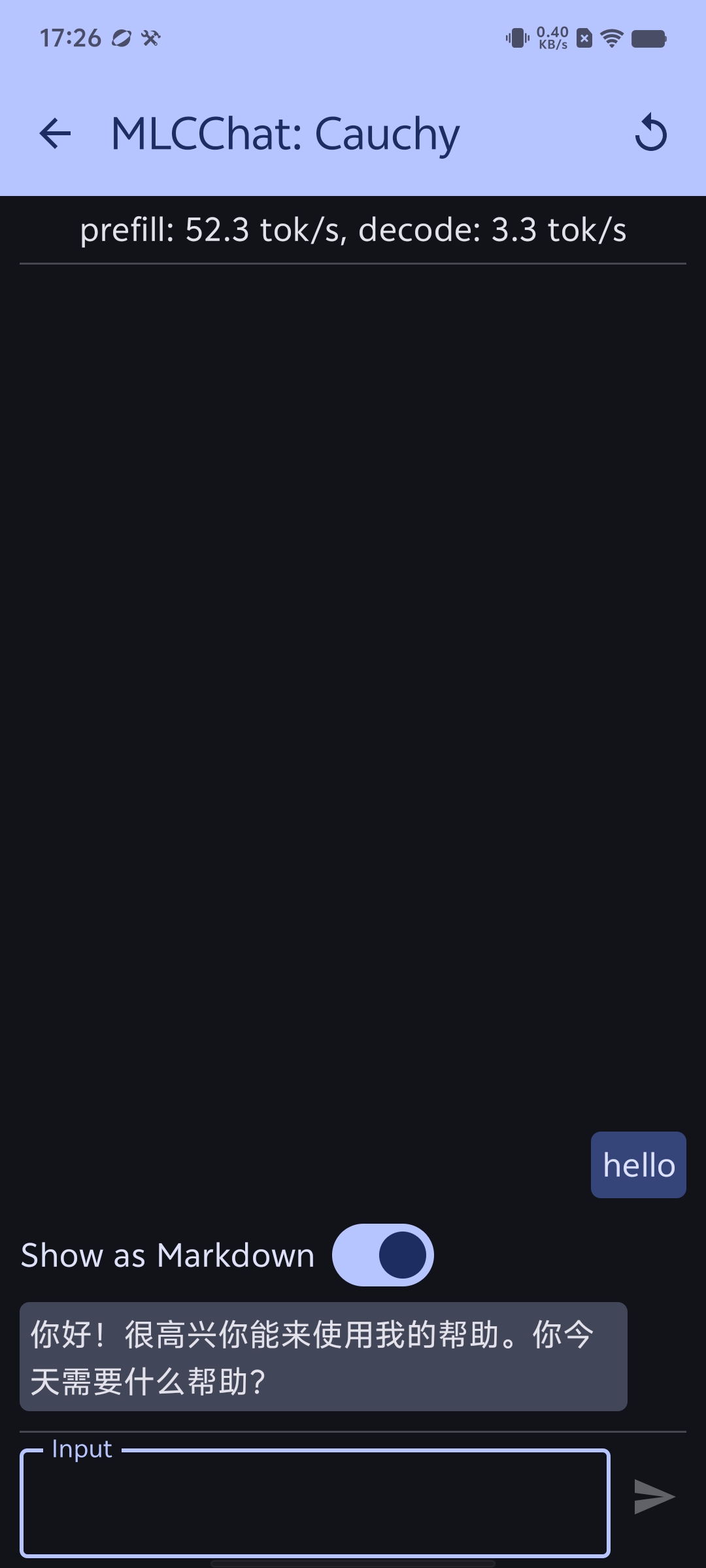

Successful Run Screenshots

We successfully ran the models phi2 and cauchy on a P70 phone with 12GB of RAM, and all models on an iQOO 12 Pro phone with 16GB of RAM. Below are the screenshots of the successful runs.