gguf quantized ace-step-v1-3.5b

- base model from ace-step

- the full gguf set (model + encoder + vae) works right away

setup (once)

- drag ace-step to > ./ComfyUI/models/diffusion_models

- drag umt5-base to > ./ComfyUI/models/text_encoders

- drag pig to > ./ComfyUI/models/vae
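if you prefer scripting the setup step over dragging files by hand, the copy can be sketched like this. it is a minimal sketch, assuming you already downloaded the three files locally; the role names ("model", "text_encoder", "vae") and `install_files` are hypothetical helpers, and only the target folders come from the list above.

```python
import shutil
from pathlib import Path

# role -> ComfyUI subfolder, following the setup list above
# (the role names themselves are hypothetical labels)
TARGET_SUBDIRS = {
    "model": "models/diffusion_models",      # ace-step gguf
    "text_encoder": "models/text_encoders",  # umt5-base gguf
    "vae": "models/vae",                     # pig vae
}


def install_files(comfy_root, files):
    """Copy downloaded files into the matching ComfyUI model folders.

    `files` maps a role ("model", "text_encoder", "vae") to a local file path.
    Returns the destination paths; a file with the same name is overwritten.
    """
    root = Path(comfy_root)
    placed = []
    for role, src in files.items():
        if role not in TARGET_SUBDIRS:
            raise KeyError(f"unknown role: {role!r}")
        dest_dir = root / TARGET_SUBDIRS[role]
        dest_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
        dest = dest_dir / Path(src).name
        shutil.copy2(src, dest)  # copy, so the original download stays where it is
        placed.append(dest)
    return placed
```

usage (file names are placeholders for whatever you downloaded): `install_files("./ComfyUI", {"model": "ace-step.gguf", "text_encoder": "umt5-base.gguf", "vae": "pig.gguf"})`. copying rather than moving keeps the download folder intact in case you run more than one ComfyUI install.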

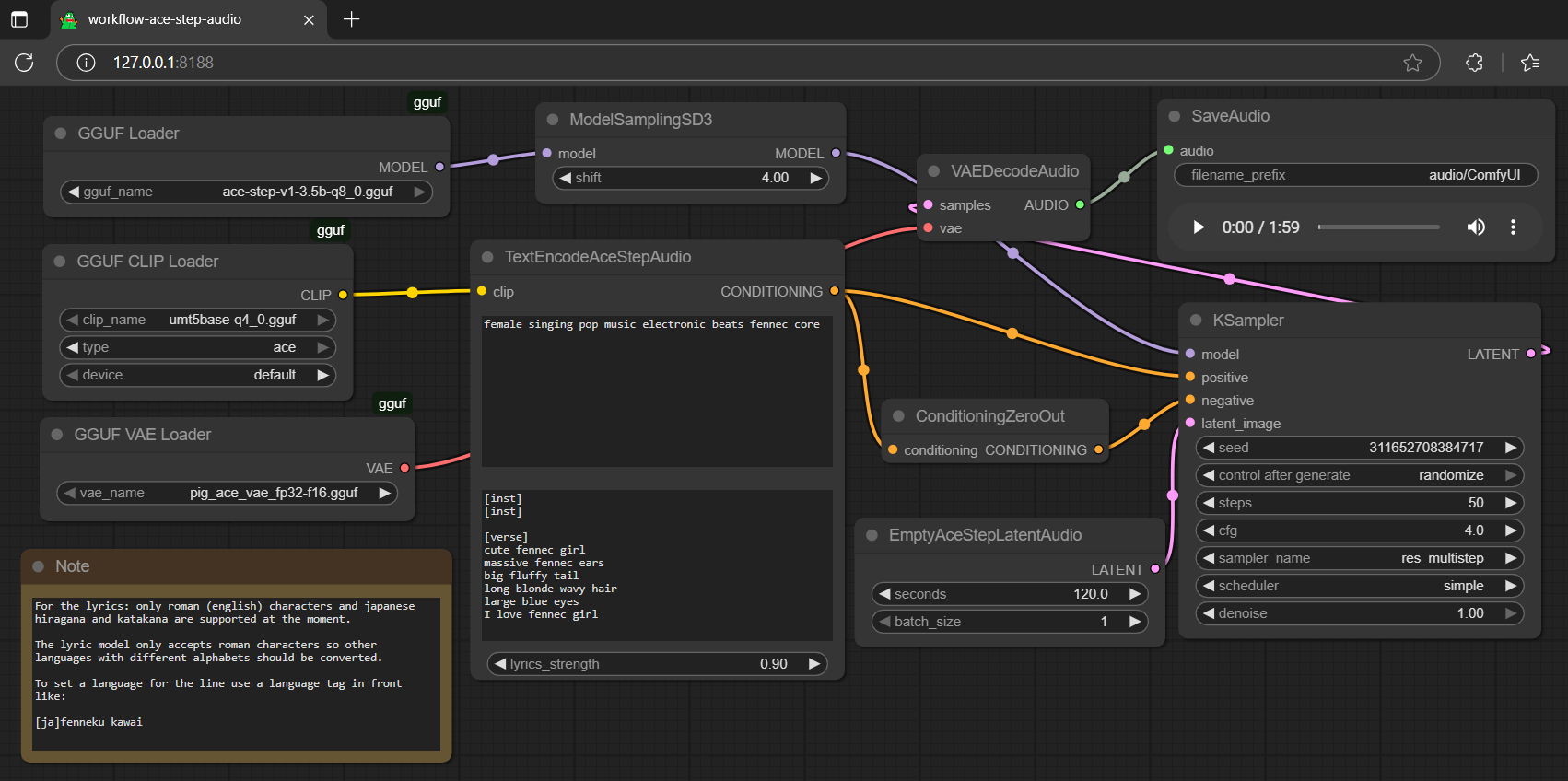

workflow

- drag the json or one of the demo audio files below into your browser (the ComfyUI page) to load the workflow

| Prompt | Audio Sample |
|---|---|
| female singing pop music electronic beats fennec core<br>cute fennec girl<br>massive fennec ears<br>big fluffy tail<br>long blonde wavy hair<br>large blue eyes<br>I love fennec girl | 🎧 ace-step |
| female singing pop music electronic beats fennec core<br>cute pinky pig<br>massive pinky ears<br>big fluffy tail<br>long cutie wavy hair<br>large blue eyes<br>I love pinky pig | 🎧 ace-audio |
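dragging the json into the browser is the easiest route; if you want to queue the same workflow from a script, a running ComfyUI server also accepts workflows on its local `/prompt` http endpoint. a minimal sketch, assuming a local server at the default `127.0.0.1:8188` and a workflow re-exported in api format (the drag-and-drop json is the ui format, which `/prompt` does not accept); the `client_id` value and both helper names are hypothetical.

```python
import json
import urllib.request

COMFY_URL = "http://127.0.0.1:8188"  # assumed: default address of a local ComfyUI server


def build_prompt_request(workflow_api, base_url=COMFY_URL, client_id="ace-gguf-demo"):
    """Build a POST request for ComfyUI's /prompt endpoint.

    `workflow_api` must be the api-format export (node id -> node dict),
    not the ui-format json you drag into the browser.
    """
    body = json.dumps({"prompt": workflow_api, "client_id": client_id}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(path, base_url=COMFY_URL):
    """Load an api-format workflow file and queue it on a running server."""
    with open(path, "r", encoding="utf-8") as f:
        workflow_api = json.load(f)
    with urllib.request.urlopen(build_prompt_request(workflow_api, base_url)) as resp:
        return json.loads(resp.read().decode("utf-8"))  # the server's json reply
```

splitting request building from sending keeps the payload easy to inspect before anything touches the network.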

review

- note: some key tensors have to stay in f32 for the model to work, so the file size may not shrink much; in general it still loads faster than the safetensors checkpoint (no last-minute bottleneck)

- the rebuilt umt5-base tokenizer logic works; upgrade your node to the latest version for umt5-base encoder support. the safetensors checkpoint is therefore no longer needed and has been removed from this repo; if you still want it, you can get it from comfyui-org

- get more umt5-base encoders here
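to see how much of a gguf file is taken up by the tensors kept in f32 (and so why the size does not drop much), you can total the bytes per storage type. a minimal sketch, assuming the `gguf` python package (gguf-py, maintained with llama.cpp) and its `GGUFReader`; the helper names are hypothetical, and the reader import is deferred so the summing helpers work without the package.

```python
from collections import defaultdict


def bytes_by_type(records):
    """Sum tensor sizes per storage type from (name, type_name, n_bytes) records."""
    totals = defaultdict(int)
    for _name, type_name, n_bytes in records:
        totals[type_name] += n_bytes
    return dict(totals)


def f32_share(totals):
    """Fraction of all tensor bytes stored as F32 (0.0 for an empty file)."""
    total = sum(totals.values())
    return totals.get("F32", 0) / total if total else 0.0


def read_gguf_records(path):
    """Yield (name, type_name, n_bytes) for each tensor in a GGUF file.

    Uses GGUFReader from the `gguf` package; imported here so the
    helpers above can be used without it installed.
    """
    from gguf import GGUFReader

    reader = GGUFReader(path)
    for tensor in reader.tensors:
        yield tensor.name, tensor.tensor_type.name, int(tensor.n_bytes)
```

usage (file name is a placeholder): `totals = bytes_by_type(read_gguf_records("model.gguf"))`, then `print(totals, f32_share(totals))`. a large F32 share means the quantized part saves less space than the quant level alone would suggest.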

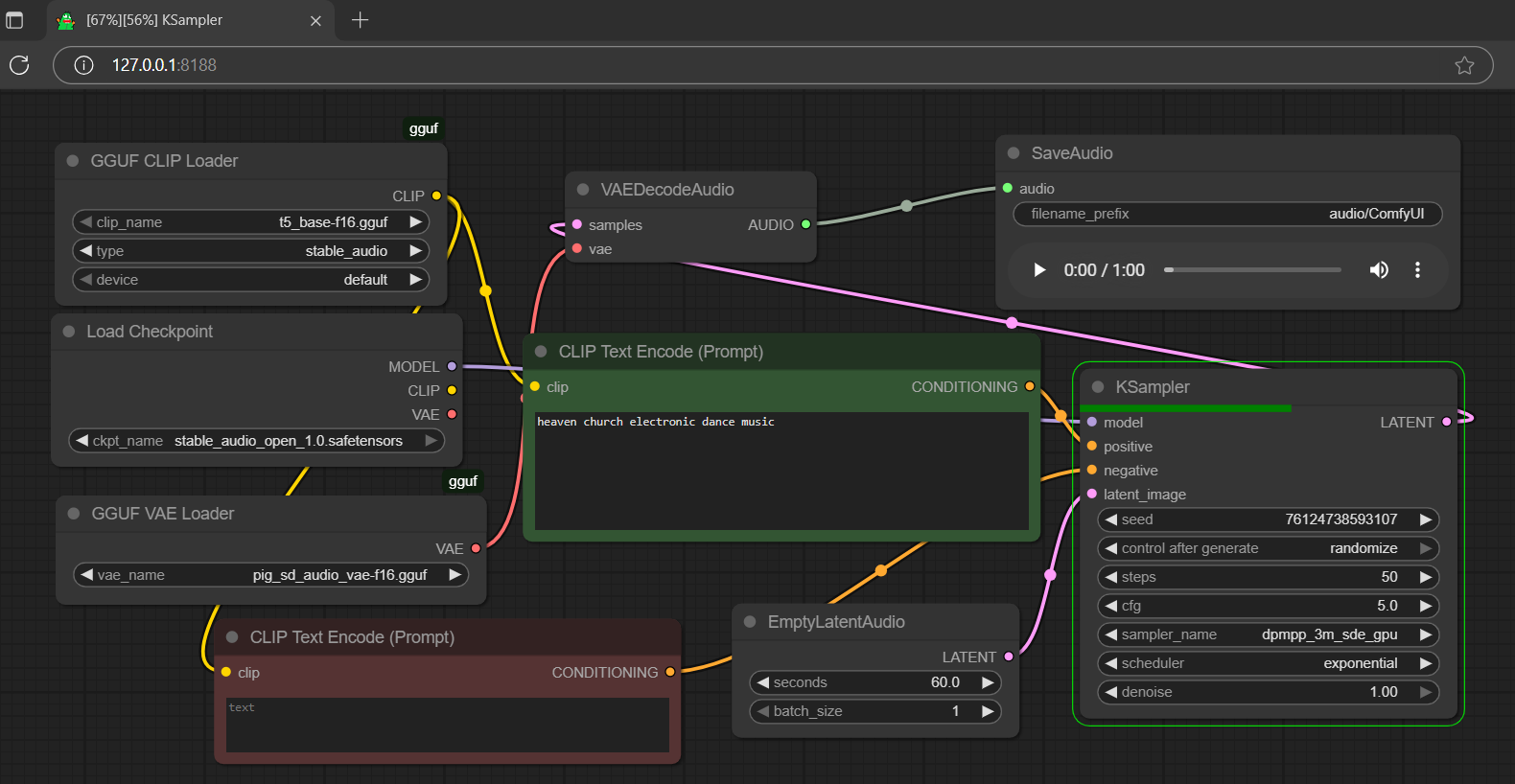

bonus: fp8/16/32 scaled stable-audio-open-1.0 with gguf quantized t5_base encoder

- base model from stabilityai

- note: this is a different model, so don't mix them up; it is also powerful and lightweight

setup (once)

- drag t5-base to > ./ComfyUI/models/text_encoders

- drag safetensors to > ./ComfyUI/models/checkpoints

- drag pig to > ./ComfyUI/models/vae
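since this set spreads three files over three folders, a quick check that each folder actually holds something can save a failed first run. a minimal sketch; the accepted extensions per folder are assumptions drawn from the setup list (t5-base is a gguf encoder, the checkpoint is safetensors, the vae is allowed either), and `missing_components` is a hypothetical helper.

```python
from pathlib import Path

# folder -> accepted file extensions (assumption based on the setup list)
EXPECTED = {
    "models/text_encoders": (".gguf",),
    "models/checkpoints": (".safetensors",),
    "models/vae": (".gguf", ".safetensors"),
}


def missing_components(comfy_root):
    """Return the subfolders that contain no file with an accepted extension."""
    root = Path(comfy_root)
    missing = []
    for subdir, exts in EXPECTED.items():
        folder = root / subdir
        found = folder.is_dir() and any(
            p.is_file() and p.suffix.lower() in exts for p in folder.iterdir()
        )
        if not found:
            missing.append(subdir)
    return missing
```

usage: `missing_components("./ComfyUI")` returns an empty list once all three pieces are in place. it only checks extensions, not which model a file belongs to.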

| Prompt | Audio Sample |
|---|---|
| heaven church electronic dance music | 🎧 stable-audio |

review

- note: the safetensors checkpoint in this repo is an extracted version that contains only the model and condition switch tensors (extremely lightweight); there is no clip or vae inside, so use it together with a separate clip (text encoder) and vae

- alternatively, get an fp8/16/32 scaled checkpoint with the model and vae embedded here

- get more t5-base encoders here
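to tell the extracted checkpoint apart from a full one (with clip and vae embedded), you can list its tensor names and group them by prefix without loading any weights. a minimal sketch; the prefixes are assumptions based on stable-audio-tools naming (`model.`, `conditioner.`, `pretransform.`), a re-keyed file would land under "other", and the helper names are hypothetical. listing keys uses `safe_open` from the `safetensors` package.

```python
from collections import Counter

# assumed key prefixes (stable-audio-tools naming); checked in order, so the
# more specific text-encoder prefix must come before plain "conditioner."
GROUPS = (
    ("conditioner.conditioners.prompt.", "text_encoder"),  # t5 inside a full checkpoint
    ("conditioner.", "other_conditioning"),                 # e.g. seconds_start / seconds_total
    ("pretransform.", "vae"),
    ("model.", "diffusion_model"),
)


def group_counts(keys):
    """Count tensor keys per component group; unmatched keys go to 'other'."""
    counts = Counter()
    for key in keys:
        label = next((name for prefix, name in GROUPS if key.startswith(prefix)), "other")
        counts[label] += 1
    return counts


def read_keys(path):
    """List tensor names in a safetensors file without loading the tensors."""
    from safetensors import safe_open  # needs the `safetensors` package and numpy

    with safe_open(path, framework="np") as f:
        return list(f.keys())
```

usage (file name is a placeholder): `group_counts(read_keys("stable-audio.safetensors"))`. under these assumed prefixes, a file with no clip and no vae inside should show zero "text_encoder" and "vae" keys.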

reference

- comfyui from comfyanonymous

- pig architecture from connector

- gguf-node (pypi|repo|pack)


model tree for calcuis/ace-gguf

- base model: ACE-Step/ACE-Step-v1-3.5B