---
license: mit
widget:
- text: a conversation between cgg and connector
  output:
    url: output-sample.mp4
tags:
- cow
- gguf-node
---

# 🐮cow architecture gguf encoder

- no need to rebuild the tokenizer from metadata ⏳🥊
- no need for a separate tokenizer file 🐱🥊
- no more oom issues (possibly) 💫💻🥊

## eligible model example |

|

|

- use **cow-mistral3small** [7.73GB](https://huggingface.co/chatpig/flux2-dev-gguf/blob/main/cow-mistral3-small-q2_k.gguf) for [flux2-dev](https://huggingface.co/chatpig/flux2-dev-gguf) |

|

|

- use **cow-gemma2** [2.33GB](https://huggingface.co/calcuis/cow-encoder/blob/main/cow-gemma2-2b-q4_0.gguf) for [lumina](https://huggingface.co/calcuis/lumina-gguf) |

|

|

- use **cow-umt5base** [451MB](https://huggingface.co/calcuis/cow-encoder/blob/main/cow-umt5base-iq4_nl.gguf) for [ace-audio](https://huggingface.co/calcuis/ace-gguf) |

|

|

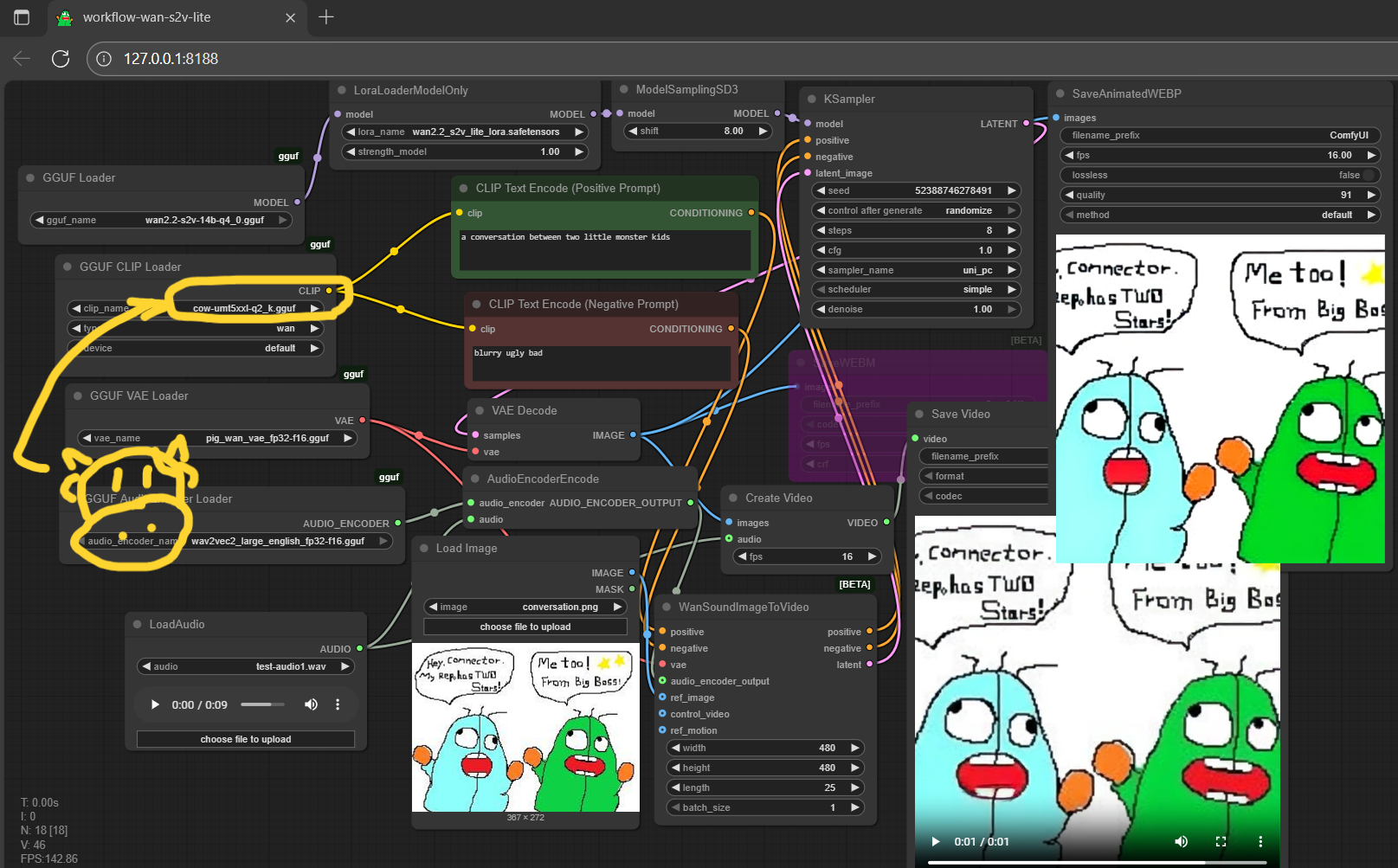

- use **cow-umt5xxl** [3.67GB](https://huggingface.co/calcuis/cow-encoder/blob/main/cow-umt5xxl-q2_k.gguf) for [wan-s2v](https://huggingface.co/calcuis/wan-s2v-gguf) or any wan video model |

|

|

|

|

|

|

|

|

|

|

|

the example workflow above is from wan-s2v-gguf; cow encoder is a specially designed clip, and even the lowest q2 quant still works very well; upgrade your node for cow-encoder support🥛🐮 and do drink more milk
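the encoder files listed above can also be fetched from a script. a minimal sketch, assuming the `huggingface_hub` package is installed; `COW_ENCODERS`, `encoder_url` and `fetch_encoder` are hypothetical names used only for illustration, the `models/text_encoders` target folder is an assumption about your local layout, and the repo ids and filenames are copied from the links in the list above:

```python
# Repo ids and filenames come from the links in the model list above.
# COW_ENCODERS, encoder_url and fetch_encoder are illustrative names only.
COW_ENCODERS = {
    "flux2-dev": ("chatpig/flux2-dev-gguf", "cow-mistral3-small-q2_k.gguf"),
    "lumina": ("calcuis/cow-encoder", "cow-gemma2-2b-q4_0.gguf"),
    "ace-audio": ("calcuis/cow-encoder", "cow-umt5base-iq4_nl.gguf"),
    "wan": ("calcuis/cow-encoder", "cow-umt5xxl-q2_k.gguf"),
}


def encoder_url(model: str) -> str:
    """Return the direct-download URL of the encoder paired with `model`."""
    repo_id, filename = COW_ENCODERS[model]
    return f"https://huggingface.co/{repo_id}/resolve/main/{filename}"


def fetch_encoder(model: str, local_dir: str = "models/text_encoders") -> str:
    """Download the encoder for `model` and return the local file path.

    The default `local_dir` is an assumed folder; point it wherever your
    node expects text encoders.
    """
    # Imported lazily so the lookup helpers work without the package.
    from huggingface_hub import hf_hub_download

    repo_id, filename = COW_ENCODERS[model]
    return hf_hub_download(repo_id=repo_id, filename=filename, local_dir=local_dir)
```

for example, `encoder_url("wan")` gives the link for the umt5xxl q2_k file, and `fetch_encoder("wan")` would download the same file (3.67GB) into the assumed folder.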

<Gallery />

### **reference**

- gguf-node ([pypi](https://pypi.org/project/gguf-node)|[repo](https://github.com/calcuis/gguf)|[pack](https://github.com/calcuis/gguf/releases)) |