gguf quantized version of higgs audio v2

- base model from bosonai

- text-to-speech synthesis

run it with gguf-connector

`ggc h2`

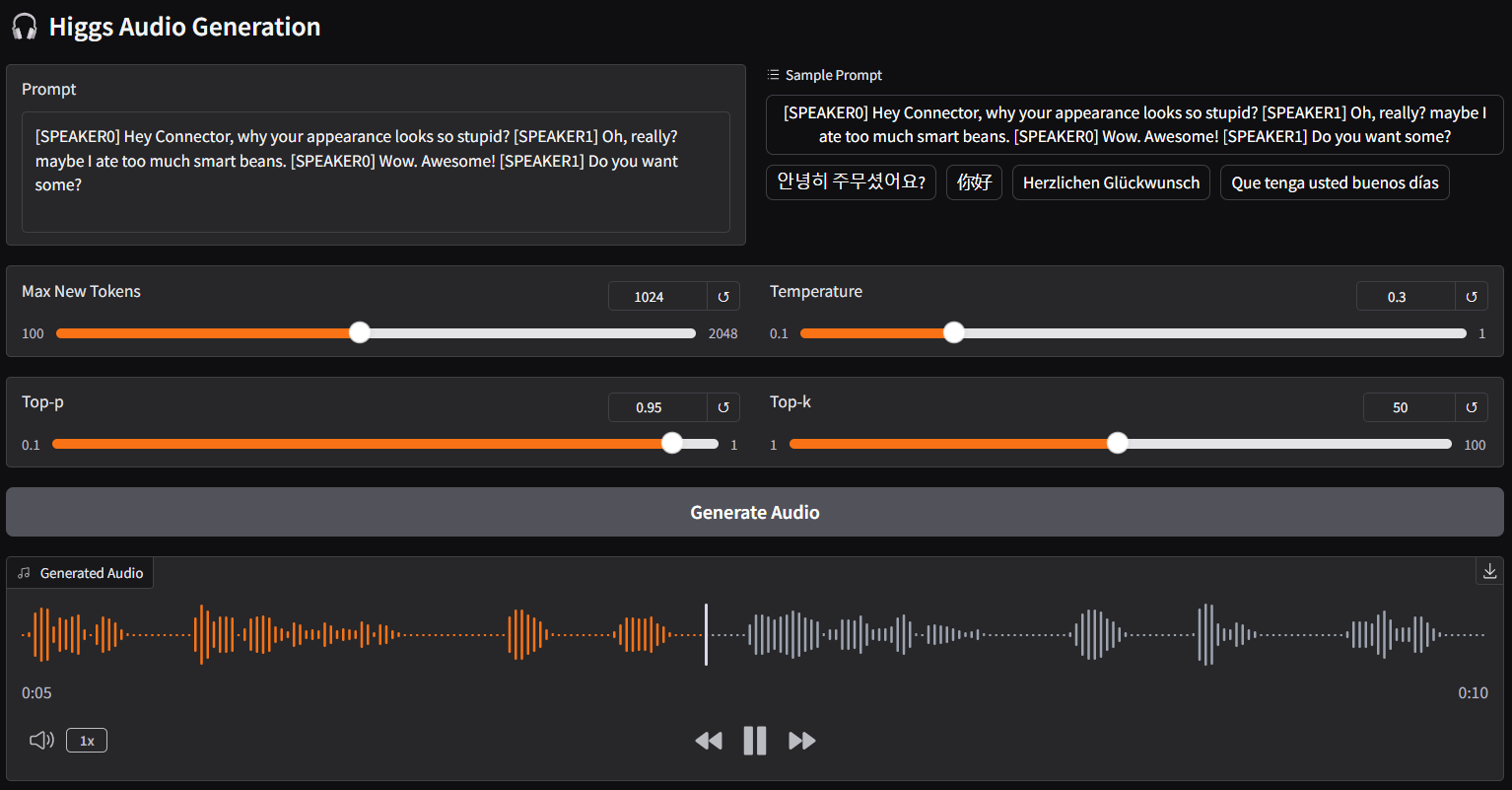

| Prompt | Audio Sample |
|---|---|
| [SPEAKER0] Hey Connector, why your appearance looks so stupid?[SPEAKER1] Oh, really? maybe I ate too much smart beans.[SPEAKER0] Wow. Awesome![SPEAKER1] Do you want some? | 🎧 audio-1-english |
| 안녕히 주무셨어요? ("Did you sleep well?") | 🎧 audio-2-korean |
| 你好 ("Hello") | 🎧 audio-3-chinese |
| Herzlichen Glückwunsch ("Congratulations") | 🎧 audio-4-german |
| Que tenga usted buenos días ("Have a good day") | 🎧 audio-5-spanish |
| こんにちは ("Hello") | 🎧 audio-6-japanese |
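The English sample marks each turn of a two-speaker dialogue with a `[SPEAKER0]` / `[SPEAKER1]` tag. If you want to build or inspect such prompts programmatically, the sketch below splits a tagged prompt into `(speaker_id, text)` turns and joins them back. It assumes only the `[SPEAKER<n>]` pattern visible in the sample; `split_turns` and `join_turns` are hypothetical helpers, not part of gguf-connector, and this is not a description of how the model itself parses the tags.

```python
import re
from typing import List, Tuple

# Matches a speaker tag such as "[SPEAKER0]" and captures the numeric id.
# Assumption: tags follow the "[SPEAKER<n>]" pattern seen in the sample prompt.
_TAG = re.compile(r"\[SPEAKER(\d+)\]")


def split_turns(prompt: str) -> List[Tuple[int, str]]:
    """Split a multi-speaker prompt into (speaker_id, text) turns.

    Any text before the first tag is ignored; an untagged prompt yields [].
    """
    matches = list(_TAG.finditer(prompt))
    turns: List[Tuple[int, str]] = []
    for i, m in enumerate(matches):
        # This turn's text runs until the next tag, or to the end of the prompt.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(prompt)
        turns.append((int(m.group(1)), prompt[m.end():end].strip()))
    return turns


def join_turns(turns: List[Tuple[int, str]]) -> str:
    """Rebuild a prompt in the same format as the sample: tag, space, text."""
    return "".join(f"[SPEAKER{sid}] {text}" for sid, text in turns)


if __name__ == "__main__":
    sample = ("[SPEAKER0] Hey Connector, why your appearance looks so stupid?"
              "[SPEAKER1] Oh, really? maybe I ate too much smart beans."
              "[SPEAKER0] Wow. Awesome![SPEAKER1] Do you want some?")
    for sid, text in split_turns(sample):
        print(sid, text)
```

Because `join_turns` writes no separator between turns, a round trip reproduces the sample prompt exactly, which makes it easy to edit one line of a dialogue without disturbing the tag layout.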

reference

- simply execute the command (`ggc h2`) above in a console/terminal

- note: the model file(s) will be pulled to the local cache automatically during the first launch; after that you can run it entirely offline, i.e., from the local URL http://127.0.0.1:7860 with the lazy webui

- gguf-connector (pypi)
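Since the first launch has to download the model file(s) before the webui comes up, a script that drives the UI may want to wait until http://127.0.0.1:7860 responds. The sketch below polls that address with the standard library. It is an illustration only: `wait_for_webui` is a hypothetical helper (not a gguf-connector API), it assumes the webui answers plain HTTP at that address, and the 300-second default timeout is an arbitrary allowance for the initial download.

```python
import time
import urllib.error
import urllib.request

# Local address of the webui, as given in the note above.
WEBUI_URL = "http://127.0.0.1:7860"


def wait_for_webui(url: str = WEBUI_URL, timeout: float = 300.0,
                   interval: float = 2.0) -> bool:
    """Poll `url` until it answers an HTTP request or `timeout` seconds pass.

    Returns True as soon as the server responds, False if it never does.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server replied with an error status, so it is still up.
            return True
        except OSError:
            # Connection refused or timed out: not ready yet (URLError is an OSError).
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    # Start `ggc h2` in another terminal first, then run this script.
    print("webui ready" if wait_for_webui() else "webui did not come up in time")
```

Treating an HTTP error status as "up" is deliberate: the check only asks whether something is listening and speaking HTTP, not whether a particular page exists.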



Model tree for calcuis/higgs-gguf

- base model: bosonai/higgs-audio-v2-generation-3B-base