How to quantize Illustrious models?

Did you use this method from your GitHub?

https://github.com/calcuis/gguf-quantizor

I can't get it working. Any tips for creating q4 and lower quants?

follow the instruction/description in the repo, simply,

- get the quantizor.exe

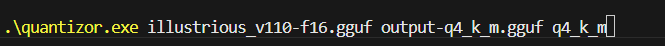

- execute the command as below

- .\quantizor.exe [input_path] [output_path] [tag]

For example:

`.\quantizor.exe your-gguf-f16.gguf output-gguf-q4_k_m.gguf q4_k_m`
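If you want several quants from one f16 file, a small Python wrapper can call the same command once per tag. This is a minimal sketch, not part of the repo: it assumes `quantizor.exe` sits in the current directory and takes exactly the three positional arguments `[input_path] [output_path] [tag]` shown above. Only `q4_k_m` is confirmed in this thread; the other tags are assumed llama.cpp-style names and may not all be accepted. The helper names and the output naming scheme are hypothetical.

```python
import subprocess
from pathlib import Path

# Only q4_k_m is confirmed by the instructions; the others are ASSUMED llama.cpp-style tags.
DEFAULT_TAGS = ("q8_0", "q5_k_m", "q4_k_m", "q3_k_m", "q2_k")


def output_path_for(input_path: str, tag: str) -> str:
    """Hypothetical naming: model-f16.gguf -> model-<tag>.gguf in the same folder."""
    src = Path(input_path)
    stem = src.stem
    # Drop a trailing precision suffix such as "-f16" or "-bf16" if present.
    for suffix in ("-f16", "-bf16"):
        if stem.endswith(suffix):
            stem = stem[: -len(suffix)]
            break
    return str(src.with_name(f"{stem}-{tag}.gguf"))


def build_command(exe: str, input_path: str, output_path: str, tag: str) -> list[str]:
    """Mirror the documented form: quantizor.exe [input_path] [output_path] [tag]."""
    return [exe, input_path, output_path, tag]


def quantize_all(input_path: str, tags=DEFAULT_TAGS, exe: str = r".\quantizor.exe") -> None:
    """Run the quantizor once per tag, stopping at the first failure."""
    for tag in tags:
        cmd = build_command(exe, input_path, output_path_for(input_path, tag), tag)
        print("running:", " ".join(cmd))
        # check=True raises CalledProcessError if the exe exits with a non-zero code.
        subprocess.run(cmd, check=True)
```

For example, `quantize_all("miaomiaoHarem_vPredDogma10-f16.gguf", tags=["q4_k_m"])` would run the same command as the example above, writing `miaomiaoHarem_vPredDogma10-q4_k_m.gguf`. It only automates the loop; if the exe itself does not run on your machine, this will not fix that.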

btw, you first have to make the bf16 or f16 GGUF with the convertor alpha.

I tried, and nothing happened. I went to ComfyUI and used your alpha converter to turn an Illustrious model into a GGUF. I installed your gguf-quantizor on my drive and followed the instructions. Then nothing happened.

`PS E:\gguf-quantizor> .\quantizor.exe miaomiaoHarem_vPredDogma10-f16.gguf output-gguf-q4_k_m.gguf q4_k_m`

Do you have a C/C++ compiler on your machine? You don't need to install the quantizor, it's just an exe file, but it only works once the PATH for your C/C++ compiler is set.
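A quick way to see whether any C/C++ compiler is reachable through your PATH is to ask Python's `shutil.which`, which searches PATH the same way a shell would. This is a sketch under the assumption that your toolchain uses one of the common executable names listed below (GCC/MinGW, Clang, or MSVC's `cl`); it only reports what is on PATH and does not install or configure anything.

```python
import shutil

# Common C/C++ compiler executable names (ASSUMED; your toolchain may use others).
CANDIDATES = ("gcc", "g++", "clang", "clang++", "cl")


def find_compilers(candidates=CANDIDATES, path=None) -> dict:
    """Return {name: full_path} for each candidate found on PATH (or on `path` if given)."""
    found = {}
    for name in candidates:
        location = shutil.which(name, path=path)
        if location is not None:
            found[name] = location
    return found


if __name__ == "__main__":
    compilers = find_compilers()
    if compilers:
        for name, location in compilers.items():
            print(f"{name}: {location}")
    else:
        print("No C/C++ compiler found on PATH")
```

If it prints nothing useful, the compiler's `bin` folder is probably not on your PATH yet.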

Put quantizor.exe and the f16 GGUF in the same directory and run the command.
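Before running the command, a small preflight check can rule out the simple mistakes: the exe and the model not being in the same folder, or the input not actually being a GGUF file. This is a hypothetical helper, not something the repo ships; the one fixed fact it relies on is that GGUF files begin with the four ASCII bytes `GGUF`.

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # GGUF files begin with these four ASCII bytes


def looks_like_gguf(header: bytes) -> bool:
    """True if the first bytes of a file match the GGUF magic number."""
    return header[:4] == GGUF_MAGIC


def preflight(workdir: str, model_name: str, exe_name: str = "quantizor.exe") -> list[str]:
    """Return a list of problems found; an empty list means the folder looks ready."""
    folder = Path(workdir)
    problems = []
    # The exe and the f16 GGUF are expected to share one directory.
    if not (folder / exe_name).is_file():
        problems.append(f"{exe_name} not found in {folder}")
    model = folder / model_name
    if not model.is_file():
        problems.append(f"{model_name} not found in {folder}")
    else:
        with model.open("rb") as fh:
            if not looks_like_gguf(fh.read(4)):
                problems.append(f"{model_name} is not a GGUF file (bad magic bytes)")
    return problems


if __name__ == "__main__":
    # Hypothetical usage: run from the folder holding quantizor.exe and your model.
    issues = preflight(".", "your-gguf-f16.gguf")
    print("\n".join(issues) if issues else "looks ready")
```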

It will start processing and give you the output file afterwards, like this:

I tried, but I can't get it working. Is there any way to implement this in ComfyUI so we could get all the quants done there? I kind of give up; it's above my pay grade. Even AI couldn't help me get it running after two days.

np. The quantization code is based on llama.cpp, which is written in C/C++, so it can't run without a compiler; C is very efficient for those low-level RAM/memory operations. For old-school users, everybody has C, Java, etc. compilers installed by default; otherwise you can't even use the program, and that isn't exclusive to developers.