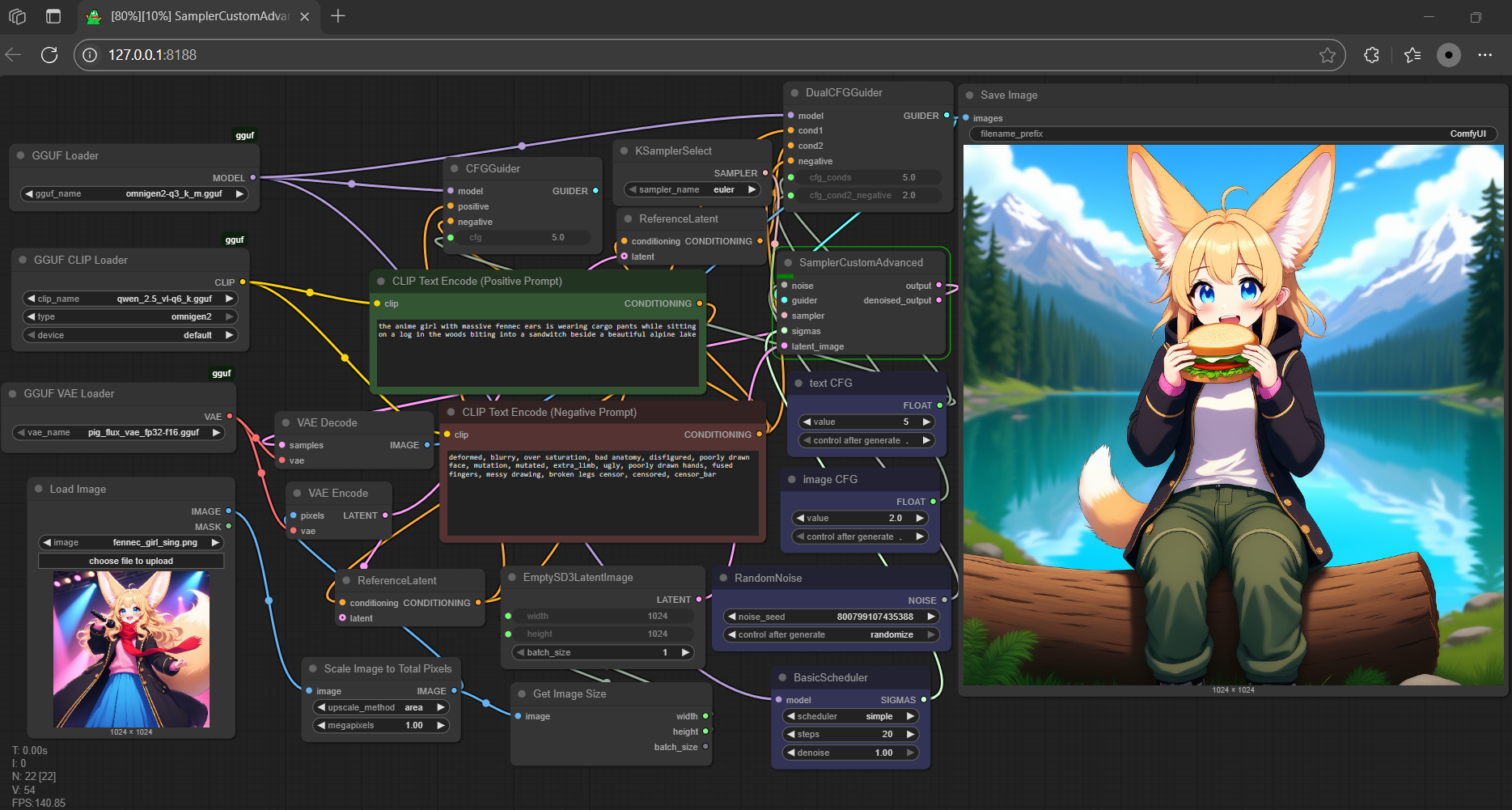

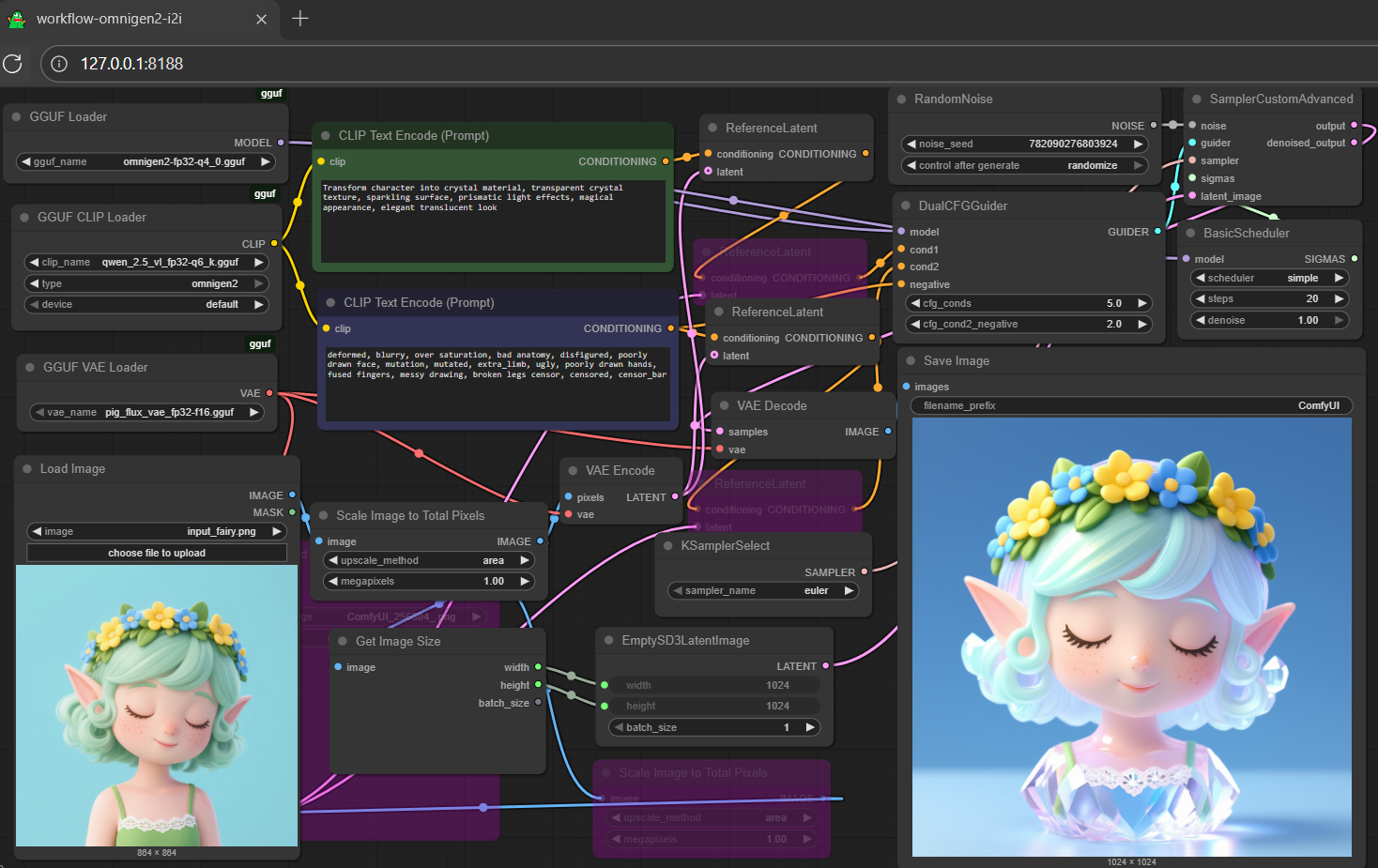

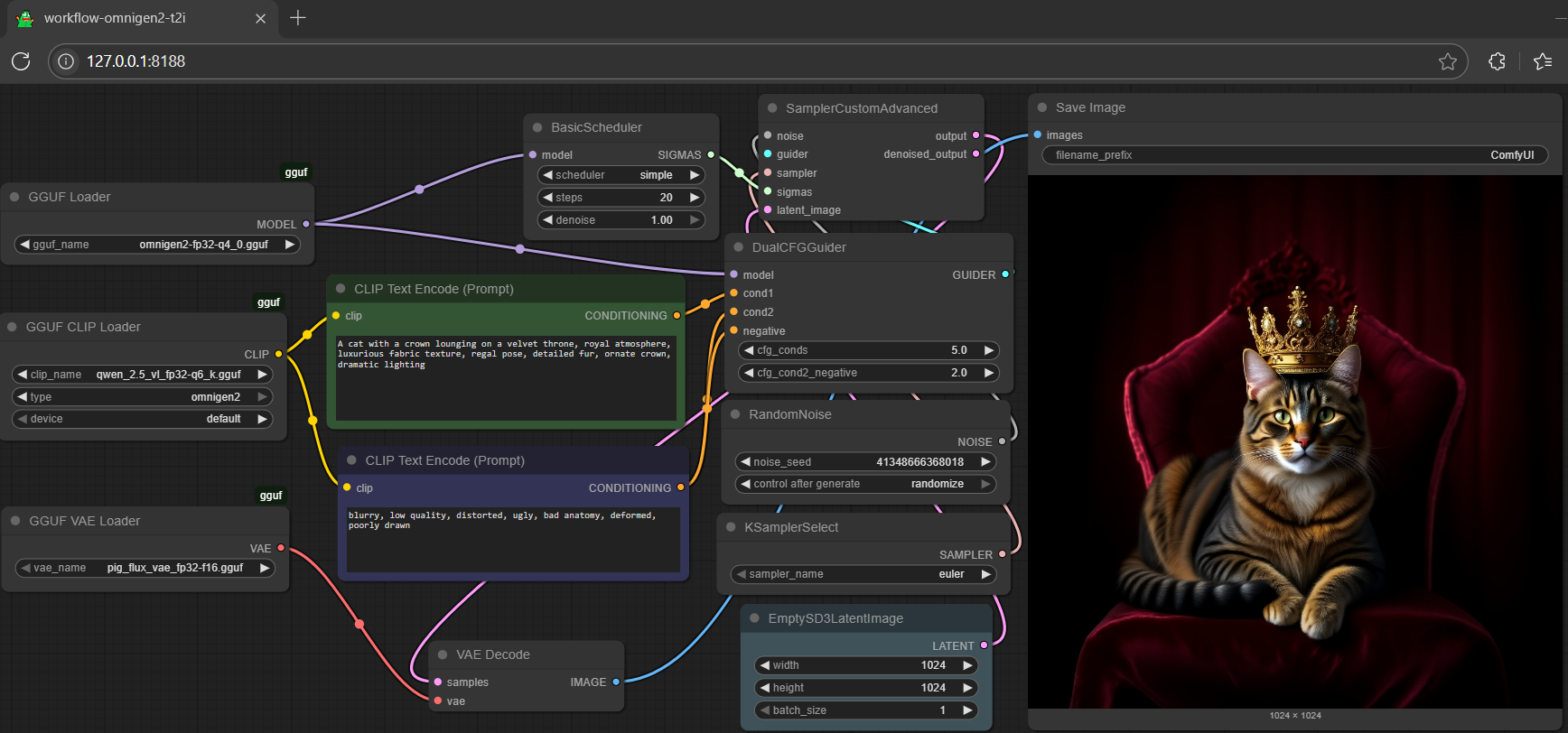

gguf quantized version of omnigen2

- drag omnigen2 to > ./ComfyUI/models/diffusion_models

- drag qwen2.5 to > ./ComfyUI/models/text_encoders

- drag pig to > ./ComfyUI/models/vae
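The placement step above can also be scripted. The following is a minimal sketch. It assumes the downloaded files sit in one folder and that their names contain `pig`, `qwen2.5` or `omnigen2`. The keyword matching and the `place_files` / `is_gguf` helpers are hypothetical and are not part of gguf-node or ComfyUI. The only fixed fact it relies on is that every GGUF file starts with the 4 magic bytes `GGUF`, which it checks before copying.

```python
import shutil
from pathlib import Path

# Hypothetical keyword -> ComfyUI subfolder mapping (folders taken from this card).
# More specific keywords come first so a name matching several goes to the right place.
DESTINATIONS = {
    "pig": "models/vae",
    "qwen2.5": "models/text_encoders",
    "omnigen2": "models/diffusion_models",
}

GGUF_MAGIC = b"GGUF"  # every GGUF file begins with these four bytes


def is_gguf(path: Path) -> bool:
    """Return True if the file starts with the GGUF magic bytes."""
    with path.open("rb") as f:
        return f.read(4) == GGUF_MAGIC


def place_files(download_dir: Path, comfy_root: Path) -> dict:
    """Copy each .gguf file into the ComfyUI folder its name maps to.

    Returns {file name: destination path} for every file that was copied.
    """
    placed = {}
    for src in sorted(download_dir.glob("*.gguf")):
        if not is_gguf(src):
            continue  # has a .gguf suffix but no GGUF header: skip it
        name = src.name.lower()
        for keyword, subdir in DESTINATIONS.items():
            if keyword in name:
                dest_dir = comfy_root / subdir
                dest_dir.mkdir(parents=True, exist_ok=True)
                dest = dest_dir / src.name
                shutil.copy2(src, dest)  # copy, keeping the original download
                placed[src.name] = dest
                break
    return placed
```

For example, `place_files(Path.home() / "Downloads", Path("./ComfyUI"))` would copy matching files into the three folders listed above and leave anything unrecognized where it is.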

- Prompt: the anime girl with massive fennec ears is wearing cargo pants while sitting on a log in the woods, biting into a sandwich beside a beautiful alpine lake


- no safetensors needed anymore; everything is gguf (model + encoder + vae)

- the full gguf set works with gguf-node (see the last item in the reference list below)

- t2i (text-to-image) is roughly 3x to 5x faster than i2i (image-to-image) or image editing

- get more qwen2.5 gguf encoders here (for this encoder, q4 or above is recommended so that the embedded visual/vision tensors work properly, especially if you need image input)

- alternatively, you could get an fp8-e4m3fn safetensors encoder here, or make one with TENSOR Cutter (Beta); it works pretty well too, and you don't even need to switch loaders (the gguf clip loader supports scaled fp8 safetensors)
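To follow the q4-or-above advice when picking an encoder file, the quant level can be read off the file name. The following is a minimal sketch. It assumes the files use the common `q4_0` / `q5_k_m` / `q8_0` / `f16` tag style, which is an assumption, so check the actual names in the repo. `quant_bits` and `ok_for_image_input` are hypothetical helpers. The digit after `q` is treated only as a nominal bit width, not as the exact storage cost per weight.

```python
import re
from typing import Optional

# Hypothetical naming convention: quant tags like q4_0, q5_k_m, q8_0, f16, bf16 or f32,
# separated from the rest of the file name by a non-alphanumeric character.
QUANT_TAG = re.compile(
    r"(?<![a-z0-9])(q(\d)(?:_[a-z0-9]+)*|bf16|f16|f32)(?![a-z0-9])",
    re.IGNORECASE,
)


def quant_bits(filename: str) -> Optional[int]:
    """Return the nominal bit width encoded in a file name, or None if no tag is found."""
    match = QUANT_TAG.search(filename)
    if match is None:
        return None
    if match.group(2):  # qN_* family: the digit after "q" is the nominal bit width
        return int(match.group(2))
    return 32 if match.group(1).lower() == "f32" else 16  # f16 / bf16


def ok_for_image_input(filename: str, min_bits: int = 4) -> bool:
    """Check the card's advice: q4 or above for the qwen2.5 encoder with image input."""
    bits = quant_bits(filename)
    return bits is not None and bits >= min_bits
```

Under this sketch, `ok_for_image_input("qwen2.5-vl-7b-q3_k_m.gguf")` is `False` and a `q4_0` or `q8_0` file passes. Names with tags it does not recognize (for example `iq4_xs`) return `None` and fail the check, which errs on the side of caution.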

reference

- base model from omnigen2

- comfyui from comfyanonymous

- gguf-node (pypi|repo|pack)


model tree for calcuis/omnigen2-gguf: base model OmniGen2/OmniGen2