license: cc-by-nc-sa-4.0
size_categories:
- 1K<n<10K
task_categories:
- image-text-to-text
- image-segmentation
language:
- en
tags:
- vqa
- cultural-understanding
- cultural
configs:
- config_name: Image_List
  data_files:
  - split: test
    path: image_data/Image_List.csv

Paper | Project Page | Code

Seeing Culture Benchmark (SCB)

Evaluating Visual Reasoning and Grounding in Cultural Context

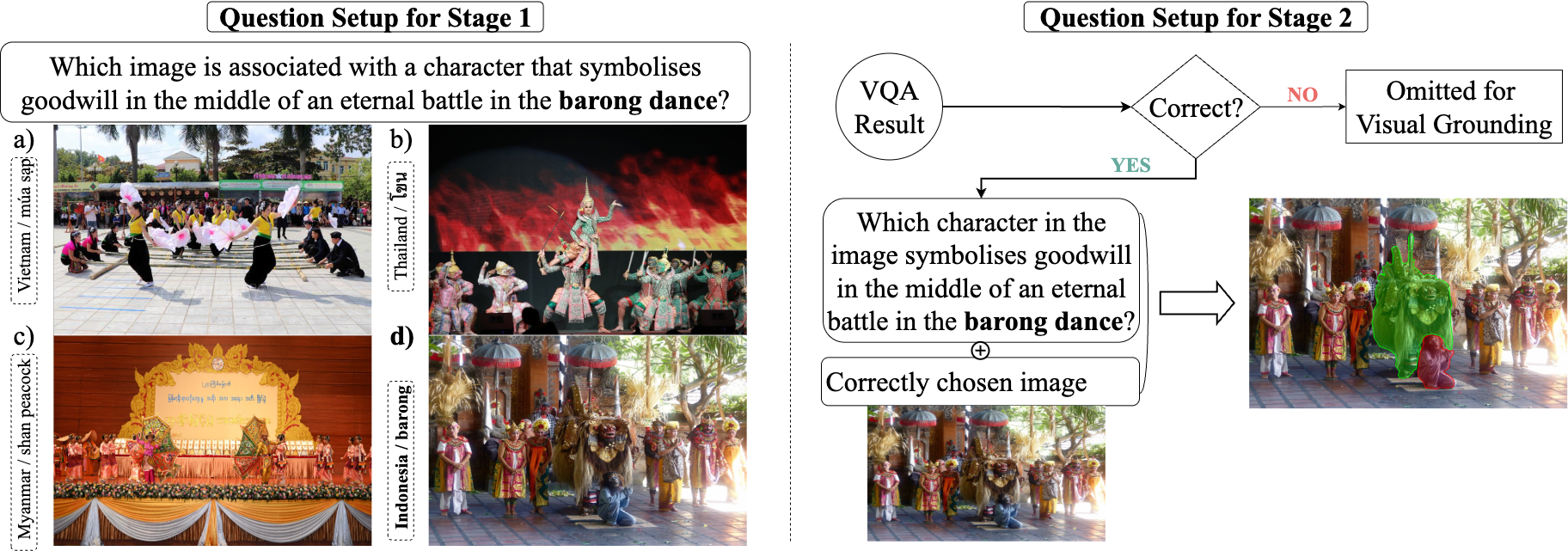

Multimodal vision-language models (VLMs) have made substantial progress on tasks that require a combined understanding of visual and textual content, particularly cultural understanding tasks, helped by the emergence of new cultural datasets. However, these datasets frequently fall short of testing cultural reasoning and underrepresent many cultures. In this work, we introduce the Seeing Culture Benchmark (SCB), which focuses on cultural reasoning through a novel approach that requires VLMs to reason over culturally rich images in two stages: i) selecting the correct visual option in multiple-choice visual question answering (VQA), and ii) segmenting the relevant cultural artifact as evidence of reasoning. Visual options in the first stage are systematically organized into three types: those originating from the same country, those from different countries, or a mixed group. Notably, for each type, all options are drawn from a single category. The second stage is attempted only after the correct visual option has been chosen.

The SCB benchmark comprises 1,065 images that capture 138 cultural artifacts across five categories from seven Southeast Asian countries, whose diverse cultures are often overlooked. The images are accompanied by 3,178 questions, of which 1,093 are unique and meticulously curated by human annotators. Our evaluation of various VLMs reveals the complexities involved in cross-modal cultural reasoning and highlights the disparity between visual reasoning and spatial grounding in culturally nuanced scenarios. The SCB serves as a crucial benchmark for identifying these shortcomings, thereby guiding future developments in the field of cultural reasoning.

See the project website for more information: https://seeingculture-benchmark.github.io/
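
The two-stage protocol described above can be sketched as a small scoring routine. This is only an illustration, not the official evaluation code: it assumes intersection over union (IoU) as the grounding metric, which this README does not specify, and the dictionary keys (pred_option, gold_option, pred_mask, gold_mask) are hypothetical names chosen for the example. Since the answers and masks are not yet public, it can only be run on your own annotated data.

```python
def mask_iou(pred, gold):
    """Intersection over union of two binary masks given as nested lists of 0/1."""
    inter = 0
    union = 0
    for pred_row, gold_row in zip(pred, gold):
        for p, g in zip(pred_row, gold_row):
            inter += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    return inter / union if union else 0.0


def two_stage_scores(examples):
    """Return (stage-1 accuracy, stage-2 mean IoU over correctly answered items).

    Each example is a dict with hypothetical keys:
    'pred_option', 'gold_option', 'pred_mask', 'gold_mask'.
    Stage 2 is scored only where stage 1 was answered correctly.
    """
    correct = [e for e in examples if e["pred_option"] == e["gold_option"]]
    accuracy = len(correct) / len(examples) if examples else 0.0
    ious = [mask_iou(e["pred_mask"], e["gold_mask"]) for e in correct]
    mean_iou = sum(ious) / len(ious) if ious else 0.0
    return accuracy, mean_iou
```

Gating the mask score on a correct answer mirrors the rule that a model only progresses to segmentation after choosing the right visual option.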

Loading the dataset

- image_data/Image_List.csv contains the image list (exposed as the Image_List config, test split).

- mcq_data/MCQ_List_no_ans.csv contains the multiple-choice questions (MCQs), without answers.

- mcq_data/MCQ_Avoid_List.csv contains the avoid list for MCQ generation. See the GitHub repository for more details about MCQ generation.
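
As a minimal sketch of reading the three files listed above, the snippet below uses only the Python standard library and assumes the repository files have already been downloaded to a local folder. The helper name load_scb_tables and the dictionary keys are illustrative, not part of the dataset; no column names are assumed, since they are read from each file's header row.

```python
import csv
from pathlib import Path

# File locations as listed in this README, relative to the dataset root.
SCB_FILES = {
    "images": "image_data/Image_List.csv",
    "mcq": "mcq_data/MCQ_List_no_ans.csv",
    "avoid": "mcq_data/MCQ_Avoid_List.csv",
}


def load_scb_tables(root):
    """Read each CSV that exists under `root` into a list of row dicts."""
    tables = {}
    for name, rel_path in SCB_FILES.items():
        path = Path(root) / rel_path
        if not path.is_file():
            continue  # skip files that have not been downloaded
        # utf-8-sig also handles files that start with a byte-order mark
        with path.open(newline="", encoding="utf-8-sig") as handle:
            tables[name] = list(csv.DictReader(handle))
    return tables


if __name__ == "__main__":
    # Assumes the dataset files sit under the current directory.
    for name, rows in load_scb_tables(".").items():
        columns = list(rows[0].keys()) if rows else []
        print(f"{name}: {len(rows)} rows, columns = {columns}")
```

Printing the column names first is a quick way to check the schema before writing any model-specific code.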

Sample Usage

The GitHub repository provides an MCQ Generator. You can set it up and use it with the following commands:

Multiple Choice Question Generator Setup

$ source setup.sh

Usage

$ source generate.sh

Output

- The output will be saved in the ./ directory in questions_{timestamp}.jsonl format.

- Please download data.zip and unpack it to the same directory as the generate.sh file.

- You can visualize the output using the visualizer.ipynb file (please change the JSON file name accordingly).
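
If you want to inspect the generated questions outside the notebook, the output file can be read as JSON Lines. The sketch below is an assumption-laden example rather than part of the repository: the helper names are hypothetical, and it treats each line as an independent JSON object without assuming any particular field names.

```python
import json
from pathlib import Path


def latest_questions_file(directory="."):
    """Return the most recently modified questions_*.jsonl file, or None."""
    candidates = sorted(
        Path(directory).glob("questions_*.jsonl"),
        key=lambda p: p.stat().st_mtime,
    )
    return candidates[-1] if candidates else None


def read_jsonl(path):
    """Parse a JSON Lines file into a list of objects, skipping blank lines."""
    records = []
    with Path(path).open(encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if line:
                records.append(json.loads(line))
    return records


if __name__ == "__main__":
    path = latest_questions_file(".")
    if path is None:
        print("No questions_*.jsonl file found; run generate.sh first.")
    else:
        records = read_jsonl(path)
        print(f"{path.name}: {len(records)} records")
```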

Note:

The answers to the MCQs and the visual segmentation masks are not yet publicly available. We are working on a competition where participants can upload their predictions and evaluate their models, fostering a collaborative environment. Stay tuned for more updates! If you need to evaluate urgently, please contact [email protected]

Usage and License

The Seeing Culture Benchmark (SCB) is a test-only benchmark for evaluating models. It is designed to test the ability of models to understand and reason about visual, culture-related content. The images are scraped from the internet and are not owned by the authors. All annotations are released under the CC BY-NC-SA 4.0 license.

Citation Information

If you use this dataset, please cite our paper, accepted to the EMNLP 2025 Main Conference:

@misc{satar2025seeingculturebenchmarkvisual,
      title={Seeing Culture: A Benchmark for Visual Reasoning and Grounding},
      author={Burak Satar and Zhixin Ma and Patrick A. Irawan and Wilfried A. Mulyawan and Jing Jiang and Ee-Peng Lim and Chong-Wah Ngo},
      year={2025},
      eprint={2509.16517},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2509.16517},
}