Large Language Diffusion with Ordered Unmasking (LLaDOU)

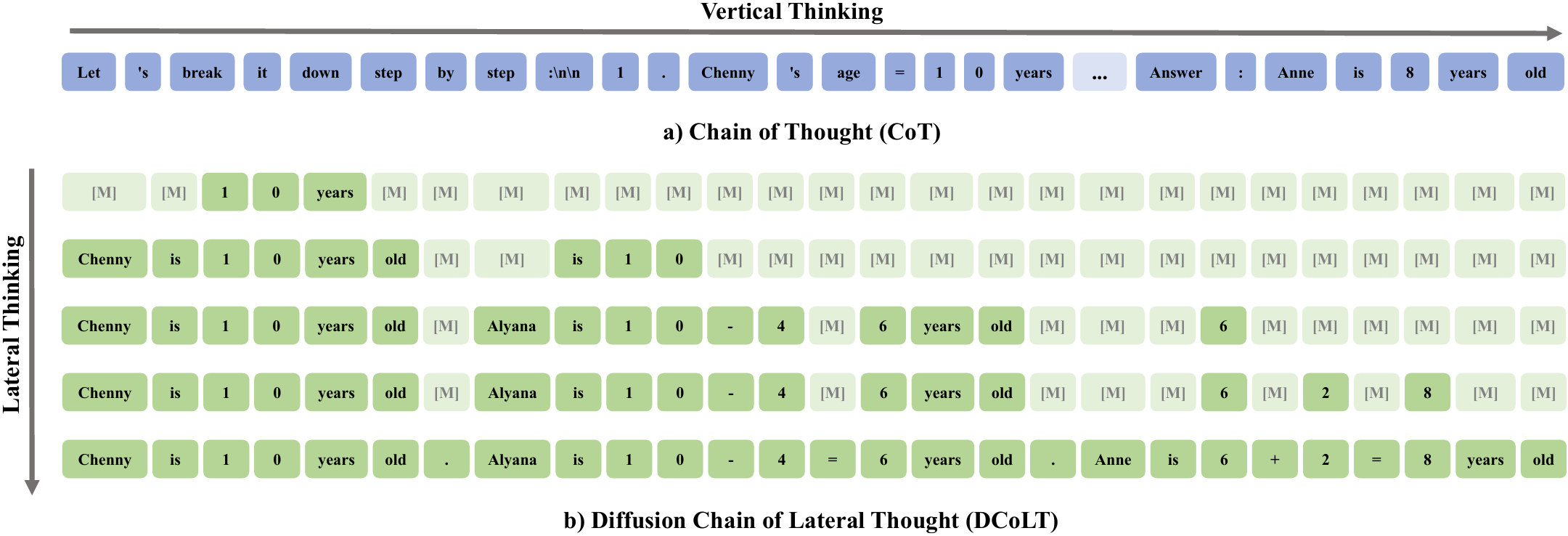

We introduce Large Language Diffusion with Ordered Unmasking (LLaDOU), a diffusion language model trained with reinforcement learning to follow a new reasoning paradigm for diffusion language models: the Diffusion Chain of Lateral Thought (DCoLT).

Compared with standard chain-of-thought (CoT) reasoning, DCoLT has several distinguishing features:

- Bidirectional Reasoning: bidirectional self-attention masks allow the whole sequence to be refined globally throughout generation.

- Format-Free Reasoning: intermediate steps of thought are not required to be grammatically correct.

- Nonlinear Generation: tokens can be generated at different positions at each step, rather than strictly left to right.
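To make the bidirectional and nonlinear features concrete, the following sketch contrasts a causal (left-to-right) attention mask with the bidirectional mask a diffusion language model uses. It then runs a toy unmasking loop that reveals tokens at non-contiguous positions at each step. This is a minimal illustration under stated assumptions, not LLaDOU's implementation. `toy_predict`, `MASK`, and the random confidence scores are hypothetical stand-ins for the denoising model. The greedy top-k selection is a simplification standing in for whatever unmasking order LLaDOU actually uses. See the maple-research-lab/LLaDOU repository for the real inference code.

```python
import numpy as np

MASK = -1  # hypothetical mask-token id used only in this sketch


def causal_mask(n: int) -> np.ndarray:
    """Autoregressive (CoT-style) mask: position i may attend only to j <= i."""
    return np.tril(np.ones((n, n), dtype=bool))


def bidirectional_mask(n: int) -> np.ndarray:
    """Diffusion-style mask: every position may attend to every other position."""
    return np.ones((n, n), dtype=bool)


def toy_predict(tokens: np.ndarray, rng: np.random.Generator):
    """Hypothetical stand-in for the denoising model.

    Returns a proposed token id and a confidence score for every position.
    A real model would condition on `tokens`; this toy ignores them.
    """
    n = len(tokens)
    proposals = rng.integers(0, 100, size=n)  # ids are never equal to MASK
    scores = rng.random(n)
    return proposals, scores


def ordered_unmask(length: int, steps: int, seed: int = 0):
    """Start fully masked and reveal about length/steps tokens per step.

    At each step, the masked positions with the highest scores are unmasked,
    so the revealed positions need not be contiguous or left to right.
    Returns the final tokens and the positions unmasked at each step.
    """
    rng = np.random.default_rng(seed)
    tokens = np.full(length, MASK)
    per_step = int(np.ceil(length / steps))
    order = []
    for _ in range(steps):
        masked = np.flatnonzero(tokens == MASK)
        if masked.size == 0:
            break
        proposals, scores = toy_predict(tokens, rng)
        k = min(per_step, masked.size)
        # Greedy top-k over masked positions only (simplified ordering rule).
        chosen = masked[np.argsort(-scores[masked])[:k]]
        tokens[chosen] = proposals[chosen]
        order.append(sorted(chosen.tolist()))
    return tokens, order


tokens, order = ordered_unmask(length=8, steps=4)
for step, positions in enumerate(order, 1):
    print(f"step {step}: unmasked positions {positions}")
```

Because the mask is bidirectional, a position revealed late can still be conditioned on tokens to its right. In a causal model, that information is unavailable.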

Instructions

LLaDOU-v0-Code is a code-specific model trained on a subset of KodCode-V1-SFT-R1.

For inference code and detailed instructions, please refer to our GitHub repository: maple-research-lab/LLaDOU.


Base model

LLaDOU-v0-Code is built on GSAI-ML/LLaDA-8B-Instruct.