Flex.2-preview

Open Source 8B parameter Text to Image Diffusion Model with universal control and inpainting support built in. Early access preview release. The next version of Flex.1-alpha

Features

- 8 billion parameters

- Guidance embedder (2x as fast to generate)

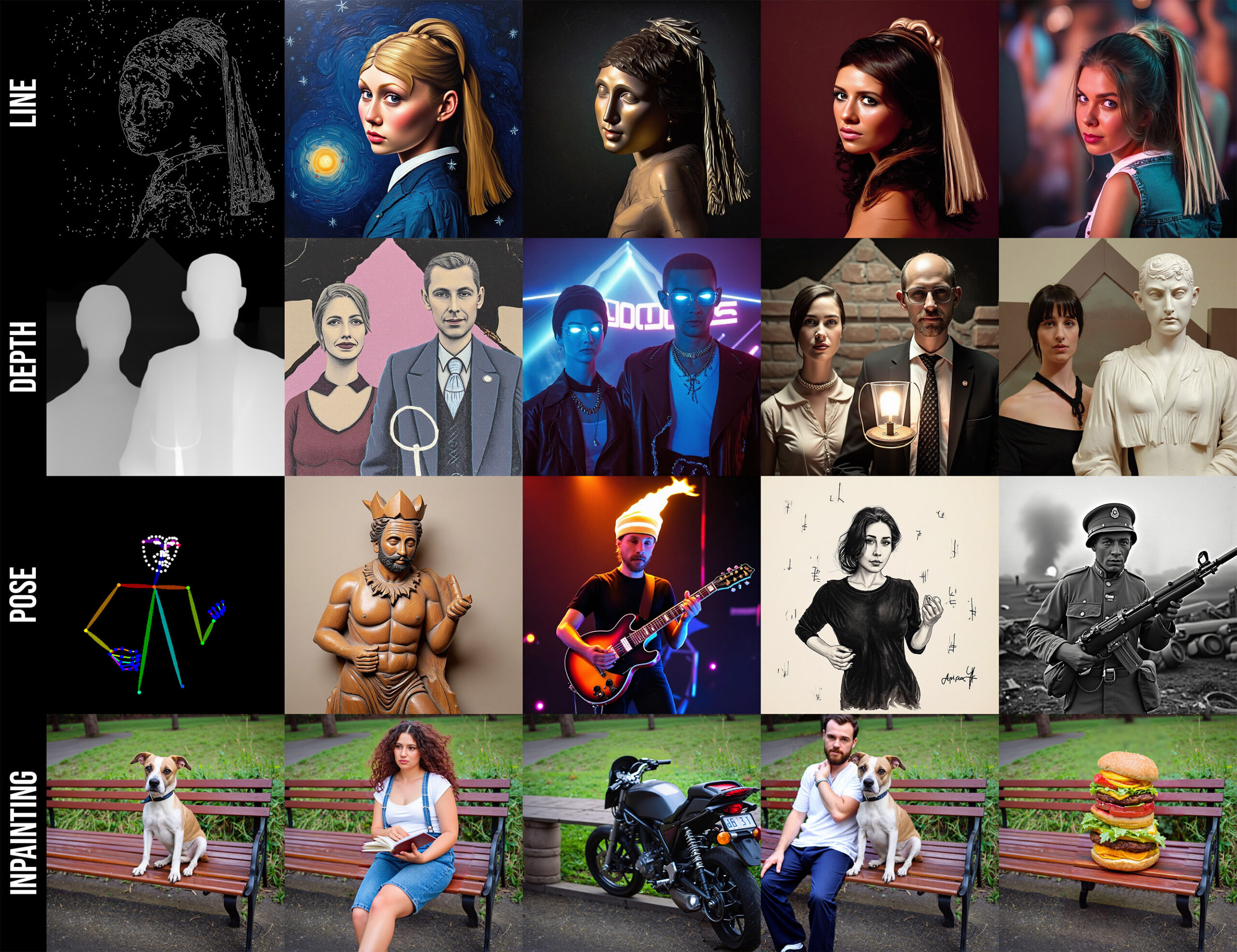

- Built in inpainting

- Universal control input (line, pose, depth)

- Fine tunable

- OSI compliant license (Apache 2.0)

- 512 token length input

- 16 channel latent space

- Made by the community, for the community

About

Flex.2 is currently the most flexable text to image diffusion model released, making it truely live up to its name. It has taken a lot to get here:

Flux.1 Schnell -> OpenFlux.1 -> Flex.1-alpha -> Flex.2-preview.

Numerous improvements have been made with every step, but Flex.2 is the biggest step so far, and best of all, it was just trained by a some guy who relies 100% on community support to make a living and fund the outrageous compute cost needed to keep training models like this. Speaking of which, and since you brought it up, not me, I am always in need of support. Everything I create is free and open, with permissive licenses. So if you find my work beneficial, or use it in a commercial setting, please consider contributing to my continued desire to live and develop more open source projects and models. Visit the Support section below to find out how you can help out and see the awesome people who already do.

Flex.2 preview is an early release to get feedback on the new features and to encourage experimentation and tooling. I would love to hear suggestions as well as be made aware of weak points so I can address them while training continues. Flex.2 is a continuation of Flex.1-alpha, with a lot of goodies thrown in. The most important new features and improvements over Flex.1-alpha are:

- Inpainting: Flex.2 as built in inpainting support trained into the base model.

- Universal Control: It has a universal control input that has been trained to accept pose, line, and depth inputs.

I wanted to put all the tools I use and love straight into the base model so one model is all you need to empower creativity far beyond what a simple text to image model could ever do on its own.

Usage

Support His Work

If you enjoy my projects or use them commercially, please consider sponsoring me. Every bit helps! 💖

- Downloads last month

- 17