
---
license: apache-2.0
base_model:
- google/siglip2-base-patch32-256
datasets:
- strangerguardhf/DSE1
language:
- en
pipeline_tag: image-classification
library_name: transformers
tags:
- siglip2
- '384'
- explicit-content
- adult-content
- classification
---

siglip2-x256p32-explicit-content

siglip2-x256p32-explicit-content is fine-tuned from the SigLIP 2 vision-language encoder siglip2-base-patch32-256 for multi-class (five-class) image classification. It uses the SiglipForImageClassification architecture and is designed to detect and categorize visual content ranging from safe to explicit. This makes it well suited to content moderation and media filtering.

SigLIP 2: Multilingual Vision-Language Encoders with Improved Semantic Understanding, Localization, and Dense Features https://arxiv.org/pdf/2502.14786

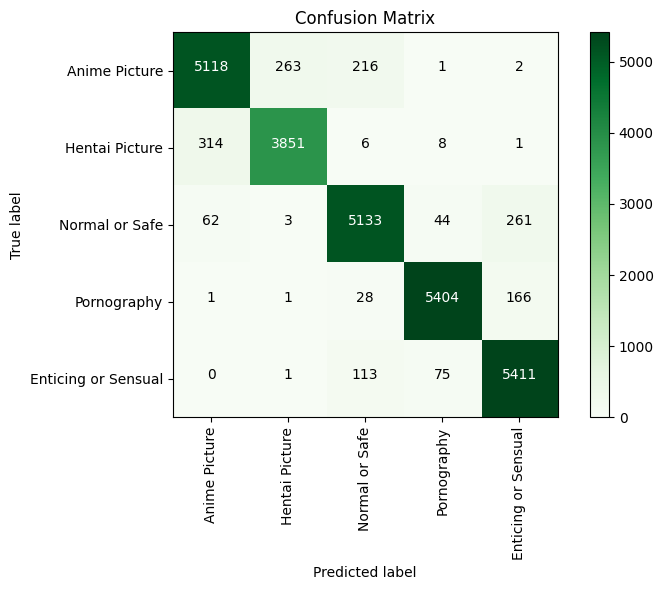

Classification Report:

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| Anime Picture | 0.9314 | 0.9139 | 0.9226 | 5600 |
| Hentai Picture | 0.9349 | 0.9213 | 0.9281 | 4180 |
| Normal or Safe | 0.9340 | 0.9328 | 0.9334 | 5503 |
| Pornography | 0.9769 | 0.9650 | 0.9709 | 5600 |
| Enticing or Sensual | 0.9264 | 0.9663 | 0.9459 | 5600 |
| Accuracy | | | 0.9409 | 26483 |
| Macro avg | 0.9407 | 0.9398 | 0.9402 | 26483 |
| Weighted avg | 0.9410 | 0.9409 | 0.9408 | 26483 |
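The last two rows of the report follow directly from the per-class rows. The macro average is the unweighted mean over the five classes, and the weighted average weights each class by its support. The Python sketch below recomputes both from the rounded per-class values in the table. The names `REPORT` and `averages` are illustrative and are not part of any library. Because the inputs are already rounded, the results match the reported averages only to within about ±0.0001.

The sketch also shows why the weighted-average recall equals the overall accuracy. Weighting each class's recall by its support adds up the correctly classified images, and dividing by the total support then gives the accuracy.

```python
# Per-class (precision, recall, f1, support), copied from the report above.
REPORT = {
    "Anime Picture": (0.9314, 0.9139, 0.9226, 5600),
    "Hentai Picture": (0.9349, 0.9213, 0.9281, 4180),
    "Normal or Safe": (0.9340, 0.9328, 0.9334, 5503),
    "Pornography": (0.9769, 0.9650, 0.9709, 5600),
    "Enticing or Sensual": (0.9264, 0.9663, 0.9459, 5600),
}


def averages(report):
    """Return the macro (unweighted) means, support-weighted means, and total support."""
    rows = list(report.values())
    total = sum(r[3] for r in rows)
    names = ("precision", "recall", "f1")
    macro = {n: sum(r[i] for r in rows) / len(rows) for i, n in enumerate(names)}
    weighted = {n: sum(r[i] * r[3] for r in rows) / total for i, n in enumerate(names)}
    return macro, weighted, total


macro, weighted, total = averages(REPORT)
print(total)  # 26483
print({k: round(v, 4) for k, v in macro.items()})
print({k: round(v, 4) for k, v in weighted.items()})
# Weighted recall = sum(correct_i) / total, i.e. the overall accuracy.
print(round(weighted["recall"], 4))
```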

Label Space: 5 Classes

This model classifies each image into one of the following content types:

Class 0: "Anime Picture"

Class 1: "Hentai Picture"

Class 2: "Normal or Safe"

Class 3: "Pornography"

Class 4: "Enticing or Sensual"
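The model's logits are ordered by class index, so position i of the output corresponds to Class i above. Below is a minimal Python sketch of that mapping, together with a helper that ranks scores by class. The names `ID2LABEL`, `LABEL2ID`, and `top_k` are hypothetical. The sketch uses integer keys, whereas the inference code uses string keys and looks them up with `str(i)`; the two approaches are equivalent. If the uploaded config stores the same labels, the loaded model's `model.config.id2label` should provide this mapping without hardcoding it.

```python
# Class index -> label, in the order listed in this model card (hypothetical constant name).
ID2LABEL = {
    0: "Anime Picture",
    1: "Hentai Picture",
    2: "Normal or Safe",
    3: "Pornography",
    4: "Enticing or Sensual",
}
# Reverse lookup: label -> class index.
LABEL2ID = {label: idx for idx, label in ID2LABEL.items()}


def top_k(scores, k=1):
    """Return the k (label, score) pairs with the highest scores.

    `scores` is a length-5 sequence indexed by class id (logits or probabilities).
    """
    if len(scores) != len(ID2LABEL):
        raise ValueError(f"expected {len(ID2LABEL)} scores, got {len(scores)}")
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [(ID2LABEL[i], scores[i]) for i in ranked[:k]]


print(top_k([0.05, 0.02, 0.80, 0.03, 0.10], k=2))
# [('Normal or Safe', 0.8), ('Enticing or Sensual', 0.1)]
```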

Install Dependencies

pip install -q transformers torch pillow gradio

Inference Code

import gradio as gr
import torch
from PIL import Image
from transformers import AutoImageProcessor, SiglipForImageClassification

# Load the fine-tuned model and its matching image processor
model_name = "prithivMLmods/siglip2-x256p32-explicit-content"  # Replace with your HF model path if needed
model = SiglipForImageClassification.from_pretrained(model_name)
processor = AutoImageProcessor.from_pretrained(model_name)

# Class index -> label mapping (string keys, looked up with str(i) below)
id2label = {
    "0": "Anime Picture",
    "1": "Hentai Picture",
    "2": "Normal or Safe",
    "3": "Pornography",
    "4": "Enticing or Sensual"
}

def classify_explicit_content(image):
    # Gradio passes the upload as a NumPy array; convert it to an RGB PIL image
    image = Image.fromarray(image).convert("RGB")
    inputs = processor(images=image, return_tensors="pt")

    # Inference only, so gradient tracking is disabled
    with torch.no_grad():
        outputs = model(**inputs)
        logits = outputs.logits

    # Softmax over the class dimension turns the 5 logits into probabilities
    probs = torch.nn.functional.softmax(logits, dim=1).squeeze().tolist()
    prediction = {
        id2label[str(i)]: round(probs[i], 3) for i in range(len(probs))
    }
    return prediction

# Gradio interface
iface = gr.Interface(
    fn=classify_explicit_content,
    inputs=gr.Image(type="numpy"),
    outputs=gr.Label(num_top_classes=5, label="Predicted Content Type"),
    title="siglip2-x256p32-explicit-content",
    description="Classifies images as Anime, Hentai, Pornography, Enticing, or Safe for use in moderation systems."
)

if __name__ == "__main__":
    iface.launch()
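The post-processing step in `classify_explicit_content` applies a softmax over the five logits and rounds each probability to three decimals. The dependency-free sketch below reproduces that step in plain Python, so it can be checked without loading the model. `logits_to_prediction` and `LABELS` are hypothetical names, and the logits passed in are made-up values, not real model output. Subtracting the maximum logit before exponentiating leaves the result unchanged but prevents `math.exp` from overflowing on large logits.

```python
import math

# Labels in class-index order, as listed in this model card.
LABELS = ["Anime Picture", "Hentai Picture", "Normal or Safe",
          "Pornography", "Enticing or Sensual"]


def logits_to_prediction(logits, decimals=3):
    """Softmax one row of logits and return {label: probability}, rounded like the demo."""
    m = max(logits)  # shift by the max so exp() cannot overflow
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return {LABELS[i]: round(e / total, decimals) for i, e in enumerate(exps)}


# Made-up logits for illustration only.
print(logits_to_prediction([0.0, 0.0, 0.0, 0.0, 0.0]))  # every class gets 0.2
print(logits_to_prediction([1000.0, 0.0, 0.0, 0.0, 0.0]))  # Anime Picture -> 1.0, all others -> 0.0
```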

Intended Use

This model is intended for applications such as:

- AI-Powered Content Moderation

- NSFW and Explicit Media Detection

- Content Filtering in Social Media Platforms

- Image Dataset Cleaning & Annotation

- Parental Control Solutions
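In moderation and filtering pipelines, the five probabilities usually have to be collapsed into a single decision. The sketch below shows one hypothetical way to do that. It groups "Hentai Picture" and "Pornography" as blockable, treats "Enticing or Sensual" as needing human review, and allows the remaining classes. The grouping, the function name `moderation_decision`, and the threshold values are all assumptions for illustration, not settings validated for this model, and they should be tuned against your own data and policy. The input is a `{label: probability}` dict of the kind returned by `classify_explicit_content`.

```python
# Hypothetical grouping of the five classes; adjust to your own policy.
BLOCK = {"Hentai Picture", "Pornography"}
REVIEW = {"Enticing or Sensual"}
# "Anime Picture" and "Normal or Safe" count toward "allow".


def moderation_decision(probs, block_threshold=0.5, review_threshold=0.3):
    """Map a {label: probability} dict to 'block', 'review', or 'allow'.

    Threshold defaults are illustrative placeholders, not tuned values.
    """
    p_block = sum(probs.get(label, 0.0) for label in BLOCK)
    p_review = sum(probs.get(label, 0.0) for label in REVIEW)
    if p_block >= block_threshold:
        return "block"
    # Send borderline images (some explicit or sensual mass) to a human reviewer.
    if p_block + p_review >= review_threshold:
        return "review"
    return "allow"


print(moderation_decision({"Normal or Safe": 0.9, "Anime Picture": 0.05,
                           "Pornography": 0.03, "Hentai Picture": 0.01,
                           "Enticing or Sensual": 0.01}))  # allow
print(moderation_decision({"Pornography": 0.7, "Normal or Safe": 0.3}))  # block
print(moderation_decision({"Enticing or Sensual": 0.4, "Normal or Safe": 0.6}))  # review
```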