Spaces:

Running

A newer version of the Gradio SDK is available:

5.16.0

title: SmolLM2-135M

emoji: 🚀

colorFrom: purple

colorTo: pink

sdk: gradio

sdk_version: 5.13.1

app_file: app.py

pinned: false

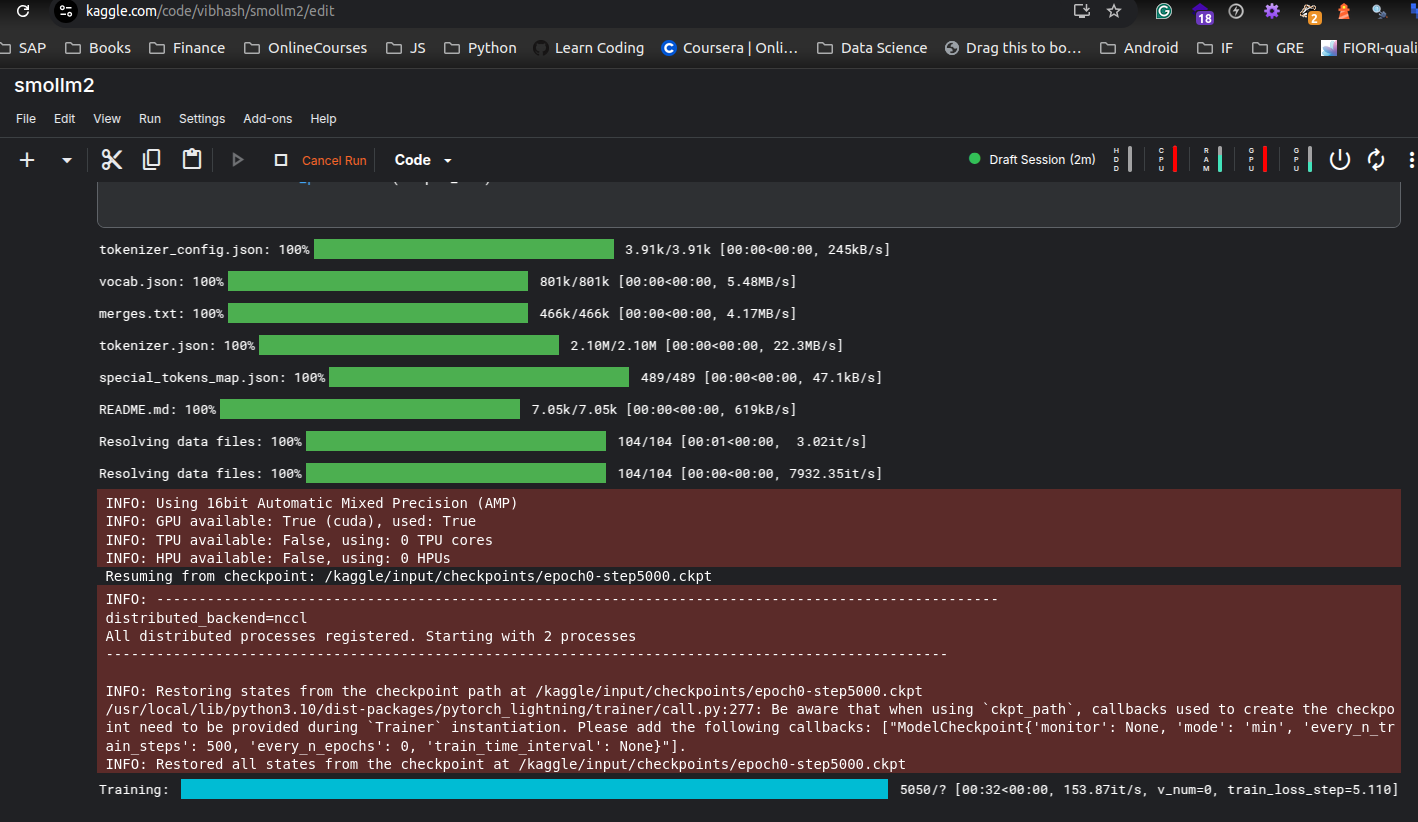

training restarting from step 5000

uv venv

source .venv/bin/activate

use dataset from https://huggingface.co/datasets/HuggingFaceTB/smollm-corpus/tree/main/cosmopedia-v2

dataset = load_dataset("HuggingFaceTB/smollm-corpus", "cosmopedia-v2")

use tokeniser from https://huggingface.co/HuggingFaceTB/cosmo2-tokenizer

tokenizer = AutoTokenizer.from_pretrained("HuggingFaceTB/cosmo2-tokenizer")

use config from https://huggingface.co/HuggingFaceTB/SmolLM2-135M/blob/main/config_smollm2_135M.yaml

https://github.com/huggingface/smollm/blob/main/pre-training/smollm2/config_smollm2_135M.yaml

create model from above parameters

Use it for training using pytorch lightning

LlamaForCausalLM( (model): LlamaModel( (embed_tokens): Embedding(49152, 576) (layers): ModuleList( (0-29): 30 x LlamaDecoderLayer( (self_attn): LlamaAttention( (q_proj): Linear(in_features=576, out_features=576, bias=False) (k_proj): Linear(in_features=576, out_features=192, bias=False) (v_proj): Linear(in_features=576, out_features=192, bias=False) (o_proj): Linear(in_features=576, out_features=576, bias=False) ) (mlp): LlamaMLP( (gate_proj): Linear(in_features=576, out_features=1536, bias=False) (up_proj): Linear(in_features=576, out_features=1536, bias=False) (down_proj): Linear(in_features=1536, out_features=576, bias=False) (act_fn): SiLU() ) (input_layernorm): LlamaRMSNorm((576,), eps=1e-05) (post_attention_layernorm): LlamaRMSNorm((576,), eps=1e-05) ) ) (norm): LlamaRMSNorm((576,), eps=1e-05) (rotary_emb): LlamaRotaryEmbedding() ) (lm_head): Linear(in_features=576, out_features=49152, bias=False) )