dynamic quants

seems like @Fizzarolli and @bartowski are unhappy about dynamic quants, any comment from the unsloth team on what is going on? thanks

this is extremely inappropriate to share publicly.. this is me shooting the shit with people i'm close to and talking way more than i would ever consider sharing publicly, there's a reason i have not commented publicly

and i'm being needlessly antagonistic with these people in a way i would never consider to be in real life, taken completely out of context

if i have something to say publicly i'll say it publicly

I have a ton of respect for the unsloth team and have expressed that on many occasions, I have also been somewhat public with the fact that I don't love the vibe behind "dynamic ggufs" but because i don't have any evidence to state one way or the other what's better, I have been silent about it unless people ask me directly, and I have had discussions about it with those people, and I have been working on finding out the true answer behind it all

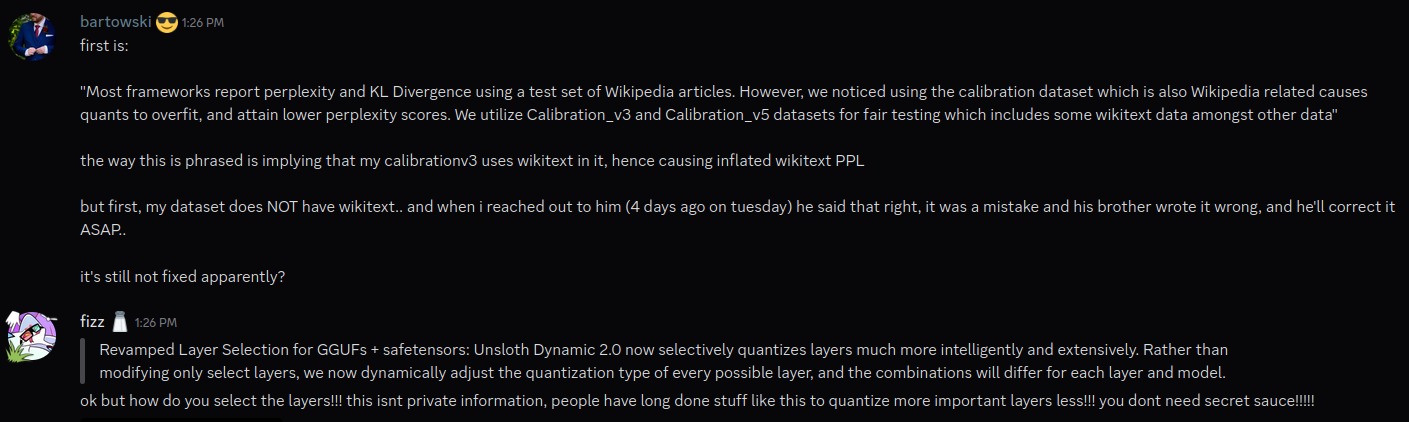

If anyone is rubber necking at this strange post, here are a couple public data points of which I'm aware for you to do your own research and come to your own conclusions:

- [r/LocalLLaMA post with discussions on PPL, KLD, and benchmarking various GGUF quants for Qwen3-30B-A3B MoE](https://www.reddit.com/r/LocalLLaMA/comments/1kcp34g/ubergarmqwen330ba3bgguf_1600_toksec_pp_105_toksec/

- ik_llama.cpp github discussions on GGUF quants for Qwen3-30B-A3B Moe

I have no clue who @lucyknada is and why they decided to open the conversation in such a way. They seem to have lot's of exl2 quants which is cool, I've heard those are fast if you have enough VRAM for all GPU inferencing.

Anyway, rummaging through posts and comments across half a dozen social media and code repos is an interesting way to do research in 2025 lol... Cheers and much love to each of you, even you @llama-anon ! (i reported you btw in case you're wondering who did. i'm an american white dude with a lot of privilege so please direct any spite and ire at me and not marginalized groups. cheers!)

Hi there guys, thank you for being very passionate about opensource we appreciate it.

Let's keep focusing on making opensource positive and keep going forward!

Hope you guys have a great Friday and weekend

@bartowski No worries!

But to address some of the issues, since people have asked as well:

- Actually I did open source the dynamic quants code at https://github.com/unslothai/llama.cpp - I'm more than happy for anyone to utilize it! I already contribute sometimes to mainline llama.cpp (llama 4 bug fixes, gemma bug fixes etc), but I wasn't sure if making a gigantic PR at the start was a good idea since it was more trial and error on the selection of which layers to quantize.

- In regards to calibration v3 and v5 - notice the blog is incorrect - I tested wikitext train, v3 and v5 - so it's mis-communication saying how v3 has wikitext - I do know the original intention of v3 / v5 at https://github.com/ggml-org/llama.cpp/discussions/5263 was to reduce the FLOPs necessary to compute imatrix vs doing a full run over the full wikitext train dataset.

- In regards to PPL and KLD - yes KLD is better - but using our imatrix for these numbers is not correct - I used the chat template of the model itself and run imatrix on approx 6K to 12K context lengths, whilst I think the norm is to use 512 context length - comparing our imatrix is now not apples to apples anymore.

- And on evidence of benchmarks - https://unsloth.ai/blog/dynamic-v2 and https://docs.unsloth.ai/basics/unsloth-dynamic-2.0-ggufs have tables on KLD, PPL, disk space, and MMLU, and are all apples to apples - the tables are for calibration v3, 512 context length, so it's definitely not snake oil :) - Our -unsloth-bnb-4bit quants for eg are benchmarked quite extensively for example, just GGUFs are more new.

Overall 100% I respect the work you do @bartowski - I congratulate you all the time and tell people to utilize your quants :) Also great work @ubergarm as usual - I'm always excited about your releases! I also respect all the work K does at ik_llama.cpp as well.

The dynamic quant idea was actually from https://unsloth.ai/blog/dynamic-4bit - around last December for finetuning I noticed quantizing everything to 4bit was incorrect, for eg see Qwen error plots:

And our dynamic bnb 4bit quants for Phi beating other non dynamic quants on HF leaderboard:

And yes the 1.58bit DeepSeek R1 quants was probably what made the name stick https://unsloth.ai/blog/deepseekr1-dynamic

To be honest, I didn't expect it to take off, and I'm still learning things along the way - I'm always more than happy to collaborate on anything and I always respect everything you do @bartowski and everyone! I don't mind all the drama - we're all human so it's fine :) If there are ways for me to improve, I'll always try my best to!

But I guess overall I think it's actually the multiple bug fixes to models that actually increased accuracy the most:

- Phi-4 for eg had chat template problems which I helped fix (wrong BOS). Also llamafying it increased acc.

- Gemma 1 and Gemma 2 bug fixes I did way back improved accuracy by quite a bit. See https://x.com/danielhanchen/status/1765446273661075609

- Llama 3 chat template fixes as well

- Llama 4 bug fixes - see https://github.com/huggingface/transformers/pull/37418/files, https://github.com/ggml-org/llama.cpp/pull/12889

- Generic RoPE fix for all models - see https://github.com/huggingface/transformers/pull/29285

And a whole plethora of other model bug fixes - tbh I would say these are probably much more statistically significant than trying to squeeze every bit of performance via new quant schemes :)

But anyways again I respect the work everyone does, and I don't mind all the drama :)

Oh I noticed the thread was closed - more than happy for extra comments etc :)

Edit: any comments causing more drama will have to be deleted.

@danielhanchen Can you tell me how to use your dynamic quantization code? It seems to differ from the upstream only by one revert commit, and I'm not clear on how to use it

@EntropyYue Oh ye - sadly llama.cpp actually today broke my code :( I had to revert all changes and update from mainline - if you look at past commits then the code should still be there