GPT-2 Large RLHF Model for OpenAI TLDR Summarization

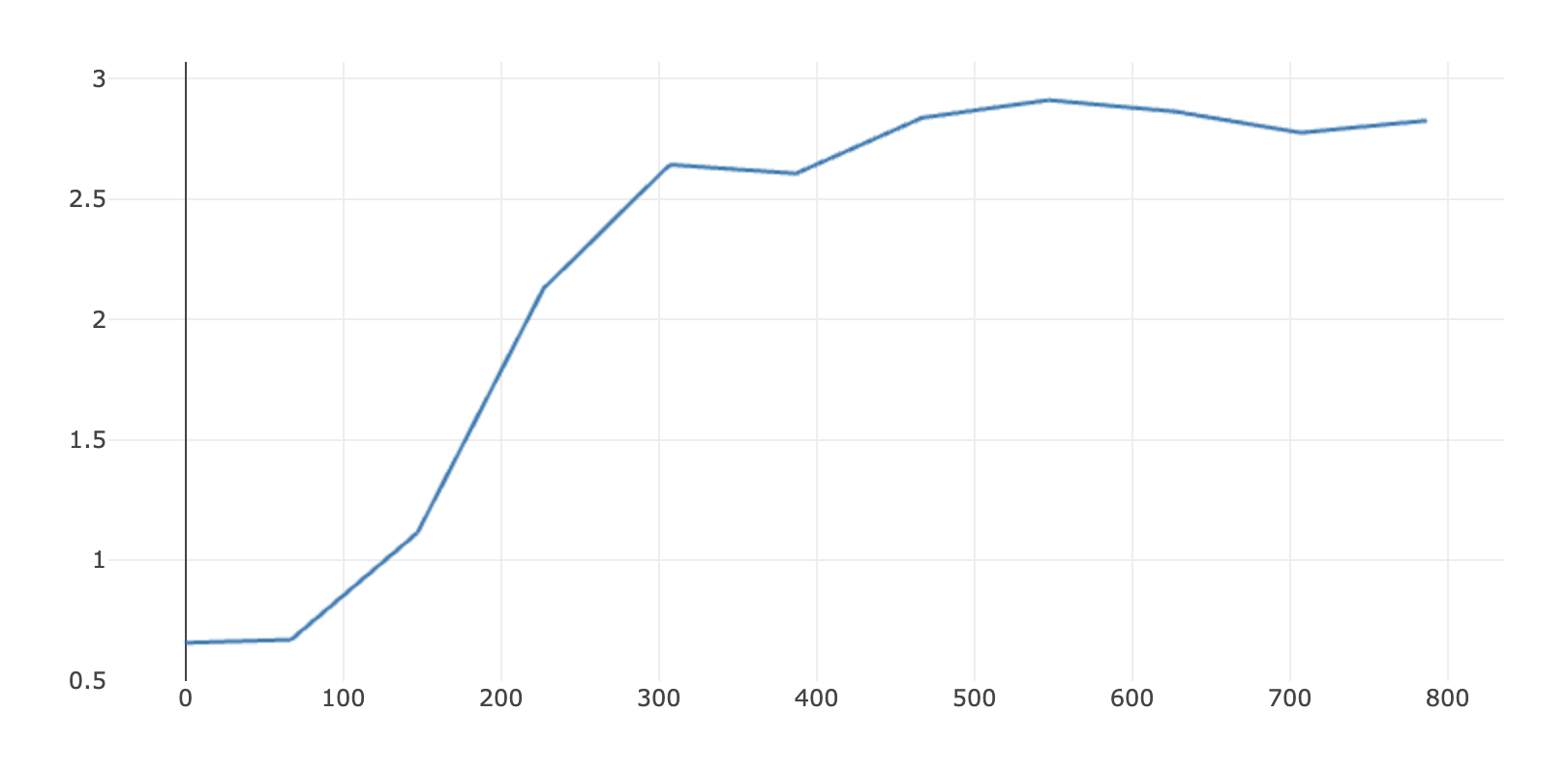

This model starts from the SFT model ellipseai/gpt2-large-tldr-sum and applies RLHF training for better alignment with human preferences. The training curve of the validation reward is shown in the figure above.
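A minimal sketch of how the RLHF checkpoint might be loaded for summarization with the `transformers` library. The prompt format (post text followed by a `TL;DR:` cue) and the helper names `build_prompt` and `summarize` are assumptions for illustration and are not specified by this model card; check the SFT model's training format before relying on them.

```python
def build_prompt(post: str) -> str:
    """Append a TL;DR cue to the post (assumed format, not confirmed by this card)."""
    return post.strip() + "\nTL;DR:"


def summarize(
    post: str,
    model_id: str = "ellipseai/gpt2-large-tldr-sum-rlhf",
    max_new_tokens: int = 50,
) -> str:
    """Generate a summary with greedy decoding (hypothetical helper)."""
    # Imported lazily so build_prompt can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    inputs = tokenizer(build_prompt(post), return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,
        # GPT-2 has no pad token, so reuse the end-of-sequence token.
        pad_token_id=tokenizer.eos_token_id,
    )
    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()
```

Calling `summarize(post_text)` downloads the weights on first use. GPT-2 has a 1024-token context window, so very long posts would need to be shortened before the `TL;DR:` cue is appended.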

We evaluate the SFT model and the RL model on 386 test-set examples, using Claude-v2 to judge which summary is better. The RL model wins far more often than the SFT model (151 wins vs. 24, with 211 ties), which indicates that RL training is effective.

| model | win | loss | tie | win rate | loss rate | win rate adjusted |
|---|---|---|---|---|---|---|
| ellipseai/gpt2-large-tldr-sum | 24 | 151 | 211 | 0.0622 | 0.3911 | 33.55% |
| ellipseai/gpt2-large-tldr-sum-rlhf | 151 | 24 | 211 | 0.3911 | 0.0622 | 66.45% |
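The card does not state how the adjusted win rate is computed. As a sketch, counting each tie as half a win reproduces the reported 33.55% and 66.45%; this formula and the helper name `win_stats` are assumptions rather than the authors' documented method. The raw win and loss rates agree with the table to about three decimal places.

```python
def win_stats(win: int, loss: int, tie: int) -> dict:
    """Compute win/loss rates, with ties counted as half a win (assumed formula)."""
    total = win + loss + tie
    return {
        "total": total,
        "win_rate": win / total,
        "loss_rate": loss / total,
        "win_rate_adjusted": (win + 0.5 * tie) / total,
    }


# Counts taken from the table above.
sft = win_stats(win=24, loss=151, tie=211)
rl = win_stats(win=151, loss=24, tie=211)

for name, stats in [("SFT", sft), ("RLHF", rl)]:
    print(f"{name}: adjusted win rate = {stats['win_rate_adjusted']:.2%}")
```

Because every comparison is a win, a loss, or a tie, the two adjusted win rates sum to 100%.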
