anchor

stringlengths 1

23.8k

| positive

stringlengths 1

23.8k

| negative

stringlengths 1

31k

| anchor_status

stringclasses 3

values |

|---|---|---|---|

## Inspiration

We built an AI-powered physical trainer/therapist that provides real-time feedback and companionship as you exercise.

With the rise of digitization, people are spending more time indoors, leading to increasing trends of obesity and inactivity. We wanted to make it easier for people to get into health and fitness, ultimately improving lives by combating these the downsides of these trends. Our team built an AI-powered personal trainer that provides real-time feedback on exercise form using computer vision to analyze body movements. By leveraging state-of-the-art technologies, we aim to bring accessible and personalized fitness coaching to those who might feel isolated or have busy schedules, encouraging a more active lifestyle where it can otherwise be intimidating.

## What it does

Our AI personal trainer is a web application compatible with laptops equipped with webcams, designed to lower the barriers to fitness. When a user performs an exercise, the AI analyzes their movements in real-time using a pre-trained deep learning model. It provides immediate feedback in both textual and visual formats, correcting form and offering tips for improvement. The system tracks progress over time, offering personalized workout recommendations and gradually increasing difficulty based on performance. With voice guidance included, users receive tailored fitness coaching from anywhere, empowering them to stay consistent in their journey and helping to combat inactivity and lower the barriers of entry to the great world of fitness.

## How we built it

To create a solution that makes fitness more approachable, we focused on three main components:

Computer Vision Model: We utilized MediaPipe and its Pose Landmarks to detect and analyze users' body movements during exercises. MediaPipe's lightweight framework allowed us to efficiently assess posture and angles in real-time, which is crucial for providing immediate form correction and ensuring effective workouts.

Audio Interface: We initially planned to integrate OpenAI’s real-time API for seamless text-to-speech and speech-to-text capabilities, enhancing user interaction. However, due to time constraints with the newly released documentation, we implemented a hybrid solution using the Vosk API for speech recognition. While this approach introduced slightly higher latency, it enabled us to provide real-time auditory feedback, making the experience more engaging and accessible.

User Interface: The front end was built using React with JavaScript for a responsive and intuitive design. The backend, developed in Flask with Python, manages communication between the AI model, audio interface, and user data. This setup allows the machine learning models to run efficiently, providing smooth real-time feedback without the need for powerful hardware.

On the user interface side, the front end was built using React with JavaScript for a responsive and intuitive design. The backend, developed in Flask with Python, handles the communication between the AI model, audio interface, and the user's data.

## Challenges we ran into

One of the major challenges was integrating the real-time audio interface. We initially planned to use OpenAI’s real-time API, but due to the recent release of the documentation, we didn’t have enough time to fully implement it. This led us to use the Vosk API in conjunction with our system, which introduced increased codebase complexity in handling real-time feedback.

## Accomplishments that we're proud of

We're proud to have developed a functional AI personal trainer that combines computer vision and audio feedback to lower the barriers to fitness. Despite technical hurdles, we created a platform that can help people improve their health by making professional fitness guidance more accessible. Our application runs smoothly on various devices, making it easier for people to incorporate exercise into their daily lives and address the challenges of obesity and inactivity.

## What we learned

Through this project, we learned that sometimes you need to take a "back door" approach when the original plan doesn’t go as expected. Our experience with OpenAI’s real-time API taught us that even with exciting new technologies, there can be limitations or time constraints that require alternative solutions. In this case, we had to pivot to using the Vosk API alongside our real-time system, which, while not ideal, allowed us to continue forward. This experience reinforced the importance of flexibility and problem-solving when working on complex, innovative projects.

## What's next for AI Personal Trainer

Looking ahead, we plan to push the limits of the OpenAI real-time API to enhance performance and reduce latency, further improving the user experience. We aim to expand our exercise library and refine our feedback mechanisms to cater to users of all fitness levels. Developing a mobile app is also on our roadmap, increasing accessibility and convenience. Ultimately, we hope to collaborate with fitness professionals to validate and enhance our AI personal trainer, making it a reliable tool that encourages more people to lead healthier, active lives. | ## Inspiration

With more people working at home due to the pandemic, we felt empowered to improve healthcare at an individual level. Existing solutions for posture detection are expensive, lack cross-platform support, and often require additional device purchases. We sought to remedy these issues by creating Upright.

## What it does

Upright uses your laptop's camera to analyze and help you improve your posture. Register and calibrate the system in less than two minutes, and simply keep Upright open in the background and continue working. Upright will notify you if you begin to slouch so you can correct it. Upright also has the Upright companion iOS app to view your daily metrics.

Some notable features include:

* Smart slouch detection with ML

* Little overhead - get started in < 2 min

* Native notifications on any platform

* Progress tracking with an iOS companion app

## How we built it

We created Upright’s desktop app using Electron.js, an npm package used to develop cross-platform apps. We created the individual pages for the app using HTML, CSS, and client-side JavaScript. For the onboarding screens, users fill out an HTML form which signs them in using Firebase Authentication and uploads information such as their name and preferences to Firestore. This data is also persisted locally using NeDB, a local JavaScript database. The menu bar addition incorporates a camera through a MediaDevices web API, which gives us frames of the user’s posture. Using Tensorflow’s PoseNet model, we analyzed these frames to determine if the user is slouching and if so, by how much. The app sends a desktop notification to alert the user about their posture and also uploads this data to Firestore. Lastly, our SwiftUI-based iOS app pulls this data to display metrics and graphs for the user about their posture over time.

## Challenges we ran into

We faced difficulties when managing data throughout the platform, from the desktop app backend to the frontend pages to the iOS app. As this was our first time using Electron, our team spent a lot of time discovering ways to pass data safely and efficiently, discussing the pros and cons of different solutions. Another significant challenge was performing the machine learning on the video frames. The task of taking in a stream of camera frames and outputting them into slouching percentage values was quite demanding, but we were able to overcome several bugs and obstacles along the way to create the final product.

## Accomplishments that we're proud of

We’re proud that we’ve come up with a seamless and beautiful design that takes less than a minute to setup. The slouch detection model is also pretty accurate, something that we’re pretty proud of. Overall, we’ve built a robust system that we believe outperforms other solutions using just the webcamera of your computer, while also integrating features to track slouching data on your mobile device.

## What we learned

This project taught us how to combine multiple complicated moving pieces into one application. Specifically, we learned how to make a native desktop application with features like notifications built-in using Electron. We also learned how to connect our backend posture data with Firestore to relay information from our Electron application to our OS app. Lastly, we learned how to integrate a machine learning model in Tensorflow within our Electron application.

## What's next for Upright

The next step is improving the posture detection model with more training data, tailored for each user. While the posture detection model we currently use is pretty accurate, by using more custom-tailored training data, it would take Upright to the next level. Another step for Upright would be adding Android integration for our mobile app, which currently only supports iOS as of now. | # Inspiration 🌟

**What is the problem?**

Physical activity early on can drastically increase longevity and productivity for later stages of life. Without finding a dependable routine during your younger years, you may experience physical impairment in the future. 50% of functional decline that occurs in those 30 to 70 years old is due to lack of exercise.

During the peak of the COVID-19 pandemic in Canada, nationwide isolation brought everyone indoors. There was still a vast number of people that managed to work out in their homes, which motivated us to create an application that further encouraged engaging in fitness, using their devices, from the convenience of their homes.

# Webapp Summary 📜

Inspired, our team decided to tackle this idea by creating a web app that helps its users maintain a consistent and disciplined routine.

# What does it do? 💻

*my trAIner* plans to aid you and your journey to healthy fitness by displaying the number of calories you have burned while also counting your reps. It additionally helps to motivate you through words of encouragement. For example, whenever nearing a rep goal, *my trAIner* will use phrases like, “almost there!” or “keep going!” to push you to the last rep. Once completing your set goal *my trAIner* will congratulate you.

We hope that people may utilize this to make the best of their workouts. Utilizing AI technology to help those reach their rep standard and track calories, we believe could help students and adults in the present and future.

# How we built it:🛠

To build this application, we used **JavaScript, CSS,** and **HTML.** To make the body mapping technology, we used a **TensorFlow** library. We mapped out different joints on the body and compared them as they moved, in order to determine when an exercise was completed. We also included features like parallax scrolling and sound effects from DeltaHacks staff.

# Challenges that we ran into 🚫

Learning how to use **TensorFlow**’s pose detection proved to be a challenge, as well as integrating our own artwork into the parallax scrolling. We also had to refine our backend as the library’s detection was shaky at times. Additional challenges included cleanly linking **HTML, JS, and CSS** as well as managing the short amount of time we were given.

# Accomplishments that we’re proud of 🎊

We are proud that we put out a product with great visual aesthetics as well as a refined detection method. We’re also proud that we were able to take a difficult idea and prove to ourselves that we were capable of creating this project in a short amount of time. More than that though, we are most proud that we could make a web app that could help out people trying to be more healthy.

# What we learned 🍎

Not only did we develop our technical skills like web development and AI, but we also learned crucial things about planning, dividing work, and time management. We learned the importance of keeping organized with things like to-do lists and constantly communicating to see what each other’s limitations and abilities were. When challenges arose, we weren't afraid to delve into unknown territories.

# Future plans 📅

Due to time constraints, we were not able to completely actualize our ideas, however, we will continue growing and raising efficiency by giving ourselves more time to work on *my trAIner*. Potential future ideas to incorporate may include constructive form correction, calorie intake calculator, meal preps, goal setting, recommended workouts based on BMI, and much more. We hope to keep on learning and applying newly obtained concepts to *my trAIner*. | winning |

## Inspiration

Minecraft has an interesting map mechanic where your character holds a map which "draws itself" while exploring the world. I am also very interested in building a plotter, which is a printer that uses a pen and (XY) gantry to produce images. These ideas seemed to fit together quite well.

## What it does

Press a button, copy GPS coordinates and run the custom "gcode" compiler to generate machine/motor driving code for the arduino. Wait around 15 minutes for a 48 x 48 output.

## How we built it

Mechanical assembly - Tore apart 3 dvd drives and extracted a multitude of components, including sled motors (linear rails). Unfortunately, they used limit switch + DC motor rather than stepper, so I had to saw apart the enclosure and **glue** in my own steppers with a gear which (you guessed it) was also glued to the motor shaft.

Electronics - I designed a simple algorithm to walk through an image matrix and translate it into motor code, that looks a lot like a video game control. Indeed, the stepperboi/autostepperboi main source code has utilities to manually control all three axes like a tiny claw machine :)

U - Pen Up

D - Pen Down

L - Pen Left

R - Pen Right

Y/T - Pen Forward (top)

B - Pen Backwards (bottom)

Z - zero the calibration

O - returned to previous zeroed position

## Challenges we ran into

* I have no idea about basic mechanics / manufacturing so it's pretty slipshod, the fractional resolution I managed to extract is impressive in its own right

* Designing my own 'gcode' simplification was a little complicated, and produces strange, pointillist results. I like it though.

## Accomplishments that we're proud of

* 24 hours and a pretty small cost in parts to make a functioning plotter!

* Connected to mapbox api and did image processing quite successfully, including machine code generation / interpretation

## What we learned

* You don't need to take MIE243 to do low precision work, all you need is superglue, a glue gun and a dream

* GPS modules are finnicky and need to be somewhat near to a window with built in antenna

* Vectorizing an image is quite a complex problem

* Mechanical engineering is difficult

* Steppers are *extremely* precise, and I am quite surprised at the output quality given that it's barely held together.

* Iteration for mechanical structure is possible, but difficult

* How to use rotary tool and not amputate fingers

* How to remove superglue from skin (lol)

## What's next for Cartoboy

* Compacting the design it so it can fit in a smaller profile, and work more like a polaroid camera as intended. (Maybe I will learn solidworks one of these days)

* Improving the gcode algorithm / tapping into existing gcode standard | ## Inspiration

Having previously volunteered and worked with children with cerebral palsy, we were struck with the monotony and inaccessibility of traditional physiotherapy. We came up with a cheaper, more portable, and more engaging way to deliver treatment by creating virtual reality games geared towards 12-15 year olds. We targeted this age group because puberty is a crucial period for retention of plasticity in a child's limbs. We implemented interactive games in VR using Oculus' Rift and Leap motion's controllers.

## What it does

We designed games that targeted specific hand/elbow/shoulder gestures and used a leap motion controller to track the gestures. Our system improves motor skill, cognitive abilities, emotional growth and social skills of children affected by cerebral palsy.

## How we built it

Our games use of leap-motion's hand-tracking technology and the Oculus' immersive system to deliver engaging, exciting, physiotherapy sessions that patients will look forward to playing. These games were created using Unity and C#, and could be played using an Oculus Rift with a Leap Motion controller mounted on top. We also used an Alienware computer with a dedicated graphics card to run the Oculus.

## Challenges we ran into

The biggest challenge we ran into was getting the Oculus running. None of our computers had the ports and the capabilities needed to run the Oculus because it needed so much power. Thankfully we were able to acquire an appropriate laptop through MLH, but the Alienware computer we got was locked out of windows. We then spent the first 6 hours re-installing windows and repairing the laptop, which was a challenge. We also faced difficulties programming the interactions between the hands and the objects in the games because it was our first time creating a VR game using Unity, leap motion controls, and Oculus Rift.

## Accomplishments that we're proud of

We were proud of our end result because it was our first time creating a VR game with an Oculus Rift and we were amazed by the user experience we were able to provide. Our games were really fun to play! It was intensely gratifying to see our games working, and to know that it would be able to help others!

## What we learned

This project gave us the opportunity to educate ourselves on the realities of not being able-bodied. We developed an appreciation for the struggles people living with cerebral palsy face, and also learned a lot of Unity.

## What's next for Alternative Physical Treatment

We will develop more advanced games involving a greater combination of hand and elbow gestures, and hopefully get testing in local rehabilitation hospitals. We also hope to integrate data recording and playback functions for treatment analysis.

## Business Model Canvas

<https://mcgill-my.sharepoint.com/:b:/g/personal/ion_banaru_mail_mcgill_ca/EYvNcH-mRI1Eo9bQFMoVu5sB7iIn1o7RXM_SoTUFdsPEdw?e=SWf6PO> | ## Inspiration

As a team, we were immediately intrigued by the creative freedom involved in building a ‘useless invention’ and inspiration was drawn from the ‘useless box’ that turns itself off. We thought ‘why not have it be a robot arm and give it an equally intriguing personality?’ and immediately got to work taking our own spin on the concept.

## What It Does

The robot has 3 servos that allow the robot to move with personality. Whenever the switch is pressed, the robot executes a sequence of actions in order to flick the switch and then shut down.

## How We Built It

We started by dividing tasks between members: the skeleton of the code, building the physical robot, and electronic components. A CAD model was drawn up to get a gauge for scale, and then it was right into cutting and glueing popsicle sticks. An Exacto blade was used to create holes in the base container for components to fit through to keep everything neat and compact. Simultaneously, as much of the code and electronic wiring was done to not waste time.

After the build was complete, a test code was run and highlighted areas that needed to be reinforced. While that was happening, calculations were being done to determine the locations the servo motors would need to reach in order to achieve our goal. Once a ‘default’ sequence was achieved, team members split to write 3 of our own sequences before converging to achieve the 5th and final sequence. After several tests were run and the code was tweaked, a demo video was filmed.

## Challenges We Ran Into

The design itself is rather rudimentary, being built out of a Tupperware container, popsicle sticks and various electronic components to create the features such as servo motors and a buzzer. Challenges consisted of working with materials as fickle as popsicle sticks – a decision driven mainly by the lack of realistic accessibility to 3D printers. The wood splintered and was weaker than expected, therefore creative design was necessary so that it held together.

Another challenge was the movement. Working with 3 servo motors proved difficult when assigning locations and movement sequences, but once we found a ‘default’ sequence that worked, the other following sequences slid into place. Unfortunately, our toils were not over as now the robot had to be able to push the switch, and initial force proved to be insufficient.

## Accomplishments That We’re Proud Of

About halfway through, while we were struggling with getting the movement to work, thoughts turned toward what we would do in different sequences. Out of inspiration from other activities occurring during the event, it was decided that we would add a musical element to our ‘useless machine’ in the form of a buzzer playing “Tequila” by The Champs. This was our easiest success despite involving transposing sheet music and changing rhythms until we found the desired effect.

We also got at least 3 sequences into the robot! That is more than we were expecting 12 hours into the build due to difficulties with programming the servos.

## What We Learned

When we assigned tasks, we all chose roles that we were not normally accustomed to. Our mechanical member worked heavily in software while another less familiar with design focused on the actual build. We all exchanged roles over the course of the project, but this rotation of focus allowed us to get the most out of the experience. You can do a lot with relatively few components; constraint leads to innovation.

## What’s Next for Little Dunce

So far, we have only built in the set of 5 sequences, but we want Little Dunce to have more of a personality and more varied and random reactions. As of now, it is a sequence of events, but we want Little Dunce to act randomly so that everyone can get a unique experience with the invention. We also want to add an RGB LED light for mood indication dependent on the sequence chosen. This would also serve as the “on/off” indicator since the initial proposal was to have a robot that goes to sleep. | winning |

## Inspiration

Sometimes, we all just have one of those days. Maybe you didn't make any plans and now you're sitting alone, eating lunch by yourself. Maybe it's a new semester of college in a new place, and you feel out of place and isolated. While the solution (make friends!) seems obvious enough, it's not always easy to approach people, and sometimes, we just don't have the energy to socialize on top of heavy course loads and extracurriculars.

## What it does

meet2eat is a website that makes it easy to spontaneously schedule a dinner with someone new. All you have to do is provide your name and when you're available, and meet2eat will randomly choose a dining hall setting and pair you with someone. Because meet2eat forgoes creating a profile, it's more convenient and places less social pressure on the user. Additionally, because meet2eat only lets users schedule a meal on the same day, meet2eat is very noncommittal. At its heart, meet2eat helps people across all years and backgrounds connect and hopefully, spark meaningful conversations and relationships.

meet2eat is not only for university students--it can also connect the elderly with university students for a meal, as loneliness is similarly a big issue for elderly people in nursing homes. University students can have a meal with the elderly and learn from them while earning service hours.

## How we built it

This web app was built using React as a front end and Flask as a back end.

## Challenges we ran into

We ran into some challenges with connecting front end with the back end, and using the REST API to transfer data between the two portions

## Accomplishments that we're proud of

Hashing out the structure, and learning new frameworks on the fly!

## What we learned

We learned how to leverage technology to help solve a growing problem on university campuses.

## What's next for meet2eat

Being able to provide seating options by including table reservations and live updates on seat availability. | ## Inspiration

We came up with the idea of Budget Bites when we faced the challenge of affording groceries and restaurants in the city, as well as the issue of food waste and environmental impact. We wanted to make a web app that would help people find the best deals on food and groceries that are close to expiry or on the date of expiry, and incentivize them to buy them before they go to waste by offering discounts on the items. With our app, you can easily find quick groceries for a fast meal, and see the cheapest and freshest options available in your area, along with the distance and directions to help you reduce your carbon footprint. Budget Bites is our pocket-friendly food guide that helps you save money, enjoy good food, and protect the planet every day of the week.

## What it does

Budget Bites is a website that gives you a list of the prices, freshness, and distance of food and groceries near you. You can also get discounts and coupons on items that are close to sale, and enjoy good food every day of the week without breaking the bank or harming the planet. Budget Bites is your pocket-friendly food guide that makes eating well easy and affordable.

## How we built it

We built the web app with React.js, Typescript, Vite, and React Router, as well as React Context Hooks. For styling and design, we utilized SASS and TailwindCSS. We used various React components/libraries such as Fuse.js and React-toast, as well as a payment system using Stripe.

## Challenges we ran into

One of our biggest challenges was trying to integrate the JavaScript SDK for Google Maps into our program. This was very new to us as none of us had any any experience with the Maps API. Getting the Google Maps API to function took hours of intense problems solving. It didn't stop there though as right after that we had to try adding landmarks which was another problem however we overcame it by looking through documentation and through the consultation of mentors

## Accomplishments that we're proud of

An accomplishment that we are proud of is that we figured out how to use google maps jdk and successfully implemented the google maps features to our web app. We also figured out how to calculate distances from where the user is to the store as well.

## What we learned

One of the key skills that we gained over the duration of this hackathon is that we learnt how to implement Google Maps to our system. This involved us learning how the Maps SDKs worked to integrate them into our application to provide proper location services. We also delved into the realm of distance and time calculations using two points. Using Google Maps, we learned how to determine the distance between where the user starts and where his end point is supposed to be as well as an estimate for the time it will take to reach the given point.

## What's next for Budget Bites

Our next steps for Budget Bites is to make it a more frequently used app as well as a global app that helps people around the world enjoy good food without wasting money or resources. To do this, we will make sure our app is tailored to the languages, cultures, preferences, and regulations of each market we enter. We will use Google Translate to make our app accessible and understandable in different languages. We will also work with local experts, partners, or customers to learn about their specific needs and expectations. We will also follow the local laws and standards that apply to our app and its features. We will design and execute a marketing strategy and a distribution plan for our app in each market we target. We will use Facebook Ads and Google Ads to create and run online campaigns that highlight the benefits and features of our app, such as saving money, reducing food waste, and enjoying good food. We will also use social media platforms such as Instagram and Twitter to share deals, testimonials, and reviews that showcase the experience that people have with budget bites to allow a greater influx of customers. | ## Inspiration

We set out to build a product that solves two core pain points in our daily lives: 1) figuring out what to do for every meal 😋 and 2) maintaining personal relationships 👥.

As college students, we find ourselves on a daily basis asking the question, “What should I do for lunch today?” 🍔 — many times with a little less than an hour left before it’s time to eat. The decision process usually involves determining if one has the willpower to cook at home, and if not, figuring out where to eat out and if there is anyone to eat out with. For us, this usually just ends up being our roommates, and we find ourselves quite challenged by maintaining depth of relationships with people we want to because the context windows are too large to juggle.

Enter, BiteBuddy.

## What it does

We divide the problem we’re solving into two main scenarios.

1. **Spontaneous (Eat Now!)**: It’s 12PM and Jason realizes that he doesn’t have lunch plans. BiteBuddy will help him make some! 🍱

2. **Futuristic (Schedule Ahead!)**: It’s Friday night and Parth decides that he wants to plan out his entire next week (Forkable, anyone?). 🕒

**Eat Now** allows you to find friends that are near you and automatically suggests nearby restaurants that would be amenable to both of you based on dietary and financial considerations. Read more below to learn some of the cool API interactions and ML behind this :’). 🗺️

**Schedule Ahead** allows you to plan your week ahead and actually think about personal relationships. It analyzes closeness between friends, how long it’s been since you last hung out, looks at calendars, and similar to above automatically suggests time and restaurants. Read more below for how! 🧠

We also offer a variety of other features to support the core experience:

1. **Feed**. View a streaming feed of the places your friends have been going. Enhance the social aspect of the network.

2. **Friends** (no, we don’t offer friends). Manage your relationships in a centralized way and view LLM-generated insights regarding relationships and when might be the right time/how to rekindle them.

## How we built it

The entire stack we used for this project was Python, with the full stack web development being enabled by the **Reflex** Python package, and database being Firebase.

**Eat Now** is a feature that bases itself around geolocation, dietary preferences, financial preferences, calendar availability, and LLM recommendation systems. We take your location, go through your friends list and find the friends who are near you and don’t have immediate conflicts on their calendar, compute an intersection of possible restaurants via the Yelp API that would be within a certain radius of both of you, filter this intersection with dietary + financial preferences (vegetarian? vegan? cheap?), then pass all our user context into a LLAMA-13B-Chat 💬 to generate a final recommendation. This recommendation surfaces itself as a potential invite (in figures above) that the user can choose whether or not to send to another person. If they accept, a calendar invite is automatically generated.

**Schedule Ahead** is a feature that bases itself around graph machine learning, calendar availability, personal relationship status (how close are y’all? When is the last time you saw each other?), dietary/financial preferences, and more. By looking ahead into the future, we take the time to look through our social network graph with associated metadata and infer relationships via Spectral Clustering 📊. Based on how long it’s been since you last hung out and the strength of your relationship, it will surface who to meet with as a priority queue and look at both calendars to determine mutually available times and locations with the same LLM.

We use retrieval augmented generation (RAG) 📝 throughout our app to power personalized friend insights (to learn more about which friends you should catch up with, learn that Jason is a foodie, and what cuisines you and Parth like). This method is also a part of our recommendation algorithm.

## Challenges we ran into

1. **Dealing with APIs.** We utilized a number of APIs to provide a level of granularity and practicality to this project, rather than something that’s solely a mockup. Dealing with APIs though comes with its own issues. The Yelp API, for example, continuously rate limited us even though we cycled through keys from all of our developer accounts :’). The Google Calendar API required a lot of exploration with refresh tokens, necessary scopes, managing state with google auth, etc.

2. **New Technologies.** We challenged ourselves by exploring some new technologies as a part of our stack to complete this project. Graph ML for example was a technology we hadn’t worked with much before, and we quickly ran into the cold start problem with meaningless graphs and unintuitive relationships. Reflex was another new technology that we used to complete our frontend and backend entirely in Python. None of us had ever even pip installed this package before, so learning how to work with it and then turn it into something complex and useful was a fun challenge. 💡

3. **Latency.** Because our app queries several APIs, we had to make our code as performant as possible, utilize concurrency where possible, and add caching for frequently-queried endpoints. 🖥️

## Accomplishments that we're proud of

The amount of complexity that we were able to introduce into this project made it mimic real-life as close as possible, which is something we’re very proud of. We’re also proud of all the new technologies and Machine Learning methods we were able to use to develop a product that would be most beneficial to end users.

## What we learned

This project was an incredible learning experience for our team as we took on multiple technically complex challenges to reach our ending solution -- something we all thought that we had a potential to use ourselves.

## What's next for BiteBuddy

The cool thing about this project was that there were a hundred more features we wanted to include but didn’t remotely have the time to implement. Here are some of our favorites 🙂:

1. **Groups.** Social circles often revolve around groups. Enabling the formation of groups on the app would give us more metadata information regarding the relationships between people, lending itself to improved GNN algorithms and recommendations, and improve the stickiness of the product by introducing network effects.

2. **New Intros: Extending to the Mutuals.** We’ve built a wonderful graph of relationships that includes metadata not super common to a social network. Why not leverage this to generate introductions and form new relationships between people?

3. **More Integrations.** Why use DonutBot when you can have BiteBuddy?

## Built with

Python, Reflex, Firebase, Together AI, ❤️, and boba 🧋 | losing |

## 💡 Inspiration

The objective of our application is to devise an effective and efficient written transmission optimization scheme, by converting esoteric text into an exoteric format.

If you read the above sentence more than once and the word ‘huh?’ came to mind, then you got my point. Jargon causes a problem when you are talking to someone who doesn't understand it. Yet, we face obscure, vague texts every day - from ['text speak'](https://www.goodnewsnetwork.org/dad-admits-hilarious-texting-blunder-on-the-moth/) to T&C agreements.

The most notoriously difficult to understand texts are legal documents, such as contracts or deeds. However, making legal language more straightforward would help people understand their rights better, be less susceptible to being punished or not being able to benefit from their entitled rights.

Introducing simpl.ai - A website application that uses NLP and Artificial Intelligence to recognize difficult to understand text and rephrase them with easy-to-understand language!

## 🔍 What it does

simpl.ai intelligently simplifies difficult text for faster comprehension. Users can send a PDF file of the document they are struggling to understand. They can select the exact sentences that are hard to read, and our NLP-model recognizes what elements make it tough. You'll love simpl.ai's clear, straightforward restatements - they change to match the original word or phrase's part of speech/verb tense/form, so they make sense!

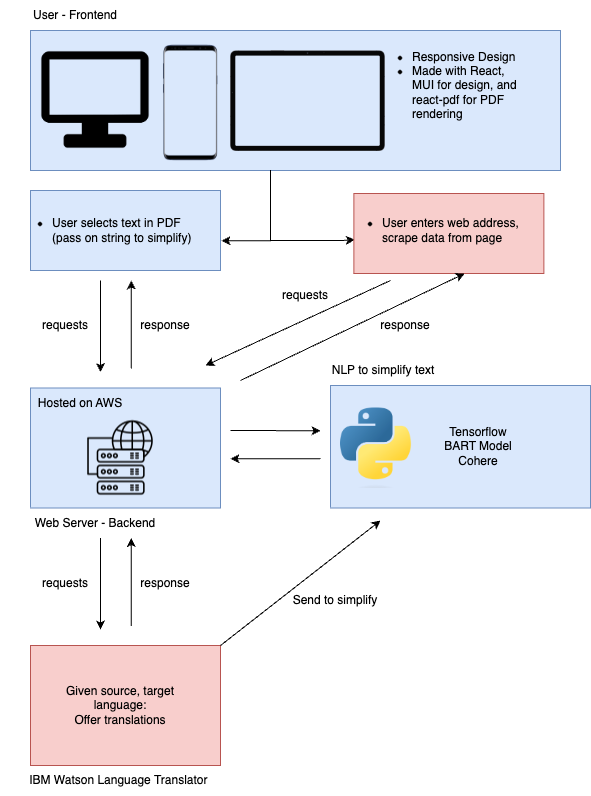

## ⚙️ Our Tech Stack

[](https://postimg.cc/gr2ZqkpW)

**Frontend:** We created the client side of our web app using React.js and JSX based on a high-fidelity prototype we created using Figma. Our components are styled using MaterialUI Library, and Intelllex's react-pdf package for rendering PDF documents within the app.

**Backend:** Python! The magic behind the scenes is powered by a combination of fastAPI, TensorFlow (TF), Torch and Cohere. Although we are newbies to the world of AI (NLP), we used a BART model and TF to create a working model that detects difficult-to-understand text! We used the following [dataset](https://www.inf.uni-hamburg.de/en/inst/ab/lt/resources/data/complex-word-identification-dataset/cwishareddataset.zip) from Stanford University to train our [model](http://nlp.stanford.edu/data/glove.6B.zip)- It's based on several interviews conducted with non-native English speakers, where they were tasked to identify difficult words and simpler synonyms for them. Finally, we used Cohere to rephrase the sentence and ensure it makes sense!

## 🚧 Challenges we ran into

This hackathon was filled with many challenges - but here are some of the most notable ones:

* We purposely choose an AI area where we didn't know too much in (NLP, TensorFlow, CohereAPI), which was a challenging and humbling experience. We faced several compatibility issues with TensorFlow when trying to deploy the server. We decided to go with AWS Platform after a couple of hours of trying to figure out Kubernetes 😅

* Finding a dataset that suited our needs! If there were no time constraints, we would have loved to develop a dataset that is more focused on addressing tacky legal and technical language. Since that was not the case, we made do with a database that enabled us to produce a proof-of-concept.

## ✔️ Accomplishments that we're proud of

* Creating a fully-functioning app with bi-directional communication between the AI server and the client.

* Working with NLP, despite having no prior experience or knowledge. The learning curve was immense!

* Able to come together as a team and move forward, despite all the challenges we faced together!

## 📚 What we learned

We learned so much in terms of the technical areas; using machine learning and having to pivot from one software to the other, state management and PDF rendering in React.

## 🔭 What's next for simpl.ai!

**1. Support Multilingual Documents.** The ability to translate documents and provide a simplified version in their desired language. We would use [IBM Watson's Language Translator API](https://cloud.ibm.com/apidocs/language-translator?code=node)

**2. URL Parameter** Currently, we are able to simplify text from a PDF, but we would like to be able to do the same for websites.

* Simplify legal jargon in T&C agreements to better understand what permissions and rights they are giving an application!

* We hope to extend this service as a Chrome Extension for easier access to the users.

**3. Relevant Datasets** We would like to expand our current model's capabilities to better understand legal jargon, technical documentation etc. by feeding it keywords in these areas. | ## Inspiration

Nowadays, we have been using **all** sorts of development tools for web development, from the simplest of HTML, to all sorts of high-level libraries, such as Bootstrap and React. However, what if we turned back time, and relived the *nostalgic*, good old times of programming in the 60s? A world where the programming language BASIC was prevalent. A world where coding on paper and on **office memo pads** were so popular. It is time, for you all to re-experience the programming of the **past**.

## What it does

It's a programming language compiler and runtime for the BASIC programming language. It allows users to write interactive programs for the web with the simple syntax and features of the BASIC language. Users can read our sample the BASIC code to understand what's happening, and write their own programs to deploy on the web. We're transforming code from paper to the internet.

## How we built it

The major part of the code is written in TypeScript, which includes the parser, compiler, and runtime, designed by us from scratch. After we parse and resolve the code, we generate an intermediate representation. This abstract syntax tree is parsed by the runtime library, which generates HTML code.

Using GitHub actions and GitHub Pages, we are able to implement a CI/CD pipeline to deploy the webpage, which is **entirely** written in BASIC! We also have GitHub Dependabot scanning for npm vulnerabilities.

We use Webpack to bundle code into one HTML file for easy deployment.

## Challenges we ran into

Creating a compiler from scratch within the 36-hour time frame was no easy feat, as most of us did not have prior experience in compiler concepts or building a compiler. Constructing and deciding on the syntactical features was quite confusing since BASIC was such a foreign language to all of us. Parsing the string took us the longest time due to the tedious procedure in processing strings and tokens, as well as understanding recursive descent parsing. Last but **definitely not least**, building the runtime library and constructing code samples caused us issues as minor errors can be difficult to detect.

## Accomplishments that we're proud of

We are very proud to have successfully "summoned" the **nostalgic** old times of programming and deployed all the syntactical features that we desired to create interactive features using just the BASIC language. We are delighted to come up with this innovative idea to fit with the theme **nostalgia**, and to retell the tales of programming.

## What we learned

We learned the basics of making a compiler and what is actually happening underneath the hood while compiling our code, through the *painstaking* process of writing compiler code and manually writing code samples as if we were the compiler.

## What's next for BASIC Web

This project can be integrated with a lot of modern features that is popular today. One of future directions can be to merge this project with generative AI, where we can feed the AI models with some of the syntactical features of the BASIC language and it will output code that is translated from the modern programming languages. Moreover, this can be a revamp of Bootstrap and React in creating interactive and eye-catching web pages. | ## Inspiration

I was compelled to undertake a project on my own for this first time in my hackathoning career. One that I covers my interests in web applications and image processing and would be something "do-able" within the competition.

## What it does

Umoji is a web-app that take's an image input and using facial recognition maps emoji symbols onto the faces in the image matching their emotion/facial expressions.

## How I built it

Using Google Cloud Vision API as the backbone for all the ML and visual recognition, flask to serve up the simple bootstrap based html front-end.

## Challenges I ran into

Creating an extensive list of Emoji to map to the different levels of emotion predicted by the ML Model. Web deployment / networking problems.

## Accomplishments that I'm proud of

That fact that I was able to hit all the check boxes for what I set out to do. Not overshooting with stretch features or getting to caught up with extending the main features beyond the original scope.

## What I learned

How to work with Google's cloud API / image processing and rapid live deployment.

## What's next for Umoji

More emojis, better UI/UX and social media integration for sharing. | winning |

## Inspiration

Over the Summer one of us was reading about climate change but then he realised that most of the news articles that he came across were very negative and affected his mental health to the point that it was hard to think about the world as a happy place. However one day he watched this one youtube video that was talking about the hope that exists in that sphere and realised the impact of this "goodNews" on his mental health. Our idea is fully inspired by the consumption of negative media and tries to combat it.

## What it does

We want to bring more positive news into people’s lives given that we’ve seen the tendency of people to only read negative news. Psychological studies have also shown that bringing positive news into our lives make us happier and significantly increases dopamine levels.

The idea is to maintain a score of how much negative content a user reads (detected using cohere) and once it reaches past a certain threshold (we store the scores using cockroach db) we show them a positive news related article in the same topic area that they were reading.

We do this by doing text analysis using a chrome extension front-end and flask, cockroach dp backend that uses cohere for natural language processing.

Since a lot of people also listen to news via video, we also created a part of our chrome extension to transcribe audio to text - so we included that into the start of our pipeline as well! At the end, if the “negativity threshold” is passed, the chrome extension tells the user that it’s time for some good news and suggests a relevant article.

## How we built it

**Frontend**

We used a chrome extension for the front end which included dealing with the user experience and making sure that our application actually gets the attention of the user while being useful. We used react js, HTML and CSS to handle this. There was also a lot of API calls because we needed to transcribe the audio from the chrome tabs and provide that information to the backend.

**Backend**

## Challenges we ran into

It was really hard to make the chrome extension work because of a lot of security constraints that websites have. We thought that making the basic chrome extension would be the easiest part but turned out to be the hardest. Also figuring out the overall structure and the flow of the program was a challenging task but we were able to achieve it.

## Accomplishments that we're proud of

1) (co:here) Finetuned a co:here model to semantically classify news articles based on emotional sentiment

2) (co:here) Developed a high-performing classification model to classify news articles by topic

3) Spun up a cockroach db node and client and used it to store all of our classification data

4) Added support for multiple users of the extension that can leverage the use of cockroach DB's relational schema.

5) Frontend: Implemented support for multimedia streaming and transcription from the browser, and used script injection into websites to scrape content.

6) Infrastructure: Deploying server code to the cloud and serving it using Nginx and port-forwarding.

## What we learned

1) We learned a lot about how to use cockroach DB in order to create a database of news articles and topics that also have multiple users

2) Script injection, cross-origin and cross-frame calls to handle multiple frontend elements. This was especially challenging for us as none of us had any frontend engineering experience.

3) Creating a data ingestion and machine learning inference pipeline that runs on the cloud, and finetuning the model using ensembles to get optimal results for our use case.

## What's next for goodNews

1) Currently, we push a notification to the user about negative pages viewed/a link to a positive article every time the user visits a negative page after the threshold has been crossed. The intended way to fix this would be to add a column to one of our existing cockroach db tables as a 'dirty bit' of sorts, which tracks whether a notification has been pushed to a user or not, since we don't want to notify them multiple times a day. After doing this, we can query the table to determine if we should push a notification to the user or not.

2) We also would like to finetune our machine learning more. For example, right now we classify articles by topic broadly (such as War, COVID, Sports etc) and show a related positive article in the same category. Given more time, we would want to provide more semantically similar positive article suggestions to those that the author is reading. We could use cohere or other large language models to potentially explore that. | ## Inspiration

When it comes to finding solutions to global issues, we often feel helpless: making us feel as if our small impact will not help the bigger picture. Climate change is a critical concern of our age; however, the extent of this matter often reaches beyond what one person can do....or so we think!

Inspired by the feeling of "not much we can do", we created *eatco*. *Eatco* allows the user to gain live updates and learn how their usage of the platform helps fight climate change. This allows us to not only present users with a medium to make an impact but also helps spread information about how mother nature can heal.

## What it does

While *eatco* is centered around providing an eco-friendly alternative lifestyle, we narrowed our approach to something everyone loves and can apt to; food! Other than the plenty of health benefits of adopting a vegetarian diet — such as lowering cholesterol intake and protecting against cardiovascular diseases — having a meatless diet also allows you to reduce greenhouse gas emissions which contribute to 60% of our climate crisis. Providing users with a vegetarian (or vegan!) alternative to their favourite foods, *eatco* aims to use small wins to create a big impact on the issue of global warming. Moreover, with an option to connect their *eatco* account with Spotify we engage our users and make them love the cooking process even more by using their personal song choices, mixed with the flavours of our recipe, to create a personalized playlist for every recipe.

## How we built it

For the front-end component of the website, we created our web-app pages in React and used HTML5 with CSS3 to style the site. There are three main pages the site routes to: the main app, and the login and register page. The login pages utilized a minimalist aesthetic with a CSS style sheet integrated into an HTML file while the recipe pages used React for the database. Because we wanted to keep the user experience cohesive and reduce the delay with rendering different pages through the backend, the main app — recipe searching and viewing — occurs on one page. We also wanted to reduce the wait time for fetching search results so rather than rendering a new page and searching again for the same query we use React to hide and render the appropriate components. We built the backend using the Flask framework. The required functionalities were implemented using specific libraries in python as well as certain APIs. For example, our web search API utilized the googlesearch and beautifulsoup4 libraries to access search results for vegetarian alternatives and return relevant data using web scraping. We also made use of Spotify Web API to access metadata about the user’s favourite artists and tracks to generate a personalized playlist based on the recipe being made. Lastly, we used a mongoDB database to store and access user-specific information such as their username, trees saved, recipes viewed, etc. We made multiple GET and POST requests to update the user’s info, i.e. saved recipes and recipes viewed, as well as making use of our web scraping API that retrieves recipe search results using the recipe query users submit.

## Challenges we ran into

In terms of the front-end, we should have considered implementing Routing earlier because when it came to doing so afterward, it would be too complicated to split up the main app page into different routes; this however ended up working out alright as we decided to keep the main page on one main component. Moreover, integrating animation transitions with React was something we hadn’t done and if we had more time we would’ve liked to add it in. Finally, only one of us working on the front-end was familiar with React so balancing what was familiar (HTML) and being able to integrate it into the React workflow took some time. Implementing the backend, particularly the spotify playlist feature, was quite tedious since some aspects of the spotify web API were not as well explained in online resources and hence, we had to rely solely on documentation. Furthermore, having web scraping and APIs in our project meant that we had to parse a lot of dictionaries and lists, making sure that all our keys were exactly correct. Additionally, since dictionaries in Python can have single quotes, when converting these to JSONs we had many issues with not having them be double quotes. The JSONs for the recipes also often had quotation marks in the title, so we had to carefully replace these before the recipes were themselves returned. Later, we also ran into issues with rate limiting which made it difficult to consistently test our application as it would send too many requests in a small period of time. As a result, we had to increase the pause interval between requests when testing which made it a slow and time consuming process. Integrating the Spotify API calls on the backend with the frontend proved quite difficult. This involved making sure that the authentication and redirects were done properly. We first planned to do this with a popup that called back to the original recipe page, but with the enormous amount of complexity of this task, we switched to have the playlist open in a separate page.

## Accomplishments that we're proud of

Besides our main idea of allowing users to create a better carbon footprint for themselves, we are proud of accomplishing our Spotify integration. Using the Spotify API and metadata was something none of the team had worked with before and we're glad we learned the new skill because it adds great character to the site. We all love music and being able to use metadata for personalized playlists satisfied our inner musical geek and the integration turned out great so we're really happy with the feature. Along with our vast recipe database this far, we are also proud of our integration! Creating a full-stack database application can be tough and putting together all of our different parts was quite hard, especially as it's something we have limited experience with; hence, we're really proud of our service layer for that. Finally, this was the first time our front-end developers used React for a hackathon; hence, using it in a time and resource constraint environment for the first time and managing to do it as well as we did is also one of our greatest accomplishments.

## What we learned

This hackathon was a great learning experience for all of us because everyone delved into a tool that they'd never used before! As a group, one of the main things we learned was the importance of a good git workflow because it allows all team members to have a medium to collaborate efficiently by combing individual parts. Moreover, we also learned about Spotify embedding which not only gave *eatco* a great feature but also provided us with exposure to metadata and API tools. Moreover, we also learned more about creating a component hierarchy and routing on the front end. Another new tool that we used in the back-end was learning how to perform database operations on a cloud-based MongoDB Atlas database from a python script using the pymongo API. This allowed us to complete our recipe database which was the biggest functionality in *eatco*.

## What's next for Eatco

Our team is proud of what *eatco* stands for and we want to continue this project beyond the scope of this hackathon and join the fight against climate change. We truly believe in this cause and feel eatco has the power to bring meaningful change; thus, we plan to improve the site further and release it as a web platform and a mobile application. Before making *eatco* available for users publically we want to add more functionality and further improve the database and present the user with a more accurate update of their carbon footprint. In addition to making our recipe database bigger, we also want to focus on enhancing the front-end for a better user experience. Furthermore, we also hope to include features such as connecting to maps (if the user doesn't have a certain ingredient, they will be directed to the nearest facility where that item can be found), and better use of the Spotify metadata to generate even better playlists. Lastly, we also want to add a saved water feature to also contribute into the global water crisis because eating green also helps cut back on wasteful water consumption! We firmly believe that *eatco* can go beyond the range of the last 36 hours and make impactful change on our planet; hence, we want to share with the world how global issues don't always need huge corporate or public support to be solved, but one person can also make a difference. | ## Inspiration

False news. False news. False news everywhere. Before reading your news article in depth, let us help you give you a brief overview of what you'll be ingesting.

## What it does

Our Google Chrome extension will analyze the news article you're about to read and give you a heads up on some on the article's sentiment (what emotion is the article trying to convey), top three keywords in the article, and the categories this article's topic belong to.

Our extension also allows you to fact check any statement by simply highlighting the statement, right-clicking, and selecting Fact check this with TruthBeTold.

## How we built it

Our Chrome extension pulls the url of the webpage you're browsing and sends that to our Google Cloud Platform hosted Google App Engine Python server. Our server is then able to parse the content of the page, determine the content of the news article through processing by Newspaper3k API. The scraped article is then sent to Google's Natural Language API Client which assess the article for sentiment, categories, and keywords. This data is then returned to your extension and displayed in a friendly manner.

Fact checking follows a similar path in that our extension sends the highlighted text to our server, which checks against Google's Fact Check Explorer API. The consensus is then returned and alerted.

## Challenges we ran into

* Understanding how to interact with Google's APIs.

* Working with Python flask and creating new endpoints in flask.

* Understanding how Google Chrome extensions are built.

## Accomplishments that I'm proud of

* It works! | winning |

## Inspiration

We were interested in machine learning and data analytics and decided to pursue a real-world application that could prove to have practical use for society. Many themes of this project were inspired by hip-hop artist Cardi B.

## What it does

Money Moves analyzes data about financial advisors and their attributes and uses machine's deep learning unsupervised algorithms to predict if certain financial advisors will most likely be beneficial or detrimental to an investor's financial standing.

## How we built it

We partially created a custom deep-learning library where we built a Self Organizing Map. The Self Organizing Map is a neural network that takes data and creates a layer of abstraction; essentially reducing the dimensionality of the data. To make this happened we had to parse several datasets. We used beautiful soup library, pandas and numpy to parse the data needed. Once it was parsed, we were able to pre-process the data, to feed it to our neural network (Self Organizing Map). After we were able to successfully analyze the data with the deep learning algorithm, we uploaded the neural network and dataset to our Google server where we are hosting a Django website. The website will show investors the best possible advisor within their region.

## Challenges we ran into

Due to the nature of this project, we struggled with moving large amounts of data through the internet, cloud computing, and designing a website to display analyzed data because of the difficult with WiFi connectivity that many hackers faced at this competition. We mostly overcame this through working late nights and lots of frustration.

We also struggled to find an optimal data structure for storing both raw and output data. We ended up using .csv files organized in a logical manner so that data is easier accessible through a simple parser.

## Accomplishments that we're proud of

Successfully parse the dataset needed to do preprocessing and analysis with deeplearing.

Being able to analyze our data with the Self Organizing Map neural network.

Side Note: Our team member Mikhail Sorokin placed 3rd in the Yhack Rap Battle

## What we learned

We learnt how to implement a Self Organizing Map, build a good file system and code base with Django. This led us to learn about Google's cloud service where we host our Django based website. In order to be able to analyze the data, we had to parse several files and format the data that we had to send through the network.

## What's next for Money Moves

We are looking to expand our Self Organizing Map to accept data from other financial dataset, other than stock advisors; this way we are able to have different models that will work together. One way we were thinking is to have unsupervised and supervised deep-learning systems where, we have the unsupervised find the patterns that would be challenging to find; and the supervised algorithm will direct the algorithm to a certain goal that could help investors choose the best decision possible for their financial options. | ## Inspiration

Members of our team know multiple people who suffer from permanent or partial paralysis. We wanted to build something that could be fun to develop and use, but at the same time make a real impact in people's everyday lives. We also wanted to make an affordable solution, as most solutions to paralysis cost thousands and are inaccessible. We wanted something that was modular, that we could 3rd print and also make open source for others to use.

## What it does and how we built it

The main component is a bionic hand assistant called The PulseGrip. We used an ECG sensor in order to detect electrical signals. When it detects your muscles are trying to close your hand it uses a servo motor in order to close your hand around an object (a foam baseball for example). If it stops detecting a signal (you're no longer trying to close) it will loosen your hand back to a natural resting position. Along with this at all times it sends a signal through websockets to our Amazon EC2 server and game. This is stored on a MongoDB database, using API requests we can communicate between our games, server and PostGrip. We can track live motor speed, angles, and if it's open or closed. Our website is a full-stack application (react styled with tailwind on the front end, node js on the backend). Our website also has games that communicate with the device to test the project and provide entertainment. We have one to test for continuous holding and another for rapid inputs, this could be used in recovery as well.

## Challenges we ran into

This project forced us to consider different avenues and work through difficulties. Our main problem was when we fried our EMG sensor, twice! This was a major setback since an EMG sensor was going to be the main detector for the project. We tried calling around the whole city but could not find a new one. We decided to switch paths and use an ECG sensor instead, this is designed for heartbeats but we managed to make it work. This involved wiring our project completely differently and using a very different algorithm. When we thought we were free, our websocket didn't work. We troubleshooted for an hour looking at the Wifi, the device itself and more. Without this, we couldn't send data from the PulseGrip to our server and games. We decided to ask for some mentor's help and reset the device completely, after using different libraries we managed to make it work. These experiences taught us to keep pushing even when we thought we were done, and taught us different ways to think about the same problem.

## Accomplishments that we're proud of

Firstly, just getting the device working was a huge achievement, as we had so many setbacks and times we thought the event was over for us. But we managed to keep going and got to the end, even if it wasn't exactly what we planned or expected. We are also proud of the breadth and depth of our project, we have a physical side with 3rd printed materials, sensors and complicated algorithms. But we also have a game side, with 2 (questionable original) games that can be used. But they are not just random games, but ones that test the user in 2 different ways that are critical to using the device. Short burst and hold term holding of objects. Lastly, we have a full-stack application that users can use to access the games and see live stats on the device.

## What's next for PulseGrip

* working to improve sensors, adding more games, seeing how we can help people

We think this project had a ton of potential and we can't wait to see what we can do with the ideas learned here.

## Check it out

<https://hacks.pulsegrip.design>

<https://github.com/PulseGrip> | ## Inspiration

Fraud is a crime that can impact any Canadian, regardless of their education, age or income.

From January 2014 to December 2017, Canadians lost more than $405 million to fraudsters. ~ [Statistic Info](https://www.competitionbureau.gc.ca/eic/site/cb-bc.nsf/eng/04334.html)

We wanted to develop technology that detects potentially fraudulent activity and give account owners the ability to cancel such transactions.

## How it works

Using scikit-learn, we were able to detect patterns in a user's previous banking data provided by TD's davinci API.

We examined categories such as the location of the purchase, the cost of the purchase, and the purchase category. Afterwards, we determined certain parameters for the cost of purchase based on the purchase category, and purchase locations to validate transactions that met the requirements. Transactions that were made outside of these parameters were deemed suspicious activity and an alert is sent to the account owner, providing them with the ability to validate/decline the purchase. If the transaction is approved, it is added to the MongoDB database with the rest of the user's previous transactions.

[TD's davinci API](https://td-davinci.com/)

[Presentation Slide Show](https://slides.com/malharshah/deck#/projectapati)

[Github Repository](https://github.com/mshah0722/FraudDetectionDeltaHacks2020)

## Challenges we ran into

Initially, we tried to use Tensorflow for our ML model to analyze the user's previous banking history to find patterns and make the parameters. However, we were having difficulty correctly implementing it and there were mistakes being made in the model. This is why we decided to switch to scikit-learn, which our team had success using and our ML model turned out as we had expected.

## Accomplishments that we are proud of

Learning to use and implement Machine Learning with such a large data set that we were provided with. Training the model to detect suspicious activity was finally achieved after several attempts.

## What we learned

Handling large data files.

Pattern detection/Data Analysis.

Data Interpretation and Model development.

## What's next for Project Apati

Improving the model by looking at other categories in the data to refine the model based on other transactions statistics. Providing more user's data to improve the training and testing data-set for the model. | winning |

## Inspiration

Our solution was named on remembrance of Mother Teresa

## What it does

Robotic technology to assist nurses and doctors in medicine delivery, patient handling across the hospital, including ICU’s. We are planning to build an app in Low code/No code that will help the covid patients to scan themselves as the mobile app is integrated with the CT Scanner for doctors to save their time and to prevent human error . Here we have trained with CT scans of Covid and developed a CNN model and integrated it into our application to help the covid patients. The data sets are been collected from the kaggle and tested with an efficient algorithm with the efficiency of around 80% and the doctors can maintain the patient’s record. The beneficiary of the app is PATIENTS.

## How we built it

Bots are potentially referred to as the most promising and advanced form of human-machine interactions. The designed bot can be handled with an app manually through go and cloud technology with e predefined databases of actions and further moves are manually controlled trough the mobile application. Simultaneously, to reduce he workload of doctor, a customized feature is included to process the x-ray image through the app based on Convolution neural networks part of image processing system. CNN are deep learning algorithms that are very powerful for the analysis of images which gives a quick and accurate classification of disease based on the information gained from the digital x-ray images .So to reduce the workload of doctors these features are included. To know get better efficiency on the detection I have used open source kaggle data set.

## Challenges we ran into

The data source for the initial stage can be collected from the kaggle and but during the real time implementation the working model and the mobile application using flutter need datasets that can be collected from the nearby hospitality which was the challenge.

## Accomplishments that we're proud of

* Counselling and Entertainment

* Diagnosing therapy using pose detection

* Regular checkup of vital parameters

* SOS to doctors with live telecast

-Supply off medicines and foods

## What we learned

* CNN

* Machine Learning

* Mobile Application

* Cloud Technology

* Computer Vision

* Pi Cam Interaction

* Flutter for Mobile application

## Implementation

* The bot is designed to have the supply carrier at the top and the motor driver connected with 4 wheels at the bottom.

* The battery will be placed in the middle and a display is placed in the front, which will be used for selecting the options and displaying the therapy exercise.

* The image aside is a miniature prototype with some features

* The bot will be integrated with path planning and this is done with the help mission planner, where we will be configuring the controller and selecting the location as a node

* If an obstacle is present in the path, it will be detected with lidar placed at the top.

* In some scenarios, if need to buy some medicines, the bot is attached with the audio receiver and speaker, so that once the bot reached a certain spot with the mission planning, it says the medicines and it can be placed at the carrier.

* The bot will have a carrier at the top, where the items will be placed.

* This carrier will also have a sub-section.

* So If the bot is carrying food for the patients in the ward, Once it reaches a certain patient, the LED in the section containing the food for particular will be blinked. | ## Inspiration

Donut was originally inspired by a viral story about dmdm hydantoin, a chemical preservative used in hair products rumoured to be toxic and lead to hair loss. This started a broader discussion about commercial products in general and the plethora of chemical substances and ingredients we blindly use and consume on a daily basis. We wanted to remove these veils that can impact the health of the community and encourage people to be more informed consumers.

## What it does

Donut uses computer vision to read the labels off packaging through a camera. After acquiring this data, it displays all the ingredients in a list and uses sentiment analysis to determine the general safety of each ingredient. Users can click into each ingredient to learn more and read related articles that we recommend in order to make more educated purchases.

## How we built it

## Challenges we ran into

Front end development was a challenge since it was something our team was inexperienced with, but there’s no better place to learn than at a hackathon! Fighting away the sleepiness was another hurdle too.

## Accomplishments that we're proud of

We got more done than we imagined with a 3 person team :)

Michael is proud that he was very productive with the backend code :D

Grace is proud that she wrote any code at all as a designer o\_o

Denny is proud to have learned more about HTTP requests and worked with both the front and backend :0

## What we learned

We could be benefitted from a more well-balanced team (befriend some front end devs!). Sleep is important. Have snacks at the ready.

## What's next for Donut Eat This

Features that we would love to implement next would be a way to upload photos from a user’s album and a way to view recent scans. | ## Inspiration

“**Social media sucks these days.**” — These were the first few words we heard from one of the speakers at the opening ceremony, and they struck a chord with us.

I’ve never genuinely felt good while being on my phone, and like many others I started viewing social media as nothing more than a source of distraction from my real life and the things I really cared about.

In December 2019, I deleted my accounts on Facebook, Instagram, Snapchat, and WhatsApp.

For the first few months — I honestly felt great. I got work done, focused on my small but valuable social circle, and didn’t spend hours on my phone.

But one year into my social media detox, I realized that **something substantial was still missing.** I had personal goals, routines, and daily checklists of what I did and what I needed to do — but I wasn’t talking about them. By not having social media I bypassed superficial and addictive content, but I was also entirely disconnected from my network of friends and acquaintances. Almost no one knew what I was up to, and I didn’t know what anyone was up to either. A part of me longed for a level of social interaction more sophisticated than Gmail, but I didn’t want to go back to the forms of social media I had escaped from.

One of the key aspects of being human is **personal growth and development** — having a set of values and living them out consistently. Especially in the age of excess content and the disorder of its partly-consumed debris, more people are craving a sense of **routine, orientation, and purpose** in their lives. But it’s undeniable that **humans are social animals** — we also crave **social interaction, entertainment, and being up-to-date with new trends.**

Our team’s problem with current social media is its attention-based reward system. Most platforms reward users based on numeric values of attention, through measures such as likes, comments and followers. Because of this reward system, people are inclined to create more appealing, artificial, and addictive content. This has led to some of the things we hate about social media today — **addictive and superficial content, and the scarcity of genuine interactions with people in the network.**

This leads to a **backward-looking user-experience** in social media. The person in the 1080x1080 square post is an ephemeral and limited representation of who the person really is. Once the ‘post’ button has been pressed, the post immediately becomes an invitation for users to trap themselves in the past — to feel dopamine boosts from likes and comments that have been designed to make them addicted to the platform and waste more time, ultimately **distorting users’ perception of themselves, and discouraging their personal growth outside of social media.**

In essence — We define the question of reinventing social media as the following:

*“How can social media align personal growth and development with meaningful content and genuine interaction among users?”*

**Our answer is High Resolution — a social media platform that orients people’s lives toward an overarching purpose and connects them with liked-minded, goal-oriented people.**

The platform seeks to do the following:

**1. Motivate users to visualize and consistently achieve healthy resolutions for personal growth**

**2. Promote genuine social interaction through the pursuit of shared interests and values**

**3. Allow users to see themselves and others for who they really are and want to be, through natural, progress-inspired content**

## What it does

The following are the functionalities of High Resolution (so far!):

After Log in or Sign Up:

**1. Create Resolution**

* Name your resolution, whether it be Learning Advanced Korean, or Spending More Time with Family.

* Set an end date to the resolution — i.e. December 31, 2022

* Set intervals that you want to commit to this goal for (Daily / Weekly / Monthly)

**2. Profile Page**

* Ongoing Resolutions

+ Ongoing resolutions and level of progress