anchor | positive | negative | anchor_status |

|---|---|---|---|

## AI, AI, AI...

The number of projects using LLMs has skyrocketed with the wave of artificial intelligence. But what if you *were* the AI, tasked with fulfilling countless orders and managing requests in real time? Welcome to chatgpME, a fast-paced, chaotic game where you step into the role of an AI who has to juggle multiple requests, analyze input, and deliver perfect responses under pressure!

## Inspired by games like Overcooked...

chatgpME challenges you to process human queries as quickly and accurately as possible. Each round brings a flood of requests—ranging from simple math questions to complex emotional support queries—and it's your job to fulfill them quickly with high-quality responses!

## How to Play

Take Orders: Players receive a constant stream of requests, represented by different "orders" from human users. The orders vary in complexity—from basic facts and math solutions to creative writing and emotional advice.

Process Responses: Quickly scan each order, analyze the request, and deliver a response before the timer runs out.

Get Analyzed: Our built-in AI checks how similar your answer is to what a real AI would say :)

## Key Features

Fast-Paced Gameplay: Just like Overcooked, players need to juggle multiple tasks at once. Keep those responses flowing and maintain accuracy, or you’ll quickly find yourself overwhelmed.

Orders with a Twist: The more aware the AI becomes, the more unpredictable it gets. Some responses might start including strange, existential musings—or it might start asking you questions in the middle of a task!

## How We Built It

Concept & Design: We started by imagining a game where the player experiences life as ChatGPT, but with all the real-time pressure of a time management game like Overcooked. Designs were created in Procreate and our handy notebooks.

Tech Stack: Using Unity, we integrated a system where mock requests are sent to the player, each with specific requirements and difficulty levels. A template was generated using defang, and we also used it to sanitize user inputs. Answers are then evaluated using the fantastic Cohere API!
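As a rough illustration of the evaluation step, here is a minimal Python sketch of how an answer could be scored against a reference response. It is not the game's actual code: the game runs in Unity and evaluates answers with the Cohere API, whereas this sketch swaps in a simple word-overlap (Jaccard) similarity as a stand-in. The function names, the 100-point scale, and the time-bonus weighting are all assumptions made for the example.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def similarity(player_answer: str, reference_answer: str) -> float:
    """Jaccard word overlap in [0, 1]; a stand-in for the real API-based check."""
    a, b = tokenize(player_answer), tokenize(reference_answer)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def score_order(player_answer: str, reference_answer: str,
                seconds_left: float, time_limit: float) -> int:
    """Hypothetical scoring rule: answer quality scaled by how fast it arrived."""
    if seconds_left <= 0:
        return 0  # the timer ran out before the order was delivered
    quality = similarity(player_answer, reference_answer)
    time_bonus = seconds_left / time_limit
    return round(100 * quality * (0.5 + 0.5 * time_bonus))
```

Under this assumed rule, a perfect answer delivered with half the time remaining earns 75 points, and any answer delivered after the timer expires earns nothing.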

Playtesting: Through multiple playtests, we refined the speed and unpredictability of the game to keep players engaged and on their toes. | ## Inspiration

On our way to PennApps, our team was hungrily waiting in line at Shake Shack while trying to think of the best hack idea to bring. Unfortunately, rather than being able to sit comfortably and pull out our laptops to research, we were forced to stand in a long line to reach the cashier, only to be handed a clunky buzzer that countless other greasy-fingered customers had laid their hands on. We decided that there had to be a better way: a way to simply walk into a restaurant, spend more time with friends, and stand in line as little as possible. So we made it.

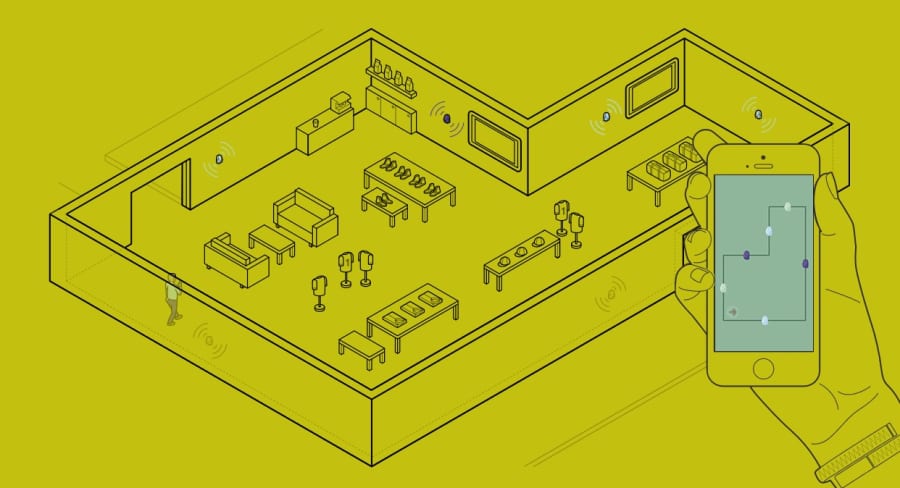

## What it does

Q'd (pronounced queued) digitizes the process of waiting in line by allowing restaurants and events to host a line through the mobile app and users to line up digitally through their phones. It also lets users search for nearby queues, giving them a sense of the different opportunities around them. Once in a queue, the user "takes a ticket" whose number decrements until they are the first person in line. In the meantime, they are free to do whatever they want and are not limited to the 2-D pathway of a line for the next minutes (or even hours).

When the user is soon to be the first in line, they are sent a push notification and requested to appear at the entrance where the host of the queue can check them off, let them in, and remove them from the queue. In addition to removing the hassle of line waiting, hosts of queues can access their Q'd Analytics to learn how many people were in their queues at what times and learn key metrics about the visitors to their queues.
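The ticket flow described above can be sketched in a few lines of Python. This is only an in-memory illustration under stated assumptions: the real app stores queues on a Hasura server and sends push notifications, while here the class name `DigitalQueue`, the `notify_at` threshold, and the `notified` list (standing in for push notifications) are hypothetical.

```python
from collections import deque


class DigitalQueue:
    """In-memory sketch of a hosted queue; names and behavior are illustrative."""

    def __init__(self, notify_at: int = 2):
        self._line = deque()        # users in arrival order
        self.notify_at = notify_at  # notify users within this many places of the front
        self.notified = []          # stand-in for push notifications already sent

    def join(self, user: str) -> int:
        """Add a user to the back of the line and return their ticket position."""
        self._line.append(user)
        self._notify_upcoming()
        return self.position(user)

    def position(self, user: str) -> int:
        """1-based place in line; it shrinks as users ahead are checked in."""
        return list(self._line).index(user) + 1

    def check_in_front(self) -> str:
        """Host checks off the first user, removing them from the queue."""
        served = self._line.popleft()
        self._notify_upcoming()
        return served

    def _notify_upcoming(self) -> None:
        """Record a notification for anyone who has just come near the front."""
        for user in list(self._line)[: self.notify_at]:
            if user not in self.notified:
                self.notified.append(user)
```

Each call to `check_in_front` moves everyone up by one place, which is the "decrementing ticket" behavior; the host-side analytics would be built from the same join and check-in events.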

## How we built it

Q'd comes in three parts: the native mobile app, the web app client, and the Hasura server.

1. The mobile iOS application is built with Apache Cordova, which allows the native iOS app to be written in pure HTML and JavaScript. This framework allows the application to work on Android, iOS, and the web, as well as to be incredibly responsive.

2. The web application is built with good ol' HTML, CSS, and JavaScript. Using the Materialize CSS framework gives the application a professional feel as well as resources such as AmChart that provide the user a clear understanding of their queue metrics.

3. Our beast of a server was constructed with the Hasura application which allowed us to build our own data structure as well as to use the API calls for the data across all of our platforms. Therefore, every method dealing with queues or queue analytics deals with our Hasura server through API calls and database use.

## Challenges we ran into

A key challenge we discovered was the implementation of Cordova and its associated plugins. Having been primarily Android developers, the native environment of the iOS application challenged our skills and gave us a lot to learn before we were ready to properly implement it.

Next, although a lesser challenge, the Hasura application had a learning curve before we were able to really use it successfully. In particular, we had issues with relationships between different objects within the database. Nevertheless, we persevered and were able to get it working really well, which allowed for an easier time building the front end.

## Accomplishments that we're proud of

Overall, we're extremely proud of coming in with little knowledge of Cordova or iOS development, and only learning about Hasura at the hackathon, then developing a fully responsive app using all of these technologies relatively well. While we considered building something we were comfortable with (particularly web apps), we wanted to push our limits and take on the challenge of learning mobile development and cloud databases.

Another accomplishment we're proud of is making it through our first hackathon longer than 24 hours :)

## What we learned

During our time developing Q'd, we were exposed to and became proficient in various technologies ranging from Cordova to Hasura. However, besides technology, we learned important lessons about taking the time to properly flesh out our ideas before jumping in headfirst. We devoted the first two hours of the hackathon to really understand what we wanted to accomplish with Q'd, so in the end, we can be truly satisfied with what we have been able to create.

## What's next for Q'd

In the future, we're looking towards enabling hosts of queues to include premium options that let users skip lines or be part of more exclusive lines. Furthermore, we want to expand the data analytics that the hosts can take advantage of in order to improve their own revenue and to make a better experience for their visitors and customers. | ## Inspiration

Have you ever been in a situation where a friend asks about that fascinating movie you just watched, yet you find yourself stuck and don't know how to describe it? All of us have.

As our team attended the workshop presented by Cohere, we were incredibly impressed with the AI's capability for generating text-based media. We decided to use the technology to help alleviate stress in menial writing tasks, such as describing a movie to a friend, making a Twitter post about the team, introducing a place to others, etc.

## What it does

Our product is a front-end web platform serving anyone who needs a short paragraph on a simple everyday topic.

The functionality of Writeeasy is very simple. Given a prompt (in question format) or a list of keywords, our web app will use a Cohere model to generate a response of around 80 words.

For example, a response to the prompt: `Why do you like coding?` generated by our software is:

`Coding has opened my eyes to a new world of possibilities. I used to think coding was about writing computer programs. Now, I see coding as a way to make something new. It is about creating a new idea, and seeing it come to life. Coding is a way to create something that had never existed before.`

Personal questions, such as when a newly made friend is curious about why you like a particular hobby ("Why do you like playing basketball?"), may be hard to answer on the spot. Our goal is for our AI to save users time and effort if they ever find themselves stuck. They can look at what our AI writes and use it either as inspiration or as a template to edit further. Essentially, we are providing the user with a starting point and then giving the user ample freedom to build on that, hopefully with more ease.

## How we built it

We built the project entirely using Cohere, an AI platform that provides many NLP functionalities. We split the workload into two areas: the backend and the frontend. In the backend, we made API calls to Cohere and tested the model with our desired prompts. Afterwards, we customized our prompt through prompt engineering, using 3 distinct prompt-answer examples to guide the AI down the right path.
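To make the few-shot idea concrete, here is a minimal Python sketch of how a prompt with three question-answer examples and a stop phrase might be assembled. It does not call Cohere or reproduce our actual prompt: the three example pairs, the `--` stop phrase, and the function names are all hypothetical and exist only to show the technique.

```python
# Hypothetical example pairs; the real prompt used three different examples.
EXAMPLES = [
    ("Why do you like hiking?",
     "Hiking clears my head. Being outdoors helps me slow down and notice small things."),
    ("Why do you enjoy cooking?",
     "Cooking lets me experiment. I like turning simple ingredients into something to share."),
    ("Why do you like reading?",
     "Reading takes me to places I could never visit. Every book teaches me something new."),
]

STOP_PHRASE = "--"  # assumed separator marking the end of each answer


def build_prompt(question: str, examples=EXAMPLES) -> str:
    """Place the worked examples before the new question so the model imitates them."""
    parts = [f"Question: {q}\nAnswer: {a}\n{STOP_PHRASE}" for q, a in examples]
    parts.append(f"Question: {question}\nAnswer:")
    return "\n".join(parts)


def trim_at_stop(generated: str) -> str:
    """Keep only the text before the stop phrase, dropping any extra prompts."""
    return generated.split(STOP_PHRASE, 1)[0].strip()
```

Ending the prompt with an open `Answer:` nudges the model to answer rather than continue with more questions, and cutting the output at the stop phrase discards anything it generates past the first answer.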

In terms of the frontend, we used the Python-based web framework called Flask. The website is hosted by Heroku.

## Challenges we ran into

With just one prompt, we got a variety of different results. Sometimes, rather than answering the prompt, the AI generated many new prompts of its own, often similar to the one we gave it. It took us a long time to figure out how to get the AI to output the specific response we wanted: a reasonable answer to the prompt. We also had many setbacks in getting the Cohere platform working, such as setting up the client key and using stop phrases, among others. Luckily, we managed to get Cohere working successfully, as demonstrated by our implementation.

In terms of the frontend, we ran into difficulties with fonts and formatting. However, we were able to pull it off in the end.

## Accomplishments that we're proud of

We were able to collaborate as a team even though we didn't previously know each other. Writeeasy still has plenty of limitations, but we were able to successfully apply a generative NLP model in a front-end web interface despite having no experience in this area.

## What we learned

Aaron learnt how to use Cohere API and integrate that with a webapp!!

## What's next for Writeeasy

We have many plans for Writeeasy! We hope to include a Chrome extension and improve model effectiveness across a larger range of questions. | winning |

# Trusty Paws

## Inspiration

We believe that every pet deserves to find a home. Animal shelters have often had to euthanize animals due to capacity issues and lack of adoptions. Our objective with Trusty Paws is to increase the rate of adoptions of these animals by increasing the exposure of shelters to potential pet owners as well as to provide an accessible platform for users to browse and adopt their next best friend.

Trusty Paws also aims to be an online hub for all things related to your pet, from offering an online marketplace for pet products to finding a vet near you.

## What it does

Our vision for Trusty Paws is to create a platform that brings together all the players that contribute to the health and happiness of our pawed friends, while allowing users to support local businesses. Each user, shelter, seller, and veterinarian contributes to a different aspect of Trusty Paws.

**Users**:

Users are your everyday users who own pets or are looking to adopt pets. They will be able to access the marketplace to buy items for their pets, browse through each shelter's pet profiles to fill out adoption requests, and find the nearest pet clinic.

**Shelters**:

Shelter accounts will be able to create pet profiles for each of their animals that are up for adoption! Each pet will have its own profile that can be customized with pictures and other fields providing further information on them. The shelter receives an adoption request form each time a user applies to adopt one of their pets.

**Sellers**:

Sellers will be able to set up stores showing all of their product listings, which include, but are not limited to, food, toys, accessories, and many others. Our marketplace will provide the opportunity for local businesses that have been affected by Covid-19 to reach their target audience while abiding by health and safety guidelines. For users, it will be a convenient way to satisfy all the needs of their pet in one place. Finally, our search bar will allow users to search for specific items for a quick and efficient shopping experience.

**Veterinarians**:

Veterinarians will be able to set up a profile for their clinic, with all the pertinent information such as their opening hours, services provided, and location.

## How we built it

For the front-end, React, Bootstrap, and Materialize CSS were used to achieve the visual style of the current website. In fact, the very first step we undertook was to draft an initial prototype of the product in Figma to ensure all the requirements and required features were met. After a few iterations of redesigning, we each dove into developing the necessary individual components, forms, and pages for the website. Once all components were completed, the next step was to route pages together in order to achieve seamless navigation of the website.

We used Firebase within Node.js to implement a framework for the back-end. Using Firebase, we implemented a NoSQL database using Cloud Firestore. Data for users (all types), pets, products, and adoption forms along with their respective fields were stored as documents in their respective collections.

Finally, we used Google's Distance Matrix API to compute distances between two addresses and find the nearest services when necessary, such as the closest vet clinics or the closest shelters.
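The "find the nearest service" step can be illustrated with a short Python sketch. Note the assumptions: the site itself calls Google's Distance Matrix API, which returns travel distances between addresses, whereas this sketch uses the haversine formula for straight-line distance between coordinates as a self-contained stand-in. The function names and the `lat`/`lon` fields are hypothetical.

```python
import math


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two latitude/longitude points."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def nearest(user_location: tuple[float, float], places: list[dict]) -> dict:
    """Return the place (a dict with 'lat' and 'lon') closest to the user."""
    lat, lon = user_location
    return min(places, key=lambda p: haversine_km(lat, lon, p["lat"], p["lon"]))
```

A straight-line estimate like this can differ noticeably from road distance, which is one reason to rely on a routing-aware service in the real application.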

## Challenges we ran into

Although we were successful at accomplishing the major features of the website, we encountered many challenges throughout the weekend. As we started working on Trusty Paws, we realized that the initial implementation was not as user-friendly as we wanted it to be. We then decided to take a step back and return to the initial design phase. Another challenge we ran into was that most of the team was unfamiliar with the development tools necessary for this project, such as Firebase, Node.js, Bootstrap, and Redux.

## Accomplishments that we're proud of

We are proud that our team learned so much over the course of a few days.

## What's next for Trusty Paws

We want to keep improving our users' experience by optimizing the current features. We also want to improve the design and user friendliness of the interface. | Worldwide, there have been over a million cases of animal cruelty over the past decade. With people stuck at home due to COVID and bottling up their frustrations, these numbers aren’t going down anytime soon. To tackle these issues, we built this game, which has features like:

* Gameplay reminiscent of city-building simulators like SimCity.

* Build and manage your animal shelter.

* Learn to manage finances, take care of animals, set up adoption drives.

* Grow and expand your shelter by taking in homeless animals, and giving them a life.

The game is built with the Unity game engine and runs on WebGL, utilizing the power of Wix Velo, which allows us to quickly host and distribute the game across platforms from a single code base. | # Pawsome: Revolutionizing Pet Health and Fitness

Welcome to Pawsome, the cutting-edge platform dedicated to enhancing the health and fitness of our beloved pets. Combining a deep love for pets with the latest in technology, we provide personalized wellness plans to ensure every pet gets the care they deserve.

## About Pawsome

Pawsome isn't just a project—it's a mission to ensure the well-being of pets worldwide. Our platform offers tools and resources for pet owners to maintain and improve their pet's health and fitness, featuring interactive consultations and tailored fitness schedules.

### Our Vision

We envision a world where every pet enjoys a happy, healthy life. Our goal is to prioritize pets' health and wellness, offering accessible, reliable solutions for their care.

## Technology Stack

To deliver a seamless, efficient user experience, Pawsome leverages a comprehensive and robust stack:

* **Frontend**:

+ React: A JavaScript library for building user interfaces.

+ Next.js: A React framework for production; it makes building scalable applications easier.

+ JavaScript: A programming language that allows you to implement complex features on web pages.

+ TypeScript: A typed superset of JavaScript that compiles to plain JavaScript.

+ HTML: The standard markup language for documents designed to be displayed in a web browser.

+ CSS: The language used to describe the presentation of a document written in HTML.

+ Tailwind CSS: A utility-first CSS framework for rapidly building custom designs.

* **Backend**:

+ Flask: A micro web framework written in Python.

+ Python: A programming language that lets you work quickly and integrate systems more effectively.

* **Integrations**:

+ Fetch.ai: An artificial intelligence lab working on creating a decentralized digital world.

+ Agent 1 Using Fetch AI

+ Agent 2 Using Fetch AI

+ Agent 3 Using Fetch AI

+ Together.ai: A platform that provides AI-based solutions.

+ Postman: An API platform for building and using APIs. Postman simplifies each step of the API lifecycle and streamlines collaboration.

+ Bun: An all-in-one JavaScript runtime & toolkit designed for speed, complete with a bundler, test runner, and Node.js-compatible package manager. It served as a JavaScript runtime optimizer for our project.

These technologies are carefully chosen to ensure that Pawsome is not only cutting-edge but also robust and scalable, catering to the dynamic needs of pet owners and their beloved pets.

This tech combination enables dynamic, responsive interactions and complex data processing across our platform.

## Features

### Interactive Pet Wellness AI

Pawsome features a chat button for users to interact with our pet wellness AI. Depending on user needs, it offers appointment booking, medicine orders, or grooming session scheduling.

### Customized Fitness Center

Our Fitness Center allows pet owners to input details like breed, weight, and health, creating a custom fitness plan tailored to their pet's needs, and promoting a holistic wellness approach.

### Custom Push Notifications

To ensure pets and their owners stay on track with their wellness routines, Pawsome sends custom push notifications via email, reminding users of upcoming activities and schedules.

## Future Enhancements

We're committed to continuously improving Pawsome with several key initiatives:

* **Data-Driven Personalization**: By gathering more data, we aim to make even more personalized recommendations, enhancing the effectiveness of wellness plans.

* **E-commerce Platform**: Developing a one-stop destination for all pet needs, from food to toys, ensuring pet owners have access to high-quality products.

* **Network of Veterinarians**: Establishing a network of doctors available for consultations and emergencies, providing peace of mind for pet owners.

## Getting Started

1. Clone repo

`git clone https://github.com/mounika2000/treehacks.git`

`cd treehacks`

`yarn`

2. Install dependencies

`npm install`

3. Then, you can run locally in development mode with live reload:

`yarn dev`

## Contributing

Contributions are welcome! Whether you're a developer, designer, or pet wellness enthusiast, there are many ways to contribute to Pawsome. Please see our contributing guidelines for more details.

## Contact Us

Sai Mounika Peteti: [[email protected]](mailto:[email protected])

Chinmay Nilesh Mahagaonkar: [[email protected]](mailto:[email protected])

Sanket Kulkarni: [[email protected]](mailto:[email protected])

Tanay Godse: [[email protected]](mailto:[email protected]) | winning |

## Inspiration

Ctrl-C, Ctrl-V: The only two buttons a programmer really needs ;)

We enhance the power of Ctrl-C and Ctrl-V, enabling you to paste seamlessly, leverage AI to analyze your clipboard content, visualize what's on your clipboard, or even play a song!

---

## What it does

Clippy automatically analyzes your clipboard and provides intelligent suggestions for enhancing, transforming, and utilizing your clipboard content efficiently in your development workflow.

### Matlab AI

Example use cases:

**Play a song**

1. Copy “Play Twinkle Twinkle Little Star”

2. Press Ctrl+v+1

This will give you code to play Twinkle Twinkle Little Star in MATLAB.

MATLAB will automatically run the generated code to confirm its validity (and so you can enjoy your twinkle twinkle~ :)

**Visualize your clipboard content**

1. Copy something like “graph the weather for past 2 months”

2. Press Ctrl+v+1

This will open MATLAB automatically and display the generated MATLAB code.

Clippy will run the MATLAB code it generated and show you your clipboard content, visualized.

**Run Complex Calculations and Algorithms**

Example use cases:

1. Copy a prompt such as "Multiply matrices ( 0 & 6 & 7 \ 8 & 0 & 7 )( 5 & 8 & 0 )"

2. Press Ctrl+v+1

MATLAB will open and perform the specified calculation of the given expression.

(The answer is ( 48 & 40 ) by the way!)
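As a quick check of the arithmetic above, here is a small Python sketch of the same product. It assumes the second factor is meant as a column vector, and it is only a verification aid: Clippy itself generates and runs MATLAB code for this step.

```python
def mat_vec(matrix: list[list[int]], vector: list[int]) -> list[int]:
    """Multiply a matrix (list of rows) by a column vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


A = [[0, 6, 7],
     [8, 0, 7]]
x = [5, 8, 0]

result = mat_vec(A, x)
print(result)  # [48, 40]
```

The first entry is 0·5 + 6·8 + 7·0 = 48 and the second is 8·5 + 0·8 + 7·0 = 40, matching the answer quoted above.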

### Adobe Express Add-on

Example use cases:

**Create QR Code**

1. Copy a link (Clippy will also suggest this option if you copied a link)

2. Press Ctrl+v+2

This will allow you to insert a QR code in Adobe Express; from there, you may export it and use it at your discretion.

### LLM Integration

Example use cases:

**Summarization**

1. Copy a paragraph

2. Press Ctrl+v+3

This will create a summary of your clipboard content.

**Question and Answer**

1. Copy a question like “What are the top ten biggest tech companies by revenue?”

2. Press Ctrl+v+3

Clippy will answer the question from your clipboard content.
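The shortcut scheme above (Ctrl+v followed by 1, 2, or 3) amounts to a small dispatch table. The Python sketch below illustrates that idea only; the handler names, the URL pattern, and the function names are assumptions, and the real application forwards requests to a FastAPI backend rather than returning tuples.

```python
import re

# Hypothetical handler names keyed by the digit pressed after Ctrl+v.
ACTIONS = {1: "matlab", 2: "adobe_express_qr", 3: "llm"}

URL_PATTERN = re.compile(r"^https?://\S+$")


def suggest_action(clipboard: str):
    """Suggest the QR-code option when the clipboard holds a link, else nothing."""
    if URL_PATTERN.match(clipboard.strip()):
        return 2
    return None


def route(digit: int, clipboard: str) -> tuple[str, str]:
    """Map a shortcut digit to its feature and pass along the cleaned clipboard text."""
    if digit not in ACTIONS:
        raise ValueError(f"no feature bound to Ctrl+v+{digit}")
    return ACTIONS[digit], clipboard.strip()
```

Keeping the mapping in one table means adding a new feature is a matter of registering another digit rather than touching the keyboard-handling code.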

---

## How we built it

**Langchain**: LLM Engine Development

**FastAPI**: backend server that communicates with Adobe Express, the Langchain LLM Engine, and MATLAB.

**OpenAI gpt-4o Model**: LLM for generative AI and instructional tasks

**Auth0**: OAuth authentication provider

**Sauce Labs**: Testing various components of our application

**Adobe Express**: Interaction with clipboard content

**Matlab**: Visualizing clipboard content

**MongoDB**: Vector Database

---

## Challenges we ran into

**When a program deletes itself…**

When we tested the shortcut “ctrl+v+1”, we didn’t want the clipboard content to be pasted while we were triggering a feature. To cancel the default paste from the “ctrl+v” keybinding, we simulated “ctrl-z” on the system; however, it triggered an undo for every second that “ctrl-v” was pressed, and as a result, testing the application caused it to delete itself ;-;

The running joke goes: “Did we make a malware-”

---

## Accomplishments that we're proud of

* Started on Saturday afternoon and finished

* Everyone worked on a piece they weren’t familiar with: the usual “backend peeps” did frontend this time, and vice versa. It was a good learning experience for all

* Slept

* Had fun!

---

## What's next for Clippy

* More Adobe Express features!

* Streamline the deployment process so setup is easy for all users

--- | ## 💡Inspiration

Have you ever hit a mental **BufferOverflow**? Spending countless hours in front of your screen, sifting through data, only to realize you're drowning in information beyond your brain's capacity? 🤯

In our current era of information overload, researchers, professionals, and curious minds alike struggle not just with the sheer volume of data, but with identifying what's truly relevant. With an average of **250 academic articles read per researcher annually**, the challenge isn't just in consuming this information—it's in effectively filtering through the noise to find the gems that matter. Often, this quest ends with valuable insights lost in isolation, making it difficult to see the big picture and connect the dots of our knowledge.

Our team has experienced first-hand the frustration of juggling disjointed tools. The critical missing piece? A way to not only manage but intelligently filter the flood of information to highlight what's relevant to our unique knowledge landscape.

## 😄What It Does

Dr. Clippy asks questions to gauge your knowledge by communicating with you through **voice or text**, identifies gaps, and suggests papers tailored to you from online searches. It then leverages spatial computing to build and visualize a **3D spatial mind map** of your existing knowledge. The suggested papers appear as new nodes that connect to your knowledge graph, showing how new information relates to papers you already know, so you can decide whether to read them and expand your knowledge.

Here’s how **Dr. Clippy** stands out:

* **Personalized Discovery**: Dr. Clippy recommends academic papers and resources tailored to your knowledge base and gaps.

* **Visual Knowledge Mapping**: Dr. Clippy visualizes how new information slots into your existing knowledge, highlighting connections and sparking innovation through a spatial 3D mind map.

* **Collaborative and Interactive**: Dr. Clippy is your AI assistant and research partner that you can talk to. Beyond solo research, Dr. Clippy enables shared knowledge building with your research colleagues. Edit, expand, and share collective wisdom in a mixed reality space powered by Apple Vision Pro.

## 💻 How We Built It

We created **Dr. Clippy** to be an interactive, AI-driven research assistant, featuring the iconic Clippy from the Windows XP era. Alongside Clippy, there are 8 other avatars that echo MS Windows, offering a variance in agent flavor. We utilized maps to animate these avatars, with JavaScript enabling a range of actions. Our web application is developed using Flask, integrating JavaScript, HTML, and Python for a comprehensive user experience.

When a user has an existing knowledge graph, we visualize it in 3D and store the graph's nodes and edges in a database for persistence and easy manipulation. Users can upload papers they've read, and our system applies cosine similarity to analyze how the abstracts relate to one another. This approach helps us build an initial knowledge graph, offering users a head start in integrating their research into our platform.
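To show what "cosine similarity between abstracts" means in practice, here is a minimal Python sketch that turns a set of abstracts into graph edges. It rests on assumptions: the abstracts are vectorized as plain word counts (the project may well have used embeddings instead), and the `threshold` value and function names are hypothetical.

```python
import math
import re
from collections import Counter
from itertools import combinations


def vectorize(text: str) -> Counter:
    """Bag-of-words vector: word -> count (an assumed, simple representation)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_edges(abstracts: dict[str, str], threshold: float = 0.3):
    """Connect every pair of papers whose abstracts are similar enough."""
    vectors = {title: vectorize(text) for title, text in abstracts.items()}
    edges = []
    for (t1, v1), (t2, v2) in combinations(vectors.items(), 2):
        score = cosine(v1, v2)
        if score >= threshold:
            edges.append((t1, t2, round(score, 3)))
    return edges
```

Each returned tuple is one edge of the initial knowledge graph, with the similarity score available as an edge weight or label.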

The research assistant has voice and text input functionalities, employing the Whisper API to seamlessly convert voice to text. We've innovated in speeding up text-to-speech conversion by parsing sentences individually, achieving a 40% reduction in response wait times. To signal the end of a conversation, we crafted detailed prompts with keywords, culminating in a research question that highlights gaps in the user's knowledge base, prompting an online search.
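The sentence-by-sentence approach can be sketched as a generator that hands each sentence to the speech engine as soon as it is split off, so playback of the first sentence can begin while later ones are still being synthesized. This is a simplified Python illustration: the `synthesize` callable is a hypothetical placeholder for whatever text-to-speech call is used, and the regular expression is a naive sentence splitter.

```python
import re
from typing import Callable, Iterator


def split_sentences(text: str) -> list[str]:
    """Split on whitespace that follows sentence-ending punctuation."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def speak_incrementally(text: str,
                        synthesize: Callable[[str], bytes]) -> Iterator[bytes]:
    """Yield audio one sentence at a time instead of waiting for the full reply."""
    for sentence in split_sentences(text):
        yield synthesize(sentence)
```

A caller would iterate over `speak_incrementally(reply, tts_function)` and play each chunk as it arrives; the time to first audio then depends on the length of the first sentence rather than the whole response.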

This search process utilizes the Google Search API, specifically through the Google Scholar Engine, to identify personalized paper recommendations tailored to the user's research interests. We then conduct a similarity search among the top three new personalized papers and the user's existing knowledge base. This allows us to determine how these new papers interlink with the foundational knowledge, visually representing these connections through nodes and text on their edges in the user's knowledge graph.

We designed and implemented a 3D mind map in Unity, providing an intuitive overview of the user's knowledge base. This visualization represents categories, research fields, and resources, along with their interconnections. Using visionOS and Unity Polyspatial tools, we enabled interactive manipulation of the knowledge graphs for collaborative sharing, editing, and expansion, embodying a plug-and-play approach to collective knowledge building. Additionally, we included an entertainment mode for users looking for

## 🚧 Challenges We Ran Into

Our project encountered several technical and integration challenges across various aspects of development:

* **Animated Agents Integration**: Achieving a seamless user experience with animated agents in Flask proved challenging.

* **Real-time Text-to-Speech**: We faced hurdles in generating near real-time text-to-speech responses. To manage data streams efficiently and incorporate non-verbal cues like “uh-huh” and “hmm” to mask processing delays, we had to use multithreading.

* **Functional Memory in Interactions**: Implementing a functional memory in interactions with OpenAI presented difficulties in reducing latency and improving responsiveness.

* **Similarity Search with Chroma's Library**: We encountered limitations in returning distinct results due to insufficient documentation, leading to redundant data.

* **Chroma Vector Databases Management**: Managing multiple Chroma vector databases was challenging due to the need for specific labeling for persistence and local storage.

* **Multi-threading Challenges**: We faced issues with multi-threading, especially when dealing with global variables.

* **Transition from OpenAI Assistant to Chat**: The transition impacted performance due to initial unfamiliarity with the latest models.

* **Web Search Functionality**: Initial inconsistency and a steep learning curve with insufficient documentation made web search integration challenging, especially when combined with Langchain and other components.

* **Intercommunication Framework Design**: Designing a complex framework for communication between Vision Pro, web apps, and external APIs was necessary to maintain consistency between audio threads and visual output in a web server and Flask environment.

* **Integration with Unity and Apple Vision Pro**: Integrating the web applications with Unity and Apple Vision Pro was particularly challenging without prior examples or established practices.

* **Network Connection on Apple Vision Pro**: Establishing an inbound network connection on the Apple Vision Pro posed the most significant challenge due to compatibility issues between Unity's .NET framework and iOS hardware.

## 🏆 Accomplishments We're Proud Of

* **First Research Assistant App for Apple Vision Pro**: We pioneered the first research assistant application specifically designed for Apple Vision Pro, setting a new standard in interactive and immersive research tools.

* **Interactive Spatial Knowledge Graph**: Conceptualized and implemented an innovative, shareable spatial knowledge graph that allows for a dynamic way to represent, search, filter, add, and share knowledge.

* **External Device Interaction with Vision Pro**: Pioneered methods allowing external devices (laptops, IoT devices, etc.) to interact with Vision Pro, greatly expanding the potential of spatial computing and enabling impactful downstream use cases.

* **Spatial Research Knowledge Graph App**: Introduced the world's first spatial Research Knowledge Graph Application, achieving two-way communication between PCs and Apple Vision Pro.

* **Novel Text-to-Audio Speed Algorithm**: Implemented a groundbreaking algorithm to accelerate text-to-audio generation, significantly enhancing the user experience.

* **Engaging Animations for AI Assistants**: Developed captivating animations for AI assistants, fostering user engagement and enhancing the overall user experience while establishing distinct personalities for each AI research assistant.

* **Integrated System**: Successfully integrated a comprehensive system encompassing speech recognition, speech generation, AI assistant functionality, AI-driven information retrieval and filtering, Unity modeling and interaction, communication with Apple Vision Pro, web server communication, and advanced, interactive web UI.

## 📚 What We Learned

As a team, we gained valuable insights and developed a range of skills throughout the project:

* **Mastering JavaScript Animations**: Learned the intricacies of creating engaging animations using JavaScript.

* **System Design Importance**: Realized the critical importance of thorough system design before commencing development to avoid wasted efforts.

* **Tool Proficiency**: Became proficient with tools like Whisper, OpenAI's Assistant and Chat, LangChain, vector databases, and similarity search techniques, especially in applications for Apple Vision Pro through visionOS.

* **Code Simplicity**: Understood the crucial importance of maintaining a simple code structure for ease of debugging and maintenance.

* **Clear Communication**: Learned the significance of clear communication and regular check-ins within the team to ensure seamless integration of different project components.

## 🔮 What's Next for Dr. Clippy

The future of Dr. Clippy extends well beyond academic research:

* **Consulting Applications**: Consultants could leverage Dr. Clippy to navigate through industry reports, market analyses, and case studies, constructing comprehensive knowledge graphs that reveal trends, gaps, and opportunities in their specific sectors.

* **Healthcare Integration**: Healthcare professionals could use it to bridge the latest research findings with clinical guidelines and patient data, enhancing diagnosis and treatment plans with evidence-based insights.

* **Personalized Research Experience**: By integrating with authors' publication records, Dr. Clippy could offer a unique perspective on how their research fits within the broader context of existing work, encouraging cross-disciplinary innovation and collaboration.

* **Broadening Horizons**: The potential of Dr. Clippy is to not only streamline information discovery but also to foster a deeper, more intuitive understanding of complex data across various fields and industries. | ## Inspiration

Ideas for interactions from:

* <http://paperprograms.org/>

* <http://dynamicland.org/>

but I wanted to go from the existing computer down, rather than from the bottom up, and make something that was a twist on the existing desktop: web browser, terminal, chat apps, keyboard, windows.

## What it does

Maps your Mac desktop windows onto pieces of paper + tracks a keyboard and lets you focus on whichever one is closest to the keyboard. Goal is to make something you might use day-to-day as a full computer.
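The focus rule described above (focus whichever paper is closest to the keyboard) can be sketched in a few lines. This is only an illustration: the function name `closest_window`, the window ids, and the pixel coordinates are all hypothetical, and it assumes the vision step has already produced a centroid for the keyboard and for each tracked paper.

```python
import math


def closest_window(keyboard_center, window_centers):
    """Return the id of the window whose paper centroid is nearest the keyboard.

    keyboard_center: (x, y) centroid of the tracked keyboard.
    window_centers: dict mapping a window id to the (x, y) centroid of its paper.
    Returns None when no papers are currently tracked.
    """
    if not window_centers:
        return None
    return min(
        window_centers,
        key=lambda wid: math.dist(keyboard_center, window_centers[wid]),
    )


# Hypothetical centroids in camera pixels.
papers = {
    "browser": (120.0, 80.0),
    "terminal": (400.0, 310.0),
    "chat": (640.0, 90.0),
}
focused = closest_window((420.0, 360.0), papers)
print(focused)
```

Whatever id comes back would then be handed to the macOS window-focus hook.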

## How I built it

A webcam and pico projector mounted above desk + OpenCV doing basic computer vision to find all the pieces of paper and the keyboard.

## Challenges I ran into

* Reliable tracking under different light conditions.

* Feedback effects from projected light.

* Tracking the keyboard reliably.

* Hooking into macOS to control window focus

## Accomplishments that I'm proud of

Learning some CV stuff, simplifying the pipelines I saw online by a lot and getting better performance (binary thresholds are great), getting a surprisingly usable system.

Cool emergent things like combining pieces of paper + the side ideas I mention below.

## What I learned

Some interesting side ideas here:

* Playing with the calibrated camera is fun on its own; you can render it in place and get a cool ghost effect

* Would be fun to use a deep learning thing to identify and compute with arbitrary objects

## What's next for Computertop Desk

* Pointing tool (laser pointer?)

* More robust CV pipeline? Machine learning?

* Optimizations: run stuff on GPU, cut latency down, improve throughput

* More 'multiplayer' stuff: arbitrary rotations of pages, multiple keyboards at once | losing |

## Inspiration

Oftentimes, roommates deal with a lot of conflicts due to differences in living habits and aren't comfortable sorting things out by confronting one another. This problem creates unwanted tension between individuals in the household and usually ends up leading to a poor living experience.

## What it does

Broomies is a mobile app that creates a fun environment to help roommates assign chores for one another, anonymously. Assigned chores can be seen by each roommate, with a separate tab to view your own assigned chores. Once a chore is created by a roommate, it randomly cycles weekly to ensure nobody gets repeated chores. Completing chores on time gives roommates points that get tallied up per month. There is also a way to track expenses with a built-in financing tool to record each transaction a roommate has made for their household. At the end of each month, the monthly sum gets split based on roommate performance, and the top roommate(s) get to pay the least.
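One way the performance-based split could work is sketched below. The write-up does not give the actual formula, so the weighting here (each share proportional to `1 / (points + 1)`) is purely an assumption, as are the function name and the sample data.

```python
def split_expenses(total, points):
    """Split `total` so that roommates with more chore points pay less.

    Assumption (not from the write-up): each share is proportional to
    1 / (points + 1), so the top scorer gets the smallest share.
    """
    weights = {name: 1.0 / (p + 1) for name, p in points.items()}
    weight_sum = sum(weights.values())
    return {name: round(total * w / weight_sum, 2) for name, w in weights.items()}


# Hypothetical month: 800 in shared expenses, three roommates.
shares = split_expenses(800.0, {"ana": 9, "ben": 4, "cy": 1})
for name, amount in shares.items():
    print(f"{name} pays {amount:.2f}")
```

A scheme like this still charges everyone something, which fits the goal of rewarding chores without making expenses free for the top roommate.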

## How we built it

Our project uses a React Native frontend with a Go backend and CockroachDB as our database. All our front-end components are designed and implemented in-house. Currently, the app is hosted locally, but in the future we plan to host our backend on Google Cloud and hopefully publish a polished version on the App Store. Finally, we used Git and GitHub for version control and collaboration.

## Challenges we ran into

1. This was our first time developing a mobile application and working with CockroachDB. A big challenge was adjusting and understanding the nuances of mobile development

2. Figuring out an equitable method for distributing points that rewarded doing chores but didn't promote sabotaging your roomies took much polling from fellow hackers

3. Our app covered a lot of features, and we would often run into bugs involving chore management and transaction splitting

## Accomplishments that we're proud of

1. We are really proud of managing to create a full-stack, functioning mobile app as not only first-time mobile developers, but CockroachDB users as well.

2. Entering the hackathon, our goal was to create something that would make any part of our life easier, and we believe Broomies does just that. We are proud to build an app that we hope to actually use in the future.

3. We are really proud of the overall design and theme of Broomies, and how effectively we were able to translate our designs into reality

## What we learned

1. The power of design, both in components and in data structures. Before we started, we took the time to plan out our data structures and relationships. This helped us flesh out a scope for our project and effectively divide work amongst the team.

2. Lots of experience working with new technologies: from iOS and React Native to leveraging CockroachDB Serverless to quickly turn an idea into a prototype

3. How to effectively ideate: Going into the hackathon, not having a good idea was our biggest concern, but once we learned to let go of finding "The One" hackathon idea, and instead explored every possible avenue, we were able to flesh out ideas that were relevant to our life.

## What's next for Broomies

After fleshing out user management and authentication, we want to deploy the Broomies app on the App store, as well as host our backend on Google Cloud. Also, we want to add the ability to react/respond to task completions, so your roomies can evaluate your tasks. | ## Inspiration

Our inspiration stemmed from the desire to implement a machine learning / A.I. API.

## What it does

Taper analyzes images using IBM's Watson API and our custom classifiers. This data is used to query the USDA food database and return nutritional facts about the product.

## How we built it

Using Android Studio and associated libraries, we created the UI in the form of an Android app. To improve Watson's image recognition, we created our own custom classifier to recognize specific product brands.

## Challenges we ran into

For most of us this was our first time using both Android Studio and Watson, so there was a steep initial learning curve. Additionally, we attempted to use Microsoft Azure alongside Watson but were unsuccessful.

## Accomplishments that we're proud of

-Successfully integrating the Watson API into an Android app.

-Training our own visual recognition classifier using Python and Bash scripts.

-Retrieving a product's nutritional information based on data from visual recognition.

## What we learned

We experienced firsthand the difficulty of product integration. We also learned how to better consume APIs.

## What's next for taper

-Creating a cleaner UI

-Text analysis of nutritional data

-day to day nutrition tracking | ## Inspiration

Being certified in Standard First Aid, we personally know the great difference that quick intervention can have on a victim. For example, according to the American Heart Association, if CPR is performed in the first few minutes of cardiac arrest, it can double or triple a person's chance of survival. Unfortunately, only 46% of people who suffer Cardiac Arrest outside of hospitals get the immediate aid they need before professional help arrives. Thus was the creation of Heal-A-Kit.

## What it does

Heal-A-Kit is an app that can identify serious health issues like Heart Attacks, Strokes, Seizures, etc. through the input of various symptoms and provide multiple treatment plans. Additionally, it offers users a step-by-step guide of CPR and encourages them to continue compressions until professional help arrives.

## How we built it

To program our Discord bot we used Python with Discord.py, a Python wrapper for the Discord API. We chose Discord.py over Discord.js because Python is a more familiar language for us than JavaScript and its runtimes such as Node.js.

## Challenges we ran into

Aside from simple syntax errors, we had an issue at first making the bot's messages aesthetically pleasing. We then realized that using embeds would be a clean way to display messages from the bot. Another challenge was the logic in our compare command. Originally it would break out of the loop after finding the first condition that matched the symptom. To fix this, we wrote a for loop that keeps searching through our data for any further matching conditions. If the resulting string is empty (i.e., no conditions match the symptom), it returns "No conditions match the given symptom." instead of an empty string.
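The fixed compare logic described above might look roughly like this. The `CONDITIONS` table is hypothetical placeholder data for the sketch (not the bot's real dataset), and the function is shown without any Discord plumbing.

```python
# Hypothetical placeholder data: condition name -> set of symptoms.
CONDITIONS = {
    "heart attack": {"chest pain", "shortness of breath", "nausea"},
    "stroke": {"face drooping", "slurred speech", "arm weakness"},
    "seizure": {"convulsions", "loss of consciousness"},
    "fainting": {"loss of consciousness", "dizziness"},
}


def compare(symptom):
    """Return every condition listing `symptom`, not just the first match."""
    symptom = symptom.strip().lower()
    # Scan the whole table instead of breaking out after the first hit.
    matches = [name for name, symptoms in CONDITIONS.items() if symptom in symptoms]
    if not matches:
        return "No conditions match the given symptom."
    return ", ".join(matches)


print(compare("Loss of consciousness"))
print(compare("hiccups"))
```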

## Accomplishments that we're proud of

Being able to make a Discord bot for the first time was a massive accomplishment. In addition, the fact that our Discord bot is fully functional and helpful in a real life scenario is something to be proud of.

## What we learned

We learnt how to use Discord's API wrapper for Python (Discord.py) and how to use modules in Python. We also learned how to use object-oriented programming instead of functional programming for the Discord bot, which makes the code cleaner and more efficient.

## What's next for Heal-A-Kit

Currently, we have a fully functioning Discord bot, and a prototype for our mobile application. In the future, we plan on adding more health conditions and their respective symptoms and treatments to “heal” as many people as possible. We also plan on further optimizing our search bar, so that even if the scientific or exact wordings are not used, the app can detect the symptom and thus be able to help the victim. | partial |

## Want to find cheap flights to major cities?

This webapp will list the top five cities in terms of cheapest options from your departure location

Using HTML, CSS, Node.js, React, Redux, Python, and an Amadeus API, we created a webpage that uses past airline data to predict future flight prices to the destination cities.

When trying to use the python script to access data and pass along that data to the front, we ran into many errors and issues. It took 3 different mentors to help us out and finally after a couple hours, we finally figured out how to resolve the issue.

## Accomplishments that we're proud of

We are proud that we were able to combine our skills and make a product that almost worked exactly how we wanted it to. We are happy we got to learn so many new technologies and combine them all into a working product. Not only this, but acknowledging each other's strengths and weaknesses really helped us come together and work persistently as a team.

We didn't know much about the different javascript frameworks, but through persistence and trial-and-error we were able to figure out how they interacted and got most of the platform running from front-end to back-end.

We will be looking to figure out prices for more than just a limited amount of cities, based upon a limited amount of inputs. We would love to expand the number of inputs to better suit the consumer's needs and be able to also predict where they would like to go next. | ## Inspiration

Oftentimes, we travel to a destination based on its popularity, like New York or Hawaii, rather than looking for places that we would enjoy the most. So we decided to create a web application that recommends travel destinations to users based on images they upload reflecting their own interests. Each suggestion is tailored to the query.

## What it does

This website will generate a list of suggestions based off a user inputted image via url or upload. Using Microsoft Azure Computer Vision, the website will generate a list of suggested travel destinations, including lowest roundtrip airfare and predicted non-flight related vacation costs, by analyzing the tags from Computer Vision.

## How we built it

We have three main files: the database of locations, the HTML file for the website, and the JavaScript file that defines the methods and calls the API. The HTML website receives user input and returns the suggestions. The database contains formatted data about various possible travel destinations. The JavaScript file gathers information from each inputted photo and assigns the photo scores in various categories. Those scores are then compared to those in the database, and using factors such as cost and location type, we generate a list of 7 travel recommendations.
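The score comparison could be sketched as a nearest-match ranking. This is a simplified illustration in Python rather than the project's JavaScript: it assumes squared-difference distance over category scores, ignores the cost and location-type factors, and uses made-up category names and destinations.

```python
def recommend(photo_scores, destinations, limit=7):
    """Rank destinations by how closely their category scores match the photo.

    photo_scores: dict of category -> score derived from the image tags.
    destinations: dict of destination name -> dict of category -> score.
    Missing categories are treated as 0.0 on either side.
    """
    def distance(dest_scores):
        categories = set(photo_scores) | set(dest_scores)
        return sum(
            (photo_scores.get(c, 0.0) - dest_scores.get(c, 0.0)) ** 2
            for c in categories
        )

    ranked = sorted(destinations, key=lambda name: distance(destinations[name]))
    return ranked[:limit]


# Hypothetical data for illustration only.
database = {
    "Banff": {"mountain": 0.9, "beach": 0.0},
    "Maui": {"mountain": 0.2, "beach": 0.9},
    "Paris": {"city": 0.9},
}
photo = {"beach": 0.8, "mountain": 0.1}
print(recommend(photo, database, limit=2))
```

Because every destination is scored once and then sorted, the cost grows only with the size of the database, which matters for keeping photo processing quick.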

## Challenges we ran into

While trying to acquire a suitable database, we attempted to scrape Wikipedia articles using Node.js. After using cheerio and building the basic structure, we found that the necessary information was located in different parts of each article. We were ultimately unable to write code that could filter through the articles and extract the information, and had to hand-generate a database after 5 or more hours of work. Another challenge was finding an efficient comparison method so our website did not take too long to process photos.

## Accomplishments that we're proud of

Our website accomplished our main goal. It is able to take in an image, process the tags, and return a list of possible destinations. We also have a basic algorithm that maximizes the accuracy of our website given the current database format.

## What we learned

Since our team had first-time hackers who were also first-time JavaScript coders, we learned a great deal about how the language works and about standards for coding websites. We also learned how to share and update code through GitHub. More specific to our project, this was the first time we used the Microsoft Azure and Amadeus APIs, and we learned how to implement them in our code.

## What's next for Travel Match

We want to build a more comprehensive database, that includes more locations with more specific data. We also want to come up with a more efficient and accurate comparison algorithm than we currently have. | ## Inspiration

We know the struggles of students. Trying to get to that one class across campus in time. Deciding what to make for dinner. But there was one that stuck out to all of us: finding a study spot on campus. There have been countless times when we wander around Mills or Thode looking for a free space to study, wasting our precious study time before the exam. So, taking inspiration from parking lots, we designed a website that presents a live map of the free study areas of Thode Library.

## What it does

desk.lib is a network of small mountable microcontrollers that use ultrasonic sensors to check whether a desk or study spot is occupied. It also uses machine learning to determine peak hours and suggested availability from the aggregated sensor data. A webpage presents a live map, along with peak hours and suggested availability.

## How we built it

We used a Raspberry Pi 3B+ to receive distance data from an ultrasonic sensor and used a Python script to push the data to our database running MongoDB. The data is then pushed to our webpage running Node.js and Express.js as the backend, where it is updated in real time to a map. Using the data stored on our database, a machine learning algorithm was trained to determine peak hours and determine the best time to go to the library.
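As a rough sketch of the sensing side, a desk can be treated as occupied when the ultrasonic reading falls below some distance. The 60 cm threshold, the function names, and the sample readings are all assumptions, and the peak-hour step below is a plain count of occupied readings per hour, a simple stand-in for the trained model rather than the model itself.

```python
from collections import Counter


def is_occupied(distance_cm, threshold_cm=60.0):
    """Treat a desk as occupied when something is closer than the threshold."""
    return distance_cm < threshold_cm


def peak_hours(readings, top_n=3):
    """Return the hours with the most occupied readings, busiest first.

    readings: iterable of (hour_of_day, distance_cm) pairs.
    """
    busy = Counter(hour for hour, distance in readings if is_occupied(distance))
    return [hour for hour, _ in busy.most_common(top_n)]


# Hypothetical readings: (hour, distance in cm).
samples = [(9, 150), (10, 40), (10, 35), (14, 50), (14, 45), (14, 30), (15, 200)]
print(peak_hours(samples, top_n=2))
```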

## Challenges we ran into

We had a **life-changing** experience learning back-end development, delving into new frameworks such as Node.js and Express.js. Although we were comfortable with front-end design, linking the front end and the back end together so that the web app functioned as intended was challenging. For most of the team, this was the first time dabbling in ML. While we were able to find a Python library to help us train the model, connecting the model to our web app with Flask was a surprising challenge. In the end, we persevered through these challenges to arrive at our final hack.

## Accomplishments that we are proud of

We think that our greatest accomplishment is the sheer amount of learning and knowledge we gained from doing this hack! Our hack seems simple in theory but putting it together was one of the toughest experiences at any hackathon we've attended. Pulling through and not giving up until the end was also noteworthy. Most importantly, we are all proud of our hack and cannot wait to show it off!

## What we learned

Through rigorous debugging and non-stop testing, we gained more experience with JavaScript and its various frameworks such as Node.js and Express.js. We also got hands-on experience with MongoDB, machine learning, HTML, and scripting, and learned how these tools are applied.

## What's next for desk.lib

If we had more time to work on this hack, we would have been able to increase cost effectiveness by branching four sensors off one chip. Also, we would implement more features to make an impact in other areas such as the ability to create social group beacons where others can join in for study, activities, or general socialization. We were also debating whether to integrate a solar panel so that the installation process can be easier. | losing |

## Inspiration

So, like every hackathon we've done in the past, we wanted to build a solution based on the pain points of actual, everyday people. So when we decided to pursue the Healthtech track, we called the nurses and healthcare professionals in our lives. To our surprise, they all seemed to have the same gripe: there was no centralized system for overviewing the procedures, files, and information about specific patients in a hospital or medical practice setting. Even a quick look through Google showed that there wasn't any new technology that was really addressing this particular issue. So, we created UniMed (united medical) to offer an innovative alternative to the outdated software that exists or, for some practices, pen and paper.

While this isn’t necessarily the sexiest idea, it’s probably one of the most important issues to address for healthcare professionals. Looking over the challenge criteria, we couldn’t come up with a more fitting solution – what comes to mind immediately is the criterion about increasing practitioner efficiency. The ability to have a true CMS – not client management software, but CARE management software – eliminates any need for annoying patients with a barrage of questions they’ve answered a hundred times, and allows nurses and doctors to leave observations and notes in a system where they can be viewed from other care workers going forward.

## What it does

From a technical, data-flow perspective, this is the gist of how UniMed works: Solace connects our React-based front end to our database. While we normally would have built a SQL database, or perhaps gone the NoSQL route and leveraged MongoDB, due to time constraints we're using JSON for simplicity's sake. So while the JSON store acts much like a typical REST API, we're pulling real-time data with Solace's functionality. Any time an event-based subscription fires (for example, a nurse updates a patient's record to report that their post-op check-up went well and they should continue on their current dosage of medication), that value, in this case a comment, is passed to the event, updating our React app by populating the comments section of the patient's record with the new comment.
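The event flow described above follows a publish/subscribe pattern. The sketch below is a tiny in-memory stand-in written for illustration only: it does not use Solace's API, and the `EventBus` class, the topic string, and the record layout are all hypothetical.

```python
class EventBus:
    """Minimal in-memory publish/subscribe bus (not the Solace API)."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, topic, callback):
        """Register `callback` to run for every payload published on `topic`."""
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic, payload):
        """Deliver `payload` to every subscriber of `topic`."""
        for callback in self._subscribers.get(topic, []):
            callback(payload)


# Hypothetical patient record; the UI would render its comments list.
patient_record = {"id": "p-001", "comments": []}

bus = EventBus()
bus.subscribe("patients/p-001/comments", patient_record["comments"].append)

# A nurse posts an update; every subscriber receives the new comment.
bus.publish(
    "patients/p-001/comments",
    "Post-op check-up went well; continue current dosage.",
)
print(patient_record["comments"])
```

In the real app the broker sits between separate processes, so the subscriber would be the React client rather than a local list.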

## How we built it

We all learned a lot at this hackathon. Jackson had some Python experience but learned some HTML5 to design the basic template of our log-in page. I had never used React before, but spent several hours watching YouTube videos (the React workshop was also very helpful!), and Manny mentored me through some of the React app creation. Augustine is a marketing student, but it turns out he has a really good eye for design, and he was super helpful with mockups and wireframes!

## What's next for UniMed

There are plenty of cool ideas we have for integrating new features - the ability to give patients a smartwatch that monitors their vital signs and pushes that bio-information to their patient "card" in real time would be super cool. It would be great to also integrate scheduling functionality so that practitioners can use our program as the ONLY program they need while they're at work - a complete hub for all of their information and duties! | ## Inspiration

Frustrated by the traditional MBTI's barrage of questions? So were we. Introducing MBTIFY: our web application streamlines your MBTI assessment using advanced Natural Language Processing and Machine Learning. Forget the indecision of Likert scales; MBTIFY's conversational AI prompts you with tailored, open-ended questions. Answer naturally—the AI adapts, selecting queries to pinpoint your personality type with ease. Welcome to a smarter, streamlined path to self-discovery.

## What it does

MBTIFY is a web application designed to administer MBTI personality tests in a more efficient and streamlined manner. Unlike traditional MBTI tests that require answering hundreds of questions, MBTIFY aims to achieve accurate results with fewer than 10 short-answer questions. Users can respond to these questions via text or audio input. The application leverages Natural Language Processing (NLP) and Machine Learning (ML) technologies to analyze the answers and determine the user's MBTI type.

## How we built it

Frontend: Reflex for a user-friendly interface.

Voice Recognition: Converts spoken answers to text.

NLP: Cohere and OpenAI analyze responses for emotional and syntactic insights.

ML: InterSystems IntegratedML uses 60,000+ Kaggle MBTI questionnaire responses to train a predictive AutoML model. This model interprets Likert scale responses, determining MBTI type with a confidence level. Immediate results are provided if a confidence threshold is met; otherwise the system dynamically selects further clarifying questions.
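The threshold decision can be sketched as a small function. The 0.8 threshold, the function name, and the rule for picking the next question (simply the first remaining one) are assumptions for illustration; the write-up does not say how questions are actually chosen.

```python
def next_step(type_probabilities, remaining_questions, threshold=0.8):
    """Decide whether to report a result or ask another question.

    type_probabilities: dict of MBTI type -> model confidence (0..1).
    remaining_questions: list of question prompts not yet asked.
    Returns ("result", mbti_type) or ("ask", question).
    """
    best_type = max(type_probabilities, key=type_probabilities.get)
    confident = type_probabilities[best_type] >= threshold
    if confident or not remaining_questions:
        return ("result", best_type)
    # Placeholder selection rule: take the next unused question.
    return ("ask", remaining_questions[0])


# Hypothetical model outputs.
print(next_step({"INTJ": 0.55, "INTP": 0.40}, ["How do you plan a trip?"]))
print(next_step({"INTJ": 0.86, "INTP": 0.10}, ["How do you plan a trip?"]))
```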

## Challenges we ran into

Reflex on M1 Macs: Faced compatibility issues with the Reflex UI framework on M1 chipset Macs, requiring optimization for cross-platform functionality.

SQLAlchemy with sqlalchemy-iris: Experienced limitations in integrating SQLAlchemy with the sqlalchemy-iris dialect, leading to custom code solutions for effective database operations.

IRIS Cloud Connectivity: Encountered difficulties in connecting to the IRIS cloud server, necessitating adjustments in network and security settings for reliable deployment.

Model Training Time: The machine learning model training took over 6 hours due to the large dataset and complex algorithms, prompting a need for pipeline optimization to enhance efficiency.

## Accomplishments that we're proud of

Responsive Design: Developed with Reflex for an adaptive user experience.

Real-Time ML Analysis: InterSystems algorithms provide instant MBTI prediction with a confidence indicator using SQL. We use an LLM to infer Likert scores from user responses to our conversational prompts, then feed these as features into our predictive model, thereby combining discriminative and generative AI models.

Smart Questioning: A dynamic question bank evolves based on user inputs, distinguishing similar MBTI profiles through adaptive questioning and iterative model refinements with updated scores.

## What we learned

Stay on Track: Consistently ensure that you are on the right path by periodically reviewing your goals and progress.

Purposeful Implementation: Before committing to a new feature or task, evaluate its significance to avoid exerting effort on non-impactful activities.

## What's next for MBTIFY

Audio Transcribing: Our roadmap includes the implementation of an advanced audio transcribing feature. This will allow us to extend our voice recognition capabilities to capture even more nuanced responses from users, further refining our MBTI analysis.

Emotion Detection with HumeAI: We plan to integrate HumeAI technology for real-time emotion detection based on the user's voice. This will add an additional layer of depth to the analysis, enabling us to distinguish between closely matched MBTI types with a greater degree of accuracy.

Optimized Machine Learning Algorithms: We aim to continually fine-tune our existing machine learning models within InterSystems to accommodate these new features, ensuring that our confidence levels and MBTI type predictions are as accurate as possible.

Dynamic Questioning 2.0: Building on our adaptive questioning framework, we will incorporate feedback loops that consider not only the content of the user’s responses but also the detected emotional tone. This will make our question selection even more responsive and targeted. | ## Inspiration

Reading about issues with patient safety was... not exactly inspiring, but eye-opening. Problems like bed sores that come down to human error (understaffing, forgetfulness, etc.) seemed like things that could easily be tracked to give patients a better quality of life. So we decided to make an app that tracks patient wellness and needs: not just concrete items, but all the necessary follow-up items as well.

We understand that schedulers for more concrete events like appointments already exist, but something that can remind providers to check up on patients in 3 days to see if they have had any side effects to their new prescription or any other task would be helpful.

## What it does

The Med-O-Matic keeps track of patient needs and when they're needed, and sets those needs up in a calendar and matching to-do list for a team of healthcare providers in a hospital to take care of. Providers can claim tasks that they will get to, and can mark them down as they go throughout their day. This essentially serves as a scheduled task-list for providers.

## How we built it

To build the frontend, we used Vue.js. We have a database holding all the tasks on AWS DynamoDB.

## Challenges we ran into

Getting started was a bit difficult and we weren't really sure which direction we should take for Med-O-Matic. There were a lot of uncertainties about what exactly would be best for our application, so we had to delve in a bit deeper by thinking about what the current process is like at hospitals and clinics, and finding areas for improvement. This has led us to addressing a process issue in task assignment to reduce the number of errors associated with inattentiveness.

## Accomplishments that we're proud of

What makes our application different than others is that you can sequence tasks and use these sequences as a template. For example, a procedure like heart-surgery always has required follow up steps. You can create a heart-surgery template, that will be used to set all the required follow-up steps. After the template is created, we can easily reapply that template however many times we want!
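A template like the one described above can be modelled as a list of tasks with day offsets from the procedure date. The task names, offsets, and field names below are hypothetical examples, not the app's actual schema.

```python
from datetime import date, timedelta


def apply_template(template, patient, start):
    """Expand a template into dated follow-up tasks for one patient.

    template: list of (task_name, days_after_start) pairs.
    start: date of the procedure the follow-ups are counted from.
    """
    return [
        {"patient": patient, "task": name, "due": start + timedelta(days=offset)}
        for name, offset in template
    ]


# Hypothetical heart-surgery template.
heart_surgery = [
    ("Check incision site", 1),
    ("Review medication side effects", 3),
    ("Follow-up appointment", 14),
]

tasks = apply_template(heart_surgery, "patient-42", date(2024, 1, 10))
for task in tasks:
    print(task["due"].isoformat(), task["task"])
```

Because the template holds only relative offsets, the same list can be reapplied to any patient and any procedure date.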

## What we learned

We learned how to deploy using DeFang, and also how to connect our frontend with DynamoDB. And we learned more about the domain of our project, which is patient safety.

## What's next for Med-O-Matic

More automation would be next. We already have some support for making sequences of tasks, but a feature like send-a-text, which would make following up easier, would come next. In other words, we'd add features that help do the tasks as well, instead of simply reminding providers of what they need to do.

We would also connect it to a medical scheduler such as Epic through its API. This would allow us to really get the task sequencing working seamlessly with a real workflow: a surgery could be scheduled in Epic, take place, and then trigger Med-O-Matic to create all the necessary follow-up tasks. | partial |

## Inspiration

MISSION: Our mission is to create an intuitive and precisely controlled arm for situations that are tough or dangerous for humans to be in.

VISION: This robotic arm application can be used in the medical industry, disaster relief, and toxic environments.

## What it does

The arm imitates the user in a remote destination. The 6DOF range of motion allows the hardware to behave close to a human arm. This would be ideal in environments where human life would be in danger if physically present.

The HelpingHand can be used in a variety of applications. With our simple design, the arm can be easily mounted on a wall or a rover. With the simple controls, any user will find the HelpingHand easy and intuitive to use. Our high-speed video camera lets the user see the arm and its environment so they can control the hand remotely.

## How I built it

The arm is controlled using a PWM servo Arduino library. The Arduino code receives control instructions over serial from a Python script, which uses OpenCV to track the user's actions. An additional feature uses Intel RealSense and TensorFlow to detect and track the user's hand: the depth camera locates the hand, and a CNN identifies which of the 10 trained gesture types it is making. This gave the robotic arm an additional dimension and made it feel more realistic.
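The Python-to-Arduino step could be sketched as follows. The `yaw,pitch,grip` line format is a hypothetical protocol invented for this example (the write-up does not describe the real one), and the code only builds the bytes; actually sending them would need a serial library and is left out.

```python
def to_servo_angle(value, lo=0.0, hi=1.0, angle_min=0, angle_max=180):
    """Map a normalized tracking value onto a servo angle, clamping out-of-range input."""
    clamped = max(lo, min(hi, value))
    span = (clamped - lo) / (hi - lo)
    return round(angle_min + span * (angle_max - angle_min))


def build_command(yaw, pitch, grip_closed):
    """Encode one control frame as an ASCII line: b'<yaw>,<pitch>,<grip>\\n'.

    yaw, pitch: normalized hand position in [0, 1] from the tracking step.
    grip_closed: True when the detected gesture means "close the gripper".
    """
    line = f"{to_servo_angle(yaw)},{to_servo_angle(pitch)},{1 if grip_closed else 0}\n"
    return line.encode("ascii")


# Hand at mid yaw, low pitch, gripper closed.
print(build_command(0.5, 0.25, True))
```

On the Arduino side, a matching parser would split the line on commas and write each angle to its servo.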

## Challenges I ran into

The main challenge was working with all 6 degrees of freedom on the arm without tangling it. Since this is a POC, we simplified the problem to 3DOF, allowing for yaw, pitch and gripper control only. Learning the RealSense SDK and processing depth images was also a unique experience, thanks to the hardware provided by Dr. Putz at nwHacks.

## Accomplishments that I'm proud of

This POC project has scope in a majority of applications. Finishing a working project within the given time frame, that involves software and hardware debugging is a major accomplishment.

## What I learned

We learned about doing hardware hacks at a hackathon. We learned how to control servo motors and serial communication. We learned how to use camera vision efficiently. We learned how to write modular functions for easy integration.

## What's next for The Helping Hand

Improve control of the arm to imitate smooth human arm movements, incorporate the remaining 3 DOF, and custom-build for specific applications; for example, high-torque motors would be necessary for heavy lifting. | ## Inspiration

We wanted to promote an easy learning system to introduce verbal individuals to the basics of American Sign Language. Often people in the non-verbal community are restricted by the lack of understanding outside of the community. Our team wants to break down these barriers and create a fun, interactive, and visual environment for users. In addition, our team wanted to replicate a 3D model of how to position the hand as videos often do not convey sufficient information.

## What it does

**Step 1** Create a Machine Learning Model To Interpret the Hand Gestures

This step provides the foundation for the project. Using OpenCV, our team was able to create datasets for each of the ASL alphabet hand positions. Based on the model trained using Tensorflow and Google Cloud Storage, a video datastream is started, interpreted and the letter is identified.

**Step 2** 3D Model of the Hand

The Arduino UNO drives a series of servo motors to actuate the 3D hand model. The user can input the desired letter, and the 3D-printed robotic hand then interprets it (using the model from step 1) to display the desired hand position. Data is transferred over the SPI bus, and the system is powered by a 9V battery for ease of transportation.

## How I built it

Languages: Python, C++

Platforms: TensorFlow, Fusion 360, OpenCV, UiPath

Hardware: 4 servo motors, Arduino UNO

Parts: 3D-printed

## Challenges I ran into

1. The Raspberry Pi Camera would overheat and fail to connect, leading us to remove the Telus IoT connectivity from our final project.

2. Incompatibilities between macOS, OpenCV and UiPath.

3. Issues with lighting and lack of variety in training data leading to less accurate results.

## Accomplishments that I'm proud of

* Able to design and integrate the hardware with software and apply it to a mechanical application.

* Create data, train and deploy a working machine learning model

## What I learned

How to integrate simple low resource hardware systems with complex Machine Learning Algorithms.

## What's next for ASL Hand Bot

* expand beyond letters into words

* create a more dynamic user interface

* expand the dataset and models to incorporate more | ## What is it?

Using the Intel RealSense, we first extracted a user's hand movements and mapped certain gestures to specific actions for the drone. Then we transmitted the data to an Arduino using low energy bluetooth. The Arduinos generated the signals readable by the transmitter chip using digital potentiometers. Having never used FPV before, we included a GoPro with a 5.8 GHz transmitter to stream live first person view to a wireless monitor.

## Inspiration

We have always been interested in drones and their increased prevalence in our culture. We love reverse engineering commercial products, and we wanted to experiment with the Intel RealSense.

## How We built it

To extract gestures, we used the RealSense SDK in C++ to obtain the coordinates of points on a user's hand. We then matched certain combinations of coordinates to different instructions for the drone. The camera first tracks where the user's hand is and moves the drone accordingly. More complex custom instructions, such as landing the drone and hovering, can be called by using two hands. To fly the drone, we completely disassembled its transmitter and used digital potentiometers to emulate the transmitter joysticks digitally and autonomously. Our homemade transmitter is controlled by four Arduino Unos, which was necessary because the digital potentiometers were controlled over SPI and we had to work with a limited number of SPI inputs on the Arduino Uno.
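The joystick emulation can be pictured as mapping a normalized hand offset to a potentiometer wiper setting. The following is a minimal sketch in Python rather than the project's C++ and Arduino code; the 8-bit wiper range (0 to 255), the dead zone, and the name `axis_to_wiper` are assumptions chosen for illustration.

```python
def axis_to_wiper(offset, lo=0, hi=255, deadzone=0.05):
    """Map a hand offset in [-1, 1] to a wiper value in [lo, hi].

    lo and hi stand in for the calibrated limits the transmitter accepts
    (assumed here to be the full 8-bit range). Offsets inside the dead zone
    snap to center so a resting hand does not drift the drone.
    """
    offset = max(-1.0, min(1.0, offset))  # clamp out-of-range tracking values
    if abs(offset) < deadzone:
        offset = 0.0
    mid = (lo + hi) / 2
    return int(round(mid + offset * (hi - lo) / 2))


# One wiper value per emulated joystick axis (axis names are illustrative)
hand = {"throttle": 0.0, "yaw": 1.0, "pitch": -1.0, "roll": 0.02}
wipers = {axis: axis_to_wiper(value) for axis, value in hand.items()}
```

Narrowing `lo` and `hi` is one way to keep every emitted value inside the range found during calibration.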

## Challenges I ran into

The most difficult part of this project was emulating the drone control system. Due to the tight specifications of the controller, it was very difficult to create signals that fit within its tolerance boundaries. It took many hours of calibration for us to figure out exactly what signals were allowed. After that, we had to map controls to these signals. An additional challenge was generating data using Intel RealSense that was then transmitted to the master Arduino device using a Sparkfun low energy Bluetooth board. | partial |

## 💡 Inspiration ✨

Embarking on our CalTrack adventure, we were fueled by a vision to revolutionize the way individuals approach their health. We aimed to dismantle the tedious barriers of traditional calorie counting, transforming it from a burdensome task into a delightful, almost effortless daily interaction. It’s where advanced tech seamlessly blends with the essential aspects of nutrition, condensed into the simple act of capturing a photo. We embraced the philosophy that pursuing a wholesome lifestyle should be convenient, straightforward, and completely devoid of stress.

CalTrack emerged as our innovative response to this vision—the ultimate tool to keep wellness not just within reach, but actively engaged in the foreground of our users' lives. We noticed that while other platforms provided pieces to the puzzle, they often fell short with clunky interfaces and tiring manual data entries. CalTrack is designed to bridge that gap, offering a solution that’s intuitive and as natural as taking a selfie.

## 🍎 What it does 🥗

Enter the world of CalTrack, where sophisticated computer vision meets artificial intelligence to create a seamless meal identification experience. Forget the cumbersome process of scouring through endless food lists to painstakingly log every bite. No more dealing with complex user interfaces, searching through databases, and manual logging. With CalTrack, you’re one photo away from a comprehensive nutritional breakdown in an instant. It’s like having a personal dietitian in your pocket, always ready to help you make smarter food choices with ease and precision.

Additionally, we used the Revised Harris-Benedict Equation to give users an estimate of their suggested daily calories, factoring in their sex, height, weight, and age. This makes the application more personalized and tailored to the user.
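For reference, the Revised Harris-Benedict Equation estimates basal metabolic rate (BMR) from sex, weight, height, and age. The sketch below uses the commonly published revised coefficients; the function name and the `activity_factor` parameter (a multiplier often applied to turn BMR into a daily estimate) are assumptions, and CalTrack's own implementation may differ.

```python
def daily_calories(sex, weight_kg, height_cm, age_years, activity_factor=1.0):
    """Estimate daily calories with the Revised Harris-Benedict Equation.

    activity_factor=1.0 returns the BMR itself; larger values (an assumption
    of this sketch) scale it up for more active users.
    """
    if sex == "male":
        bmr = 88.362 + 13.397 * weight_kg + 4.799 * height_cm - 5.677 * age_years
    elif sex == "female":
        bmr = 447.593 + 9.247 * weight_kg + 3.098 * height_cm - 4.330 * age_years
    else:
        raise ValueError("sex must be 'male' or 'female' for this equation")
    return bmr * activity_factor


# Example: BMR for a 30-year-old man, 70 kg and 175 cm
bmr_example = daily_calories("male", 70, 175, 30)
```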

## 🛠️ How we built it 💬

By integrating Google Cloud, we aimed to take the guesswork out of calorie counting by using easily available information and integrating the Vision API. Our app is designed to remove barriers, providing a seamless and engaging experience. Just snap a photo, and the rest is taken care of by our intelligent algorithms.

On the front end, we use Python with the CustomTkinter library to create a simple and intuitive user interface. Our features include allowing users to upload or capture photos to be processed, calculating the calories they need in a day, and tracking everything a user eats in a day with a clear bar to represent their calorie goal.

## 🚧 Challenges we ran into ⛔

One of our earliest and most significant hurdles was defining the scope of the AI to handle a diversity of meals. This was the reason we used the Vision API from Google Cloud to process our images. Given the timeframe of this project, we had to limit our scope to easily identifiable foods.

We also worked hard to optimize the app and API calls for speed, as we understand that every second counts when you're hungry. Balancing accuracy with speed was a delicate process that our team navigated with perseverance and creativity.

## 🎉 Accomplishments that we're proud of 🏆

Being a brand-new team to hackathons, we are incredibly proud of what we have achieved in 36 hours. Our journey led us from the intricacies of building a sleek, user-friendly front-end interface with Python's Tkinter to architecting a robust backend powered by the sophistication of the Google Cloud Platform. The culmination of this effort is CalTrack—an integration of technologies that not only embraces but excels at transforming images into actionable nutritional insights.

## 🧠 What we learned 🤓

Building CalTrack has been a rigorous educational experience, packed with valuable lessons at every stage. We ventured into the complex territories of machine learning and user interface design, greatly enhancing our technical skill set. Taking on the Google Cloud Platform, we went from novices to proficient users, tapping into the power of its Vision API, which now serves as the backbone of our app. The learning process was challenging, but we embraced the opportunity to grow and improve, much like adventurers enjoy the ascent and the perspective it brings.

## 🔮 What's next for CalTrack ⏭️

The road ahead for CalTrack includes expanding our food database to cover even more variety, implementing user-friendly features like meal suggestions, and enhancing our AI to provide even more precise nutritional information.

We're on a mission to make healthy eating hassle-free for everyone, and this is just the beginning. | ## Inspiration

Currently, there is an exponential growth of obesity in the world, leading to devastating consequences such as increased rates of diabetes and heart disease. All three of our team members are extremely passionate about nutrition issues and wish to educate others and promote healthy active living.

## What it does

This iOS app allows users to take pictures of the meals they eat and understand their daily nutrition intake. For each food that is imaged, the amounts of calories, carbohydrates, fats and proteins are shown, contributing to the daily percentage on the nutrition tab. In the exercise tab, users can see how much physical activity they need to do to burn off their calories, accounting for their age and weight. The collected data syncs easily with the iPhone's built-in Health app.
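One common way to estimate the exercise time needed to burn a given number of calories is the MET formula, where calories burned per minute are approximately MET x 3.5 x weight (kg) / 200. The sketch below is written in Python rather than the app's Swift and is only an illustration: the function name is hypothetical, the MET value must be supplied by the caller, and this formula accounts for weight but not age, so the app's own calculation likely differs.

```python
def minutes_to_burn(calories, weight_kg, met):
    """Minutes of an activity needed to burn `calories`.

    Uses the standard MET approximation:
        kcal per minute = MET * 3.5 * weight_kg / 200
    """
    if calories < 0 or weight_kg <= 0 or met <= 0:
        raise ValueError("calories must be >= 0; weight_kg and met must be > 0")
    kcal_per_minute = met * 3.5 * weight_kg / 200
    return calories / kcal_per_minute


# Example: a 500 kcal meal, a 70 kg user, and an activity assumed to be 7 METs
example_minutes = minutes_to_burn(500, 70, 7.0)
```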

## How we built it

We built the iOS app in the Swift programming language in Xcode. For the computer vision part of the machine learning component, we used CoreML, and more specifically its ResNet-50 model. We also implemented API calls to Edamam to receive nutrition details on each food item.

## Challenges we ran into

Two of our three team members had never used Swift before, and writing in an unfamiliar programming language was definitely a challenge. It was also challenging to call different APIs and integrate them back into Xcode, as the CoreML documentation is unclear.

## Accomplishments that we're proud of

We are proud of learning an entirely new programming language and building a substantial amount of a well-functioning app within 36 hours.

## What's next for NutriFitness

Building our own machine learning model and getting more accurate image descriptions. | # We'd love if you read through this in its entirety, but we suggest reading "What it does" if you're limited on time

## The Boring Stuff (Intro)

* Christina Zhao - 1st-time hacker - aka "Is cucumber a fruit"

* Peng Lu - 2nd-time hacker - aka "Why is this not working!!" x 30

* Matthew Yang - ML specialist - aka "What is an API"

## What it does

It's a cross-platform app that can promote mental health and healthier eating habits!

* Log when you eat healthy food.

* Feed your "munch buddies" and level them up!

* Learn about the different types of nutrients, what they do, and which foods contain them.

Since we are not very experienced at full-stack development, we just wanted to have fun and learn some new things. However, we feel that our project idea really ended up being a perfect fit for a few challenges, including the Otsuka Valuenex challenge!

Specifically,

>

> Many of us underestimate how important eating and mental health are to our overall wellness.

>

>

>

That's why we made this app! After doing some research on the compounding relationship between eating, mental health, and wellness, we were quite shocked by the overwhelming amount of evidence and studies detailing the negative consequences.

>

> We will be judging for the best **mental wellness solution** that incorporates **food in a digital manner.** Projects will be judged on their ability to make **proactive stress management solutions to users.**

>

>

>

Our app has a two-pronged approach: it addresses mental wellness through both healthy eating and through fun and stress relief! Additionally, not only is eating healthy a great method of proactive stress management, but another key aspect of being proactive is making your de-stressing activities part of your daily routine. I think this app would really do a great job of that!

Additionally, we also focused really hard on accessibility and ease of use. Whether you're on Android, iPhone, or a computer, it only takes a few seconds to track your healthy eating and play with some cute animals ;)

## How we built it

The front-end is React Native, and the back-end is FastAPI (Python). Aside from our individual talents, I think we did a really great job of working together. We employed pair-programming strategies to great success, since each of us has our own individual strengths and weaknesses.

## Challenges we ran into

Most of us have minimal experience with full-stack development. If you look at my LinkedIn (this is Matt), all of my CS knowledge is concentrated in machine learning!

There were so many random errors with just setting up the back-end server and learning how to make API endpoints, as well as writing boilerplate JS from scratch.