anchor

stringlengths 1

23.8k

| positive

stringlengths 1

23.8k

| negative

stringlengths 1

31k

| anchor_status

stringclasses 3

values |

|---|---|---|---|

## Inspiration

Inspired by Nicole's many trips to the ER.

## What it does

OctoBud is a chat bot for Amazon Alexa that helps decrease wait times in the ER by decreasing the amount of time that nurses have to spend with each patient and by giving alternative options to the current ER. OctoBud gets the information from the patient (currently just through the Alexa but eventually also through a computer to secure sensitive information like health card number) and runs analysis on it to determine the patient's priority and therefore their order in the queue. The patients data is then sent to the ER nurses as a card and they are given the opportunity to validate the decision made by the bot and update it if necessary or add comments. It doesn't eliminate the interaction between the patient and the ER nurses but instead decreases the time of these interactions by getting the patient's information prior to seeing the nurse and storing it in an organized fashion.

## How we built it

We utilized the StdLib api to build the Alexa skill as well as access MongoDB. The Alexa skill was written in node.js and the website for the nurse side was written in HTML(EJS)/CSS/JS.

## Challenges we ran into

StdLib did not have the functionality built in to do a session in the Alexa skill that is longer than one request and one response so we had to build that functionality ourselves and because we were using the StdLib api and not AWS lamda the solutions on the internet for this problem were not possible (at least not in the period of time that we have in the hackathon). Another problem is that amazon doesn't send the user's exact request (just the variables/slots) so we had no way of getting their answer for each reprompt without destroying the rest of the functionality or adding a trigger word/phrase. We solved this by using a trigger word/phrase that we believe still moves smoothly with the conversation with the bot and allows us to grab the values that we want.

## Accomplishments that we're proud of

No one on our team had ever built an Alexa app before last weekend (we were at another hackathon) and before this hackathon none of us had ever built a chat bot so we are proud that we were able to create a successful and useful chat bot despite the many challenges that we faced along the way.

## What we learned

We learned a lot about Alexa skills and more about persevering through challenges and building something that we can be proud of. In addition, we gained experience with database communication with MongoDB and between different files.

## What's next for OctoBud

We see a lot of potential for OctoBud. Wait times in ERs are a huge problem that we have all been affected by and is a solvable problem. The next steps that we see for OctoBud are to be able to submit information from a smart phone or smart home device at home and have your information either sent to your closest hospital or a different hospital depending on the wait times in near by hospitals and how good they are at treating your illness/injury. We also see this possibly integrating with other companies in this area such as house call doctors so that you can have a doctor come to your hospital instead of going to the hospital and spending hours waiting to see a doctor, or giving you the option and information about alternatives to ERs in hospitals such as urgent care centres. All of these things will help decrease the wait times in our emergency rooms and improve our health care system to make health care more accessible in a reasonable amount of time for everyone. | We were inspired by the daily struggle of social isolation.

Shows the emotion of a text message on Facebook

We built this using Javascript, IBM-Watson NLP API, Python https server, and jQuery.

Accessing the message string was a lot more challenging than initially anticipated.

Finding the correct API for our needs and updating in real time also posed challenges.

The fact that we have a fully working final product.

How to interface JavaScript with Python backend, and manually scrape a templated HTML doc for specific key words in specific locations

Incorporate the ability to display alternative messages after a user types their initial response. | ## Inspiration

In this era, with medicines being readily available for consumption, people take on pills without even consulting with a specialist to find out what diagnosis they have. We have created this project to find out what specific illnesses that a person can be diagnosed with, so that they can seek out the correct treatment, without self-treating themselves with pills which might in turn harm them in the long run.

## What it does

This is your personal medical assistant bot which takes in a set of symptoms you are experiencing and returns some illnesses that are most closely matched with that set of symptoms. It is powered by Machine learning which enables it to return more accurate data (tested and verified!) as to what issue the person might have.

## How we built it

We used React for building the front-end. We used Python and its vast array of libraries to design the ML model. For building the model, we used scikit-learn. We used pandas for the data processing. To connect the front end with the model, we used Fast API. We used a Random Forest multi-label classification model to give the diagnosis. Since the model takes in a string, we used the Bag-of-Words from Scikit-Learn to convert it to number-related values.

## Challenges we ran into

Since none of us had significant ML experience, we had to learn how to create an ML model specifically the multi-label classification model, train it and get it deployed on time. Furthermore, FAST API does not have good documentation, we ran into numerous errors while configuring and interfacing it between our front-end and back-end.

## Accomplishments that we're proud of

Creating a Full-Stack Application that would help the public to find a quick diagnosis for the symptoms they experience. Working on the Project as a team and brainstorming ideas for the proof of concept and how to get our app working.

We trained the model with use cases which evaluated to 97% accuracy

## What we learned

Working with Machine Learning and creating a full-stack App. We also learned how to coordinate with the team to work effectively. Reading documentation and tutorials to get an understanding of how the technologies we used work.

## What's next for Medical Chatbot

The first stage for the Medical Chatbot would be to run tests and validate that it works using different datasets. We also plan about adding more features in the front end such as authentication so that different users can register before using the feature. We can get inputs from professionals in healthcare to increase coverage and add more questions to give the correct prediction. | partial |

## Inspiration

40 million people in the world are blind, including 20% of all people aged 85 or older. Half a million people suffer paralyzing spinal cord injuries every year. 8.5 million people are affected by Parkinson’s disease, with the vast majority of these being senior citizens. The pervasive difficulty for these individuals to interact with objects in their environment, including identifying or physically taking the medications vital to their health, is unacceptable given the capabilities of today’s technology.

First, we asked ourselves the question, what if there was a vision-powered robotic appliance that could serve as a helping hand to the physically impaired? Then we began brainstorming: Could a language AI model make the interface between these individual’s desired actions and their robot helper’s operations even more seamless? We ended up creating Baymax—a robot arm that understands everyday speech to generate its own instructions for meeting exactly what its loved one wants. Much more than its brilliant design, Baymax is intelligent, accurate, and eternally diligent.

We know that if Baymax was implemented first in high-priority nursing homes, then later in household bedsides and on wheelchairs, it would create a lasting improvement in the quality of life for millions. Baymax currently helps its patients take their medicine, but it is easily extensible to do much more—assisting these same groups of people with tasks like eating, dressing, or doing their household chores.

## What it does

Baymax listens to a user’s requests on which medicine to pick up, then picks up the appropriate pill and feeds it to the user. Note that this could be generalized to any object, ranging from food, to clothes, to common household trinkets, to more. Baymax responds accurately to conversational, even meandering, natural language requests for which medicine to take—making it perfect for older members of society who may not want to memorize specific commands. It interprets these requests to generate its own pseudocode, later translated to robot arm instructions, for following the tasks outlined by its loved one. Subsequently, Baymax delivers the medicine to the user by employing a powerful computer vision model to identify and locate a user’s mouth and make real-time adjustments.

## How we built it

The robot arm by Reazon Labs, a 3D-printed arm with 8 servos as pivot points, is the heart of our project. We wrote custom inverse kinematics software from scratch to control these 8 degrees of freedom and navigate the end-effector to a point in three dimensional space, along with building our own animation methods for the arm to follow a given path. Our animation methods interpolate the arm’s movements through keyframes, or defined positions, similar to how film editors dictate animations. This allowed us to facilitate smooth, yet precise, motion which is safe for the end user.

We built a pipeline to take in speech input from the user and process their request. We wanted users to speak with the robot in natural language, so we used OpenAI’s Whisper system to convert the user commands to text, then used OpenAI’s GPT-4 API to figure out which medicine(s) they were requesting assistance with.

We focused on computer vision to recognize the user’s face and mouth. We used OpenCV to get the webcam live stream and used 3 different Convolutional Neural Networks for facial detection, masking, and feature recognition. We extracted coordinates from the model output to extrapolate facial landmarks and identify the location of the center of the mouth, simultaneously detecting if the user’s mouth is open or closed.

When we put everything together, our result was a functional system where a user can request medicines or pills, and the arm will pick up the appropriate medicines one by one, feeding them to the user while making real time adjustments as it approaches the user’s mouth.

## Challenges we ran into

We quickly learned that working with hardware introduced a lot of room for complications. The robot arm we used was a prototype, entirely 3D-printed yet equipped with high-torque motors, and parts were subject to wear and tear very quickly, which sacrificed the accuracy of its movements. To solve this, we implemented torque and current limiting software and wrote Python code to smoothen movements and preserve the integrity of instruction.

Controlling the arm was another challenge because it has 8 motors that need to be manipulated finely enough in tandem to reach a specific point in 3D space. We had to not only learn how to work with the robot arm SDK and libraries but also comprehend the math and intuition behind its movement. We did this by utilizing forward kinematics and restricted the servo motors’ degrees of freedom to simplify the math. Realizing it would be tricky to write all the movement code from scratch, we created an animation library for the arm in which we captured certain arm positions as keyframes and then interpolated between them to create fluid motion.

Another critical issue was the high latency between the video stream and robot arm’s movement, and we spent much time optimizing our computer vision pipeline to create a near instantaneous experience for our users.

## Accomplishments that we're proud of

As first-time Hackathon participants, we are incredibly proud of the incredible progress we were able to make in a very short amount of time, proving to ourselves that with hard work, passion, and a clear vision, anything is possible. Our team did a fantastic job embracing the challenge of using technology unfamiliar to us, and stepped out of our comfort zones to bring our idea to life. Whether it was building the computer vision model, or learning how to interface the robot arm’s movements with voice controls, we ended up building a robust prototype which far surpassed our initial expectations. One of our greatest successes was coordinating our work so that each function could be pieced together and emerge as a functional robot. Let’s not overlook the success of not eating our hi-chews we were using for testing!

## What we learned

We developed our skills in frameworks we were initially unfamiliar with such as how to apply Machine Learning algorithms in a real-time context. We also learned how to successfully interface software with hardware - crafting complex functions which we could see work in 3-dimensional space. Through developing this project, we also realized just how much social impact a robot arm can have for disabled or elderly populations.

## What's next for Baymax

Envision a world where Baymax, a vigilant companion, eases medication management for those with mobility challenges. First, Baymax can be implemented in nursing homes, then can become a part of households and mobility aids. Baymax is a helping hand, restoring independence to a large disadvantaged group.

This innovation marks an improvement in increasing quality of life for millions of older people, and is truly a human-centric solution in robotic form. | ## Inspiration

We are currently living through one of the largest housing crises in human history. As a result, more Canadians than ever before are seeking emergency shelter to stay off the streets and find a safe place to recuperate. However, finding a shelter is still a challenging, manual process, where no digital service exists that lets individuals compare shelters by eligibility criteria, find the nearest one they are eligible for, and verify that the shelter has room in real-time. Calling shelters in a city with hundreds of different programs and places to go is a frustrating burden to place on someone who is in need of safety and healing. Further, we want to raise the bar: people shouldn't be placed in just any shelter, they should go to the shelter best for them based on their identity and lifestyle preferences.

70% of homeless individuals have cellphones, compared to 85% of the rest of the population; homeless individuals are digitally connected more than ever before, especially through low-bandwidth mediums like voice and SMS. We recognized an opportunity to innovate for homeless individuals and make the process for finding a shelter simpler; as a result, we could improve public health, social sustainability, and safety for the thousands of Canadians in need of emergency housing.

## What it does

Users connect with the ShelterFirst service via SMS to enter a matching system that 1) identifies the shelters they are eligible for, 2) prioritizes shelters based on the user's unique preferences, 3) matches individuals to a shelter based on realtime availability (which was never available before) and the calculated priority and 4) provides step-by-step navigation to get to the shelter safely.

Shelter managers can add their shelter and update the current availability of their shelter on a quick, easy to use front-end. Many shelter managers are collecting this information using a simple counter app due to COVID-19 regulations. Our counter serves the same purpose, but also updates our database to provide timely information to those who need it. As a result, fewer individuals will be turned away from shelters that didn't have room to take them to begin with.

## How we built it

We used the Twilio SMS API and webhooks written in express and Node.js to facilitate communication with our users via SMS. These webhooks also connected with other server endpoints that contain our decisioning logic, which are also written in express and Node.js.

We used Firebase to store our data in real time.

We used Google Cloud Platform's Directions API to calculate which shelters were the closest and prioritize those for matching and provide users step by step directions to the nearest shelter. We were able to capture users' locations through natural language, so it's simple to communicate where you currently are despite not having access to location services

Lastly, we built a simple web system for shelter managers using HTML, SASS, JavaScript, and Node.js that updated our data in real time and allowed for new shelters to be entered into the system.

## Challenges we ran into

One major challenge was with the logic of the SMS communication. We had four different outgoing message categories (statements, prompting questions, demographic questions, and preference questions), and shifting between these depending on user input was initially difficult to conceptualize and implement. Another challenge was collecting the distance information for each of the shelters and sorting between the distances, since the response from the Directions API was initially confusing. Lastly, building the custom decisioning logic that matched users to the best shelter for them was an interesting challenge.

## Accomplishments that we're proud of

We were able to build a database of potential shelters in one consolidated place, which is something the city of London doesn't even have readily available. That itself would be a win, but we were able to build on this dataset by allowing shelter administrators to update their availability with just a few clicks of a button. This information saves lives, as it prevents homeless individuals from wasting their time going to a shelter that was never going to let them in due to capacity constraints, which often forced homeless individuals to miss the cutoff for other shelters and sleep on the streets. Being able to use this information in a custom matching system via SMS was a really cool thing for our team to see - we immediately realized its potential impact and how it could save lives, which is something we're proud of.

## What we learned

We learned how to use Twilio SMS APIs and webhooks to facilitate communications and connect to our business logic, sending out different messages depending on the user's responses. In addition, we taught ourselves how to integrate the webhooks to our Firebase database to communicate valuable information to the users.

This experience taught us how to use multiple Google Maps APIs to get directions and distance data for the shelters in our application. We also learned how to handle several interesting edge cases with our database since this system uses data that is modified and used by many different systems at the same time.

## What's next for ShelterFirst

One addition to make could be to integrate locations for other basic services like public washrooms, showers, and food banks to connect users to human rights resources. Another feature that we would like to add is a social aspect with tags and user ratings for each shelter to give users a sense of what their experience may be like at a shelter based on the first-hand experiences of others. We would also like to leverage the Twilio Voice API to make this system accessible via a toll free number, which can be called for free at any payphone, reaching the entire homeless demographic.

We would also like to use Raspberry Pis and/or Arduinos with turnstiles to create a cheap system for shelter managers to automatically collect live availability data. This would ensure the occupancy data in our database is up to date and seamless to collect from otherwise busy shelter managers. Lastly, we would like to integrate into municipalities "smart cities" initiatives to gather more robust data and make this system more accessible and well known. | ## Inspiration

One of our team members' grandfathers went blind after slipping and hitting his spinal cord, going from a completely independent individual to reliant on others for everything. The lack of options was upsetting, how could a man who was so independent be so severely limited by a small accident. There is current technology out there for blind individuals to navigate their home, however, there is no such technology that allows blind AND frail individuals to do so. With an increasing aging population, Elderlyf is here to be that technology. We hope to help our team member's grandfather and others like him regain his independence by making a tool that is affordable, efficient, and liberating.

## What it does

Ask your Alexa to take you to a room in the house, and Elderlyf will automatically detect which room you're currently in, mapping out a path from your current room to your target room. With vibration disks strategically located underneath the hand rests, Elderlyf gives you haptic feedback to let you know when objects are in your way and in which direction you should turn. With an intelligent turning system, Elderlyf gently helps with turning corners and avoiding obstacles.

## How I built it

With a Jetson Nano and RealSense Cameras, front view obstacles are detected and a map of the possible routes are generated. SLAM localization was also achieved using those technologies. An Alexa and AWS Speech to Text API was used to activate the mapping and navigation algorithms. By using two servo motors that could independently apply a gentle brake to the wheels to aid users when turning and avoiding obstacles. Piezoelectric vibrating disks were also used to provide haptic feedback in which direction to turn and when obstacles are close.

## Challenges I ran into

Mounting the turning assistance system was a HUGE challenge as the setup needed to be extremely stable. We ended up laser-cutting mounting pieces to fix this problem.

## Accomplishments that we're proud of

We're proud of creating a project that is both software and hardware intensive and yet somehow managing to get it finished up and working.

## What I learned

Learned that the RealSense camera really doesn't like working on the Jetson Nano.

## What's next for Elderlyf

Hoping to incorporate a microphone to the walker so that you can ask Alexa to take you to various rooms even though the Alexa may be out of range. | winning |

## Inspiration

We spend a lot of our time sitting in front of the computer. The idea is to use the video feed from webcam to determine the emotional state of the user, analyze and provide a feedback accordingly in the form of music, pictures and videos.

## How I built it

Using Microsoft Cognitive Services (Video + Emotion API) we get the emotional state of the user through the webcam feed. We parse that to the bot framework which in turn sends responses based upon the change in the values of emotional state.

## Challenges I ran into

Passing data between the bot framework and the desktop application which captured the webcam feed.

## Accomplishments that I'm proud of

A fully functional bot which provides feedback to the user based upon the changes in the emotion.

## What I learned

Visual studio is a pain to work with.

## What's next for ICare

Use Recurrent Neural Network to keep track of the emotional state of the user before and after and improve the content provided to the user over the period of time. | ## Inspiration

After years of teaching methods remaining constant, technology has not yet infiltrated the classroom to its full potential. One day in class, it occurred to us that there must be a correlation between students behaviour in classrooms and their level of comprehension.

## What it does

We leveraged Apple's existing API's around facial detection and combined it with the newly added Core ML features to track students emotions based on their facial queues. The app can follow and analyze up to ~ ten students and provide information in real time using our dashboard.

## How we built it

The iOS app integrated Apple's Core ML framework to run a [CNN](https://www.openu.ac.il/home/hassner/projects/cnn_emotions/) to detect people's emotions from facial queues. The model was then used in combination with Apple's Vision API to identify and extract student's face's. This data was then propagated to Firebase for it to be analyzed and displayed on a dashboard in real time.

## Challenges we ran into

Throughout this project, there were several issues regarding how to improve the accuracy of the facial results. Furthermore, there were issues regarding how to properly extract and track users throughout the length of the session. As for the dashboard, we ran into problems around how to display data in real time.

## Accomplishments that we're proud of

We are proud of the fact that we were able to build such a real-time solution. However, we are happy to have met such a great group of people to have worked with.

## What we learned

Ozzie learnt more regarding CoreML and Vision frameworks.

Haider gained more experience with front-end development as well as working on a team.

Nakul gained experience with real-time graphing as well as helped developed the dashboard.

## What's next for Flatline

In the future, Flatline could grow it's dashboard features to provide more insight for the teachers. Also, the accuracy of the results could be improved by training a model to detect emotions that are more closely related to learning and student's behaviours. | ## Inspiration

In a world where education has become increasingly remote and reliant on online platforms, we need human connection **more than ever**. Many students often find it difficult to express their feelings without unmuting themselves and drawing unwanted attention. As a result, teachers are unaware of how their students are feeling and if the material is engaging. This situation is especially challenging for students who struggle with communicating their feelings–such as individuals with autism, selective mutism, social anxiety, and more.

We want to help **bridge this gap** by creating a tool that will both enable students to express themselves with less effort and enable teachers to understand and respond to their overall needs.

We strongly believe in the importance of **accessibility in education** and supplementing human connection, because at the end of the day, humans are all social beings.

## What it does

Our application helps measure the general emotions of participants in a video meeting, displaying a stream of emojis representing up to **80 different emotions**. We periodically sample video frames from all participants with their cameras on at 10-second intervals, feeding this data into **Hume’s Expression Management API** to identify the most prominent expressions. From this, we generate a composite view of the general sentiment using a custom weighted algorithm.

Using this aggregated sentiment data, our frontend displays the most frequent emotions with their corresponding emojis on the screen. This way, hosts can adapt their teaching to the general sentiment of the classroom, while students can share how they’re feeling without having to experience the social anxiety that comes with typing a message in the chat or sharing a thought out loud.

## How we built it

We leveraged **LiveKit** to create our video conference infrastructure and **Vercel** to deploy our application. We also utilized **Supabase Realtime** as our communication protocol, forwarding livestream data from clients per room and saving that data to Supabase Storage.

Our backend, implemented with **FastAPI**, interfaces with the frontend to pull this data from Supabase and feed the captured facial data into Hume AI to detect human emotions.

The results are then aggregated and stored back into our Supabase table. Our frontend, built with **Next.js** and styled with **Tailwind CSS**, listens to real-time event triggers from Supabase to detect changes in the table.

From this, we’re able to display the stream of emotions in **near real-time**, finally delivering aggregated emotion data as a light-hearted fun animation to keep everyone engaged!

## Challenges we ran into

* Livekit Egress has limited documentation

* Coordination of different parts using Supabase Realtime

* Hume AI API

* First-time Frontenders

* Hosting our backend thru Vercel (lots of config)

## Accomplishments that we're proud of

* Livekit real time streaming video conference

* Streaming video data to Hume Supabase Realtime

* Emoji animation using Framer Motion

* Efficient scoring algorithm using heaps

## What we learned

We learned how to use a lot of new tools and frameworks such as Next.js and Supabase as it was some of our members' first time doing full-stack software engineering. From our members all the way from SoCal and the East Coast, we learned how to ride the BART, and we all learned LiveKit for live streaming and video conferencing.

## What's next for Moji

We see the potential of this tool in a **wide variety of industries** and have other features in mind that we want to implement. For example, we can focus on enhancing this tool to help streamers with any kind of virtual audience by:

* Implementing a dynamic **checklist** that generates to-dos based on questions or requests from viewers.

This can benefit teachers in providing efficient learning to their studies or large entertainment streamers in managing a fast-moving chat. This can also be extended to eCommerce, as livestream shopping requires sellers to efficiently navigate their chat interactions.

* Using Whisper for **real-time audio speech recognition** to automatically check off answered questions.

This provides a hands-free way for streamers to meet their viewers’ requests without having to look extensively through chat. This is especially beneficial for the livestream shopping industry as sellers are typically displaying items while reading messages

* Using **RAG** to store answers to previously asked questions and using this data to answer any future questions.

This can be a great way to save time for streamers from answering repeated questions.

Enhancing video recognition capabilities to identify more complex interactions and objects in real-time.

With video recognition, we can lean even heavier into the eCommerce industry, identifying what type of products sellers are displaying and providing a hands-free and AI enhanced way of managing their checklist of requests.

* Adding **integrations** with other streaming platforms to broaden its applicability and improve the user experience.

The possibilities are endless and we’re excited to see where Moji can go! We hope that Moji can bring a touch of humanity and help us all stay connected and engaged in the digital world. | partial |

## Inspiration

When you are prescribed medication by a Doctor, it is crucial that you complete the dosage cycle in order to ensure that you recover fully and quickly. Unfortunately forgetting to take your medication is something that we have all done. Failing to run the full course of medicine often results in a delayed recovery and leads to more suffering through the painful and annoying symptoms of illness. This has inspired us to create Re-Pill. With Re-Pill, you can automatically generate scheduling and reminders to take you medicine by simply uploading a photo of your prescription.

## What it does

A user uploads an image of their prescription which is then processed by image to text algorithms that extract the details of the medication. Data such as the name of the medication, its dosage, and total tablets is stored and presented to the user. The application synchronizes with google calendar and automatically sets reminders for taking pills into the user's schedule based on the dosage instructions on the prescription. The user can view their medication details at any time by logging into Re-Pill.

## How we built it

We built the application using the Python web framework Flask. Simple endpoints were created for login, registration, and viewing of the user's medication. User data is stored in Google Cloud's Firestore. Images are uploaded and sent to a processing endpoint through a HTTP Request which delivers the medication information. Reminders are set using the Google Calendar API.

## Challenges we ran into

We initially struggled to figure out the right tech-stack to use for building the app. We struggled with Android development before settling for a web-app. One big challenge we faced was to merge all the different part of our application into one smoothly running product. Another challenge was finding a method to inform/notify the user of his/her medication time through a web-based application.

## Accomplishments that we're proud of

There are a couple of things that we are proud of. One of them is how well our team was able to communicate with one another. All team members knew what the other members were working on and the work was divided in such a manner that all teammates worked on the projects using his/her strengths. One important accomplishment is that we were able to overcome a huge time constraint and come up with a prototype of an idea that has potential to change people's lives.

## What we learned

We learned how to set up and leverage Google API's, manage non-relational databases and process image to text using various python libraries.

## What's next for Re-Pill

The next steps for Re-Pill would be to move to a mobile environment and explore useful features that we can implement. Building a mobile application would make it easier for the user to stay connected with the schedules and upload prescription images at a click of a button using the built in camera. Some features we hope to explore are creating automated activities, such as routine appointment bookings with the family doctor and monitoring dietary considerations with regards to stronger medications that might conflict with a patients diet. | ## Inspiration

Forgetting to take a medication is a common problem in older people and is especially likely when an older patient takes several drugs simultaneously. Around 40% of Patients forget to take medicines and most of them have difficulty to differentiate between multiple medications.

1. Patients forgets to take medication

2. Patients get confused in taking multiple medications as they look similar.

3. Patients don't take medications at correct time

Such thing leads to bad health and now-a-days these cases are increasing day by day and most people think that skipping medications is normal thing, which is bad for their health.

To avoid this I wanted to make a device which automatically gives medicines to the patients.

## What it does

It is a 3D printed Vending Box actuated by using servo motor and controlled using Raspberry Pi 4 and Mobile App. The Box will automatically sort out medicine according to Time and Schedule and Vend it using Servo Motor from the Box, the App will be connected via Firebase to Store, Add and Modify the Medicine Schedule and Raspberry Pi will collect that data from Firebase and Actuate the Servos Accordingly.

1. Doctors/Care Takers Can Add Medicine Schedule via the Mobile App.

2. The Patient will hear a Buzzer and Sound Details about Medicine and how much Doses he must take of that medicine.

3. The Box will automatically rotate via servo and Drop Off the Scheduled Medicine off the Box .

## How we built it

I bought Raspberry Pi and Servos at start of Hackathon, and Started Development of App until the Hardware got Shipped.

Timeline

* Day 1-2 : Planning of Idea and Hardware Ordering

* Day 3-4 : Research Work and Planning

* Day4 -6: App Development from Flutter + Firebase

* Day 7-9: CAD Design and started 3D Printing(took 13 Hours to print)

* Day 10: Hardware Arrived and Tested

* Day 10 -Day 12 Integrated 3D Printed Parts and Motors and Done with Project

* Day 13: Video Editing, Devpost Documentation , Code Uploading

* Day 14: Minor Video Edits and Documentation

#### Initial Research and CAD Drawings:

[](https://ibb.co/KWGMGhk)

[](https://ibb.co/cJQD8ss)

[](https://ibb.co/0ZrjBjp)

## Challenges we ran into

* 3D Printing Failed and took lot of time

* Late Shipment of Hardware

* Servo Motor Gear Issues

## Accomplishments that we're proud of

1. New to 3d Printing and Printed such Big Project for First Time

2. Used Raspberry Pi for First Time

## What we learned

* CAD Design

* Flutter

* Raspberry Pi

## What's next for MediBox

1. Creating 2 Level Box, for more medicine capacity using same amount of motors. [image]

2. Add good UI to App

3. Adding Medicine Recognition and automatically ordering . | Introducing Melo-N – where your favorite tunes get a whole new vibe! Melo-N combines "melody" and "Novate" to bring you a fun way to switch up your music.

Here's the deal: You pick a song and a genre, and we do the rest. We keep the lyrics and melody intact while changing up the music style. It's like listening to your favourite songs in a whole new light!

How do we do it? We use cool tech tools like Spleeter to separate vocals from instruments, so we can tweak things just right. Then, with the help of the MusicGen API, we switch up the genre to give your song a fresh spin. Once everything's mixed up, we deliver your custom version – ready for you to enjoy.

Melo-N is all about exploring new sounds and having fun with your music. Whether you want to rock out to a country beat or chill with a pop vibe, Melo-N lets you mix it up however you like.

So, get ready to rediscover your favourite tunes with Melo-N – where music meets innovation, and every listen is an adventure! | losing |

## Inspiration

One of our teammate’s grandfathers suffers from diabetic retinopathy, which causes severe vision loss.

Looking on a broader scale, over 2.2 billion people suffer from near or distant vision impairment worldwide. After examining the issue closer, it can be confirmed that the issue disproportionately affects people over the age of 50 years old. We wanted to create a solution that would help them navigate the complex world independently.

## What it does

### Object Identification:

Utilizes advanced computer vision to identify and describe objects in the user's surroundings, providing real-time audio feedback.

### Facial Recognition:

It employs machine learning for facial recognition, enabling users to recognize and remember familiar faces, and fostering a deeper connection with their environment.

### Interactive Question Answering:

Acts as an on-demand information resource, allowing users to ask questions and receive accurate answers, covering a wide range of topics.

### Voice Commands:

Features a user-friendly voice command system accessible to all, facilitating seamless interaction with the AI assistant: Sierra.

## How we built it

* Python

* OpenCV

* GCP & Firebase

* Google Maps API, Google Pyttsx3, Google’s VERTEX AI Toolkit (removed later due to inefficiency)

## Challenges we ran into

* Slow response times with Google Products, resulting in some replacements of services (e.g. Pyttsx3 was replaced by a faster, offline nlp model from Vosk)

* Due to the hardware capabilities of our low-end laptops, there is some amount of lag and slowness in the software with average response times of 7-8 seconds.

* Due to strict security measures and product design, we faced a lack of flexibility in working with the Maps API. After working together with each other and viewing some tutorials, we learned how to integrate Google Maps into the dashboard

## Accomplishments that we're proud of

We are proud that by the end of the hacking period, we had a working prototype and software. Both of these factors were able to integrate properly. The AI assistant, Sierra, can accurately recognize faces as well as detect settings in the real world. Although there were challenges along the way, the immense effort we put in paid off.

## What we learned

* How to work with a variety of Google Cloud-based tools and how to overcome potential challenges they pose to beginner users.

* How to connect a smartphone to a laptop with a remote connection to create more opportunities for practical designs and demonstrations.

How to connect a smartphone to a laptop with a remote connection to create more opportunities for practical designs and demonstrations.

How to create docker containers to deploy google cloud-based flask applications to host our dashboard.

How to develop Firebase Cloud Functions to implement cron jobs. We tried to developed a cron job that would send alerts to the user.

## What's next for Saight

### Optimizing the Response Time

Currently, the hardware limitations of our computers create a large delay in the assistant's response times. By improving the efficiency of the models used, we can improve the user experience in fast-paced environments.

### Testing Various Materials for the Mount

The physical prototype of the mount was mainly a proof-of-concept for the idea. In the future, we can conduct research and testing on various materials to find out which ones are most preferred by users. Factors such as density, cost and durability will all play a role in this decision. | ## Inspiration

Fresh fruit, vegetables, nuts, and legumes don’t play the **central role** they need to in our food system. The result is a **chronic health epidemic**, disastrous impacts on the **climate**, and **unequal access** to food. We wanted to find a tangible solution to this very real and consequential problem.

## What it does

Cropscape analyzes location-specific **soil**, **climate**, and **hardiness** data to provide personalized crop recommendations for a thriving backyard garden. The user inputs a

## How we built it

We built our project using React, Express, Node.js, and MySQL.

## Challenges we ran into

ChatGPT required tokens that cost money. We had difficulties implementing React on the frontend, so we had to opt for vanilla HTML/CSS.

## Accomplishments that we're proud of

We are proud of our ability to leverage several APIs to create a centralized source of gardening-relevant information.

## What's next for Cropscape

Gaining access to more robust databases of soil information, implementing a better soil analysis system. | ## Inspiration

As we sat down to brainstorm ideas for our next project, we were struck by a common thread that connected all of us. Each one of us had a family member who suffered from some form of visual impairment. It was a heart-wrenching reminder of the challenges that these individuals face on a daily basis. We shared stories of our loved ones struggling to read books, watch movies, or even navigate through everyday tasks. It was a deeply emotional conversation that left us feeling both empathetic and determined to make a difference. According to the World Health Organization, approximately 2.2 billion people worldwide have a vision impairment or blindness, with the majority of cases occurring in low and middle-income countries. The impact of visual impairment is far-reaching and significantly affects various daily activities such as reading, recognizing faces, navigating unfamiliar environments, and accessing information on digital platforms. This problem is valid, and it needs to be addressed to enhance the quality of life of those affected. We are passionate about developing a solution that will make a meaningful difference in the lives of those affected by visual impairment. Our project is inspired by personal experiences and fueled by a desire to make a real-world impact. We believe that everyone deserves equal access to information and the ability to participate fully in daily life. By addressing the challenges of visual impairment, we hope to create a more inclusive world for all.

## What it does

The product aims to bridge the gap for individuals with limited vision to experience the world around them. It helps individuals with visual impairments to perform various daily activities that are otherwise challenging, such as reading, recognizing faces, and navigating unfamiliar environments. It also assists in accessing information on digital platforms. The product can be particularly helpful for those who face barriers in accessing healthcare services due to their visual impairments. It can aid in reading prescription labels, understanding medical instructions, and navigating healthcare facilities, especially for older individuals who are aging.

## How we built it

In our project, we leverage cutting-edge computer vision techniques to interpret the surrounding environment of individuals with visual impairments. By utilizing advanced algorithms and neural networks, we process real-time visual data captured by a camera, enabling us to identify and analyze objects, obstacles, and spatial cues in the user's surroundings. We integrate state-of-the-art language models and natural language generation powered by Wisp AI software to bridge the gap between the interpreted world and the user. This allows us to generate detailed and contextually relevant descriptions of the environment in real time, providing visually impaired individuals with comprehensive auditory feedback about their surroundings. Additionally, our solution extends beyond descriptive capabilities to enhance accessibility in public transportation. By leveraging the interpreted environmental data, we develop guidance systems that assist users in navigating through streets and accessing transportation hubs safely and independently. For efficient and scalable deployment of our model, we utilize Intel's AI environment, leveraging its robust infrastructure and resources to host and optimize our machine learning algorithms. Our system architecture is implemented on a Raspberry Pi embedded platform, equipped with a high-resolution camera for real-time visualization and data capture. This combination of hardware and software components enables seamless integration and efficient visual information processing, empowering visually impaired individuals with enhanced mobility and independence in their daily lives.

## Challenges we ran into

As beginners in machine learning, we faced the tough challenge of setting up a machine learning model on a Raspberry Pi and connecting it to a camera, which was quite difficult to learn. Moreover, we had to figure out a way to train our model not only to understand text but also to recognize public transportation and calculate the distance to a bus entrance, which was quite a task. Adding our Intel-AI environment to the project made things even more complicated. Additionally, finding an affordable solution that could be easily accessible to people all around the world was a significant obstacle that we had to overcome.

## Accomplishments that we're proud of

Through this process of building a hardware product from scratch and learning how to use raspberry pi with computer vision, we not only gained technical knowledge but also learned how to work as a team. There were challenges and obstacles along the way, but we figured it out by collaborating, communicating, and leveraging each other's strengths. It was a great learning experience, and we are proud of what we have achieved together.

## What we learned

We learned about LLM, real-time text analysis, real-time text comprehension, and implementation of text-to-speech.

## What's next for True-Sight

With the growing potential of Artificial Intelligence, our idea of True-Sight is expanding to include not only text recognition but also the ability to detect surroundings, which could greatly benefit public transportation users who rely on finding stops and navigating their way onto the correct buses/trains. After further development, True-Sight could potentially allow users to locate their desired stop and use environment detection to guide them towards the door with specific step-by-step instructions. In addition, we aim to make True-Sight accessible to children who are visually impaired, so they can have an immersive learning experience. Adding sensors and custom software will also allow for a more personalized and relatable experience with the AI assistant. | winning |

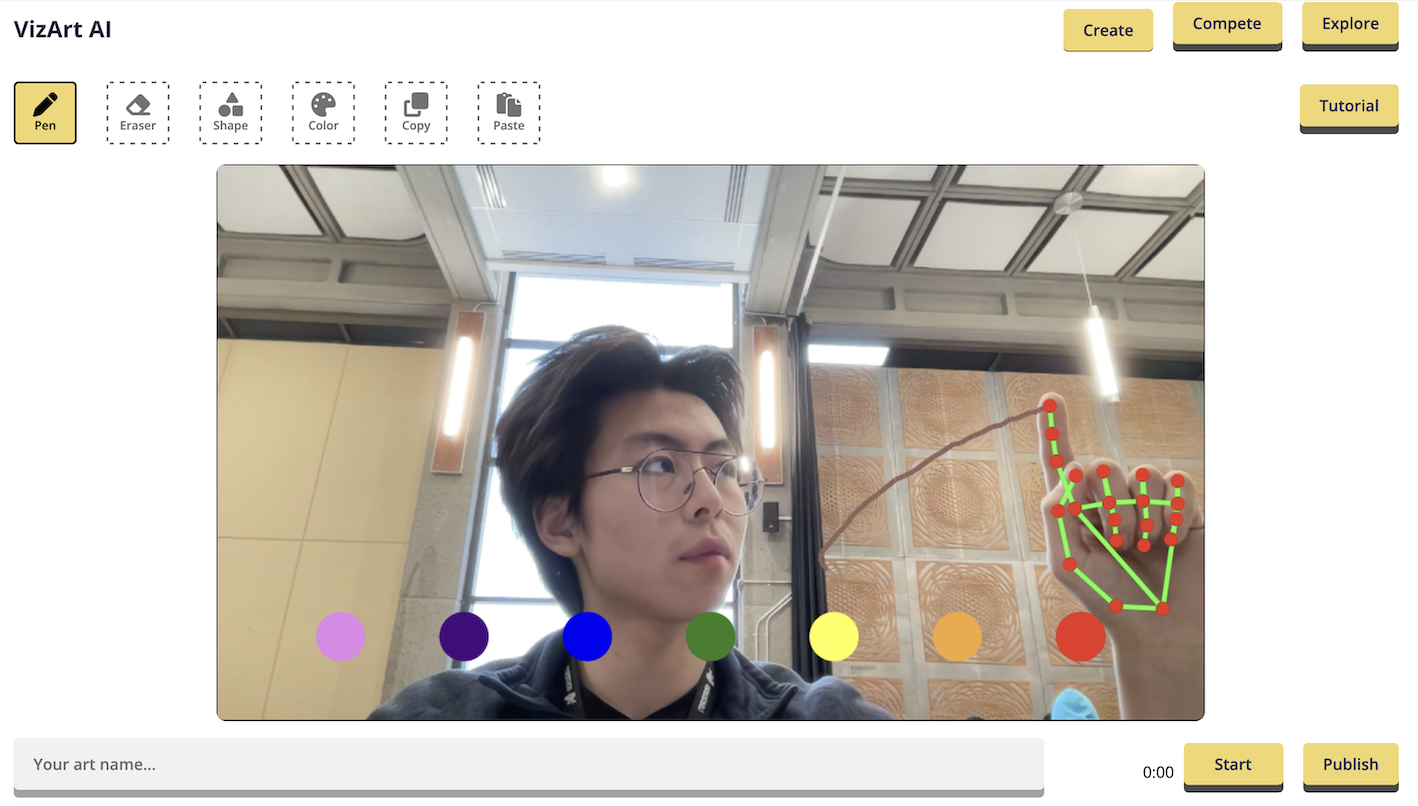

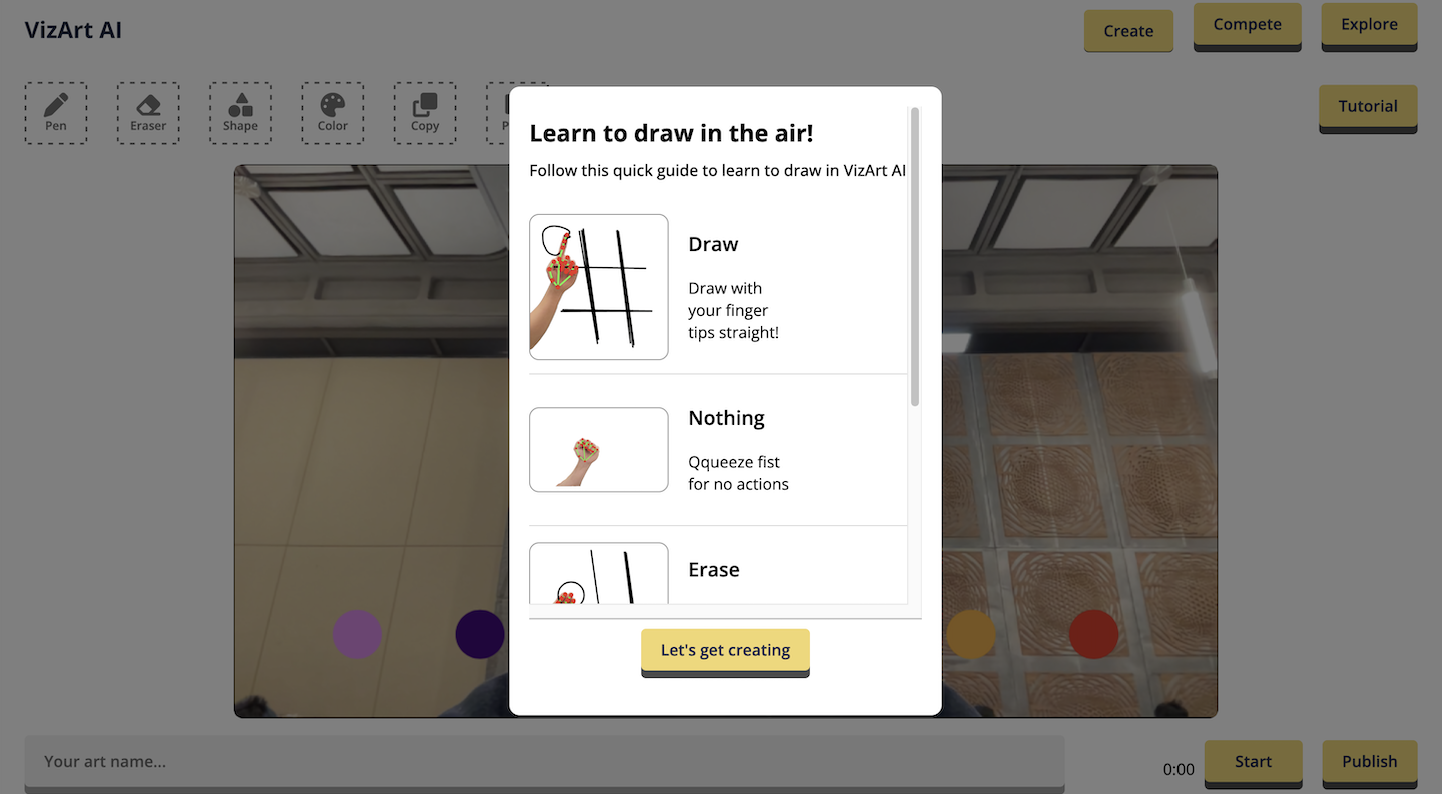

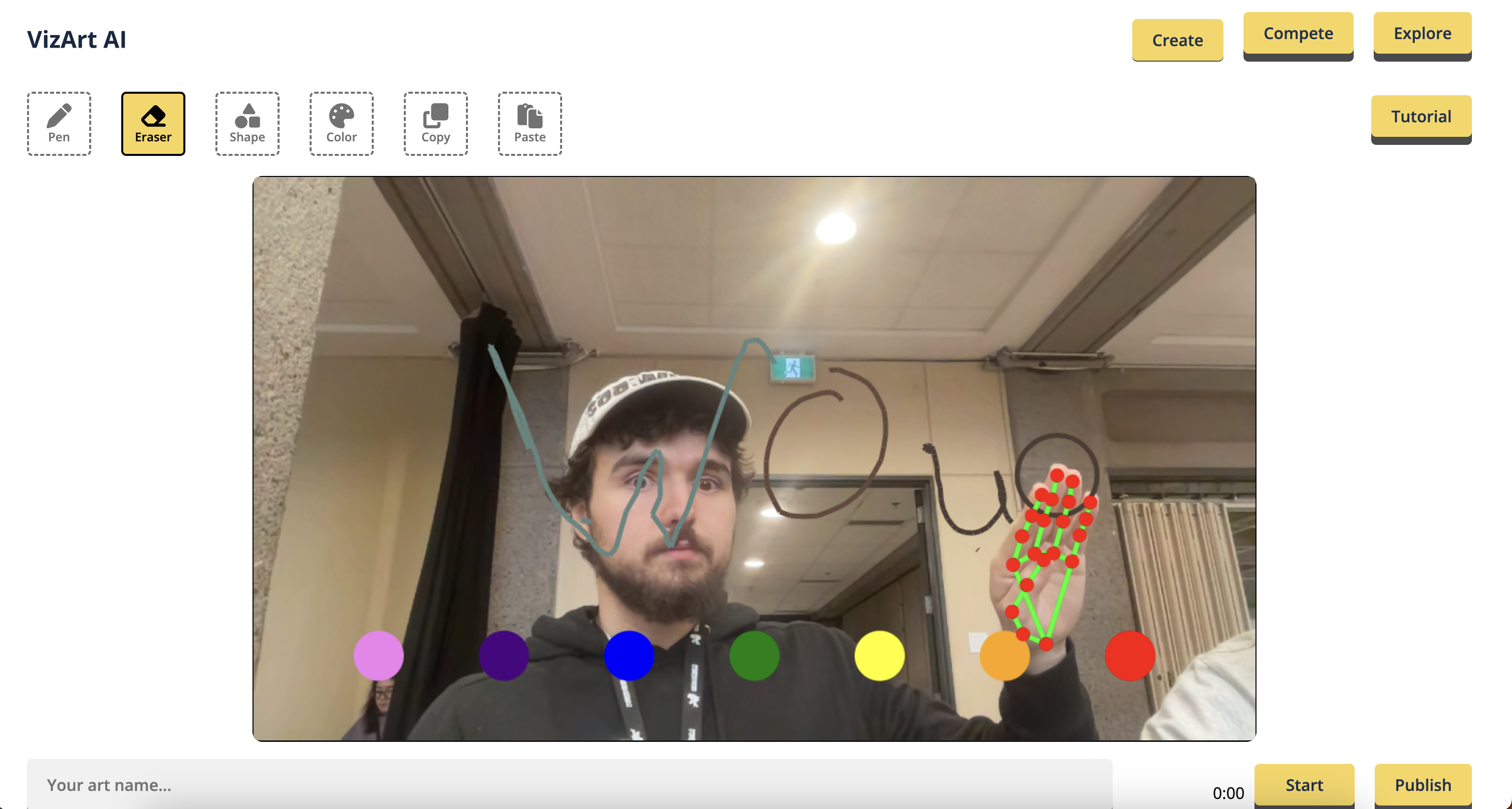

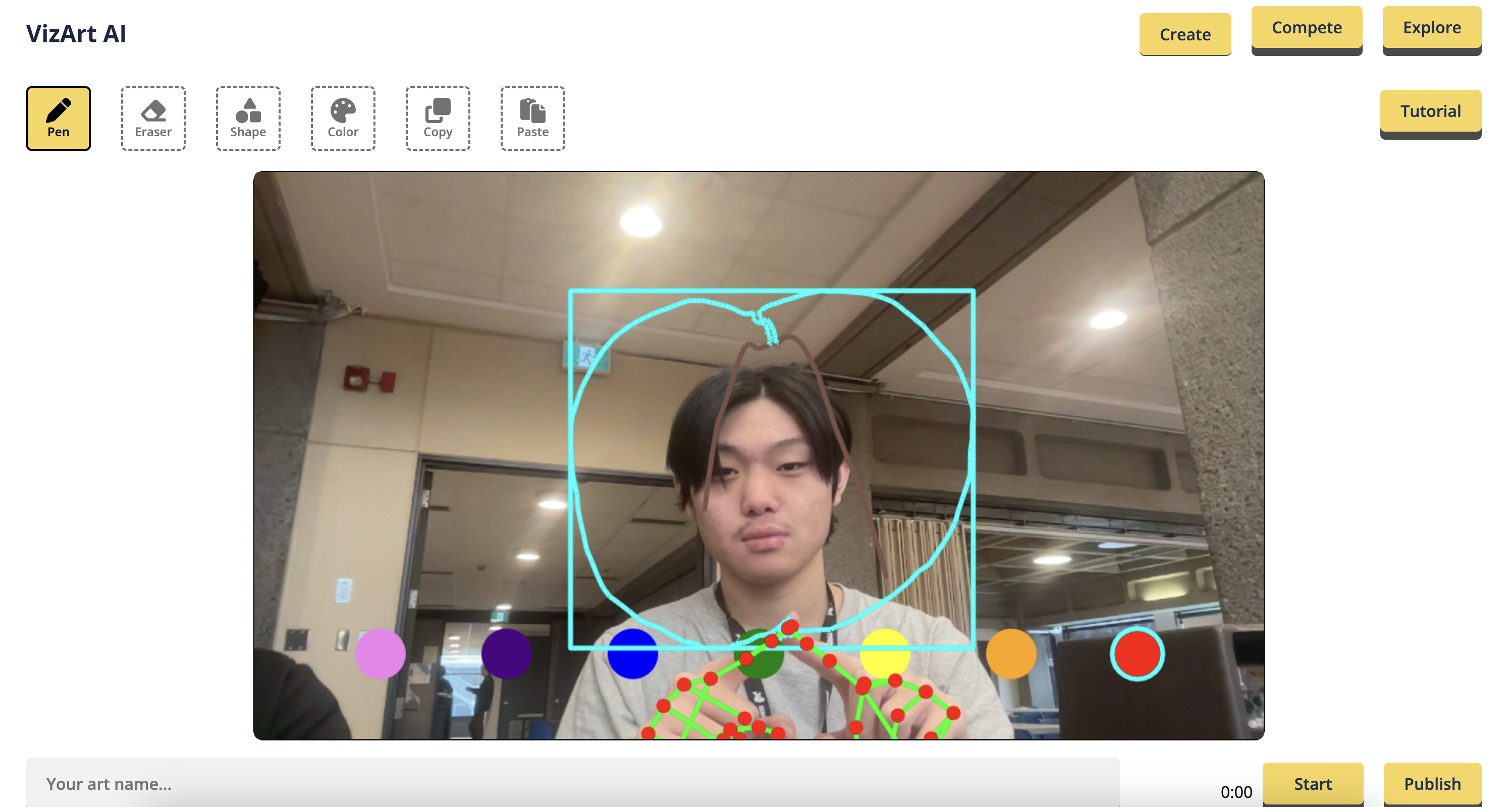

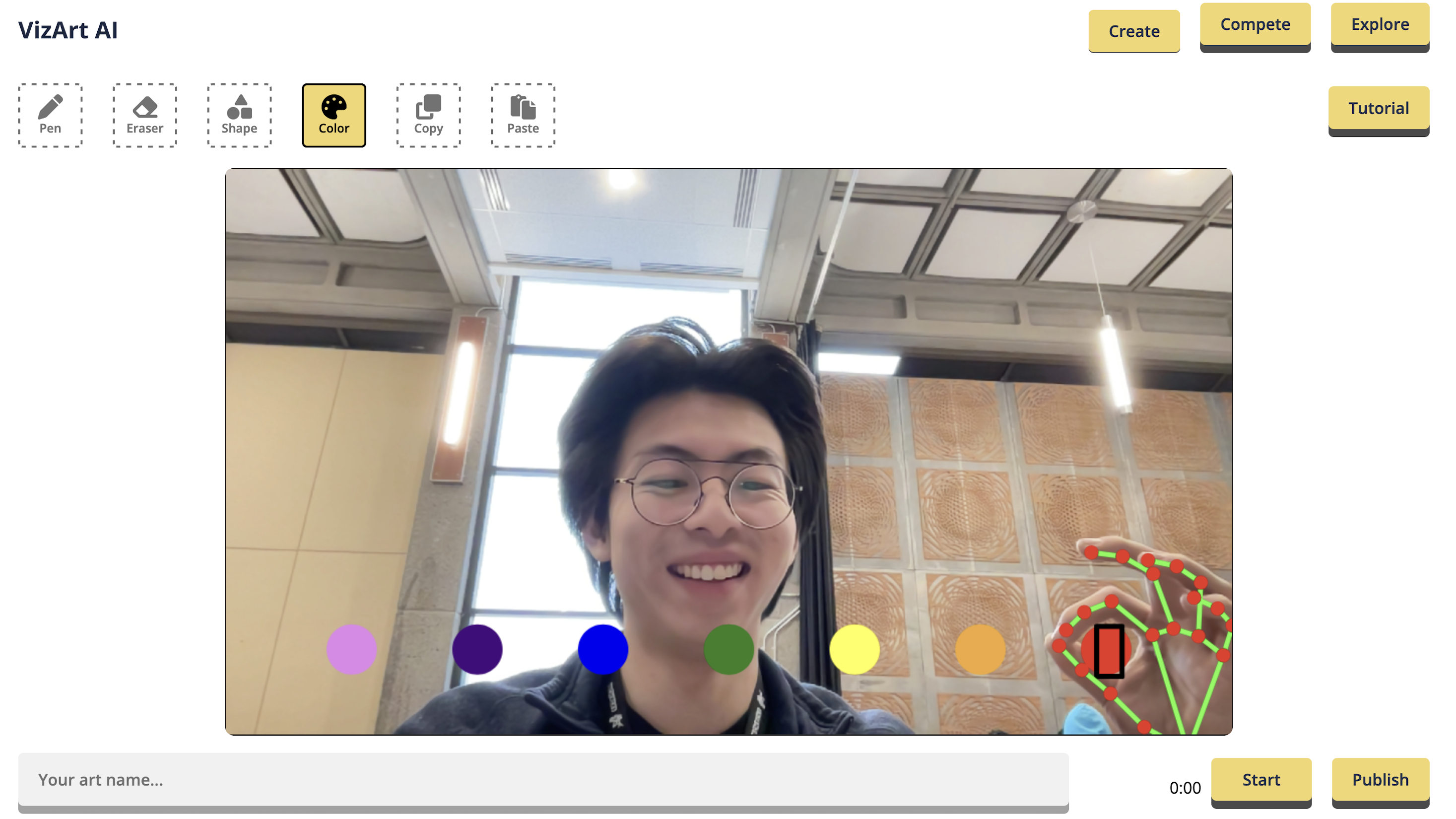

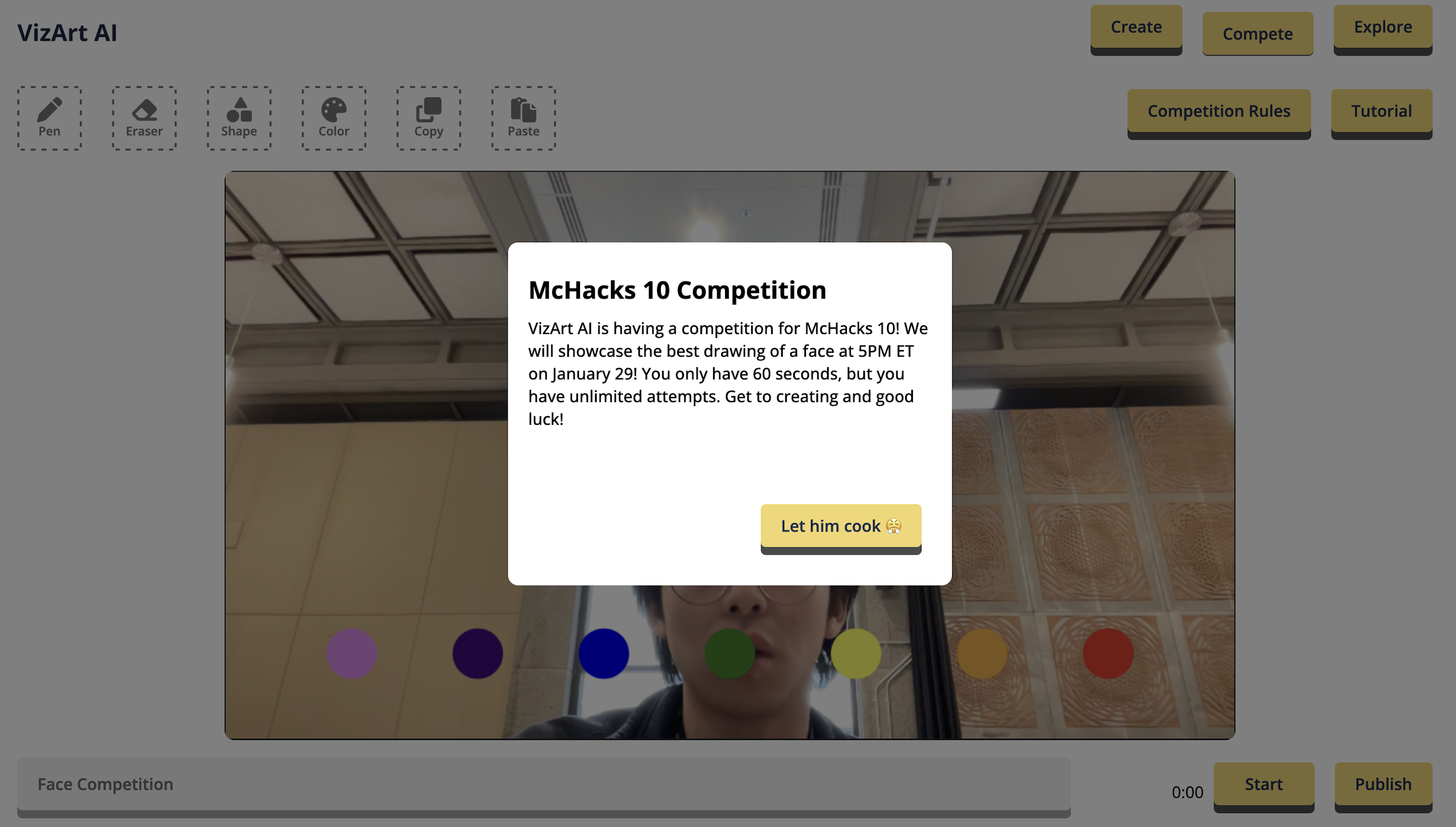

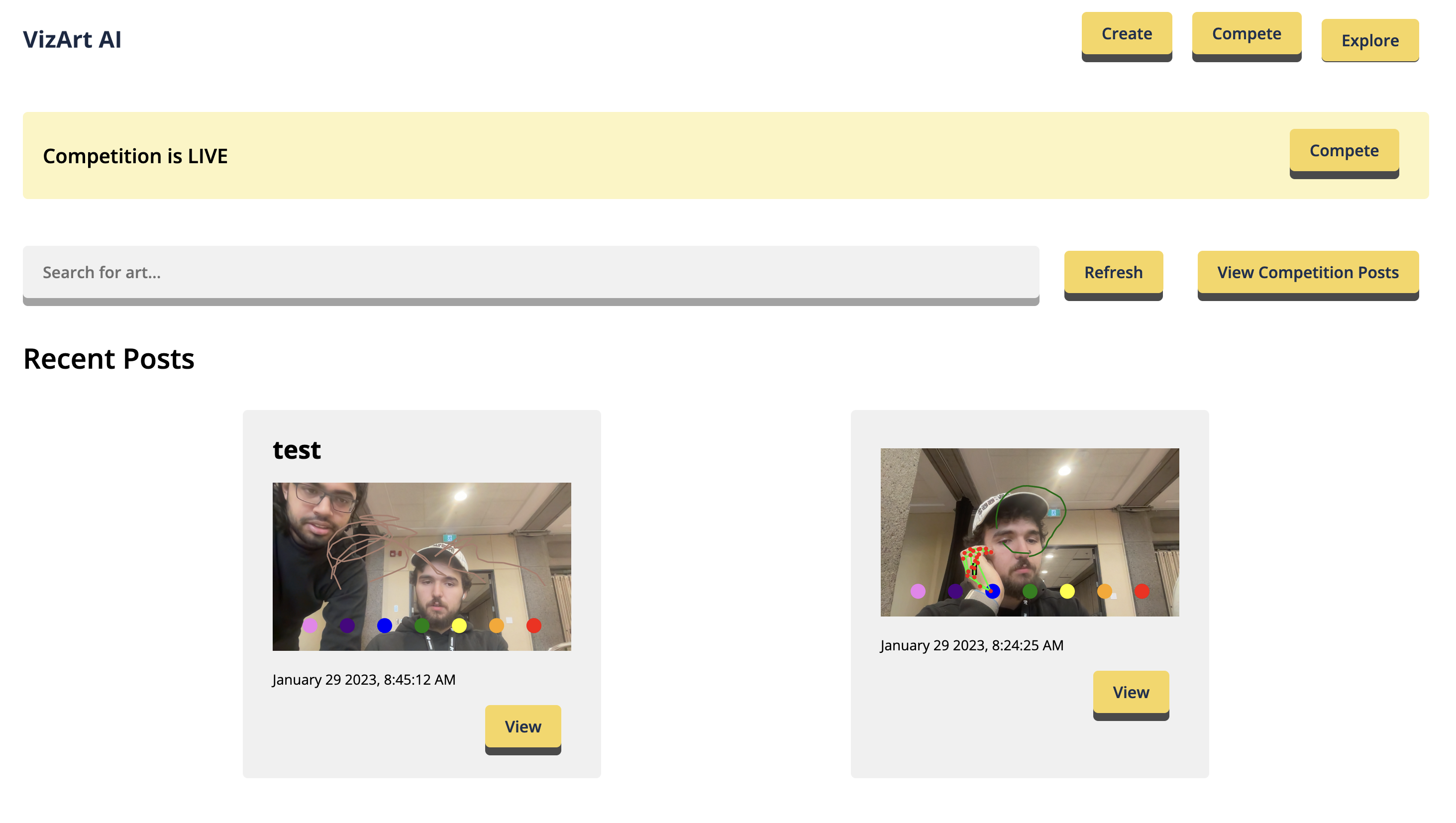

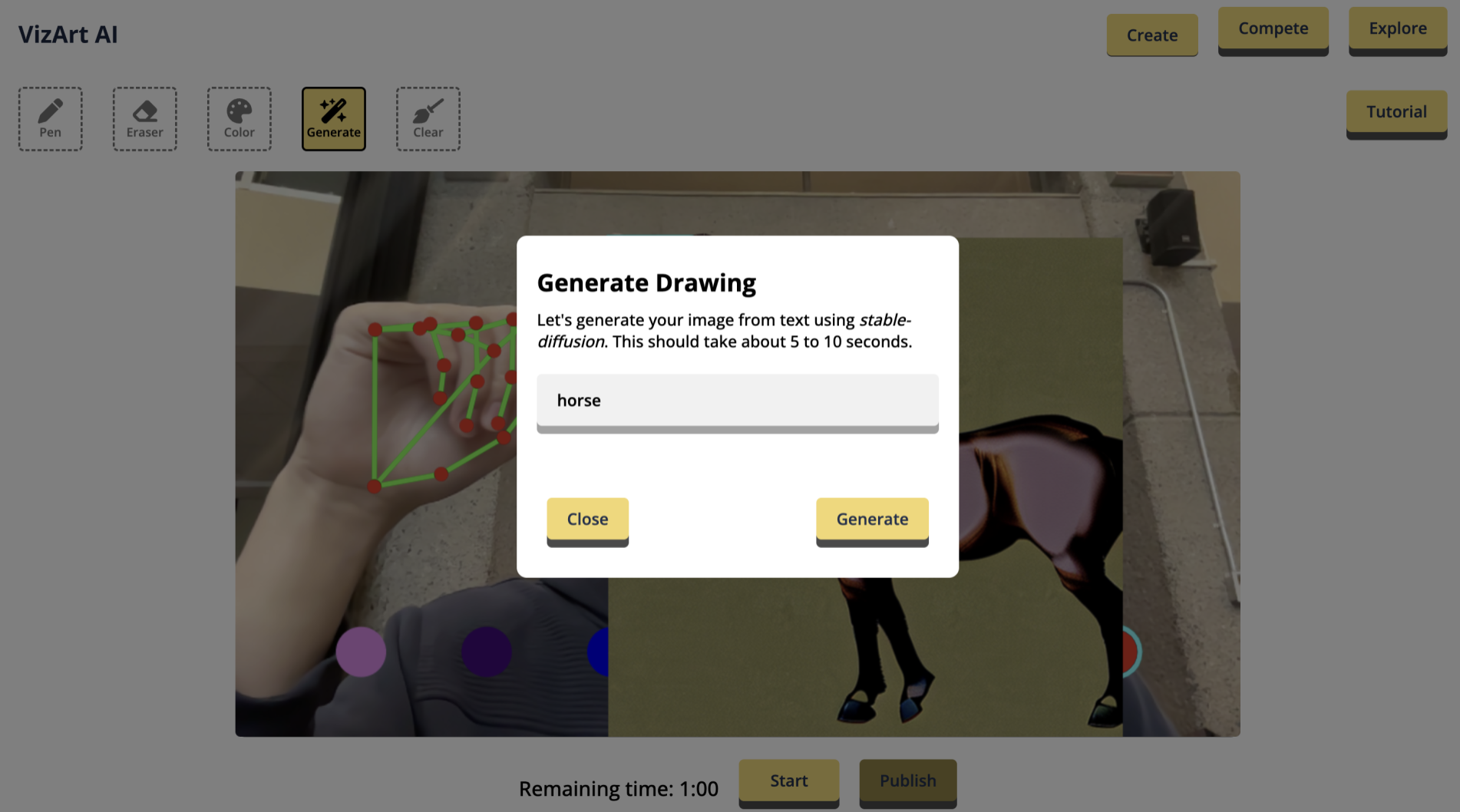

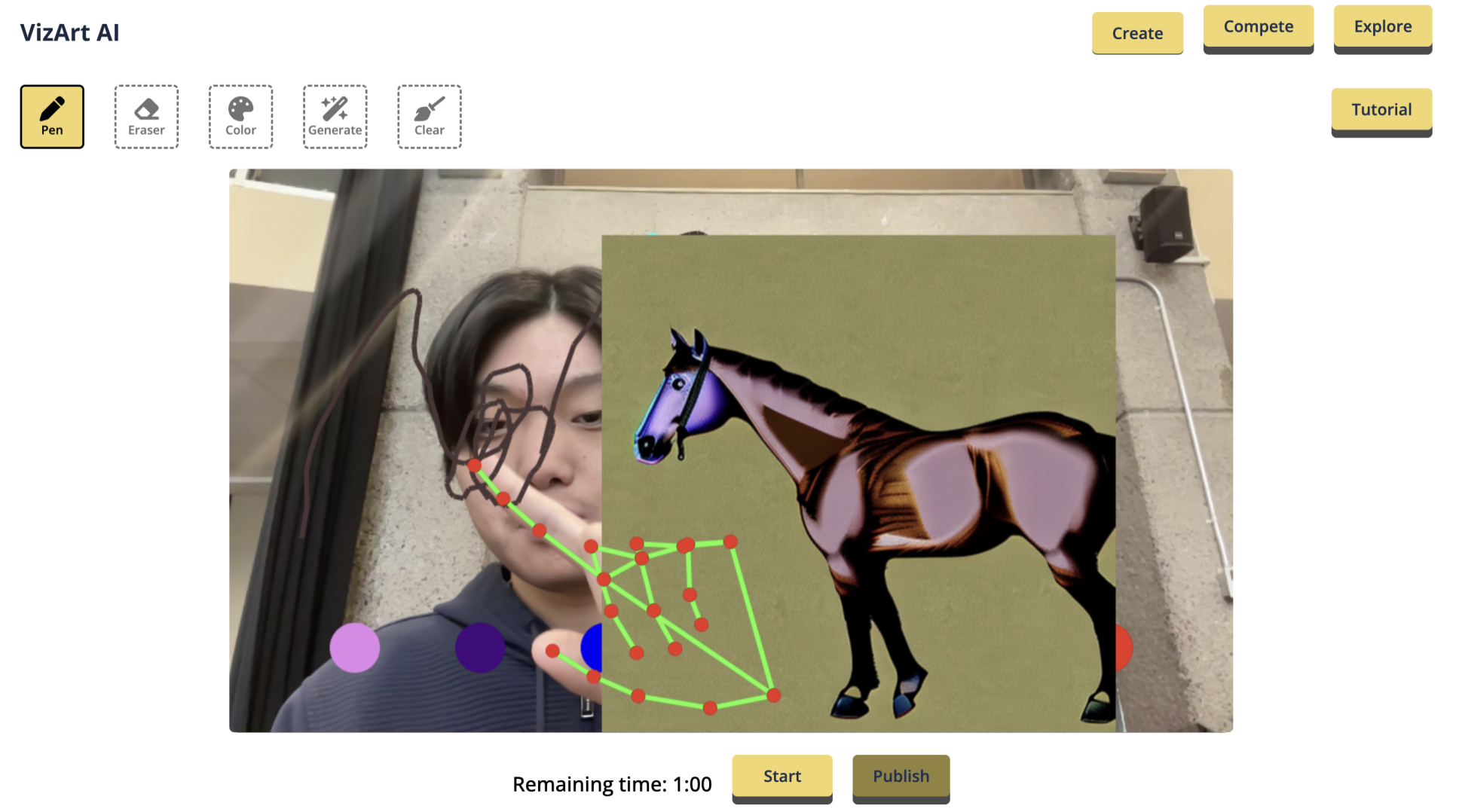

# 🤖🖌️ [VizArt Computer Vision Drawing Platform](https://vizart.tech)

Create and share your artwork with the world using VizArt - a simple yet powerful air drawing platform.

## 💫 Inspiration

>

> "Art is the signature of civilizations." - Beverly Sills

>

>

>

Art is a gateway to creative expression. With [VizArt](https://vizart.tech/create), we are pushing the boundaries of what's possible with computer vision and enabling a new level of artistic expression. ***We envision a world where people can interact with both the physical and digital realms in creative ways.***

We started by pushing the limits of what's possible with customizable deep learning, streaming media, and AR technologies. With VizArt, you can draw in art, interact with the real world digitally, and share your creations with your friends!

>

> "Art is the reflection of life, and life is the reflection of art." - Unknow

>

>

>

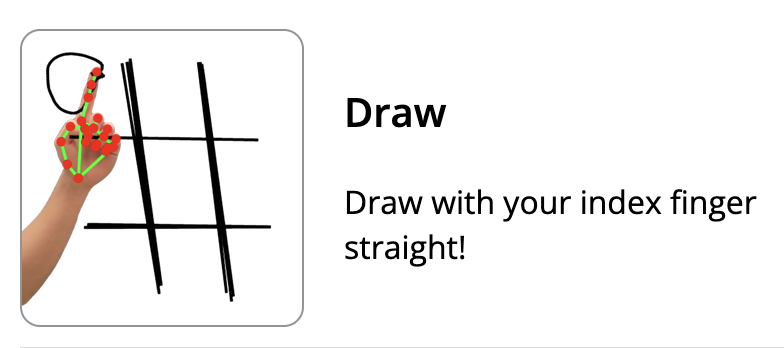

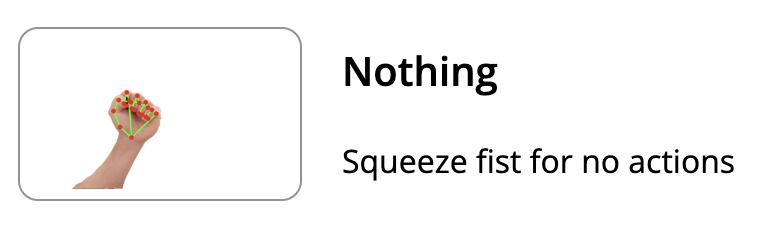

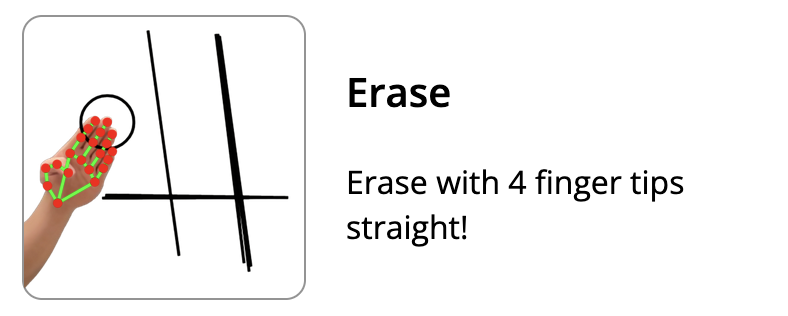

Air writing is made possible with hand gestures, such as a pen gesture to draw and an eraser gesture to erase lines. With VizArt, you can turn your ideas into reality by sketching in the air.

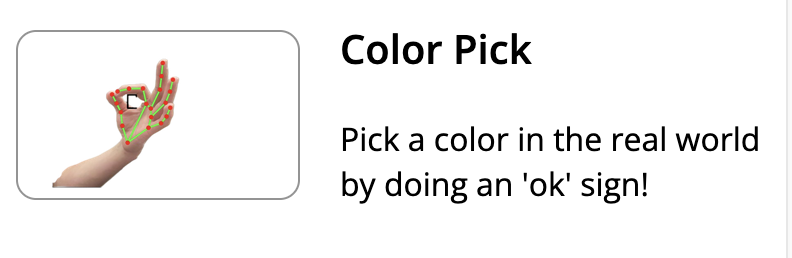

Our computer vision algorithm enables you to interact with the world using a color picker gesture and a snipping tool to manipulate real-world objects.

>

> "Art is not what you see, but what you make others see." - Claude Monet

>

>

>

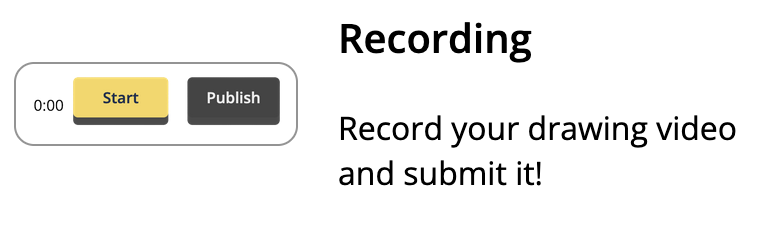

The features I listed above are great! But what's the point of creating something if you can't share it with the world? That's why we've built a platform for you to showcase your art. You'll be able to record and share your drawings with friends.

I hope you will enjoy using VizArt and share it with your friends. Remember: Make good gifts, Make good art.

# ❤️ Use Cases

### Drawing Competition/Game

VizArt can be used to host a fun and interactive drawing competition or game. Players can challenge each other to create the best masterpiece, using the computer vision features such as the color picker and eraser.

### Whiteboard Replacement

VizArt is a great alternative to traditional whiteboards. It can be used in classrooms and offices to present ideas, collaborate with others, and make annotations. Its computer vision features make drawing and erasing easier.

### People with Disabilities

VizArt enables people with disabilities to express their creativity. Its computer vision capabilities facilitate drawing, erasing, and annotating without the need for physical tools or contact.

### Strategy Games

VizArt can be used to create and play strategy games with friends. Players can draw their own boards and pieces, and then use the computer vision features to move them around the board. This allows for a more interactive and engaging experience than traditional board games.

### Remote Collaboration

With VizArt, teams can collaborate remotely and in real-time. The platform is equipped with features such as the color picker, eraser, and snipping tool, making it easy to interact with the environment. It also has a sharing platform where users can record and share their drawings with anyone. This makes VizArt a great tool for remote collaboration and creativity.

# 👋 Gestures Tutorial

# ⚒️ Engineering

Ah, this is where even more fun begins!

## Stack

### Frontend

We designed the frontend with Figma and after a few iterations, we had an initial design to begin working with. The frontend was made with React and Typescript and styled with Sass.

### Backend

We wrote the backend in Flask. To implement uploading videos along with their thumbnails we simply use a filesystem database.

## Computer Vision AI

We use MediaPipe to grab the coordinates of the joints and upload images. WIth the coordinates, we plot with CanvasRenderingContext2D on the canvas, where we use algorithms and vector calculations to determinate the gesture. Then, for image generation, we use the DeepAI open source library.

# Experimentation

We were using generative AI to generate images, however we ran out of time.

# 👨💻 Team (”The Sprint Team”)

@Sheheryar Pavaz

@Anton Otaner

@Jingxiang Mo

@Tommy He | ## Inspiration

With more people working at home due to the pandemic, we felt empowered to improve healthcare at an individual level. Existing solutions for posture detection are expensive, lack cross-platform support, and often require additional device purchases. We sought to remedy these issues by creating Upright.

## What it does

Upright uses your laptop's camera to analyze and help you improve your posture. Register and calibrate the system in less than two minutes, and simply keep Upright open in the background and continue working. Upright will notify you if you begin to slouch so you can correct it. Upright also has the Upright companion iOS app to view your daily metrics.

Some notable features include:

* Smart slouch detection with ML

* Little overhead - get started in < 2 min

* Native notifications on any platform

* Progress tracking with an iOS companion app

## How we built it

We created Upright’s desktop app using Electron.js, an npm package used to develop cross-platform apps. We created the individual pages for the app using HTML, CSS, and client-side JavaScript. For the onboarding screens, users fill out an HTML form which signs them in using Firebase Authentication and uploads information such as their name and preferences to Firestore. This data is also persisted locally using NeDB, a local JavaScript database. The menu bar addition incorporates a camera through a MediaDevices web API, which gives us frames of the user’s posture. Using Tensorflow’s PoseNet model, we analyzed these frames to determine if the user is slouching and if so, by how much. The app sends a desktop notification to alert the user about their posture and also uploads this data to Firestore. Lastly, our SwiftUI-based iOS app pulls this data to display metrics and graphs for the user about their posture over time.

## Challenges we ran into

We faced difficulties when managing data throughout the platform, from the desktop app backend to the frontend pages to the iOS app. As this was our first time using Electron, our team spent a lot of time discovering ways to pass data safely and efficiently, discussing the pros and cons of different solutions. Another significant challenge was performing the machine learning on the video frames. The task of taking in a stream of camera frames and outputting them into slouching percentage values was quite demanding, but we were able to overcome several bugs and obstacles along the way to create the final product.

## Accomplishments that we're proud of

We’re proud that we’ve come up with a seamless and beautiful design that takes less than a minute to setup. The slouch detection model is also pretty accurate, something that we’re pretty proud of. Overall, we’ve built a robust system that we believe outperforms other solutions using just the webcamera of your computer, while also integrating features to track slouching data on your mobile device.

## What we learned

This project taught us how to combine multiple complicated moving pieces into one application. Specifically, we learned how to make a native desktop application with features like notifications built-in using Electron. We also learned how to connect our backend posture data with Firestore to relay information from our Electron application to our OS app. Lastly, we learned how to integrate a machine learning model in Tensorflow within our Electron application.

## What's next for Upright

The next step is improving the posture detection model with more training data, tailored for each user. While the posture detection model we currently use is pretty accurate, by using more custom-tailored training data, it would take Upright to the next level. Another step for Upright would be adding Android integration for our mobile app, which currently only supports iOS as of now. | ## Overview

We designed fingerpaint, an app that utilizes visual image processing to implement a drawing tool through motion detection. Using OpenCV, we analyze video streams through a user's webcam footage and use that information to inform the user's interaction with a Canvas. This allows users to more easily whiteboard and prototype basic designs through drawing than with existing systems as Microsoft Paint.

## How it Works

When the app first loads, it opens up on an initialization screen that allows users to calibrate the app to their drawing tool of choice. To simplify the model required, we detected a marker on the user's finger using brightly colored electrical tape. After calibration finishes, the user's marked finger is recognized by the app and can be used to interact with the Canvas (implemented with tkinter). Informed by a history of past points, our model detects where the finger is moving and uses this information to move a cursor. When the user presses the 'a' key while the cursor moves, the user can draw on the Canvas.

## Challenges

This was our first time working with OpenCV/Computer Vision in general, so we had a lot of difficulties in determining how best to leverage all the tools the library has and the best way to accomplish the Computer Vision task we had. We had a lot of iteration playing with different approaches to figure out what would work best.

## Next Steps

Implement more advanced gesture recognition and UI related features (Undos, Saving Images to Local File, etc.) | winning |

## Inspiration

Since this was the first hackathon for most of our group, we wanted to work on a project where we could learn something new while sticking to familiar territory. Thus we settled on programming a discord bot, something all of us have extensive experience using, that works with UiPath, a tool equally as intriguing as it is foreign to us. We wanted to create an application that will allow us to track the prices and other related information of tech products in order to streamline the buying process and enable the user to get the best deals. We decided to program a bot that utilizes user input, web automation, and web-scraping to generate information on various items, focusing on computer components.

## What it does

Once online, our PriceTracker bot runs under two main commands: !add and !price. Using these two commands, a few external CSV files, and UiPath, this stores items input by the user and returns related information found via UiPath's web-scraping features. A concise display of the product’s price, stock, and sale discount is displayed to the user through the Discord bot.

## How we built it

We programmed the Discord bot using the comprehensive discord.py API. Using its thorough documentation and a handful of tutorials online, we quickly learned how to initialize a bot using Discord's personal Development Portal and create commands that would work with specified text channels. To scrape web pages, in our case, the Canada Computers website, we used a UiPath sequence along with the aforementioned CSV file, which contained input retrieved from the bot's "!add" command. In the UiPath process, each product is searched on the Canada Computers website and then through data scraping, the most relevant results from the search and all related information are processed into a csv file. This csv file is then parsed through to create a concise description which is returned in Discord whenever the bot's "!prices" command was called.

## Challenges we ran into

The most challenging aspect of our project was figuring out how to use UiPath. Since Python was such a large part of programming the discord bot, our experience with the language helped exponentially. The same could be said about working with text and CSV files. However, because automation was a topic none of us hardly had any knowledge of; naturally, our first encounter with it was rough. Another big problem with UiPath was learning how to use variables as we wanted to generalize the process so that it would work for any product inputted.

Eventually, with enough perseverance, we were able to incorporate UiPath into our project exactly the way we wanted to.

## Accomplishments that we're proud of

Learning the ins and outs of automation alone was a strenuous task. Being able to incorporate it into a functional program is even more difficult, but incredibly satisfying as well. Albeit small in scale, this introduction to automation serves as a good stepping stone for further research on the topic of automation and its capabilities.

## What we learned

Although we stuck close to our roots by relying on Python for programming the discord bot, we learned a ton of new things about how these bots are initialized, the various attributes and roles they can have, and how we can use IDEs like Pycharm in combination with larger platforms like Discord. Additionally, we learned a great deal about automation and how it functions through UiPath which absolutely fascinated us the first time we saw it in action. As this was the first Hackathon for most of us, we also got a glimpse into what we have been missing out on and how beneficial these competitions can be. Getting the extra push to start working on side-projects and indulging in solo research was greatly appreciated.

## What's next for Tech4U

We went into this project with a plethora of different ideas, and although we were not able to incorporate all of them, we did finish with something we were proud of. Some other ideas we wanted to integrate include: scraping multiple different websites, formatting output differently on Discord, automating the act of purchasing an item, taking input and giving output under the same command, and more. | ## Inspiration

As students, we have found that there are very few high-quality resources on investing for those who are interested but don't have enough resources. Furthermore, we have found that investing and saving money can be a stressful experience. We hope to change this for those who want to save better with the help of our app, hopefully making it fun in the process!

## What it does

Our app first asks a new client a brief questionnaire about themselves. Then, using their banking history, it generates 3 "demons", aka bad spending habits, to kill. Then, after the client chooses a habit to work on, it brings them to a dashboard where they can monitor their weekly progress on a task. Once the week is over, the app declares whether the client successfully beat the mission - if they did, they get rewarded with points which they can exchange for RBC Loyalty points!

## How we built it

We built the frontend using React + Tailwind, using Routes to display our different pages. We used Cohere for our AI services, both for generating personalized weekly goals and creating a more in-depth report. We used Firebase for authentication + cloud database to keep track of users. For our data of users and transactions, as well as making/managing loyalty points, we used the RBC API.

## Challenges we ran into

Piecing the APIs together was probably our most difficult challenge. Besides learning the different APIs in general, integrating the different technologies got quite tricky when we are trying to do multiple things at the same time!

Besides API integration, definitely working without any sleep though was the hardest part!

## Accomplishments that we're proud of

Definitely our biggest accomplishment was working so well together as a team. Despite only meeting each other the day before, we got along extremely well and were able to come up with some great ideas and execute under a lot of pressure (and sleep deprivation!) The biggest reward from this hackathon are the new friends we've found in each other :)

## What we learned

I think each of us learned very different things: this was Homey and Alex's first hackathon, where they learned how to work under a small time constraint (and did extremely well!). Paige learned tons about React, frontend development, and working in a team. Vassily learned lots about his own strengths and weakness (surprisingly reliable at git, apparently, although he might have too much of a sweet tooth).

## What's next for Savvy Saver

Demos! After that, we'll just have to see :) | ## Inspiration

For Watchr, we took inspiration from old-school DVR machines. You know, the kind that records your favourite television show watching at your leisure. Looking at our lives, we saw that we missed countless live web events due to other commitments. And thus, Watchr was born!

## What it does

Watchr is a simple desktop platform that records live screen events. Users can set the target source, live stream time and file directory. A small preview will be displayed to confirm all choices. Users can schedule multiple live stream recordings, perfect for all occasions. When the event rolls around, sit back and let Watchr go to work! Watchr will record live stream events, before automatically downloading and storing them on your device.

Users can also scroll through a list of past live streams recorded through Watchr and delete upcoming scheduled recordings.

## How we built it

Watchr was built on an Electron, with a React.js frontend. We relied on a couple of crucial react libraries such as “react-datetimepicker” and “react-popup” which we styled with CSS.

## Challenges we ran into

While creating Watchr, we ran into a few different problems. One key issue dealt with using unfamiliar technology and software. Getting our bearings on Electron and React took some time, but once we gave ourselves some time to become comfortable with the software, we were able to make solid progress.

Furthermore, we ran into problems with rapidly updating software. For example, many online guides and resources concerning Electron were outdated due to the quick pace at which software updates were released. We also dealt with many merge conflicts, but through this experience, we learned how to avoid and resolve them when working collaboratively.

## Accomplishments that we're proud of

We are proud of creating a working desktop application through Electron and React. This project was the first time many members came into contact with either technology, and we are proud of being able to stretch our thinking and step outside of our comfort zones.

## What we learned

By creating Watchr, we learned the importance of teamwork and communicating with one another in an effective manner. Due to the countless merge conflicts we faced, we wasted many hours that could have been spent creating new features or coding. This will be a learning lesson for all of us next time.

Furthermore, coding Watchr taught many team members fundamentals in Electron and React. Watchr helped to create core skills and helped to inspire many possible future ideas due to our newfound skills. | partial |

## Inspiration

How many times have you opened your fridge door and examined its contents for something to eat/cook/stare at and ended up finding a forgotten container of food in the back of the fridge (a month past its expiry date) instead? Are you brave enough to eat it, or does it immediately go into the trash?

The most likely answer would be to dispose of it right away for health and safety reasons, but you'd be surprised - food wastage is a problem that [many countries such as Canada](https://seeds.ca/schoolfoodgardens/food-waste-in-canada-3/) contend with every year, even as world hunger continues to increase! Big corporations and industries contribute to most of the wastage that occurs worldwide, but we as individual consumers can do our part to reduce food wastage as well by minding our fridges and pantries and making sure we eat everything that we procure for ourselves.

Enter chec:xpire - the little app that helps reduce food wastage, one ingredient at a time!

## What it does

chec:xpire takes stock of the contents of your fridge and informs you which food items are close to their best-before date. chec:xpire also provides a suggested recipe which makes use of the expiring ingredients, allowing you to use the ingredients in your next meal without letting them go to waste due to spoilage!

## How we built it

We built the backend using Express.js, which laid the groundwork for interfacing with Solace, an event broker. The backend tracks food items (in a hypothetical refrigerator) as well as their expiry dates, and picks out those that are two days away from their best-before date so that the user knows to consume them soon. The app also makes use of the co:here AI to retrieve and return recipes that make use of the expiring ingredients, thus providing a convenient way to use up the expiring food items without having to figure out what to do with them in the next couple days.

The frontend is a simple Node.js app that subscribes to "events" (in this case, food approaching their expiry date) through Solace, which sends the message to the frontend app once the two-day mark before the expiry date is reached. A notification is sent to the user detailing which ingredients (and their quantities) are expiring soon, along with a recipe that uses the ingredients up.

## Challenges we ran into

The scope of our project was a little too big for our current skillset; we ran into a few problems finding ways to implement the features that we wanted to include in the project, so we had to find ways to accomplish what we wanted to do using other methods.

## Accomplishments that we're proud of

All but one member of the team are first-time hackathon participants - we're very proud of the fact that we managed to create a working program that did what we wanted it to, despite the hurdles we came across while trying to figure out what frameworks we wanted to use for the project!

## What we learned

* planning out a project that's meant to be completed within 36 hours is difficult, especially if you've never done it before!

* there were some compromises that needed to be made due to a combination of framework-related hiccups and the limitations of our current skillsets, but there's victory to be had in seeing a project through to the end even if we weren't able to accomplish every single little thing we wanted to

* Red Bull gives you wings past midnight, apparently

## What's next for chec:xpire

A fully implemented frontend would be great - we ran out of time! | ## Inspiration

The inspiration for our food waste reduction web app stems from the everyday challenges faced by college students like us. Balancing our limited time and budget, we often found ourselves making frequent grocery runs or inadvertently wasting ingredients forgotten in the fridge. Recognizing the need to optimize resource utilization, we conceived this project. We sought to empower fellow students to minimize food waste by generating innovative recipe suggestions from their leftovers. Our goal is to help users save time, money, and the environment while making the most of their groceries. This project embodies our commitment to efficiency, sustainability, and the pursuit of a more conscientious college life.

## What it does

Our web app revolutionizes kitchen efficiency. Users simply input their available leftovers, and our AI takes charge. It crafts personalized recipe suggestions, utilizing as many mentioned ingredients as possible. This culinary wizardry provides users with a comprehensive array of cooking options for their surplus items, reducing food waste and saving money. Whether you've got a hodgepodge of ingredients or a few stragglers in the fridge, our app transforms them into delectable, resourceful dishes. It's the ultimate kitchen companion, harnessing the power of AI to make every meal a creative and waste-free delight. Say goodbye to food waste and hello to culinary innovation.

## How we built it

Initially, we crafted a user-friendly front-end webpage using HTML, CSS, and JavaScript, complete with an input slot for users to list their leftover food items. Moving to the backend, we employed Python and Flask to build a robust server-side system. This backend handled the critical logic, transforming the user's input into an API request. The magic ingredient, so to speak, came from the OpenAI API, which we integrated to convert the list of ingredients into a treasure trove of creative recipes.

## Challenges we ran into

Bridging the gap between our front-end and back-end code, particularly in a JavaScript environment, posed a significant challenge. As newcomers to commercial APIs, we grappled with the initial learning curve, navigating the intricacies of API implementation. However, through the help of our numerous mentors, we were eventually able to overcome the challenges and learn from the experience.

## Accomplishments that we're proud of

Our journey was marked by significant achievements and personal growth. For many of us, Git and API implementation were uncharted territories at the project's start. However, we are immensely proud of our collective dedication and determination, which enabled us to not only learn but also seamlessly integrate these two key skills into our project. The ability to navigate Git and harness the power of APIs has not only enriched our project but also expanded our own skill sets, making us better-equipped developers and problem solvers. These accomplishments stand as a testament to our commitment to continual learning and improvement.

## What we learned

We learned a wide range of both technical and non-technical skills from this project.

Some new technical skills that we learned, that were new to most or all of us were:

Flask,

Git,

APIs,

Hosting backed and frontend servers separately,

Javascript,

collaboration and peer programming.

## What's next for Pantry Puzzle

An additional feature that allows users to take a photo of their leftovers for an image-to-text conversion, instead of manually inserting as text. | ## Inspiration

The first step of our development process was conducting user interviews with University students within our social circles. When asked of some recently developed pain points, 40% of respondents stated that grocery shopping has become increasingly stressful and difficult with the ongoing COVID-19 pandemic. The respondents also stated that some motivations included a loss of disposable time (due to an increase in workload from online learning), tight spending budgets, and fear of exposure to covid-19.

While developing our product strategy, we realized that a significant pain point in grocery shopping is the process of price-checking between different stores. This process would either require the user to visit each store (in-person and/or online) and check the inventory and manually price check. Consolidated platforms to help with grocery list generation and payment do not exist in the market today - as such, we decided to explore this idea.

**What does G.e.o.r.g.e stand for? : Grocery Examiner Organizer Registrator Generator (for) Everyone**

## What it does

The high-level workflow can be broken down into three major components:

1: Python (flask) and Firebase backend

2: React frontend

3: Stripe API integration

Our backend flask server is responsible for web scraping and generating semantic, usable JSON code for each product item, which is passed through to our React frontend.

Our React frontend acts as the hub for tangible user-product interactions. Users are given the option to search for grocery products, add them to a grocery list, generate the cheapest possible list, compare prices between stores, and make a direct payment for their groceries through the Stripe API.

## How we built it

We started our product development process with brainstorming various topics we would be interested in working on. Once we decided to proceed with our payment service application. We drew up designs as well as prototyped using Figma, then proceeded to implement the front end designs with React. Our backend uses Flask to handle Stripe API requests as well as web scraping. We also used Firebase to handle user authentication and storage of user data.

## Challenges we ran into

Once we had finished coming up with our problem scope, one of the first challenges we ran into was finding a reliable way to obtain grocery store information. There are no readily available APIs to access price data for grocery stores so we decided to do our own web scraping. This lead to complications with slower server response since some grocery stores have dynamically generated websites, causing some query results to be slower than desired. Due to the limited price availability of some grocery stores, we decided to pivot our focus towards e-commerce and online grocery vendors, which would allow us to flesh out our end-to-end workflow.

## Accomplishments that we're proud of