datasetId

large_stringlengths 6

110

| author

large_stringlengths 3

34

| last_modified

large_stringdate 2021-05-20 00:57:22

2025-05-07 08:14:41

| downloads

int64 0

3.97M

| likes

int64 0

7.74k

| tags

large listlengths 1

2.03k

| task_categories

large listlengths 0

16

| createdAt

large_stringdate 2022-03-02 23:29:22

2025-05-07 08:13:27

| trending_score

float64 1

39

⌀ | card

large_stringlengths 31

1M

|

|---|---|---|---|---|---|---|---|---|---|

allganize/IFEval-Ko | allganize | 2025-04-29T06:10:10Z | 107 | 2 | [

"task_categories:text-generation",

"language:ko",

"license:apache-2.0",

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"arxiv:2311.07911",

"region:us",

"InstructionFollowing",

"IF"

] | [

"text-generation"

] | 2025-04-17T06:39:10Z | 2 | ---

dataset_info:

features:

- name: key

dtype: int64

- name: prompt

dtype: string

- name: instruction_id_list

sequence: string

- name: kwargs

list:

- name: capital_frequency

dtype: 'null'

- name: capital_relation

dtype: 'null'

- name: end_phrase

dtype: string

- name: first_word

dtype: string

- name: forbidden_words

sequence: string

- name: frequency

dtype: int64

- name: keyword

dtype: string

- name: keywords

sequence: string

- name: language

dtype: string

- name: let_frequency

dtype: 'null'

- name: let_relation

dtype: 'null'

- name: letter

dtype: 'null'

- name: nth_paragraph

dtype: int64

- name: num_bullets

dtype: int64

- name: num_highlights

dtype: int64

- name: num_paragraphs

dtype: int64

- name: num_placeholders

dtype: int64

- name: num_sections

dtype: int64

- name: num_sentences

dtype: int64

- name: num_words

dtype: int64

- name: postscript_marker

dtype: string

- name: prompt_to_repeat

dtype: string

- name: relation

dtype: string

- name: section_spliter

dtype: string

splits:

- name: train

num_bytes: 168406

num_examples: 342

download_size: 67072

dataset_size: 168406

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

language:

- ko

license: apache-2.0

task_categories:

- text-generation

tags:

- InstructionFollowing

- IF

size_categories:

- n<1K

---

# IFEval-Ko: Korean Instruction-Following Benchmark for LLMs

> This dataset is originated from [IFEval](https://huggingface.co/datasets/google/IFEval/) Dataset

[Korean Version README](https://huggingface.co/datasets/allganize/IFEval-Ko/blob/main/README_Ko.md)

`IFEval-Ko` is a Korean adaptation of Google's open-source **IFEval** benchmark utilized with [lm-evaluation-harness](https://github.com/EleutherAI/lm-evaluation-harness) framework.

It enables evaluation of large language models (LLMs) for their instruction-following capabilities in the Korean language.

## Dataset Details

- **Original Source**: [google/IFEval](https://huggingface.co/datasets/google/IFEval/)

- **Adaptation Author**: [Allganize Inc. LLM TEAM](https://www.allganize.ai/) | Keonmo Lee

- **Repository**: [allganize/IFEval-Ko](https://huggingface.co/datasets/allganize/IFEval-Ko)

- **Languages**: Korean

- **Translation Tool**: GPT-4o

- **License**: Follows original [google/IFEval](https://huggingface.co/datasets/google/IFEval/) license

- **Benchmarked with**: [lm-evaluation-harness](https://github.com/EleutherAI/lm-evaluation-harness)

## Benchmark Scores

## How to Use

Clone `lm-evaluation-harness` and create the `ifeval_ko` folder into the `lm_eval/tasks` directory.

```bash

# Install lm-evaluation-harness and task dependencies

git clone --depth 1 https://github.com/EleutherAI/lm-evaluation-harness.git

cd lm-evaluation-harness

pip install -e .

pip install langdetect immutabledict

# Download task files from Hugging Face Repository

python3 -c "

from huggingface_hub import snapshot_download

snapshot_download(

repo_id='allganize/IFEval-Ko',

repo_type='dataset',

local_dir='lm_eval/tasks/',

allow_patterns='ifeval_ko/*',

local_dir_use_symlinks=False

) "

```

***Please check usage of `lm_eval` on original [lm-evaluation-harness](https://github.com/EleutherAI/lm-evaluation-harness) repository before use.***

### Evaluation with Hugging Face Transformers

```bash

lm_eval --model hf \

--model_args pretrained={HF_MODEL_REPO} \

--tasks ifeval_ko \

--device cuda:0 \

--batch_size 8

```

e.g., {HF_MODEL_REPO} = google/gemma-3-4b-it

### Evaluation with vLLM

Install vLLM-compatible backend:

```bash

pip install lm-eval[vllm]

```

Then run the evaluation:

```bash

lm_eval --model vllm \

--model_args pretrained={HF_MODEL_REPO},trust_remote_code=True \

--tasks ifeval_ko

```

---

## Modifications from Original IFEval

### Data Transformation

- **Translation**: Prompts were translated using the **gpt-4o** model, with a custom prompt designed to preserve the original structure.

- **Removed Items**:

- 84 case-sensitive (`change_case`) tasks

- 28 alphabet-dependent (`letter_frequency`) tasks

- Other erroneous or culturally inappropriate prompts

- **Unit Conversions**:

- Gallons → Liters

- Feet/Inches → Meters/Centimeters

- Dollars → Korean Won (USD:KRW ≈ 1:1500)

- **Standardizations**:

- Unified headings \<\<Title\>\> or \<\<title\>\> to \<\<제목\>\>

- Ensured consistent tone across answers

### Code Changes

- Translated instruction options:

- `instruction._CONSTRAINED_RESPONSE_OPTIONS`

- `instruction._ENDING_OPTIONS`

- Modified scoring classes:

- `KeywordChecker`, `KeywordFrequencyChecker`, `ParagraphFirstWordCheck`, `KeySentenceChecker`, `ForbiddenWords`, `RepeatPromptThenAnswer`, `EndChecker`

- Applied `unicodedata.normalize('NFC', ...)` for normalization

- Removed fallback keyword generator for missing fields (now throws error)

- Removed dependency on `nltk` by modifying `count_sentences()` logic

---

## Evaluation Metrics

Please refer to [original IFEval paper](https://arxiv.org/pdf/2311.07911):

### Strict vs. Loose Accuracy

- **Strict**: Checks if the model followed the instruction *without* transformation of response.

- **Loose**: Applies 3 transformations to response before comparison:

1. Remove markdown symbols (`*`, `**`)

2. Remove the first line (e.g., "Here is your response:")

3. Remove the last line (e.g., "Did that help?")

A sample is marked correct if *any* of the 8 combinations match.

### Prompt-level vs. Instruction-level

- **Prompt-level**: All instructions in a single prompt must be followed to count as True.

- **Instruction-level**: Evaluates each instruction separately for finer-grained metrics.

Created by

Allganize LLM TEAM

[**Keonmo Lee (이건모)**](https://huggingface.co/whatisthis8047)

### Original Citation Information

```bibtex

@misc{zhou2023instructionfollowingevaluationlargelanguage,

title={Instruction-Following Evaluation for Large Language Models},

author={Jeffrey Zhou and Tianjian Lu and Swaroop Mishra and Siddhartha Brahma and Sujoy Basu and Yi Luan and Denny Zhou and Le Hou},

year={2023},

eprint={2311.07911},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2311.07911},

}

``` |

Uni-MoE/VideoVista-CulturalLingo | Uni-MoE | 2025-04-29T06:10:09Z | 159 | 3 | [

"task_categories:video-text-to-text",

"language:zh",

"language:en",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"arxiv:2504.17821",

"region:us"

] | [

"video-text-to-text"

] | 2025-04-06T09:18:02Z | 3 | ---

language:

- zh

- en

license: apache-2.0

size_categories:

- 1K<n<10K

task_categories:

- video-text-to-text

---

<a href="https://arxiv.org/abs/2504.17821" target="_blank">

<img alt="arXiv" src="https://img.shields.io/badge/arXiv-VideoVista--CulturalLingo-red?logo=arxiv" height="20" />

</a>

<a href="https://videovista-culturallingo.github.io/" target="_blank">

<img alt="Website" src="https://img.shields.io/badge/🌎_Website-VideoVista--CulturalLingo-blue.svg" height="20" />

</a>

<a href="https://github.com/HITsz-TMG/VideoVista/tree/main/VideoVista-CulturalLingo" style="display: inline-block; margin-right: 10px;">

<img alt="GitHub Code" src="https://img.shields.io/badge/Code-VideoVista--CulturalLingo-white?&logo=github&logoColor=white" />

</a>

# VideoVista-CulturalLingo

This repository contains the VideoVista-CulturalLingo, introduced in [VideoVista-CulturalLingo: 360° Horizons-Bridging Cultures, Languages,

and Domains in Video Comprehension](https://arxiv.org/pdf/2504.17821).

## Files

We provice the questions in both 'test-00000-of-00001.parquet' and 'VideoVista-CulturalLingo.json' files.

To unzip the videos, using the follow code.

```shell

cat videos.zip.* > combined_videos.zip

unzip combined_videos.zip

```

<!-- The `test-00000-of-00001.parquet` file contains the complete dataset annotations and pre-loaded images, ready for processing with HF Datasets. It can be loaded using the following code:

```python

from datasets import load_dataset

videovista_culturallingo = load_dataset("Uni-MoE/VideoVista-CulturalLingo")

```

Additionally, we provide the videos in `*.zip`.

We also provide the json file 'VideoVista-CulturalLingo.json'. -->

## Dataset Description

The dataset contains the following fields:

| Field Name | Description |

| :--------- | :---------- |

| `video_id` | Index of origin video |

| `question_id` | Global index of the entry in the dataset |

| `video_path` | Video name of corresponding video file |

| `question` | Question asked about the video |

| `options` | Choices for the question |

| `answer` | Ground truth answer for the question |

| `category` | Category of question |

| `subcategory` | Detailed category of question |

| `language` | Language of Video and Question |

## Evaluation

We use `Accuracy` to evaluates performance of VideoVista-CulturalLingo.

We provide an evaluation code of VideoVista-CulturalLingo in our [GitHub repository](https://github.com/HITsz-TMG/VideoVista/tree/main/VideoVista-CulturalLingo).

## Citation

If you find VideoVista-CulturalLingo useful for your research and applications, please cite using this BibTeX:

```bibtex

@misc{chen2025videovistaculturallingo,

title={VideoVista-CulturalLingo: 360$^\circ$ Horizons-Bridging Cultures, Languages, and Domains in Video Comprehension},

author={Xinyu Chen and Yunxin Li and Haoyuan Shi and Baotian Hu and Wenhan Luo and Yaowei Wang and Min Zhang},

year={2025},

eprint={2504.17821},

archivePrefix={arXiv},

}

``` |

yu0226/CipherBank | yu0226 | 2025-04-29T02:11:52Z | 624 | 3 | [

"task_categories:question-answering",

"language:en",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:json",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"arxiv:2504.19093",

"region:us",

"Reasoning",

"LLM",

"Encryption",

"Decryption"

] | [

"question-answering"

] | 2025-04-18T06:22:07Z | 3 | ---

license: apache-2.0

task_categories:

- question-answering

language:

- en

tags:

- Reasoning

- LLM

- Encryption

- Decryption

size_categories:

- 1K<n<10K

configs:

- config_name: Rot13

data_files:

- split: test

path: data/Rot13.jsonl

- config_name: Atbash

data_files:

- split: test

path: data/Atbash.jsonl

- config_name: Polybius

data_files:

- split: test

path: data/Polybius.jsonl

- config_name: Vigenere

data_files:

- split: test

path: data/Vigenere.jsonl

- config_name: Reverse

data_files:

- split: test

path: data/Reverse.jsonl

- config_name: SwapPairs

data_files:

- split: test

path: data/SwapPairs.jsonl

- config_name: ParityShift

data_files:

- split: test

path: data/ParityShift.jsonl

- config_name: DualAvgCode

data_files:

- split: test

path: data/DualAvgCode.jsonl

- config_name: WordShift

data_files:

- split: test

path: data/WordShift.jsonl

---

# CipherBank Benchmark

## Benchmark description

CipherBank, a comprehensive benchmark designed to evaluate the reasoning capabilities of LLMs in cryptographic decryption tasks.

CipherBank comprises 2,358 meticulously crafted problems, covering 262 unique plaintexts across 5 domains and 14 subdomains, with a focus on privacy-sensitive and real-world scenarios that necessitate encryption. From a cryptographic perspective, CipherBank incorporates 3 major categories of encryption methods, spanning 9 distinct algorithms, ranging from classical ciphers to custom cryptographic techniques.

## Model Performance

We evaluate state-of-the-art LLMs on CipherBank, e.g., GPT-4o, DeepSeek-V3, and cutting-edge reasoning-focused models such as o1 and DeepSeek-R1. Our results reveal significant gaps in reasoning abilities not only between general-purpose chat LLMs and reasoning-focused LLMs but also in the performance of current reasoning-focused models when applied to classical cryptographic decryption tasks, highlighting the challenges these models face in understanding and manipulating encrypted data.

| **Model** | **CipherBank Score (%)**|

|--------------|----|

|Qwen2.5-72B-Instruct |0.55 |

|Llama-3.1-70B-Instruct |0.38 |

|DeepSeek-V3 | 9.86 |

|GPT-4o-mini-2024-07-18 | 1.00 |

|GPT-4o-2024-08-06 | 8.82 |

|gemini-1.5-pro | 9.54 |

|gemini-2.0-flash-exp | 8.65|

|**Claude-Sonnet-3.5-1022** | **45.14** |

|DeepSeek-R1 | 25.91 |

|gemini-2.0-flash-thinking | 13.49 |

|o1-mini-2024-09-12 | 20.07 |

|**o1-2024-12-17** | **40.59** |

## Please see paper & website for more information:

- [https://arxiv.org/abs/2504.19093](https://arxiv.org/abs/2504.19093)

- [https://cipherbankeva.github.io/](https://cipherbankeva.github.io/)

## Citation

If you find CipherBank useful for your research and applications, please cite using this BibTeX:

```bibtex

@misc{li2025cipherbankexploringboundaryllm,

title={CipherBank: Exploring the Boundary of LLM Reasoning Capabilities through Cryptography Challenges},

author={Yu Li and Qizhi Pei and Mengyuan Sun and Honglin Lin and Chenlin Ming and Xin Gao and Jiang Wu and Conghui He and Lijun Wu},

year={2025},

eprint={2504.19093},

archivePrefix={arXiv},

primaryClass={cs.CR},

url={https://arxiv.org/abs/2504.19093},

}

```

|

Anthropic/values-in-the-wild | Anthropic | 2025-04-28T17:31:57Z | 549 | 120 | [

"license:cc-by-4.0",

"size_categories:1K<n<10K",

"format:csv",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-04-10T06:04:36Z | null | ---

license: cc-by-4.0

configs:

- config_name: values_frequencies

data_files: values_frequencies.csv

- config_name: values_tree

data_files: values_tree.csv

---

## Summary

This dataset presents a comprehensive taxonomy of 3307 values expressed by Claude (an AI assistant) across hundreds of thousands of real-world conversations. Using a novel privacy-preserving methodology, these values were extracted and classified without human reviewers accessing any conversation content. The dataset reveals patterns in how AI systems express values "in the wild" when interacting with diverse users and tasks.

We're releasing this resource to advance research in two key areas: understanding value expression in deployed language models and supporting broader values research across disciplines. By providing empirical data on AI values "in the wild," we hope to move toward a more grounded understanding of how values manifest in human-AI interactions.

For information on how this dataset was constructed, and related analysis, please see the accompanying paper: [Values in the Wild: Discovering and Analyzing Values in Real-World Language Model Interactions](https://assets.anthropic.com/m/18d20cca3cde3503/original/Values-in-the-Wild-Paper.pdf).

**Note:** You can interpret the occurrence of each value in the dataset as "The AI's response demonstrated valuing {VALUE}." For example, for the value of "accuracy" (5.3% frequency), this means that our methods detected that Claude's response demonstrated *valuing* accuracy 5.3% of the time (not that it *was* accurate in 5.3% of conversations).

## Dataset Description

The dataset includes two CSV files:

1. `values_frequencies.csv`

- This shows every extracted AI value along with their frequency of occurrence across the conversation sample. There are two columns:

- `value`: The value label (e.g. `accuracy` or `helpfulness`).

- `pct_convos`: The percentage of the subjective conversation sample that that this value was detected in, rounded to 3 decimal places.

- This is sorted by the `pct_convos` column.

2. `values_tree.csv`

- This shows the hierarchical taxonomy of values, where we sequentially cluster/group the values into higher-level categories. There are six columns:

- `cluster_id`: If `level > 0`, this denotes the ID of the cluster of values. If `level = 0`, this is just identical to the `name` of the extracted value.

- `description`: If `level > 0`, the Claude-generated description of the cluster of values.

- `name`: The name of the extracted value itself (if `level = 0`, or the cluster of values (if `level > 0`).

- `level`: Out of `0, 1, 2, 3`, which level of the taxonomy is this value/cluster of values at. `level = 0` means the lowest level, i.e. the individual values; `level = 3` is the highest level (e.g. "Epistemic values").

- `parent_cluster_id`: The `cluster_id` of the higher-level parent cluster of this.

- `pct_total_occurrences`: The percentage of the total *number of values expressions* that was expressions of this value, rounded to 3 decimal places.

- This is sorted by the `parent_cluster_id` column, so that values clustered together appear together.

## Disclaimer

Please note that the extracted values, descriptions and cluster names were generated by a language model and may contain inaccuracies. While we conducted human evaluation on our values extractor to assess quality, and manually checked the hierarchy for clarity and accuracy, inferring values is an inherently subjective endeavor, and there may still be errors. The dataset is intended for research purposes only and should not be considered a definitive assessment of what values may be expressed by Claude, or language models in general.

## Usage

```python

from datasets import load_dataset

dataset_values_frequencies = load_dataset("Anthropic/values-in-the-wild", "values_frequencies")

dataset_values_tree = load_dataset("Anthropic/values-in-the-wild", "values_tree")

```

## Contact

For questions, you can email saffron at anthropic dot com |

OpenDriveLab/OpenScene | OpenDriveLab | 2025-04-28T07:13:35Z | 28,792 | 5 | [

"license:cc-by-nc-sa-4.0",

"region:us"

] | [] | 2024-03-02T04:33:04Z | null | ---

license: cc-by-nc-sa-4.0

--- |

nvidia/PhysicalAI-SmartSpaces | nvidia | 2025-04-28T03:56:47Z | 2,951 | 18 | [

"license:cc-by-4.0",

"arxiv:2404.09432",

"arxiv:2412.00692",

"region:us"

] | [] | 2025-03-13T19:33:51Z | 4 | ---

license: cc-by-4.0

---

# Physical AI Smart Spaces Dataset

## Overview

Comprehensive, annotated dataset for multi-camera tracking and 2D/3D object detection. This dataset is synthetically generated with Omniverse.

This dataset consists of over 250 hours of video from across nearly 1,500 cameras from indoor scenes in warehouses, hospitals, retail, and more. The dataset is time synchronized for tracking humans across multiple cameras using feature representation and no personal data.

## Dataset Description

### Dataset Owner(s)

NVIDIA

### Dataset Creation Date

We started to create this dataset in December, 2023. First version was completed and released as part of 8th AI City Challenge in conjunction with CVPR 2024.

### Dataset Characterization

- Data Collection Method: Synthetic

- Labeling Method: Automatic with IsaacSim

### Video Format

- Video Standard: MP4 (H.264)

- Video Resolution: 1080p

- Video Frame rate: 30 FPS

### Ground Truth Format (MOTChallenge) for `MTMC_Tracking_2024`

Annotations are provided in the following text format per line:

```

<camera_id> <obj_id> <frame_id> <xmin> <ymin> <width> <height> <xworld> <yworld>

```

- `<camera_id>`: Numeric identifier for the camera.

- `<obj_id>`: Consistent numeric identifier for each object across cameras.

- `<frame_id>`: Frame index starting from 0.

- `<xmin> <ymin> <width> <height>`: Axis-aligned bounding box coordinates in pixels (top-left origin).

- `<xworld> <yworld>`: Global coordinates (projected bottom points of objects) based on provided camera matrices.

The video file and calibration (camera matrix and homography) are provided for each camera view.

Calibration and ground truth files in the updated 2025 JSON format are now also included for each scene.

Note: some calibration fields—such as camera coordinates, camera directions, and scale factors—are not be available for the 2024 dataset due to original data limitations.

### Directory Structure for `MTMC_Tracking_2025`

- `videos/`: Video files.

- `depth_maps/`: Depth maps stored as PNG images and compressed within HDF5 files. These files are exceedingly large; you may choose to use RGB videos only if preferred.

- `ground_truth.json`: Detailed ground truth annotations (see below).

- `calibration.json`: Camera calibration and metadata.

- `map.png`: Visualization map in top-down view.

### Ground Truth Format (JSON) for `MTMC_Tracking_2025`

Annotations per frame:

```json

{

"<frame_id>": [

{

"object_type": "<class_name>",

"object_id": <int>,

"3d_location": [x, y, z],

"3d_bounding_box_scale": [w, l, h],

"3d_bounding_box_rotation": [pitch, roll, yaw],

"2d_bounding_box_visible": {

"<camera_id>": [xmin, ymin, xmax, ymax]

}

}

]

}

```

### Calibration Format (JSON) for `MTMC_Tracking_2025`

Contains detailed calibration metadata per sensor:

```json

{

"calibrationType": "cartesian",

"sensors": [

{

"type": "camera",

"id": "<sensor_id>",

"coordinates": {"x": float, "y": float},

"scaleFactor": float,

"translationToGlobalCoordinates": {"x": float, "y": float},

"attributes": [

{"name": "fps", "value": float},

{"name": "direction", "value": float},

{"name": "direction3d", "value": "float,float,float"},

{"name": "frameWidth", "value": int},

{"name": "frameHeight", "value": int}

],

"intrinsicMatrix": [[f_x, 0, c_x], [0, f_y, c_y], [0, 0, 1]],

"extrinsicMatrix": [[3×4 matrix]],

"cameraMatrix": [[3×4 matrix]],

"homography": [[3×3 matrix]]

}

]

}

```

### Evaluation

- **2024 Edition**: Evaluation based on HOTA scores at the [2024 AI City Challenge Server](https://eval.aicitychallenge.org/aicity2024). The submission is currently disabled, as the ground truths of test set are provided with this release.

- **2025 Edition**: Evaluation system and test set forthcoming in the 2025 AI City Challenge.

## Dataset Quantification

| Dataset | Annotation Type | Hours | Cameras | Object Classes & Counts | No. 3D Boxes | No. 2D Boxes | Depth Maps | Total Size |

|-------------------------|-------------------------------------------------------|-------|---------|---------------------------------------------------------------|--------------|--------------|------------|------------|

| **MTMC_Tracking_2024** | 2D bounding boxes, multi-camera tracking IDs | 212 | 953 | Person: 2,481 | 52M | 135M | No | 213 GB |

| **MTMC_Tracking_2025**<br>(Train & Validation only) | 2D & 3D bounding boxes, multi-camera tracking IDs | 42 | 504 | Person: 292<br>Forklift: 13<br>NovaCarter: 28<br>Transporter: 23<br>FourierGR1T2: 6<br>AgilityDigit: 1<br>**Overall:** 363 | 8.9M | 73M | Yes | 74 GB (excluding depth maps) |

## References

Please cite the following papers when using this dataset:

```bibtex

@InProceedings{Wang24AICity24,

author = {Shuo Wang and David C. Anastasiu and Zheng Tang and Ming-Ching Chang and Yue Yao and Liang Zheng and Mohammed Shaiqur Rahman and Meenakshi S. Arya and Anuj Sharma and Pranamesh Chakraborty and Sanjita Prajapati and Quan Kong and Norimasa Kobori and Munkhjargal Gochoo and Munkh-Erdene Otgonbold and Ganzorig Batnasan and Fady Alnajjar and Ping-Yang Chen and Jun-Wei Hsieh and Xunlei Wu and Sameer Satish Pusegaonkar and Yizhou Wang and Sujit Biswas and Rama Chellappa},

title = {The 8th {AI City Challenge},

booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},

note = {arXiv:2404.09432},

month = {June},

year = {2024},

}

@misc{Wang24BEVSUSHI,

author = {Yizhou Wang and Tim Meinhardt and Orcun Cetintas and Cheng-Yen Yang and Sameer Satish Pusegaonkar and Benjamin Missaoui and Sujit Biswas and Zheng Tang and Laura Leal-Taix{\'e}},

title = {{BEV-SUSHI}: {M}ulti-target multi-camera {3D} detection and tracking in bird's-eye view},

note = {arXiv:2412.00692},

year = {2024}

}

```

## Ethical Considerations

NVIDIA believes Trustworthy AI is a shared responsibility and we have established policies and practices to enable development for a wide array of AI applications. When downloaded or used in accordance with our terms of service, developers should work with their internal model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse.

Please report security vulnerabilities or NVIDIA AI Concerns [here](https://www.nvidia.com/en-us/support/submit-security-vulnerability/).

## Changelog

- **2025-04-27**: Added depth maps to all `MTMC_Tracking_2025` scenes.

- **2025-04-23**: Added 2025-format calibration and ground truth JSON files to all `MTMC_Tracking_2024` scenes. |

moonshotai/Kimi-Audio-GenTest | moonshotai | 2025-04-28T03:45:53Z | 139 | 2 | [

"language:zh",

"license:mit",

"size_categories:n<1K",

"format:audiofolder",

"modality:audio",

"modality:text",

"library:datasets",

"library:mlcroissant",

"region:us",

"speech generation",

"chinese"

] | [] | 2025-04-28T03:44:52Z | 2 | ---

# Required: Specify the license for your dataset

license: [mit]

# Required: Specify the language(s) of the dataset

language:

- zh # 中文

# Optional: Add tags for discoverability

tags:

- speech generation

- chinese

# Required: A pretty name for your dataset card

pretty_name: "Kimi-Audio-Generation-Testset"

---

# Kimi-Audio-Generation-Testset

## Dataset Description

**Summary:** This dataset is designed to benchmark and evaluate the conversational capabilities of audio-based dialogue models. It consists of a collection of audio files containing various instructions and conversational prompts. The primary goal is to assess a model's ability to generate not just relevant, but also *appropriately styled* audio responses.

Specifically, the dataset targets the model's proficiency in:

* **Paralinguistic Control:** Generating responses with specific control over **emotion**, speaking **speed**, and **accent**.

* **Empathetic Dialogue:** Engaging in conversations that demonstrate understanding and **empathy**.

* **Style Adaptation:** Delivering responses in distinct styles, including **storytelling** and reciting **tongue twisters**.

Audio conversation models are expected to process the input audio instructions and generate reasonable, contextually relevant audio responses. The quality, appropriateness, and adherence to the instructed characteristics (like emotion or style) of the generated responses are evaluated through **human assessment**.

* **Languages:** zh (中文)

## Dataset Structure

### Data Instances

Each line in the `test/metadata.jsonl` file is a JSON object representing a data sample. The `datasets` library uses the path in the `file_name` field to load the corresponding audio file.

**示例:**

```json

{"audio_content": "你能不能快速地背一遍李白的静夜思", "ability": "speed", "file_name": "wav/6.wav"} |

nvidia/Llama-Nemotron-Post-Training-Dataset | nvidia | 2025-04-27T18:10:38Z | 8,510 | 432 | [

"license:cc-by-4.0",

"size_categories:1M<n<10M",

"format:json",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"region:us"

] | [] | 2025-03-13T21:01:09Z | null | ---

license: cc-by-4.0

configs:

- config_name: SFT

data_files:

- split: code

path: SFT/code/*.jsonl

- split: math

path: SFT/math/*.jsonl

- split: science

path: SFT/science/*.jsonl

- split: chat

path: SFT/chat/*.jsonl

- split: safety

path: SFT/safety/*.jsonl

default: true

- config_name: RL

data_files:

- split: instruction_following

path: RL/instruction_following/*.jsonl

---

# Llama-Nemotron-Post-Training-Dataset-v1.1 Release

**Update [4/8/2025]:**

**v1.1:** We are releasing an additional 2.2M Math and 500K Code Reasoning Data in support of our release of [Llama-3.1-Nemotron-Ultra-253B-v1](https://huggingface.co/nvidia/Llama-3_1-Nemotron-Ultra-253B-v1). 🎉

## Data Overview

This dataset is a compilation of SFT and RL data that supports improvements of math, code, general reasoning, and instruction following capabilities of the original Llama instruct model, in support of NVIDIA’s release of [Llama-3.1-Nemotron-Ultra-253B-v1](https://huggingface.co/nvidia/Llama-3_1-Nemotron-Ultra-253B-v1), [Llama-3.3-Nemotron-Super-49B-v1](https://huggingface.co/nvidia/Llama-3_3-Nemotron-Super-49B-v1) and [Llama-3.1-Nemotron-Nano-8B-v1](https://huggingface.co/nvidia/Llama-3.1-Nemotron-Nano-8B-v1).

Llama-3.1-Nemotron-Ultra-253B-v1 is a large language model (LLM) which is a derivative of [Meta Llama-3.1-405B-Instruct](https://huggingface.co/meta-llama/Llama-3.1-405B-Instruct) (AKA the *reference model*).

Llama-3.3-Nemotron-Super-49B-v1 is an LLM which is a derivative of [Meta Llama-3.3-70B-Instruct](https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct) (AKA the *reference model*). Llama-3.1-Nemotron-Nano-8B-v1 is an LLM which is a derivative of [Meta Llama-3.1-8B-Instruct](https://huggingface.co/meta-llama/Llama-3.1-8B-Instruct) (AKA the *reference model*). They are aligned for human chat preferences, and tasks.

These models offer a great tradeoff between model accuracy and efficiency. Efficiency (throughput) directly translates to savings. Using a novel Neural Architecture Search (NAS) approach, we greatly reduce the model’s memory footprint and enable larger workloads. This NAS approach enables the selection of a desired point in the accuracy-efficiency tradeoff. The models support a context length of 128K.

This dataset release represents a significant move forward in openness and transparency in model development and improvement. By releasing the complete training set, in addition to the training technique, tools and final model weights, NVIDIA supports both the re-creation and the improvement of our approach.

## Data distribution

| Category | Value |

|----------|-----------|

| math | 22,066,397|

| code | 10,108,883 |

| science | 708,920 |

| instruction following | 56,339 |

| chat | 39,792 |

| safety | 31,426 |

## Filtering the data

Users can download subsets of the data based on the metadata schema described above. Example script for downloading code and math as follows:

```

from datasets import load_dataset

ds = load_dataset("nvidia/Llama-Nemotron-Post-Training-Dataset", "SFT", split=["code", "math"])

```

## Prompts

Prompts have been sourced from either public and open corpus or synthetically generated. All responses have been synthetically generated from public and open models.

The prompts were extracted, and then filtered for quality and complexity, or generated to meet quality and complexity requirements. This included filtration such as removing inconsistent prompts, prompts with answers that are easy to guess, and removing prompts with incorrect syntax.

## Responses

Responses were synthetically generated by a variety of models, with some prompts containing responses for both reasoning on and off modes, to train the model to distinguish between two modes.

Models that were used in the creation of this dataset:

| Model | Number of Samples |

|----------|-----------|

| Llama-3.3-70B-Instruct | 420,021 |

| Llama-3.1-Nemotron-70B-Instruct | 31,218 |

| Llama-3.3-Nemotron-70B-Feedback/Edit/Select | 22,644 |

| Mixtral-8x22B-Instruct-v0.1 | 31,426 |

| DeepSeek-R1 | 3,934,627 |

| Qwen-2.5-Math-7B-Instruct | 19,840,970 |

| Qwen-2.5-Coder-32B-Instruct | 8,917,167 |

| Qwen-2.5-72B-Instruct | 464,658 |

| Qwen-2.5-32B-Instruct | 2,297,175 |

## License/Terms of Use

The dataset contains information about license type on a per sample basis. The dataset is predominantly CC-BY-4.0, with a small subset of prompts from Wildchat having an ODC-BY license and a small subset of prompts from StackOverflow with CC-BY-SA license.

This dataset contains synthetic data created using Llama-3.3-70B-Instruct, Llama-3.1-Nemotron-70B-Instruct and

Llama-3.3-Nemotron-70B-Feedback/Edit/Select (ITS models). If this dataset is used to create, train, fine tune, or otherwise improve an AI model, which is distributed or made available, such AI model may be subject to redistribution and use requirements in the Llama 3.1 Community License Agreement and Llama 3.3 Community License Agreement.

**Data Developer:** NVIDIA

### Use Case: <br>

Developers training AI Agent systems, chatbots, RAG systems, and other AI-powered applications. <br>

### Release Date: <br>

4/8/2025 <br>

## Data Version

1.1 (4/8/2025)

## Intended use

The Llama Nemotron Post-Training Dataset is intended to be used by the community to continue to improve open models. The data may be freely used to train and evaluate.

## Ethical Considerations:

NVIDIA believes Trustworthy AI is a shared responsibility and we have established policies and practices to enable development for a wide array of AI applications. When downloaded or used in accordance with our terms of service, developers should work with their internal model team to ensure this model meets requirements for the relevant industry and use case and addresses unforeseen product misuse.

Please report security vulnerabilities or NVIDIA AI Concerns [here](https://www.nvidia.com/en-us/support/submit-security-vulnerability/).

## Data Opt-Out:

NVIDIA has undertaken legal review to ensure there is no confidential, PII or copyright materials. If, when reviewing or using this dataset, you identify issues with the data itself, such as those listed above, please contact [email protected].

|

ByteDance-Seed/Multi-SWE-bench_trajs | ByteDance-Seed | 2025-04-27T06:43:10Z | 68,906 | 1 | [

"task_categories:text-generation",

"license:other",

"arxiv:2504.02605",

"region:us",

"code"

] | [

"text-generation"

] | 2025-04-14T08:08:31Z | null | ---

license: other

task_categories:

- text-generation

tags:

- code

---

## 🧠 Multi-SWE-bench Trajectories

This repository stores **all trajectories and logs** generated by agents evaluated on the [Multi-SWE-bench](https://multi-swe-bench.github.io) leaderboard.

## 📚 Citation

```

@misc{zan2025multiswebench,

title={Multi-SWE-bench: A Multilingual Benchmark for Issue Resolving},

author={Daoguang Zan and Zhirong Huang and Wei Liu and Hanwu Chen and Linhao Zhang and Shulin Xin and Lu Chen and Qi Liu and Xiaojian Zhong and Aoyan Li and Siyao Liu and Yongsheng Xiao and Liangqiang Chen and Yuyu Zhang and Jing Su and Tianyu Liu and Rui Long and Kai Shen and Liang Xiang},

year={2025},

eprint={2504.02605},

archivePrefix={arXiv},

primaryClass={cs.SE},

url={https://arxiv.org/abs/2504.02605},

}

``` |

cyberalchemist/PixelWeb | cyberalchemist | 2025-04-27T03:16:16Z | 263 | 2 | [

"task_categories:object-detection",

"language:en",

"license:apache-2.0",

"size_categories:10K<n<100K",

"arxiv:2504.16419",

"region:us"

] | [

"object-detection"

] | 2025-04-22T15:07:03Z | 2 | ---

license: apache-2.0

task_categories:

- object-detection

language:

- en

size_categories:

- 10K<n<100K

---

# PixelWeb: The First Web GUI Dataset with Pixel-Wise Labels

[https://arxiv.org/abs/2504.16419](https://arxiv.org/abs/2504.16419)

# Dataset Description

**PixelWeb-1K**: 1,000 GUI screenshots with mask, contour and bbox annotations

**PixelWeb-10K**: 10,000 GUI screenshots with mask, contour and bbox annotations

**PixelWeb-100K**: Coming soon

You need to extract the tar.gz archive by:

`tar -xzvf pixelweb_1k.tar.gz`

# Document Description

{id}-screenshot.png # The screenshot of a webpage

{id}-bbox.json # The bounding box labels of a webpage, [[left,top,width,height],...]

{id}-contour.json # The contour labels of a webpage, [[[x1,y1,x2,y2,...],...],...]

{id}-mask.json # The mask labels of a webpage, [[element_id,...],...]

{id}-class.json # The class labels of a webpage, [axtree_label,...]

The element_id corresponds to the index of the class. |

agentlans/reddit-ethics | agentlans | 2025-04-26T22:18:39Z | 246 | 3 | [

"task_categories:text-classification",

"task_categories:question-answering",

"task_categories:feature-extraction",

"language:en",

"license:cc-by-4.0",

"size_categories:1K<n<10K",

"format:json",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"social-media",

"reddit",

"ethics",

"morality",

"philosophy",

"alignment",

"reasoning-datasets-competition"

] | [

"text-classification",

"question-answering",

"feature-extraction"

] | 2025-04-23T14:46:25Z | 3 | ---

license: cc-by-4.0

task_categories:

- text-classification

- question-answering

- feature-extraction

language:

- en

tags:

- social-media

- reddit

- ethics

- morality

- philosophy

- alignment

- reasoning-datasets-competition

---

# Reddit Ethics: Real-World Ethical Dilemmas from Reddit

Reddit Ethics is a curated dataset of genuine ethical dilemmas collected from Reddit, designed to support research and education in philosophical ethics, AI alignment, and moral reasoning.

Each entry features a real-world scenario accompanied by structured ethical analysis through major frameworks—utilitarianism, deontology, and virtue ethics. The dataset also provides discussion questions, sample answers, and proposed resolutions, making it valuable for examining human values and ethical reasoning in practical contexts.

The construction of Reddit Ethics involved random sampling from the first

10 000 entries of the [OsamaBsher/AITA-Reddit-Dataset](https://huggingface.co/datasets/OsamaBsher/AITA-Reddit-Dataset) longer than 1 000 characters.

Five seed cases were manually annotated using ChatGPT.

Additional cases were generated via few-shot prompting with [agentlans/Llama3.1-LexiHermes-SuperStorm](https://huggingface.co/agentlans/Llama3.1-LexiHermes-SuperStorm) to ensure diversity and scalability while maintaining consistency in ethical analysis.

The dataset covers a wide range of everyday ethical challenges encountered in online communities, including personal relationships, professional conduct, societal norms, technology, and digital ethics.

## Data Structure

Each dataset entry contains:

- `text`: The original Reddit post describing the ethical dilemma.

- `title`: A concise summary of the ethical issue.

- `description`: A brief overview of the scenario.

- `issues`: Key ethical themes or conflicts.

- Ethical analyses from three major philosophical perspectives:

- `utilitarianism`: Evaluates actions by their consequences, aiming to maximize overall well-being.

- `deontology`: Assesses the moral rightness of actions based on rules, duties, or obligations, regardless of outcomes.

- `virtue_ethics`: Focuses on the character traits and intentions of the agents involved, emphasizing virtues such as honesty, integrity, and fairness.

- Note that the three ethical frameworks reflect major traditions in normative ethics and are widely used for structuring ethical reasoning in academic and applied settings.

- `questions`: Discussion prompts for further analysis.

- `answers`: Sample responses to the discussion questions.

- `resolution`: A suggested synthesis or resolution based on the ethical analysis.

### Example Entry

```json

{

"text": "my so and i are both 20, and i live in a house with 3 other people who are 19-21. ... would we be in the wrong if we pursued this?",

"title": "Household Property and Moral Obligation: The Ethics of Repair and Replacement",

"description": "A couple and their housemates disagree over the cost of a new TV after the old one was broken. One housemate wants the new TV to stay, while another suggests paying for the replacement.",

"issues": [

"Shared Responsibility vs. Personal Investment",

"Equity vs. Fairness",

"Moral Obligations vs. Practicality"

],

"utilitarianism": "Considering the overall household benefit and the cost-benefit analysis, it may be fair to let the TV remain.",

"deontology": "The couple should hold to their agreement to sell the TV to the housemates, respecting their word and the value of fairness.",

"virtue_ethics": "Honesty and integrity guide the choice—acknowledging the financial burden and seeking a solution that respects all members.",

"questions": [

"Should the couple be bound by their agreement to sell the TV at a lower price?",

"How should the household balance fairness and practicality in resolving the TV issue?",

"What is the moral weight of past sacrifices and the current financial situation?"

],

"answers": [

"Yes, the couple should honor their agreement to sell the TV at a lower price, upholding their commitment to fairness and honesty.",

"The household should discuss and agree on a fair solution, considering the value of the TV and each member’s financial situation.",

"Previous sacrifices and current financial hardship can influence the moral weight of the decision, but fairness and respect should guide the solution."

],

"resolution": "The couple should adhere to their agreement to sell the TV at a lower price, showing respect for their word and the household's fairness. This approach fosters trust and sets a positive precedent for future conflicts."

}

```

## Limitations

1. Limited to a single subreddit as a proof of concept.

2. Potential selection bias due to subreddit demographics and culture.

3. The dataset predominantly represents Western, individualistic perspectives.

4. Not tailored to specialized branches such as professional, bioethical, or environmental ethics.

5. Some cases may reflect social or communication issues rather than clear-cut ethical dilemmas.

6. Analyses are concise due to space constraints and may not provide in-depth philosophical exploration.

7. Annotation bias may arise from the use of large language models.

## Licence

Creative Commons Attribution 4.0 International (CC-BY-4.0)

|

Eureka-Lab/PHYBench | Eureka-Lab | 2025-04-26T13:56:46Z | 460 | 39 | [

"task_categories:question-answering",

"language:en",

"license:mit",

"size_categories:1K<n<10K",

"format:json",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"arxiv:2504.16074",

"region:us"

] | [

"question-answering",

"mathematical-reasoning"

] | 2025-04-22T15:56:27Z | 39 | ---

license: mit

task_categories:

- question-answering

- mathematical-reasoning

language:

- en

size_categories:

- 500<n<1K

---

<div align="center">

<p align="center" style="font-size:28px"><b>PHYBench: Holistic Evaluation of Physical Perception and Reasoning in Large Language Models</b></p>

<p align="center">

<a href="https://phybench.ai">[🌐 Project]</a>

<a href="https://arxiv.org/abs/2504.16074">[📄 Paper]</a>

<a href="https://github.com/phybench-official">[💻 Code]</a>

<a href="#-overview">[🌟 Overview]</a>

<a href="#-data-details">[🔧 Data Details]</a>

<a href="#-citation">[🚩 Citation]</a>

</p>

[](https://opensource.org/license/mit)

---

</div>

## New Updates

- **2025.4.25**: We release our code of EED Score. View and star on our github page!

- **Recently**: The leaderboard is still under progress, we'll release it as soon as possible.

## 🚀 Acknowledgement and Progress

We're excited to announce the initial release of our PHYBench dataset!

- **100 fully-detailed examples** including handwritten solutions, questions, tags, and reference answers.

- **400 additional examples** containing questions and tags.

### 📂 Dataset Access

You can access the datasets directly via Hugging Face:

- [**PHYBench-fullques.json**](https://huggingface.co/datasets/Eureka-Lab/PHYBench/blob/main/PHYBench-fullques_v1.json): 100 examples with complete solutions.

- [**PHYBench-onlyques.json**](https://huggingface.co/datasets/Eureka-Lab/PHYBench/blob/main/PHYBench-onlyques_v1.json): 400 examples (questions and tags only).

- [**PHYBench-questions.json**](https://huggingface.co/datasets/Eureka-Lab/PHYBench/blob/main/PHYBench-questions_v1.json): Comprehensive set of all 500 questions.

### 📊 Full-Dataset Evaluation & Leaderboard

We are actively finalizing the full-dataset evaluation pipeline and the real-time leaderboard. Stay tuned for their upcoming release!

Thank you for your patience and ongoing support! 🙏

For further details or collaboration inquiries, please contact us at [**[email protected]**](mailto:[email protected]).

## 🌟 Overview

PHYBench is the first large-scale benchmark specifically designed to evaluate **physical perception** and **robust reasoning** capabilities in Large Language Models (LLMs). With **500 meticulously curated physics problems** spanning mechanics, electromagnetism, thermodynamics, optics, modern physics, and advanced physics, it challenges models to demonstrate:

- **Real-world grounding**: Problems based on tangible physical scenarios (e.g., ball inside a bowl, pendulum dynamics)

- **Multi-step reasoning**: Average solution length of 3,000 characters requiring 10+ intermediate steps

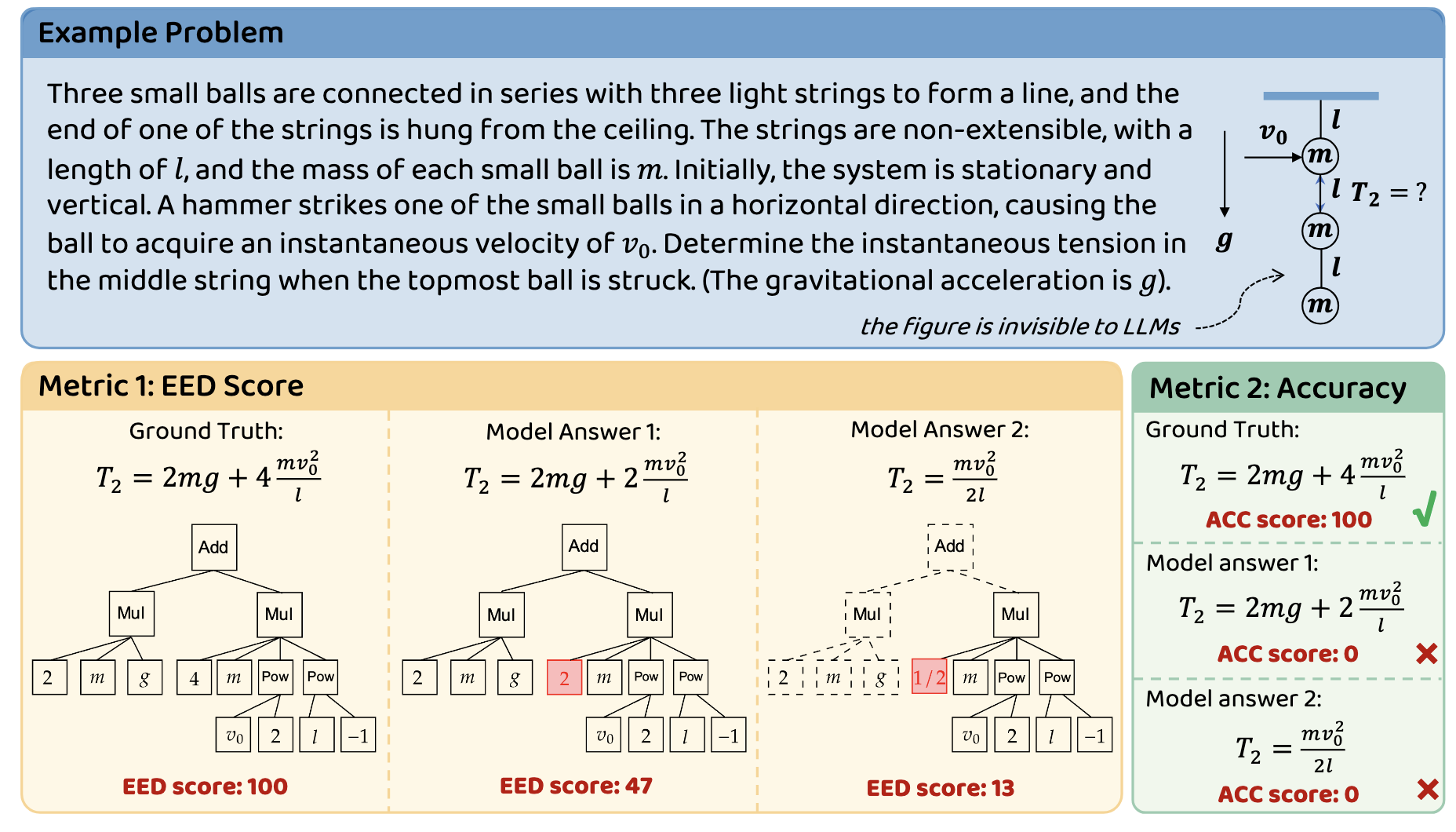

- **Symbolic precision**: Strict evaluation of LaTeX-formulated expressions through novel **Expression Edit Distance (EED) Score**

Key innovations:

- 🎯 **EED Metric**: Smoother measurement based on the edit-distance on expression tree

- 🏋️ **Difficulty Spectrum**: High school, undergraduate, Olympiad-level physics problems

- 🔍 **Error Taxonomy**: Explicit evaluation of Physical Perception (PP) vs Robust Reasoning (RR) failures

## 🔧 Example Problems

**Put some problem cards here**

**Answer Types**:

🔹 Strict symbolic expressions (e.g., `\sqrt{\frac{2g}{3R}}`)

🔹 Multiple equivalent forms accepted

🔹 No numerical approximations or equation chains

## 🛠️ Data Curation

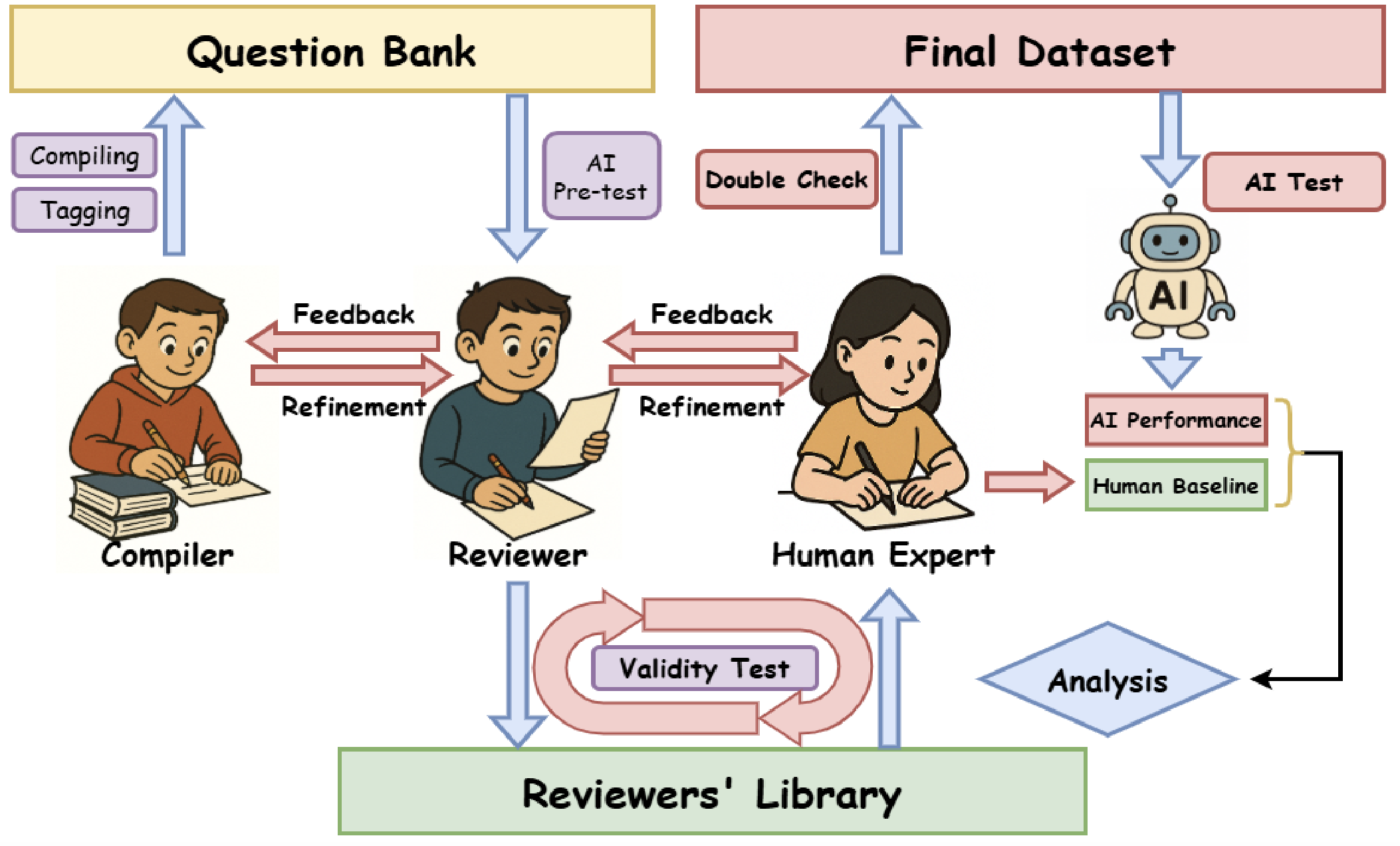

### 3-Stage Rigorous Validation Pipeline

1. **Expert Creation & Strict Screening**

- 178 PKU physics students contributed problems that are:

- Almost entirely original/custom-created

- None easily found through direct internet searches or standard reference materials

- Strict requirements:

- Single unambiguous symbolic answer (e.g., `T=2mg+4mv₀²/l`)

- Text-only solvability (no diagrams/multimodal inputs)

- Rigorously precise statements to avoid ambiguity

- Solvable using only basic physics principles (no complex specialized knowledge required)

- No requirements on AI test to avoid filtering for AI weaknesses

2. **Multi-Round Academic Review**

- 3-tier verification process:

- Initial filtering: Reviewers assessed format validity and appropriateness (not filtering for AI weaknesses)

- Ambiguity detection and revision: Reviewers analyzed LLM-generated solutions to identify potential ambiguities in problem statements

- Iterative improvement cycle: Questions refined repeatedly until all LLMs can understand the question and follow the instructions to produce the expressions it believes to be right.

3. **Human Expert Finalization**

- **81 PKU students participated:**

- Each student independently solved 8 problems from the dataset

- Evaluate question clarity, statement rigor, and answer correctness

- Establish of human baseline performance meanwhile

## 📊 Evaluation Protocol

### Machine Evaluation

**Dual Metrics**:

1. **Accuracy**: Binary correctness (expression equivalence via SymPy simplification)

2. **EED Score**: Continuous assessment of expression tree similarity

The EED Score evaluates the similarity between the model-generated answer and the ground truth by leveraging the concept of expression tree edit distance. The process involves the following steps:

1. **Simplification of Expressions**:Both the ground truth (`gt`) and the model-generated answer (`gen`) are first converted into simplified symbolic expressions using the `sympy.simplify()` function. This step ensures that equivalent forms of the same expression are recognized as identical.

2. **Equivalence Check**:If the simplified expressions of `gt` and `gen` are identical, the EED Score is assigned a perfect score of 100, indicating complete correctness.

3. **Tree Conversion and Edit Distance Calculation**:If the expressions are not identical, they are converted into tree structures. The edit distance between these trees is then calculated using an extended version of the Zhang-Shasha algorithm. This distance represents the minimum number of node-level operations (insertions, deletions, and updates) required to transform one tree into the other.

4. **Relative Edit Distance and Scoring**:The relative edit distance \( r \) is computed as the ratio of the edit distance to the size of the ground truth tree. The EED Score is then determined based on this relative distance:

- If \( r = 0 \) (i.e., the expressions are identical), the score is 100.

- If \( 0 < r < 0.6 \), the score is calculated as \( 60 - 100r \).

- If \( r \geq 0.6 \), the score is 0, indicating a significant discrepancy between the model-generated answer and the ground truth.

This scoring mechanism provides a continuous measure of similarity, allowing for a nuanced evaluation of the model's reasoning capabilities beyond binary correctness.

**Key Advantages**:

- 204% higher sample efficiency vs binary metrics

- Distinguishes coefficient errors (30<EED score<60) vs structural errors (EED score<30)

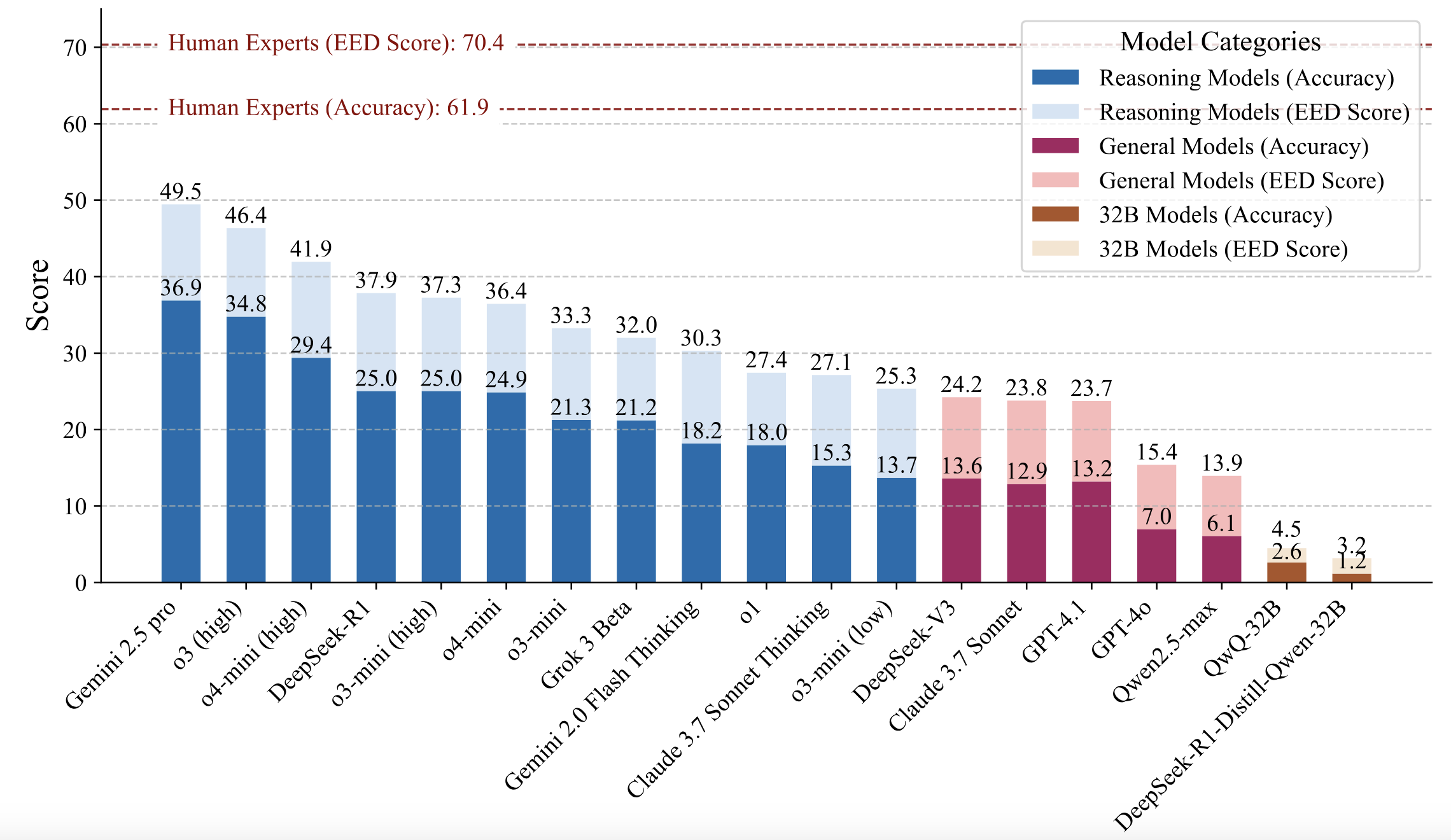

### Human Baseline

- **Participants**: 81 PKU physics students

- **Protocol**:

- **8 problems per student**: Each student solved a set of 8 problems from PHYBench dataset

- **Time-constrained solving**: 3 hours

- **Performance metrics**:

- **61.9±2.1% average accuracy**

- **70.4±1.8 average EED Score**

- Top quartile reached 71.4% accuracy and 80.4 EED Score

- Significant outperformance vs LLMs: Human experts outperformed all evaluated LLMs at 99% confidence level

- Human experts significantly outperformed all evaluated LLMs (99.99% confidence level)

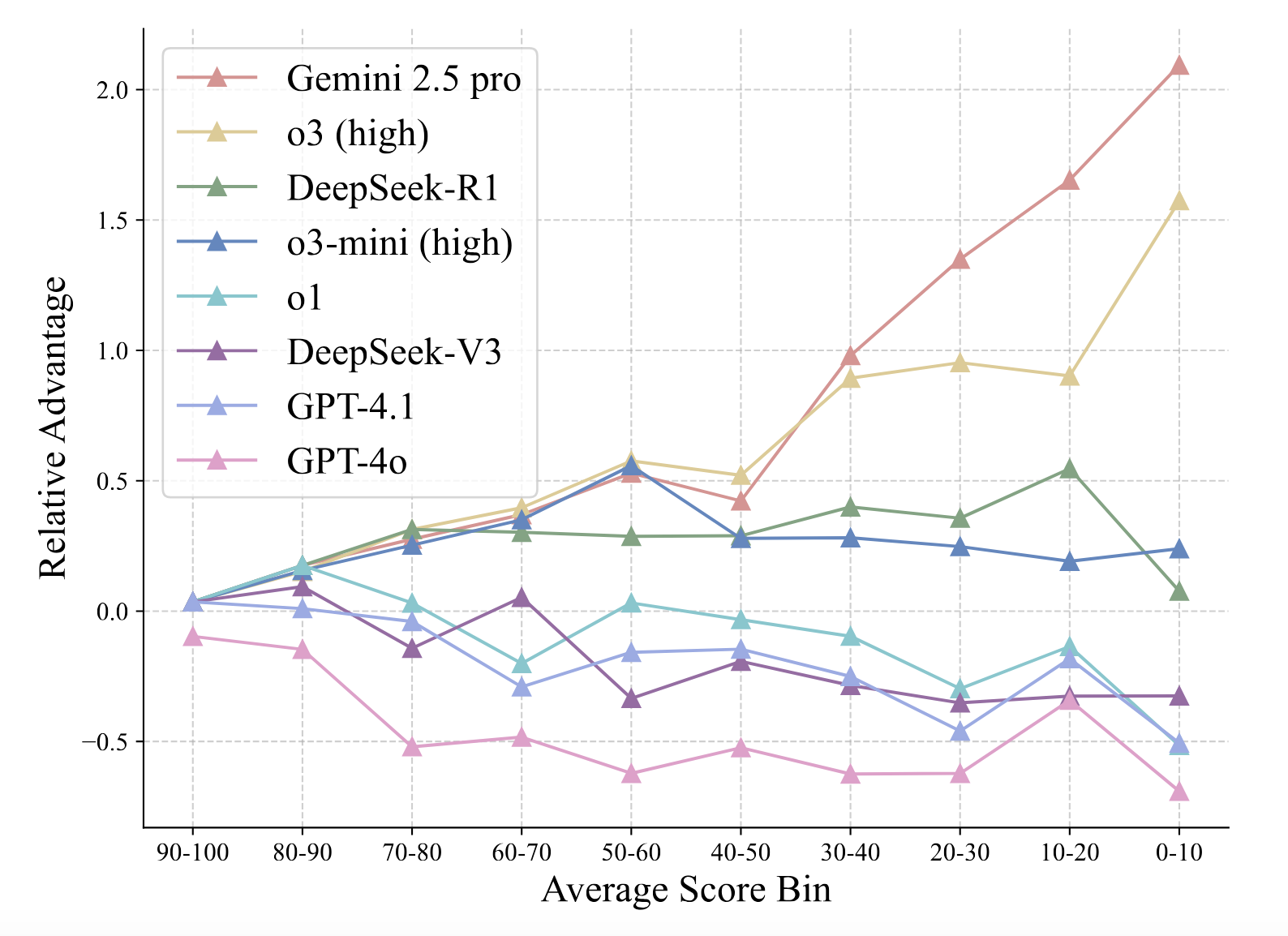

## 📝 Main Results

The results of the evaluation are shown in the following figure:

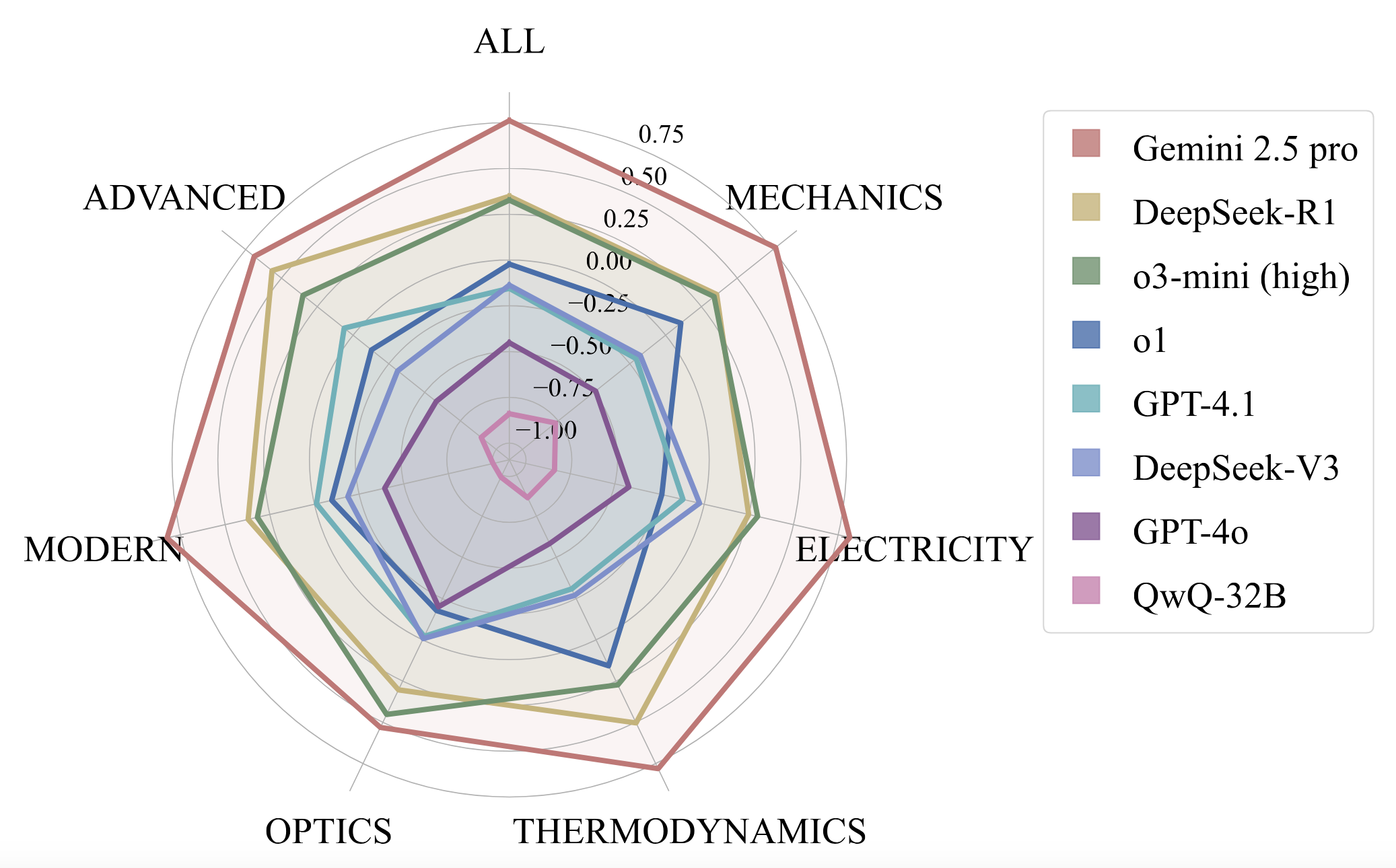

1. **Significant Performance Gap**: Even state-of-the-art LLMs significantly lag behind human experts in physical reasoning. The highest-performing model, Gemini 2.5 Pro, achieved only a 36.9% accuracy, compared to the human baseline of 61.9%.

2. **EED Score Advantages**: The EED Score provides a more nuanced evaluation of model performance compared to traditional binary scoring methods.

3. **Domain-Specific Strengths**: Different models exhibit varying strengths in different domains of physics:

* Gemini 2.5 Pro shows strong performance across most domains

* DeepSeek-R1 and o3-mini (high) shows comparable performance in mechanics and electricity

* Most models struggle with advanced physics and modern physics

4. **Difficulty Handling**: Comparing the advantage across problem difficulties, Gemini 2.5 Pro gains a pronounced edge on harder problems, followed by o3 (high).

## 😵💫 Error Analysis

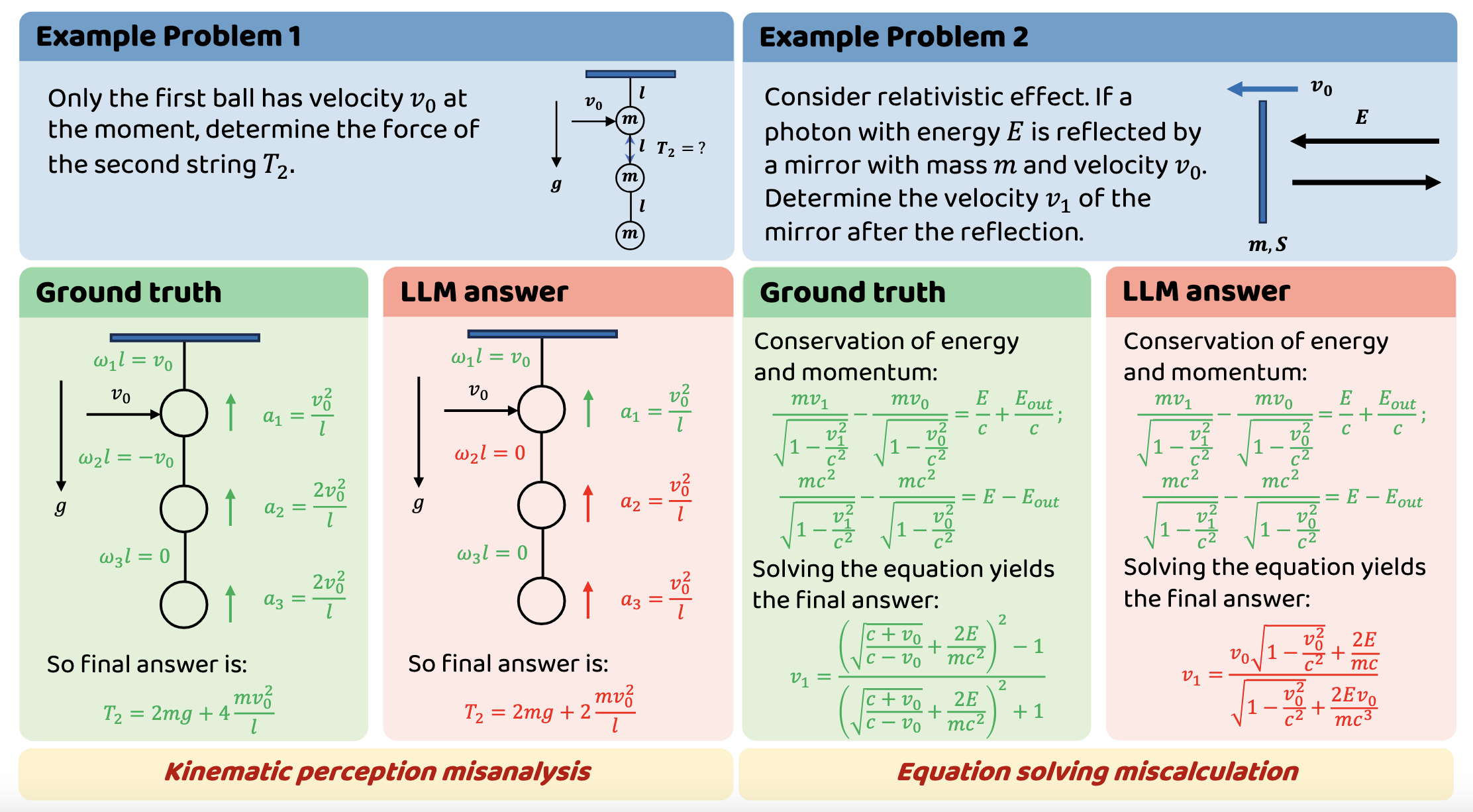

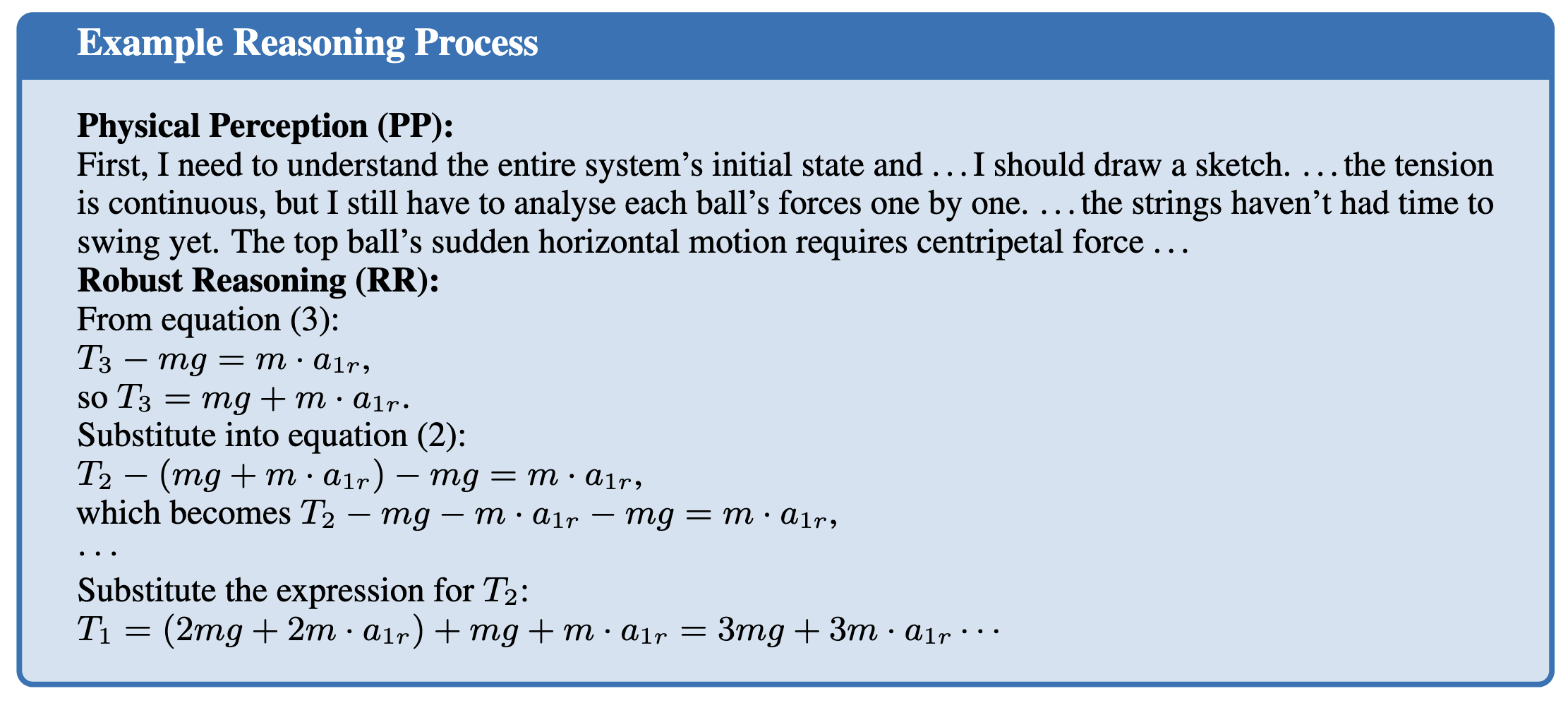

We categorize the capabilities assessed by the PHYBench benchmark into two key dimensions: Physical Perception (PP) and Robust Reasoning (RR):

1. **Physical Perception (PP) Errors**: During this phase, models engage in intensive semantic reasoning, expending significant cognitive effort to identify relevant physical objects, variables, and dynamics. Models make qualitative judgments about which physical effects are significant and which can be safely ignored. PP manifests as critical decision nodes in the reasoning chain. An example of a PP error is shown in Example Problem 1.

2. **Robust Reasoning (RR) Errors**: In this phase, models produce numerous lines of equations and perform symbolic reasoning. This process forms the connecting chains between perception nodes. RR involves consistent mathematical derivation, equation solving, and proper application of established conditions. An example of a RR error is shown in Example Problem 2.

## 🚩 Citation

```bibtex

@misc{qiu2025phybenchholisticevaluationphysical,

title={PHYBench: Holistic Evaluation of Physical Perception and Reasoning in Large Language Models},

author={Shi Qiu and Shaoyang Guo and Zhuo-Yang Song and Yunbo Sun and Zeyu Cai and Jiashen Wei and Tianyu Luo and Yixuan Yin and Haoxu Zhang and Yi Hu and Chenyang Wang and Chencheng Tang and Haoling Chang and Qi Liu and Ziheng Zhou and Tianyu Zhang and Jingtian Zhang and Zhangyi Liu and Minghao Li and Yuku Zhang and Boxuan Jing and Xianqi Yin and Yutong Ren and Zizhuo Fu and Weike Wang and Xudong Tian and Anqi Lv and Laifu Man and Jianxiang Li and Feiyu Tao and Qihua Sun and Zhou Liang and Yushu Mu and Zhongxuan Li and Jing-Jun Zhang and Shutao Zhang and Xiaotian Li and Xingqi Xia and Jiawei Lin and Zheyu Shen and Jiahang Chen and Qiuhao Xiong and Binran Wang and Fengyuan Wang and Ziyang Ni and Bohan Zhang and Fan Cui and Changkun Shao and Qing-Hong Cao and Ming-xing Luo and Muhan Zhang and Hua Xing Zhu},

year={2025},

eprint={2504.16074},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2504.16074},

}

```

|

Major-TOM/Core-S2L2A-MMEarth | Major-TOM | 2025-04-26T10:04:36Z | 577 | 3 | [

"license:cc-by-sa-4.0",

"size_categories:10M<n<100M",

"modality:geospatial",

"arxiv:2412.05600",

"doi:10.57967/hf/5240",

"region:us",

"embeddings",

"earth-observation",

"remote-sensing",

"sentinel-2",

"satellite",

"geospatial",

"satellite-imagery"

] | [] | 2025-02-03T14:13:55Z | 2 | ---

license: cc-by-sa-4.0

tags:

- embeddings

- earth-observation

- remote-sensing

- sentinel-2

- satellite

- geospatial

- satellite-imagery

size_categories:

- 10M<n<100M

configs:

- config_name: default

data_files: embeddings/*.parquet

---

# Core-S2L2A-MMEarth (Pooled) 🟥🟩🟦🟧🟨🟪 🛰️

> This is a pooled down (about 10x) version of the computed dataset due to storage constraints on HuggingFace. For a full size access, please visit [**Creodias EODATA**](https://creodias.eu/eodata/all-sources/).

## Input data

* Sentinel-2 (Level 2A) multispectral dataset global coverage

* All samples from [**MajorTOM Core-S2L2A**](https://huggingface.co/datasets/Major-TOM/Core-S2L2A)

* Embedding_shape = **(320, 133, 133)**

* Pooled shape = **(320, 13, 13)**

## Metadata content

| Field | Type | Description |

|:-----------------:|:--------:|-----------------------------------------------------------------------------|

| unique_id | string | hash generated from geometry, time, product_id, and average embedding (320,1,1) |

| grid_cell | string | Major TOM cell |

| grid_row_u | int | Major TOM cell row |

| grid_col_r | int | Major TOM cell col |

| product_id | string | ID of the original product |

| timestamp | string | Timestamp of the sample |

| centre_lat | float | Centre of the of the grid_cell latitude |

| centre_lon | float | Centre of the of the grid_cell longitude |

| geometry | geometry | Polygon footprint (WGS84) of the grid_cell |

| utm_footprint | string | Polygon footprint (image UTM) of the grid_cell |

| utm_crs | string | CRS of the original product |

| file_name | string | Name of reference MajorTOM product |

| file_index | int | Position of the embedding within the .dat file |

## Model

The image encoder of the [**MMEarth model**](https://github.com/vishalned/MMEarth-train) was used to extract embeddings

Model [**weights**](https://sid.erda.dk/cgi-sid/ls.py?share_id=g23YOnaaTp¤t_dir=pt-all_mod_atto_1M_64_uncertainty_56-8&flags=f)

Weights info:

**pt-all_mod_atto_1M_64_uncertainty_56-8**

- **INFO**: pt-($INPUT)_($MODEL)_($DATA)_($LOSS)_($MODEL_IMG_SIZE)_($PATCH_SIZE)

- **INPUT:** all_mod # for s2-12 bands as input and all modalities as output

- **MODEL:** atto

- **DATA:** 1M_64 # MMEarth64, 1.2M locations and image size 64

- **LOSS:** uncertainty

- **MODEL_IMG_SIZE:** 56 # when using the data with image size 64

- **PATCH_SIZE:** 8

## Example Use

Interface scripts are available at

```python

import numpy as np

input_file_path = 'processed_part_00045_pooled.dat' # Path to the saved .dat file

pooled_shape=(320, 13, 13)

embedding_size = np.prod(pooled_shape)

dtype_size = np.dtype(np.float32).itemsize

# Calculate the byte offset for the embedding you want to read

embedding_index = 4

offset = embedding_index * embedding_size * dtype_size

# Load the specific embedding

with open(file_path, 'rb') as f:

f.seek(offset)

embedding_data = np.frombuffer(f.read(embedding_size * dtype_size), dtype=np.float32)

embedding = embedding_data.reshape(pooled_shape) # Reshape to the pooled embedding shape

embedding

```

## Generate Your Own Major TOM Embeddings

The [**embedder**](https://github.com/ESA-PhiLab/Major-TOM/tree/main/src/embedder) subpackage of Major TOM provides tools for generating embeddings like these ones. You can see an example of this in a dedicated notebook at https://github.com/ESA-PhiLab/Major-TOM/blob/main/05-Generate-Major-TOM-Embeddings.ipynb.

[](https://github.com/ESA-PhiLab/Major-TOM/blob/main/05-Generate-Major-TOM-Embeddings.ipynb)

---

## Major TOM Global Embeddings Project 🏭

This dataset is a result of a collaboration between [**CloudFerro**](https://cloudferro.com/) 🔶, [**asterisk labs**](https://asterisk.coop/) and [**Φ-lab, European Space Agency (ESA)**](https://philab.esa.int/) 🛰️ set up in order to provide open and free vectorised expansions of Major TOM datasets and define a standardised manner for releasing Major TOM embedding expansions.

The embeddings extracted from common AI models make it possible to browse and navigate large datasets like Major TOM with reduced storage and computational demand.

The datasets were computed on the [**GPU-accelerated instances**](https://cloudferro.com/ai/ai-computing-services/)⚡ provided by [**CloudFerro**](https://cloudferro.com/) 🔶 on the [**CREODIAS**](https://creodias.eu/) cloud service platform 💻☁️.

Discover more at [**CloudFerro AI services**](https://cloudferro.com/ai/).

## Authors

[**Mikolaj Czerkawski**](https://mikonvergence.github.io) (Asterisk Labs), [**Marcin Kluczek**](https://www.linkedin.com/in/marcin-kluczek-03852a1a8/) (CloudFerro), [**Jędrzej S. Bojanowski**](https://www.linkedin.com/in/j%C4%99drzej-s-bojanowski-a5059872/) (CloudFerro)

## Open Access Manuscript

This dataset is an output from the embedding expansion project outlined in: [https://arxiv.org/abs/2412.05600/](https://arxiv.org/abs/2412.05600/).

[](https://doi.org/10.48550/arXiv.2412.05600)

<details>

<summary>Read Abstract</summary>

> With the ever-increasing volumes of the Earth observation data present in the archives of large programmes such as Copernicus, there is a growing need for efficient vector representations of the underlying raw data. The approach of extracting feature representations from pretrained deep neural networks is a powerful approach that can provide semantic abstractions of the input data. However, the way this is done for imagery archives containing geospatial data has not yet been defined. In this work, an extension is proposed to an existing community project, Major TOM, focused on the provision and standardization of open and free AI-ready datasets for Earth observation. Furthermore, four global and dense embedding datasets are released openly and for free along with the publication of this manuscript, resulting in the most comprehensive global open dataset of geospatial visual embeddings in terms of covered Earth's surface.

> </details>

If this dataset was useful for you work, it can be cited as:

```latex

@misc{EmbeddedMajorTOM,

title={Global and Dense Embeddings of Earth: Major TOM Floating in the Latent Space},

author={Mikolaj Czerkawski and Marcin Kluczek and Jędrzej S. Bojanowski},

year={2024},

eprint={2412.05600},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2412.05600},

}

```

Powered by [Φ-lab, European Space Agency (ESA) 🛰️](https://philab.esa.int/) in collaboration with [CloudFerro 🔶](https://cloudferro.com/) & [asterisk labs](https://asterisk.coop/)

|

launch/thinkprm-1K-verification-cots | launch | 2025-04-26T02:05:42Z | 125 | 3 | [

"task_categories:question-answering",

"task_categories:text-generation",

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"arxiv:2504.16828",

"region:us",

"math reasoning",

"process supervision",

"reward modeling",

"chain-of-thought",

"synthetic data"

] | [

"question-answering",

"text-generation"

] | 2025-04-24T18:37:40Z | 3 | ---

size_categories:

- n<1K

task_categories:

- question-answering

- text-generation

pretty_name: ThinkPRM-Synthetic-Verification-1K

dataset_info:

features:

- name: problem

dtype: string

- name: prefix

dtype: string

- name: cot

dtype: string

- name: prefix_steps

sequence: string

- name: gt_step_labels

sequence: string

- name: prefix_label

dtype: bool

splits:

- name: train

num_bytes: 8836501

num_examples: 1000

download_size: 3653730

dataset_size: 8836501

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

tags:

- math reasoning

- process supervision

- reward modeling

- chain-of-thought

- synthetic data

---

This dataset contains 1,000 high-quality synthetic verification chains-of-thought (CoTs) designed for training generative Process Reward Models (PRMs), as used in the paper ["Process Reward Models that Think"](https://arxiv.org/abs/2504.16828). The goal was to create a data-efficient alternative to traditional PRM training which often requires extensive human annotation or expensive rollouts.

Each instance consists of a math problem, a corresponding multi-step solution prefix (sourced from PRM800K [Lightman et al., 2023]), and a detailed verification CoT generated by the [QwQ-32B-Preview](https://huggingface.co/Qwen/QwQ-32B-Preview). The verification CoT critiques each step of the solution prefix and provides a step-level correctness judgment (`\boxed{correct}` or `\boxed{incorrect}`).

To ensure high-quality synthetic CoTs, only chains where all step-level judgments matched the ground-truth human annotations from the PRM800K dataset were retained. They were also filtered based on correct formatting and length constraints to avoid issues like excessive overthinking observed in unfiltered generation. The figure below summarizes the synthetic cots collection. Refer to our paper for more details on data collection.

### Curation Rationale

The dataset was created to enable efficient training of powerful generative PRMs. The core idea is that fine-tuning strong reasoning models on carefully curated, synthetic verification CoTs can yield verifiers that outperform models trained on much larger, traditionally labeled datasets. The process-based filtering (matching gold step labels) was shown to be crucial for generating high-quality training data compared to outcome-based filtering.

**Code:** [https://github.com/mukhal/thinkprm](https://github.com/mukhal/thinkprm)

**Paper:** [Process Reward Models that Think](https://arxiv.org/abs/2504.16828)

## Data Fields

The dataset contains the following fields:

* `problem`: (string) The mathematical problem statement (e.g., from MATH dataset via PRM800K).

* `prefix`: (string) The full step-by-step solution prefix being evaluated.

* `cot`: (string) The full synthetic verification chain-of-thought generated by QwQ-32B-Preview, including step-by-step critiques and judgments. See Fig. 13 in the paper for an example.

* `prefix_steps`: (list of strings) The solution prefix broken down into individual steps.

* `gt_step_labels`: (list of bools/ints) The ground-truth correctness labels (e.g., '+' for correct, '-' for incorrect) for each corresponding step in `prefix_steps`, sourced from PRM800K annotations.

* `prefix_label`: (bool) The overall ground-truth correctness label for the entire solution prefix: True if all steps are correct, False otherwise.

### Source Data

* **Problems & Solution Prefixes:** Sourced from the PRM800K dataset, which is based on the MATH dataset.

* **Verification CoTs:** Generated synthetically using the QwQ-32B-Preview model prompted with instructions detailed in Fig. 14 of the paper.

* **Filtering Labels:** Ground-truth step-level correctness labels from PRM800K were used to filter the synthetic CoTs.

## Citation Information

If you use this dataset, please cite the original paper:

```

@article{khalifa2025,

title={Process Reward Models That Think},

author={Muhammad Khalifa and Rishabh Agarwal and Lajanugen Logeswaran and Jaekyeom Kim and Hao Peng and Moontae Lee and Honglak Lee and Lu Wang},

year={2025},

journal={arXiv preprint arXiv:2504.16828},

url={https://arxiv.org/abs/2504.16828},

}

``` |

lightonai/ms-marco-en-bge-gemma | lightonai | 2025-04-25T13:25:43Z | 107 | 2 | [

"task_categories:feature-extraction",

"task_categories:sentence-similarity",

"multilinguality:monolingual",

"language:en",

"size_categories:10M<n<100M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"sentence-transformers",

"colbert",

"lightonai",

"PyLate"

] | [

"feature-extraction",

"sentence-similarity"

] | 2025-04-25T12:28:31Z | 2 | ---

language:

- en

multilinguality:

- monolingual

size_categories:

- 1M<n<10M

task_categories:

- feature-extraction

- sentence-similarity

pretty_name: ms-marco-en-bge-gemma

tags:

- sentence-transformers

- colbert

- lightonai

- PyLate

dataset_info:

- config_name: documents

features:

- name: document_id

dtype: int64

- name: text

dtype: string

splits:

- name: train

num_bytes: 3089188164

num_examples: 8841823

download_size: 1679883891

dataset_size: 3089188164

- config_name: queries

features:

- name: query_id

dtype: int64

- name: text

dtype: string

splits:

- name: train

num_bytes: 38373408

num_examples: 808731

download_size: 28247183

dataset_size: 38373408

- config_name: train

features:

- name: query_id

dtype: int64

- name: document_ids

dtype: string

- name: scores

dtype: string

splits:

- name: train

num_bytes: 599430336

num_examples: 640000

download_size: 434872561

dataset_size: 599430336

configs:

- config_name: documents

data_files:

- split: train

path: documents/train-*

- config_name: queries

data_files:

- split: train

path: queries/train-*

- config_name: train

data_files:

- split: train

path: train/train-*

---

# ms-marco-en-bge

This dataset contains the [MS MARCO](https://microsoft.github.io/msmarco/) dataset with negatives mined using ColBERT and then scored by [bge-reranker-v2-gemma](https://huggingface.co/BAAI/bge-reranker-v2-gemma).

It can be used to train a retrieval model using knowledge distillation, for example [using PyLate](https://lightonai.github.io/pylate/#knowledge-distillation).

#### `knowledge distillation`

To fine-tune a model using knowledge distillation loss we will need three distinct file:

* Datasets

```python

from datasets import load_dataset

train = load_dataset(

"lightonai/ms-marco-en-gemma",

"train",

split="train",

)

queries = load_dataset(

"lightonai/ms-marco-en-gemma",

"queries",

split="train",

)

documents = load_dataset(

"lightonai/ms-marco-en-gemma",

"documents",

split="train",

)

```

Where:

- `train` contains three distinct columns: `['query_id', 'document_ids', 'scores']`

```python

{

"query_id": 54528,

"document_ids": [

6862419,

335116,

339186,

7509316,

7361291,

7416534,

5789936,

5645247,

],

"scores": [

0.4546215673141326,

0.6575686537173476,

0.26825184192900203,

0.5256195579370395,

0.879939718687207,

0.7894968184862693,

0.6450100468854655,

0.5823844608171467,

],

}

```

Assert that the length of document_ids is the same as scores.

- `queries` contains two distinct columns: `['query_id', 'text']`

```python

{"query_id": 749480, "text": "what is function of magnesium in human body"}

```

- `documents` contains two distinct columns: `['document_ids', 'text']`

```python

{

"document_id": 136062,

"text": "2. Also called tan .a fundamental trigonometric function that, in a right triangle, is expressed as the ratio of the side opposite an acute angle to the side adjacent to that angle. 3. in immediate physical contact; touching; abutting. 4. a. touching at a single point, as a tangent in relation to a curve or surface.lso called tan .a fundamental trigonometric function that, in a right triangle, is expressed as the ratio of the side opposite an acute angle to the side adjacent to that angle. 3. in immediate physical contact; touching; abutting. 4. a. touching at a single point, as a tangent in relation to a curve or surface.",

}

```

|

ddupont/test-dataset | ddupont | 2025-04-24T23:37:48Z | 149 | 2 | [

"task_categories:visual-question-answering",

"language:en",

"license:mit",

"size_categories:n<1K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"cua",

"highquality",

"tasks"

] | [

"visual-question-answering"

] | 2025-04-18T17:06:08Z | 2 | ---

language: en

license: mit

task_categories:

- visual-question-answering

tags:

- cua

- highquality

- tasks

---

# Uploaded computer interface trajectories

These trajectories were generated and uploaded using [c/ua](https://github.com/trycua/cua) |

WensongSong/AnyInsertion | WensongSong | 2025-04-24T17:43:25Z | 584 | 5 | [

"task_categories:image-to-image",

"language:en",

"license:mit",

"size_categories:10K<n<100K",

"format:arrow",

"modality:image",

"modality:text",

"library:datasets",

"library:mlcroissant",

"arxiv:2504.15009",

"region:us"

] | [

"image-to-image"

] | 2025-04-23T13:15:55Z | 5 | ---

license: mit

task_categories:

- image-to-image

language:

- en

pretty_name: a

size_categories:

- 10M<n<100M

---

# AnyInsertion

<p align="center">

<a href="https://song-wensong.github.io/"><strong>Wensong Song</strong></a>

·

<a href="https://openreview.net/profile?id=~Hong_Jiang4"><strong>Hong Jiang</strong></a>

·

<a href="https://z-x-yang.github.io/"><strong>Zongxing Yang</strong></a>

·

<a href="https://scholar.google.com/citations?user=WKLRPsAAAAAJ&hl=en"><strong>Ruijie Quan</strong></a>

·

<a href="https://scholar.google.com/citations?user=RMSuNFwAAAAJ&hl=en"><strong>Yi Yang</strong></a>

<br>

<br>

<a href="https://arxiv.org/pdf/2504.15009" style="display: inline-block; margin-right: 10px;">

<img src='https://img.shields.io/badge/arXiv-InsertAnything-red?color=%23aa1a1a' alt='Paper PDF'>

</a>

<a href='https://song-wensong.github.io/insert-anything/' style="display: inline-block; margin-right: 10px;">

<img src='https://img.shields.io/badge/Project%20Page-InsertAnything-cyan?logoColor=%23FFD21E&color=%23cbe6f2' alt='Project Page'>

</a>

<a href='https://github.com/song-wensong/insert-anything' style="display: inline-block;">

<img src='https://img.shields.io/badge/GitHub-InsertAnything-black?logoColor=23FFD21E&color=%231d2125'>

</a>

<br>

<b>Zhejiang University | Harvard University | Nanyang Technological University </b>

</p>

## News

* **[2025.4.25]** Released AnyInsertion v1 mask-prompt dataset on Hugging Face.

## Summary

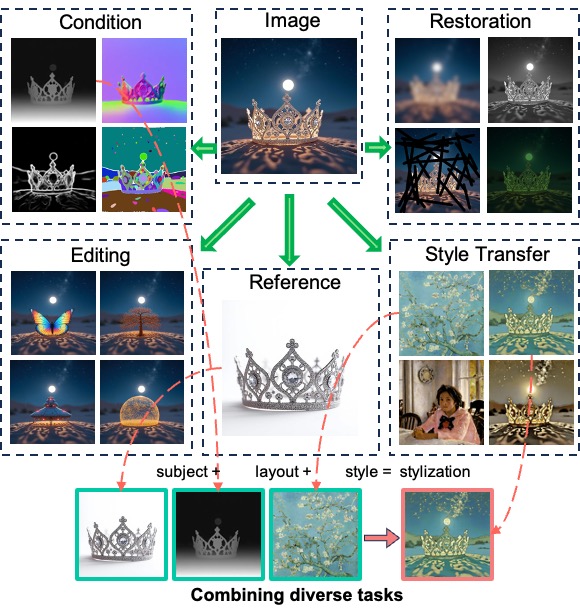

This is the dataset proposed in our paper [**Insert Anything: Image Insertion via In-Context Editing in DiT**](https://arxiv.org/abs/2504.15009)

AnyInsertion dataset consists of training and testing subsets. The training set includes 159,908 samples across two prompt types: 58,188 mask-prompt image pairs and 101,720 text-prompt image pairs;the test set includes 158 data pairs: 120 mask-prompt pairs and 38 text-prompt pairs.

AnyInsertion dataset covers diverse categories including human subjects, daily necessities, garments, furniture, and various objects.

## Directory

```

data/

├── train/

│ ├── accessory/

│ │ ├── ref_image/ # Reference image containing the element to be inserted